Announcing gRPC Support in NGINX

Today, we’re excited to share the first native support for gRPC traffic within NGINX. If you’re as keen as we are, you can pull the snapshot from our repository and share your feedback. If initial feedback is positive, we’ll include this capability in the next NGINX OSS release (1.13.10). The next NGINX Plus release, R15, will inherit this gRPC support as well as the HTTP/2 server push support introduced in NGINX 1.13.9.

NGINX can already proxy gRPC TCP connections. This new capability can terminate, inspect, and route gRPC method calls. You can use it to:

- Publish a gRPC service, and then use NGINX to apply HTTP/2 TLS encryption, rate limits, IP‑based access control lists, and logging. You can operate the service using unencrypted HTTP/2 (h2c cleartext) or wrap TLS encryption and authentication around the service.

- Publish multiple gRPC services through a single endpoint, using NGINX to inspect and route calls to each internal service. You can even use the same endpoint for other HTTPS and HTTP/2 services, such as websites and REST‑based APIs.

- Load balance a cluster of gRPC services, using Round Robin, Least Connections, or other methods to distribute calls across the cluster. You can then scale your gRPC‑based service when you need additional capacity.

What is gRPC?

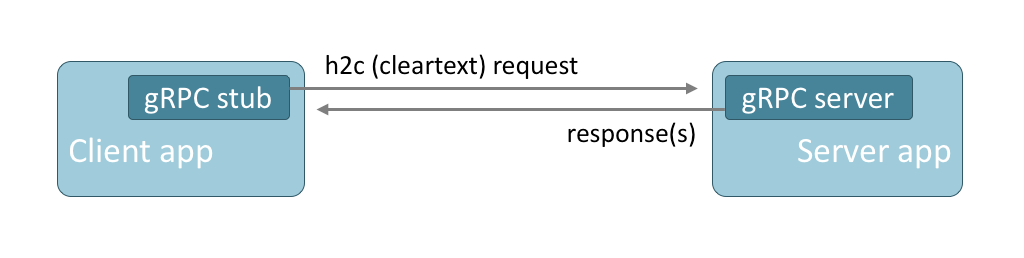

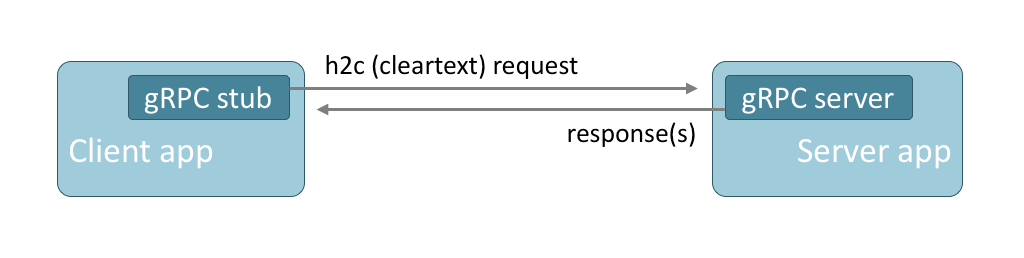

gRPC is a remote procedure call protocol, used for communication between client and server applications. It is designed to be compact (space‑efficient) and portable across multiple languages, and it supports both request‑response and streaming interactions. The protocol is gaining popularity, including in service mesh implementations, because of its widespread language support and simple user‑facing design.

gRPC is transported over HTTP/2, either in cleartext or TLS‑encrypted. A gRPC call is implemented as an HTTP POST request with an efficiently encoded body (protocol buffers are the standard encoding). gRPC responses use a similarly encoded body and use HTTP trailers to send the status code at the end of the response.

By design, the gRPC protocol cannot be transported over HTTP/1.x. The gRPC protocol mandates HTTP/2 in order to take advantage of the multiplexing and streaming features of an HTTP/2 connection.
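To make the wire format described above more concrete, here is a minimal Go sketch that frames a message and builds (but does not send) the corresponding POST request. The helper names frameMessage and newGRPCRequest are made up for this illustration, and the payload is hand-encoded on the assumption that the quickstart's HelloRequest carries name as string field 1. A real client would use generated protobuf code and an HTTP/2-capable gRPC library.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"fmt"
	"net/http"
)

// frameMessage wraps an already-serialized message in gRPC's length-prefixed
// framing: a 1-byte compression flag followed by a 4-byte big-endian length.
func frameMessage(payload []byte) []byte {
	frame := make([]byte, 5+len(payload))
	frame[0] = 0 // 0 means the payload is not compressed
	binary.BigEndian.PutUint32(frame[1:5], uint32(len(payload)))
	copy(frame[5:], payload)
	return frame
}

// newGRPCRequest (hypothetical helper) builds the HTTP POST for one call.
// It only constructs the request; nothing is sent over the network.
func newGRPCRequest(baseURL, path string, payload []byte) (*http.Request, error) {
	body := bytes.NewReader(frameMessage(payload))
	req, err := http.NewRequest(http.MethodPost, baseURL+path, body)
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/grpc")
	req.Header.Set("TE", "trailers") // the status arrives in HTTP trailers
	return req, nil
}

func main() {
	// Assumed encoding of HelloRequest{name: "world"}:
	// tag 0x0a (field 1, length-delimited), length 5, then the string bytes.
	payload := append([]byte{0x0a, 0x05}, "world"...)

	req, err := newGRPCRequest("http://localhost:80", "/helloworld.Greeter/SayHello", payload)
	if err != nil {
		panic(err)
	}
	fmt.Println(req.Method, req.URL.Path, req.ContentLength)
}
```

The five-byte prefix is the only framing gRPC adds around each message; the call's final status is delivered separately in the grpc-status trailer.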

Managing gRPC Services with NGINX

The following examples use variants of the gRPC Hello World quickstart tutorials to create simple client and server applications. We share details of the NGINX configuration; the implementation of the client and server applications is left as an exercise for the reader, but we do share some hints.

Exposing a Simple gRPC Service

First, we interpose NGINX between the client and server applications. NGINX then provides a stable, reliable gateway for the server applications.

Start by deploying NGINX with the gRPC updates. If you want to build NGINX from source, remember to include the http_ssl and http_v2 modules:

    $ auto/configure --with-http_ssl_module --with-http_v2_module

NGINX listens for gRPC traffic using an HTTP server and proxies traffic using the grpc_pass directive. Create the following proxy configuration for NGINX, listening for unencrypted gRPC traffic on port 80 and forwarding requests to the server on port 50051:

    http {
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent"';

        server {
            listen 80 http2;

            access_log logs/access.log main;

            location / {
                # Replace localhost:50051 with the address and port of your gRPC server
                grpc_pass grpc://localhost:50051;
            }
        }
    }

Ensure that the address in the grpc_pass directive is correct. Recompile your client to point to NGINX’s IP address and listen port.

When you run your modified client, you see the same responses as before, but the transactions are terminated and forwarded by NGINX. You can see them in the access log you configured:

    $ tail logs/access.log
    192.168.20.1 - - [01/Mar/2018:13:35:02 +0000] "POST /helloworld.Greeter/SayHello HTTP/2.0" 200 18 "-" "grpc-go/1.11.0-dev"
    192.168.20.1 - - [01/Mar/2018:13:35:02 +0000] "POST /helloworld.Greeter/SayHelloAgain HTTP/2.0" 200 24 "-" "grpc-go/1.11.0-dev"

Publishing a gRPC Service with TLS Encryption

The Hello World quickstart examples use unencrypted HTTP/2 (cleartext) to communicate. This is much simpler to test and deploy, but it does not provide the encryption needed for a production deployment. You can use NGINX to add this encryption layer.

Create a self‑signed certificate pair and modify your NGINX server configuration, as follows:

    server {
        listen 1443 ssl http2;

        ssl_certificate ssl/cert.pem;
        ssl_certificate_key ssl/key.pem;

        #...
    }

Modify the gRPC client to use TLS, connect to port 1443, and disable certificate checking – necessary when using self‑signed or untrusted certificates. For instance, if you’re using the Go example, you need to:

- Add crypto/tls and google.golang.org/grpc/credentials to your import list

- Modify the grpc.Dial() call to the following:

      creds := credentials.NewTLS( &tls.Config{ InsecureSkipVerify: true } )

      // remember to update address to use the new NGINX listen port
      conn, err := grpc.Dial( address, grpc.WithTransportCredentials( creds ) )

That’s all you need to do to secure your gRPC traffic using NGINX. In a production deployment, you need to replace the self‑signed certificate with one issued by a trusted certificate authority (CA). The clients would be configured to trust that CA.
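For that production case, the client side might look roughly like the sketch below, which verifies the server certificate against a CA bundle instead of setting InsecureSkipVerify. The function name newClientTLSConfig, the host name grpc.example.com, and the choice of TLS 1.2 as the minimum version are assumptions made for this illustration, not part of the quickstart examples.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
	"fmt"
)

// newClientTLSConfig (hypothetical helper) returns a TLS configuration that
// trusts only the CAs in caPEM and checks the certificate against serverName.
func newClientTLSConfig(caPEM []byte, serverName string) (*tls.Config, error) {
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no valid CA certificates found in PEM data")
	}
	return &tls.Config{
		RootCAs:    pool,             // trust only the supplied CA bundle
		ServerName: serverName,       // name expected in the server certificate
		MinVersion: tls.VersionTLS12, // assumed policy, adjust as needed
	}, nil
}

func main() {
	// Placeholder input: in practice the CA bundle would be read from disk,
	// for example with os.ReadFile. Invalid data is rejected with an error.
	_, err := newClientTLSConfig([]byte("not a certificate"), "grpc.example.com")
	fmt.Println("error for invalid PEM:", err)
}
```

The resulting configuration can be passed to credentials.NewTLS() in place of the InsecureSkipVerify configuration used in the grpc.Dial() call shown earlier.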

Proxying to an Encrypted gRPC Service

You may also want to encrypt the gRPC traffic internally. You first need to modify the server application to listen for TLS‑encrypted (grpcs) rather than unencrypted (grpc) connections:

    cer, err := tls.LoadX509KeyPair( "cert.pem", "key.pem" )

    config := &tls.Config{ Certificates: []tls.Certificate{cer} }
    lis, err := tls.Listen( "tcp", port, config )

In the NGINX configuration, you need to change the protocol used to proxy gRPC traffic to the upstream server:

    # Use grpcs for TLS-encrypted gRPC traffic
    grpc_pass grpcs://localhost:50051;

Routing gRPC Traffic

What can you do if you have multiple gRPC services, each implemented by a different server application? Wouldn’t it be great if you could publish all these services through a single, TLS‑encrypted endpoint?

With NGINX, you can identify the service and method, and then route traffic using location directives. You may already have deduced that the URL of each gRPC request is derived from the package, service, and method names in the proto specification. Consider this sample SayHello RPC method:

    package helloworld;

    service Greeter {
        rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

Invoking the SayHello RPC method issues a POST request for /helloworld.Greeter/SayHello, as shown in this log entry:

    192.168.20.1 - - [01/Mar/2018:13:35:02 +0000] "POST /helloworld.Greeter/SayHello HTTP/2.0" 200 18 "-" "grpc-go/1.11.0-dev"

Routing traffic with NGINX is very straightforward:

    location /helloworld.Greeter {
        grpc_pass grpc://192.168.20.11:50051;
    }

    location /helloworld.Dispatcher {
        grpc_pass grpc://192.168.20.21:50052;
    }

    location / {
        root html;
        index index.html index.htm;
    }

You can try this out yourself. We extended the example Hello World package (in helloworld.proto) to add a new service named Dispatcher, then created a new server application that implements the Dispatcher methods. The client uses a single HTTP/2 connection to issue RPC calls for both the Greeter and Dispatcher services. NGINX separates the calls and routes each to the appropriate gRPC server.

Note the “catch‑all” / location block. This block handles requests that do not match known gRPC calls. You can use location blocks like this to deliver web content and other, non‑gRPC services from the same, TLS‑encrypted endpoint.
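The routing logic above can be modeled in a few lines of Go. This is a simplified sketch of longest-prefix matching, not NGINX's actual implementation (it ignores regular-expression and exact-match locations), and the names grpcPath, route, and match, as well as the Dispatch method name, are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// grpcPath builds the request path for an RPC: /<package>.<service>/<method>.
func grpcPath(pkg, service, method string) string {
	return "/" + pkg + "." + service + "/" + method
}

// route pairs a location prefix with the destination it proxies to.
type route struct {
	prefix string
	target string
}

// match returns the target of the longest prefix that matches path,
// or an empty string when no prefix matches.
func match(routes []route, path string) string {
	target, bestLen := "", -1
	for _, r := range routes {
		if strings.HasPrefix(path, r.prefix) && len(r.prefix) > bestLen {
			target, bestLen = r.target, len(r.prefix)
		}
	}
	return target
}

func main() {
	// Prefixes and targets mirror the location blocks shown above.
	routes := []route{
		{"/helloworld.Greeter", "grpc://192.168.20.11:50051"},
		{"/helloworld.Dispatcher", "grpc://192.168.20.21:50052"},
		{"/", "static files"},
	}
	paths := []string{
		grpcPath("helloworld", "Greeter", "SayHello"),
		grpcPath("helloworld", "Dispatcher", "Dispatch"), // hypothetical method
		"/index.html",
	}
	for _, p := range paths {
		fmt.Printf("%s -> %s\n", p, match(routes, p))
	}
}
```

Because the match is on a plain prefix, a location such as /helloworld.Greeter also catches any other service whose name begins with Greeter. Appending a trailing slash to the prefix narrows it to that one service.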

Load Balancing gRPC Calls

How can you now scale your gRPC services to increase capacity and to provide high availability? NGINX’s upstream groups do exactly this:

    upstream grpcservers {
        server 192.168.20.21:50051;
        server 192.168.20.22:50052;
    }

    server {
        listen 1443 ssl http2;

        ssl_certificate ssl/certificate.pem;
        ssl_certificate_key ssl/key.pem;

        location /helloworld.Greeter {
            grpc_pass grpc://grpcservers;
            error_page 502 = /error502grpc;
        }

        location = /error502grpc {
            internal;
            default_type application/grpc;
            add_header grpc-status 14;
            add_header grpc-message "unavailable";
            return 204;
        }
    }

Of course, you can use grpc_pass grpcs://grpcservers if your upstream servers are listening on TLS.

NGINX can employ a range of load‑balancing algorithms to distribute the gRPC calls across the upstream gRPC servers. NGINX’s built‑in health checking will detect if a server fails to respond, or generates errors, and then take that server out of rotation. If no servers are available, the /error502grpc location returns a gRPC‑compliant error message.
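As a rough illustration of the idea, the following Go sketch rotates through a list of servers, skips any that are marked as failed, and reports an error when none remain. It is a toy model rather than NGINX's algorithm: the upstream type, its pick method, and the down map are invented for this example. The constant 14 is the gRPC status code for UNAVAILABLE, the value returned by the /error502grpc location.

```go
package main

import (
	"errors"
	"fmt"
)

// statusUnavailable is the gRPC status code UNAVAILABLE.
const statusUnavailable = 14

var errNoServers = errors.New("no upstream servers available")

// upstream is a toy model of an upstream group with round-robin selection.
type upstream struct {
	servers []string
	down    map[string]bool // servers currently taken out of rotation
	next    int             // index of the next server to try
}

// pick returns the next server that is not marked down, trying each server
// at most once, or errNoServers if every server is out of rotation.
func (u *upstream) pick() (string, error) {
	for i := 0; i < len(u.servers); i++ {
		s := u.servers[u.next]
		u.next = (u.next + 1) % len(u.servers)
		if !u.down[s] {
			return s, nil
		}
	}
	return "", errNoServers
}

func main() {
	u := &upstream{
		servers: []string{"192.168.20.21:50051", "192.168.20.22:50052"},
		down:    map[string]bool{},
	}
	for i := 0; i < 3; i++ {
		s, _ := u.pick()
		fmt.Println("call", i+1, "->", s)
	}

	// Simulate both servers failing: the caller would then answer with
	// grpc-status 14, as the error location above does.
	u.down["192.168.20.21:50051"] = true
	u.down["192.168.20.22:50052"] = true
	if _, err := u.pick(); err != nil {
		fmt.Println(err, "-> grpc-status", statusUnavailable)
	}
}
```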

Next Steps

This is the initial release of our gRPC proxy support. Maxim Dounin was the lead developer for this feature, and we would also like to thank Piotr Sikora for his early patches and guidance.

Please feel free to share any feedback in the Comments below, or through our community mailing list, and submit any confirmed bug reports to our trac bug tracker.

The post Announcing gRPC Support in NGINX appeared first on NGINX.
