Architecting Robust Enterprise Application Network Services with NGINX and Diamanti

If you’re actively involved in architecting enterprise applications to run in production Kubernetes environments, or in deploying and managing the underlying container infrastructure, you know firsthand how containers use IT resources quite differently from non‑containerized applications, and how important it is to have an application‑aware network that can adapt at the fast pace of change typical of containerized applications.

In this post, we look at how the synergy between bare‑metal container infrastructure and an application‑centric load‑balancing architecture enables enterprises to deploy network services tailored to the needs of their containerized applications.

Recently, Diamanti – a leading provider of bare‑metal container infrastructure – announced its technology partnership with NGINX, developer of the eponymous open source load balancer. Below, we describe a few common use cases for modern load balancing and application network services deployed in Kubernetes environments, with NGINX and NGINX Plus running on the Diamanti D10 Bare‑Metal Container Platform.

With Diamanti’s newly released support for multi‑zone Kubernetes clusters, the combination of NGINX and Diamanti enhances not only application delivery and scalability but also high availability.

This post assumes a working knowledge of Kubernetes and an understanding of basic load‑balancing concepts.

Key Functional Requirements for Application Network Services

To meet the needs of Kubernetes‑based applications, network services need to provide the following functionality:

- Application exposure

  - Proxy – Hide an application from the outside world

  - Routing/ingress – Route requests to different applications and offer name‑based virtual hosting

- Performance optimization

  - Caching – Cache static and dynamic content to improve performance and decrease the load on the backend

  - Load balancing – Distribute network traffic across multiple instances of an application

  - High availability (HA) – Ensure application uptime in the event of a load balancer or site outage

- Security and simplified application management

  - Rate limiting – Limit the traffic to an application and protect it from DDoS attacks

  - SSL/TLS termination – Secure applications by terminating client TLS connections, without any need to modify the application

  - Health checks – Check the operational status of applications and take appropriate action

- Support for microservices

  - Central communications point for services – Enable backend services to be aware of each other

  - Dynamic scaling and service discovery – Scale and upgrade services seamlessly, in a manner that is completely transparent to users

  - Low‑latency connectivity – Provide the lowest‑latency path between source and target microservices

  - API gateway capability – Act as an API gateway for microservices‑based applications

  - Inter‑service caching – Cache data exchanged by microservices
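The load‑balancing and health‑check requirements above can be illustrated with a minimal round‑robin balancer that skips backends failing a health check. This is a sketch of the general technique, not NGINX’s implementation: the backend addresses and the `is_healthy` callback are hypothetical stand‑ins for real endpoints and active health probes.

```python
"""Round-robin load balancing that skips backends failing health checks.

A minimal sketch: the backend addresses and the health-check callback are
hypothetical placeholders, not part of NGINX or Kubernetes.
"""
from typing import Callable, List, Optional


class RoundRobinBalancer:
    def __init__(self, backends: List[str], is_healthy: Callable[[str], bool]) -> None:
        self.backends = list(backends)
        self.is_healthy = is_healthy  # in a real deployment, e.g. an HTTP probe
        self._next = 0  # index of the backend to try first on the next request

    def pick(self) -> Optional[str]:
        """Return the next healthy backend in rotation, or None if all are down."""
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if self.is_healthy(backend):
                return backend
        return None


if __name__ == "__main__":
    down = {"10.0.0.2:8080"}  # pretend this backend failed its last health check
    lb = RoundRobinBalancer(
        ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"],
        lambda b: b not in down,
    )
    print([lb.pick() for _ in range(4)])
```

Requests rotate across the remaining healthy instances, so a failed backend is taken out of service without any change on the client side.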

Diamanti’s Bare‑Metal Container Platform Eliminates Major Obstacles to Building Robust Network Services for Kubernetes

At best, container networking in Kubernetes is challenging. All of our customers who have attempted to build their own container infrastructure have told us that not only is network configuration complex, but it is extremely difficult to establish predictable performance for applications that require it, unless they substantially overprovision their infrastructure. Quality of service (QoS) is also critical for the stable operation of multi‑zone clusters, which is easily disrupted by fluctuations in network throughput and storage I/O.

Because the Diamanti platform is purpose‑built for Kubernetes environments, it can be made operationally ready for production applications in a fraction of the time it takes to build a Kubernetes stack from legacy infrastructure. More importantly, it addresses major challenges in building application network services through the following attributes and features:

- Plug‑and‑play networking

  - Diamanti’s custom network processing allows for plug‑and‑play data center connectivity and abstracts away the complexity of network constructs in Kubernetes.

  - Built‑in monitoring capabilities allow for clear visibility into a pod’s network usage.

  - Diamanti assigns routable IP addresses to each pod, enabling easy service discovery. A routable IP address means that load balancers within the network are already accessible, so exposing them requires no additional steps.

  - Support for network segmentation, enabling multi‑tenancy and isolation.

  - Support for multiple endpoints to allow higher bandwidth and cross‑segment communication.

- QoS

  - Diamanti provides QoS for each application. This guarantees bandwidth to load‑balancer pods, ensuring that the load balancers are not bogged down by other pods co‑hosted on the same node.

  - Applications behind the load balancer can also be rate limited using pod‑level QoS.

- Multi‑zone support

  - Diamanti supports setting up a cluster across multiple zones, allowing you to distribute applications and load balancers across multiple zones for HA, faster connectivity, and special access needs.
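Pod‑level rate limiting of the kind described above is commonly built on a token bucket. The following is a generic sketch of that technique, assuming explicit timestamps so the behavior is deterministic; it is not Diamanti’s QoS implementation, and the rate and capacity values are hypothetical.

```python
"""Token-bucket rate limiter: a generic illustration of per-pod rate limiting.

This is NOT Diamanti's QoS implementation; it only shows the common
token-bucket technique that traffic shapers use to cap throughput.
"""


class TokenBucket:
    def __init__(self, rate: float, capacity: float) -> None:
        self.rate = rate          # tokens (e.g. requests or bytes) added per second
        self.capacity = capacity  # maximum burst size
        self.tokens = capacity    # start full so an initial burst is allowed
        self.last = 0.0           # timestamp of the previous refill, in seconds

    def allow(self, now: float, cost: float = 1.0) -> bool:
        """Refill based on elapsed time, then admit the request if enough tokens remain."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate=2.0, capacity=2.0)  # hypothetical: 2 requests/s, burst of 2
    # Three requests at t=0 (the third is rejected), then two at t=0.5s.
    print([bucket.allow(0.0), bucket.allow(0.0), bucket.allow(0.0),
           bucket.allow(0.5), bucket.allow(0.5)])
```

The capacity bounds how large a burst a pod may send, while the rate bounds its sustained throughput.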

NGINX Ingress Controller and Load Balancer Are Key Building Blocks for Application Network Services

Traditionally, applications and services have been exposed to the external world through physical load balancers. The Kubernetes Ingress API was introduced to expose applications running in a Kubernetes cluster; it enables software‑based Layer 7 (HTTP) load balancing through a dedicated Ingress controller. A standard Kubernetes deployment does not include a native Ingress controller, so users can deploy any third‑party Ingress controller, such as the NGINX Ingress controller, which is widely used by NGINX Plus customers in their Kubernetes environments.

With advanced load‑balancing features using a flexible software‑based deployment model, NGINX provides an agile, cost‑effective means of managing the needs of microservices‑based applications. The NGINX Kubernetes Ingress controller provides enterprise‑grade delivery services for Kubernetes applications, with benefits for users of both open source NGINX and NGINX Plus. With the NGINX Kubernetes Ingress controller, you get basic load balancing, SSL/TLS termination, support for URI rewrites, and upstream SSL/TLS encryption.
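Name‑based virtual hosting through the Ingress API can be sketched by generating an Ingress resource for the fruit.com hostnames used in the reference architectures below. This example assumes the current `networking.k8s.io/v1` Ingress schema, hypothetical Services named `orange-svc`, `blueberry-svc`, and `strawberry-svc` listening on port 80, and an IngressClass named `nginx`; adjust all of these to match your cluster and controller installation.

```python
"""Build a Kubernetes Ingress manifest for name-based virtual hosting of fruit.com.

Assumptions (hypothetical, adjust to your cluster): Services named
"<fruit>-svc" listening on port 80, and an IngressClass named "nginx".
"""
import json
from typing import Dict, List


def fruit_ingress(services: List[str], domain: str = "fruit.com",
                  ingress_class: str = "nginx", port: int = 80) -> Dict:
    """Return an Ingress dict with one host rule per service."""
    rules = []
    for svc in services:
        rules.append({
            "host": f"{svc}.{domain}",  # e.g. orange.fruit.com
            "http": {"paths": [{
                "path": "/",
                "pathType": "Prefix",
                "backend": {"service": {"name": f"{svc}-svc", "port": {"number": port}}},
            }]},
        })
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": f"{domain.replace('.', '-')}-ingress"},
        "spec": {"ingressClassName": ingress_class, "rules": rules},
    }


if __name__ == "__main__":
    # kubectl accepts JSON as well as YAML: python fruit_ingress.py | kubectl apply -f -
    print(json.dumps(fruit_ingress(["orange", "blueberry", "strawberry"]), indent=2))
```

Each host rule tells the Ingress controller which Service should receive requests for that hostname; the controller then load balances across the pods behind that Service.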

Reference Architectures

There are many ways to provision the NGINX load balancer in a Kubernetes cluster running on the Diamanti platform. Here, we focus on two important architectures that illustrate the joint value of Diamanti and NGINX.

Load Balancing and Service Discovery Across a Multi-Zone Cluster

Diamanti enables the distribution of Kubernetes cluster nodes across multiple zones. This configuration greatly enhances application HA because it prevents any single zone from becoming a single point of failure.

In a multi‑zone Diamanti cluster, the simplest approach is to deploy an NGINX Ingress controller in each zone. This approach has several benefits:

- Deploying load balancers in multiple zones establishes HA. If the load balancer in one zone fails, another can take over to serve the requests.

- East‑west load balancing can be done within or across the zones. The cluster administrator can also define a particular zone affinity so that requests tend to stay within a zone for low latency, but go across zones in the absence of a local pod.

- Network traffic to the individual zones’ load balancers can be distributed via an external load balancer, or via another in‑cluster NGINX Ingress controller.
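The zone‑affinity behavior described above (keep requests in the local zone, cross zones only when no local pod exists) can be sketched as follows. The `Endpoint` type, zone labels, and addresses are hypothetical; with NGINX this policy would typically be expressed through controller configuration rather than application code.

```python
"""Zone-affinity endpoint selection: prefer pods in the caller's zone and
fall back to other zones only when no local pod exists.

A minimal sketch; the Endpoint type, zone names, and addresses are
hypothetical and do not come from NGINX or Diamanti.
"""
import itertools
from dataclasses import dataclass
from typing import Iterator, List


@dataclass(frozen=True)
class Endpoint:
    address: str
    zone: str


class ZoneAffinityBalancer:
    def __init__(self, local_zone: str, endpoints: List[Endpoint]) -> None:
        local = [e for e in endpoints if e.zone == local_zone]
        # Use local pods when there are any; otherwise go across zones.
        candidates = local or list(endpoints)
        if not candidates:
            raise ValueError("no endpoints for this service")
        self._cycle: Iterator[Endpoint] = itertools.cycle(candidates)

    def pick(self) -> Endpoint:
        """Round-robin among the chosen candidates."""
        return next(self._cycle)


if __name__ == "__main__":
    orange = [Endpoint("10.1.0.5", "zone-1"), Endpoint("10.2.0.7", "zone-2")]
    strawberry = [Endpoint("10.2.0.9", "zone-2")]  # no strawberry pod in zone-1
    print(ZoneAffinityBalancer("zone-1", orange).pick().address)      # stays local
    print(ZoneAffinityBalancer("zone-1", strawberry).pick().address)  # crosses zones
```

Because the candidate list is fixed when the balancer is created, a real implementation would rebuild it whenever service discovery reports pods being added or removed.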

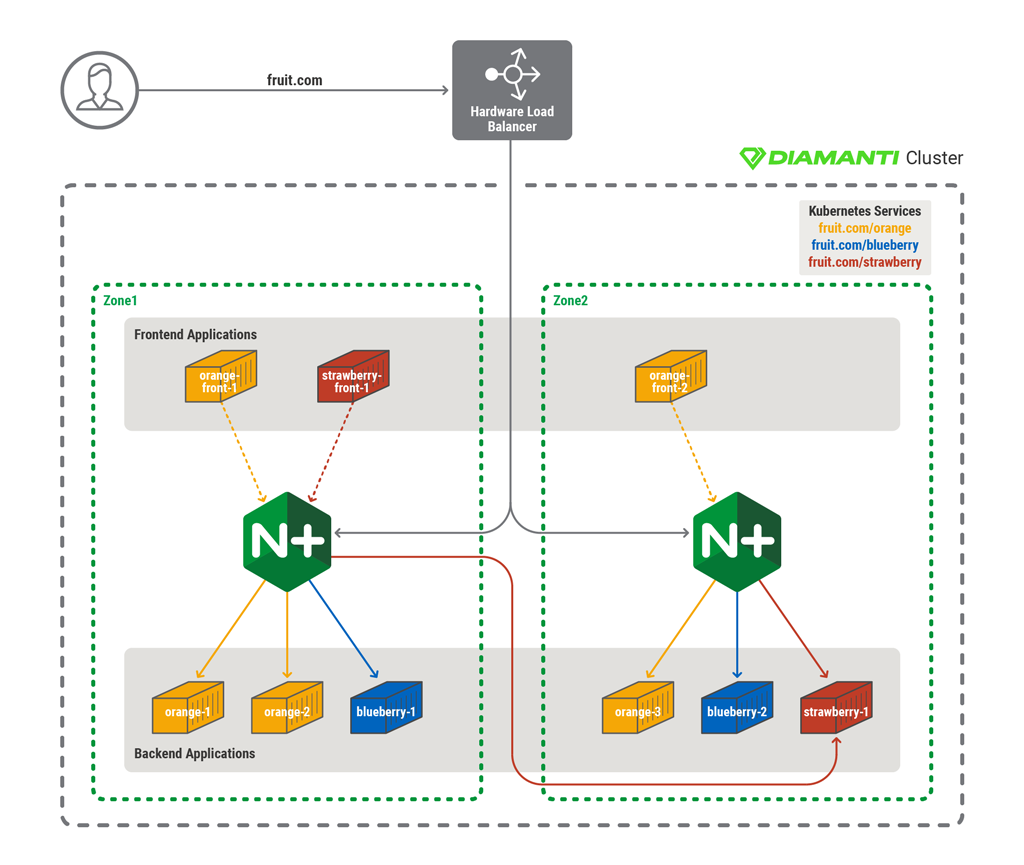

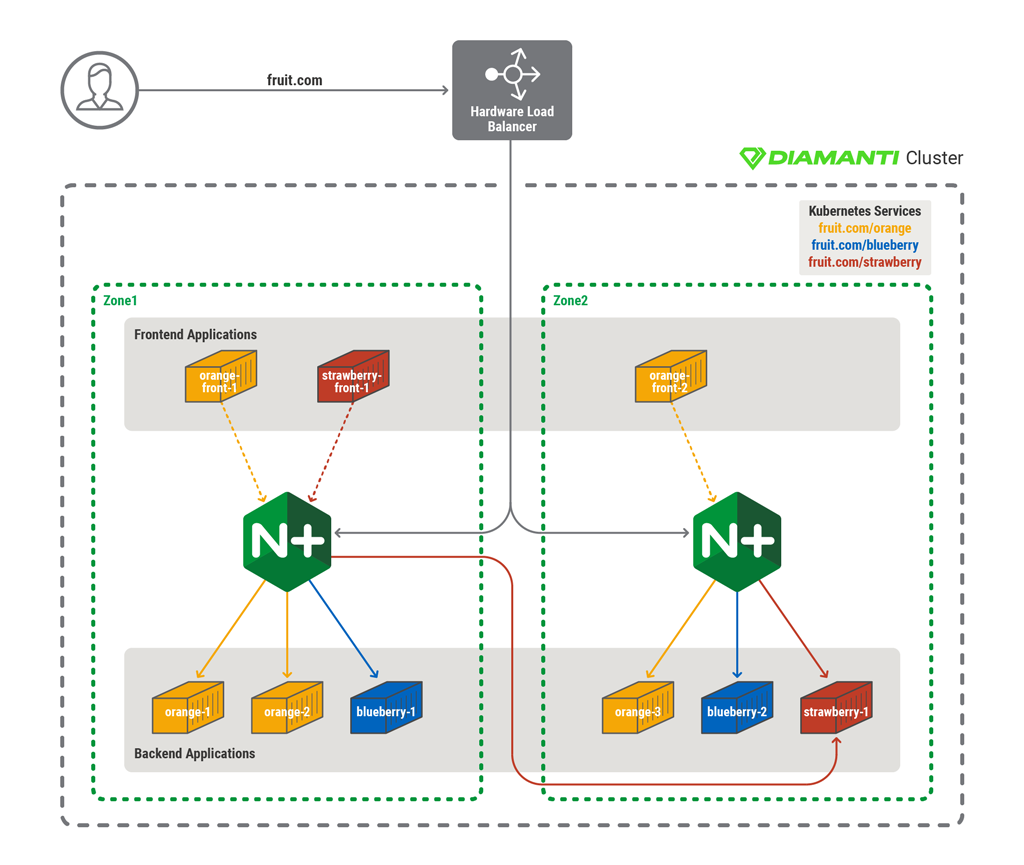

The following diagram shows an example of an multi‑zone architecture.

In this example, the Kubernetes cluster is distributed across two zones and serves the application fruit.com. A DNS server or external load balancer can distribute inbound traffic in round‑robin fashion to the NGINX Ingress controller in each zone of the cluster. Based on the defined Ingress rules, the NGINX Ingress controller discovers all of the frontend and backend pods of the orange, blueberry, and strawberry services.

To minimize latency across zones, each zone’s Ingress controller can be configured to discover only the services within its own zone. In this example, however, Zone 1 has no local backend strawberry service, so Zone 1’s Ingress controller must also be configured to discover backend strawberry services in other zones. Alternatively, each zone’s load balancer can be configured to discover all pods in the cluster, across zones. In that case, load balancing can be based on the lowest latency, so that pods within the local zone are preferred. However, this approach increases the risk of a load imbalance within the cluster, because the local pods attract most of the traffic while pods in other zones may be underused.
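The load‑imbalance risk of the latency‑based alternative can be illustrated with a toy model in which every request goes to the lowest‑latency pods. The pod names and latency figures are hypothetical, and real load balancers measure latency continuously rather than using fixed values.

```python
"""Toy model of "discover every pod, prefer the lowest latency".

Latencies are hypothetical static values seen from one zone's load balancer;
the point is only to show how traffic concentrates on the nearest pods.
"""
from collections import Counter
from typing import Dict, List


def lowest_latency_pods(latency_ms: Dict[str, float]) -> List[str]:
    """Return every pod tied for the lowest latency, in sorted order."""
    best = min(latency_ms.values())
    return sorted(pod for pod, ms in latency_ms.items() if ms == best)


def distribute(latency_ms: Dict[str, float], requests: int) -> Counter:
    """Send each request to the lowest-latency pods, round-robin among ties."""
    winners = lowest_latency_pods(latency_ms)
    counts: Counter = Counter({pod: 0 for pod in latency_ms})
    for i in range(requests):
        counts[winners[i % len(winners)]] += 1
    return counts


if __name__ == "__main__":
    # As seen from Zone 1: two local orange pods and one orange pod in Zone 2.
    seen_from_zone1 = {"orange-z1-a": 0.2, "orange-z1-b": 0.2, "orange-z2-a": 1.5}
    print(dict(distribute(seen_from_zone1, 100)))
```

With these numbers, the two Zone 1 orange pods each receive 50 of the 100 requests while the Zone 2 pod receives none, which is the imbalance the paragraph above warns about.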

Once the Ingress controller is set up, services can be accessed via hostname (orange.fruit.com, blueberry.fruit.com, and strawberry.fruit.com). The NGINX Ingress controller in each zone load balances across all pods of the corresponding service. Frontend pods (such as orange‑front) access the backend orange service within the local zone to avoid high network latency. However, frontend pods whose backend service has no pods in the local zone (such as strawberry‑front) must go across zones to reach it.

Load Balancing and Service Discovery in a Fabric Mesh Architecture

Of the many possible modern load‑balancing approaches, the most flexible is client‑side load balancing performed directly from each pod. This architecture is also referred to as a service mesh or fabric model. Here, each pod has its own Ingress controller running as a sidecar container, making it fully capable of performing its own client‑side load balancing. Ingress controller sidecars can be deployed to pods manually or injected automatically with a tool such as Istio. In addition to the per‑pod Ingress controllers, a cluster‑level Ingress controller is required to expose the desired services to the external world. A service mesh architecture has the following benefits:

- Facilitates east‑west load balancing and microservices architecture

- Each pod is aware of all the backends, which helps to minimize hops and latency

- Enables secure SSL/TLS communication between pods without modifying applications

- Facilitates built‑in health monitoring and cluster‑wide visibility

- Facilitates HA for east‑west load balancing

The following diagram shows an example of a service‑mesh architecture.

Here, the cluster serves the application fruit.com and is configured with one NGINX Ingress controller per pod, forming a fabric. A DNS server or external load balancer routes requests for fruit.com to the top‑level Ingress controller, which is the primary ingress point to the cluster. According to the Ingress rules, the top‑level Ingress controller discovers all the pods for the orange, blueberry, and strawberry services, as does each sidecar Ingress controller in the fabric.

Once the Ingress controller fabric is set up, services can be accessed via hostname (orange.fruit.com, blueberry.fruit.com, and strawberry.fruit.com). The cluster‑level NGINX Ingress controller load balances across all pods of the related services, based on hostname. Other frontend application pods running on the same cluster (orange‑front, blueberry‑front, and strawberry‑front) can access their respective backend application pods directly, via their own sidecar Ingress controllers.

Conclusion

Robust application network services are critical for enterprise organizations seeking to improve availability, deployment, and scalability for production Kubernetes applications. For these purposes, the choice of container infrastructure and the approach to modern load balancing are the key success factors. Diamanti and NGINX pair well together to mitigate the risk of downtime, manage network traffic, and optimize overall application performance.

The post Architecting Robust Enterprise Application Network Services with NGINX and Diamanti appeared first on NGINX.
