Building a Web Frontend with Microservices and NGINX Plus

Here at NGINX we’ve begun exploring the frontiers of microservices application development through our NGINX Microservices Reference Architecture, with a sample photo storage and sharing site as our prototype. This blog post is the first in a series of articles dedicated to exploring the real-world problems and solutions that developers, architects, and operations engineers deal with on a daily basis in building their web-scale applications.

This article addresses an application-delivery component that has been largely ignored in the microservices arena: the web frontend. While many articles and books have been written about service design, there is little information about how to layer a rich, user-experience-focused web component on top of microservice components. This article proposes a solution to the thorny problem of web development in a microservices application.

In many respects, the web frontend is the most complex component of your microservices-based application. On a technical level, it combines business and display logic written in a mix of JavaScript, a server-side language like PHP, HTML, and CSS. Adding to the complexity, the user experience of the web app typically crosses microservice boundaries in the backend, making the web component a de facto control layer. This control layer is typically implemented as some kind of state machine, yet it must also be fluid, high-performance, and elegant. These technical and user-experience requirements run counter to the design philosophy of the modern microservices web, which favors components that are small, focused, and ephemeral. In many respects, it is better to compare the web frontend of an app to an iOS or Android client, which is both a service-based client and a rich application unto itself.

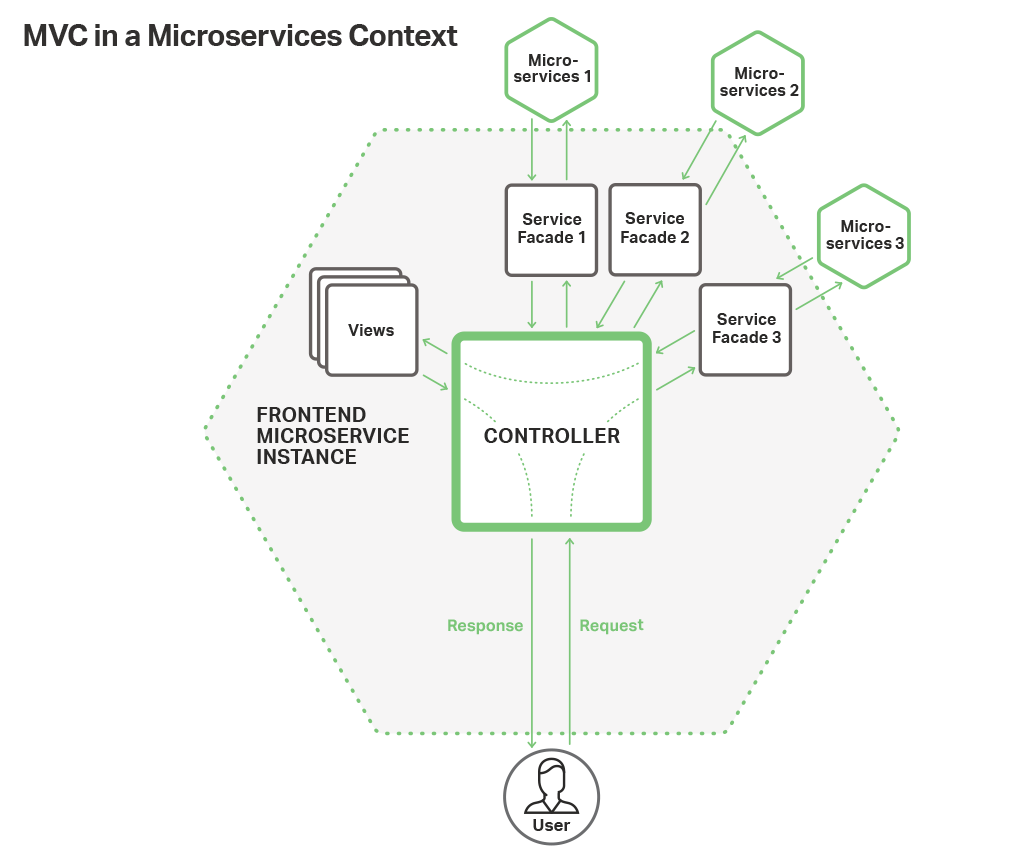

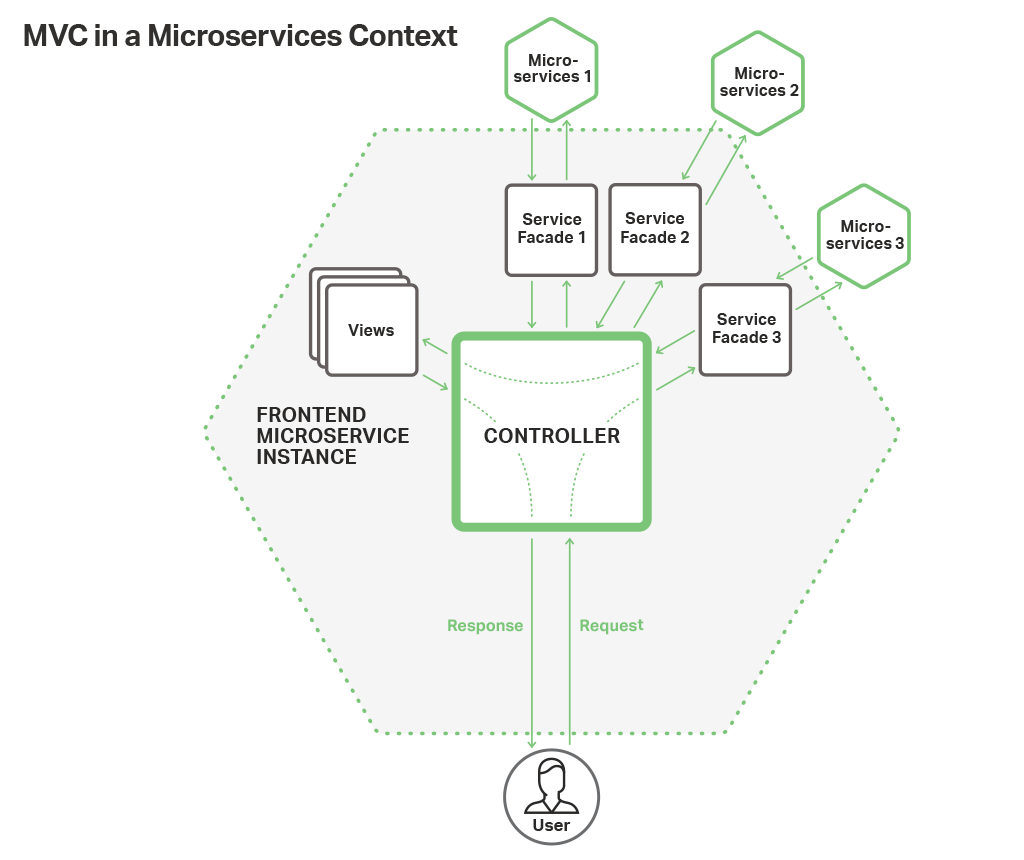

Our approach to building a web frontend combines the best of web application design with microservices philosophy, to provide a rich user experience that is service‑based, stateless, and connected. When building a microservices web component, the solution combines a Model-View-Controller (MVC) framework for control, attached resources to maintain session state, and routing by NGINX Plus to provide access to services.

Using MVC for Control

One of the most important technical steps forward in web application design has been the adoption of MVC frameworks. MVC frameworks exist for every major language, from Symfony for PHP and Spring for Java to Ruby on Rails, and even for the browser in JavaScript frameworks like Ember.js. MVC frameworks segment code into areas of like concern: display logic is managed in views, data structures are managed in models, and state changes and data manipulation are managed through controllers. Without an MVC framework, control logic is often intermixed with display logic on the page, a pattern that is common in traditional PHP development.

The clear division of labor in MVC helps guide the process of converting web applications into microservice-like frontend components. Fortunately, most of the change is confined to the controller layer. Views don’t need to change in any significant way, because the stateless, ephemeral nature of a microservice doesn’t change the basic way data is displayed. Similarly, models in an MVC system map easily to the data structures of microservices, and the default way to interact with models is through the microservices that manage them. In many respects, this mode of interaction makes model development easier, because the data structures and manipulation methods are the domain of the microservices teams that implement them rather than of the web frontend team.
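As a minimal sketch of a model that simply mirrors a microservice's data structure, the Python below hydrates a view-facing `Album` model from a JSON payload. The `Album` class, its fields, and the payload shape are hypothetical; the reference architecture's models are Symfony services written in PHP, so this only illustrates the idea that the model carries no persistence logic of its own.

```python
import json
from dataclasses import dataclass

# Hypothetical JSON payload returned by a photo-album microservice.
ALBUM_JSON = '{"id": 7, "name": "Vacation", "photo_ids": [101, 102]}'


@dataclass
class Album:
    """View-facing model whose fields mirror the service's data structure.

    Creating, updating, and validating albums is left to the microservice
    that owns them; the model only carries the data to the view.
    """

    id: int
    name: str
    photo_ids: list[int]

    @classmethod
    def from_service(cls, payload: str) -> "Album":
        data = json.loads(payload)
        return cls(id=data["id"], name=data["name"], photo_ids=list(data["photo_ids"]))


album = Album.from_service(ALBUM_JSON)
print(album.name, len(album.photo_ids))  # Vacation 2
```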

It is in controllers that the biggest changes are required. Controllers typically manage the interplay between a user’s actions, the data models, and the views. If a user clicks a link or submits a form, the controller intercepts the request, marshals the relevant components, invokes methods on the models to change the data, collects the resulting data, and passes it to the views. In many respects, controllers implement a finite state machine (FSM) and manage the state transition tables that describe how user actions move the application between logical states. Where there are complex interactions across multiple services, it is fairly common to build manager services that the controllers interact with; this makes testing more discrete and direct.
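The transition-table idea can be sketched in a few lines of Python. This hypothetical checkout controller (the state and action names are illustrative assumptions, not taken from the reference architecture) looks up each (state, action) pair in a table and rejects any transition the table does not allow:

```python
# Hypothetical checkout flow: (current_state, action) -> next_state.
TRANSITIONS = {
    ("cart", "checkout"): "shipping",
    ("shipping", "next"): "billing",
    ("billing", "back"): "shipping",
    ("billing", "next"): "review",
    ("review", "back"): "billing",
    ("review", "submit"): "submitted",
}


class CheckoutController:
    """Finite state machine driven entirely by the TRANSITIONS table."""

    def __init__(self, state: str = "cart") -> None:
        self.state = state

    def handle(self, action: str) -> str:
        """Apply a user action, or raise if it is not valid in the current state."""
        key = (self.state, action)
        if key not in TRANSITIONS:
            raise ValueError(f"action {action!r} not allowed in state {self.state!r}")
        self.state = TRANSITIONS[key]
        return self.state


ctl = CheckoutController()
for action in ["checkout", "next", "back", "next", "next", "submit"]:
    ctl.handle(action)
print(ctl.state)  # submitted
```

Keeping the transitions in data rather than in branching code makes the allowed flows, including moving back and forth between screens, easy to review and to test.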

In the NGINX Microservices Reference Architecture, we used the PHP framework Symfony for our MVC system. Symfony has many powerful features for implementing MVC and adheres to the clear separation of concerns that we were looking for in an MVC system. We implemented our models as services that connect directly to the backend microservices, and our views as Twig templates. The controllers handle the interfaces between user actions, the services (through façades), and the views. Had we tried to implement the application without an MVC framework for the web frontend, the code and its interplay with the microservices would have been much messier, with no clear seams for layering the web frontend onto the microservices.
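To illustrate the façade arrangement, here is a hedged Python sketch rather than the actual Symfony code. `AlbumServiceClient`, `PhotoServiceClient`, `AlbumFacade`, and `show_album` are hypothetical names, and the two clients are stubs standing in for HTTP calls to backend microservices.

```python
from dataclasses import dataclass


# Hypothetical stubs standing in for HTTP clients of two backend microservices.
class AlbumServiceClient:
    def get_album(self, album_id: int) -> dict:
        return {"id": album_id, "name": "Vacation", "photo_ids": [101, 102]}


class PhotoServiceClient:
    def thumbnail_url(self, photo_id: int) -> str:
        return f"/thumbs/{photo_id}.jpg"


@dataclass
class AlbumPage:
    title: str
    thumbnails: list[str]


class AlbumFacade:
    """Single entry point for the controller; hides which services are called."""

    def __init__(self, albums: AlbumServiceClient, photos: PhotoServiceClient) -> None:
        self.albums = albums
        self.photos = photos

    def album_page(self, album_id: int) -> AlbumPage:
        album = self.albums.get_album(album_id)
        thumbs = [self.photos.thumbnail_url(pid) for pid in album["photo_ids"]]
        return AlbumPage(title=album["name"], thumbnails=thumbs)


def show_album(facade: AlbumFacade, album_id: int) -> dict:
    """Controller action: ask the façade for data and hand it to the view layer."""
    page = facade.album_page(album_id)
    return {"title": page.title, "thumbnails": page.thumbnails}


context = show_album(AlbumFacade(AlbumServiceClient(), PhotoServiceClient()), 7)
print(context["thumbnails"])  # ['/thumbs/101.jpg', '/thumbs/102.jpg']
```

Because the controller depends only on the façade, a test can pass in stub clients like these instead of live services.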

Maintaining Session State

Web applications can become truly complex when they provide a cohesive interface to a series of actions that cross service boundaries. Consider a common e-commerce shopping cart implementation. The user begins by selecting one or more products to buy while navigating the site. When finished shopping and ready to check out, the user clicks the cart icon to start the purchase flow. The app presents a list of the items marked for purchase, along with relevant data such as the quantity ordered. The user then proceeds through the purchase flow: entering shipping information and billing information, reviewing the order, and finally authorizing the purchase. Each form is typically validated, and its information is used by later screens (quantities on the review screen, shipping information on the billing form, and so on). The user can typically move back and forth between the screens to make changes until the order is finally submitted.

In monolithic applications like Oracle ATG Web Commerce, form data is maintained throughout a session for easy access by the application objects. To maintain this association, users are pegged to an application instance via a session cookie. ATG even has a complex scheme for maintaining sessions in a clustered environment to provide resiliency in case of a system fault. The microservices approach eschews the idea of session state and in-memory session data across page requests, so how does a microservices web app deal with the shopping cart situation described above?

This is the inherent conundrum of a web app in a microservices environment. In this scenario, the web app is probably crossing service boundaries – the shipping form might connect to a shipping service, the billing form to a billing service, and so on. If the web app is supposed to be ephemeral and stateless, how is it supposed to keep track of the submitted data and state of the process?

There are a number of approaches to solving this problem, but the one we like best is to use a caching-oriented attached resource to maintain session state (see 12factor.net for further details on attached resources). Using an attached resource to maintain session state means that the same logical flows used in monolithic apps can be applied to a microservices web app, but the data is stored in a high-speed caching system with atomic operations, such as Redis or Memcached, instead of in memory on the web frontend instance.
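A minimal sketch of the attached-resource idea, assuming session data is serialized to JSON and stored with an expiry. The `SessionStore` class is a hypothetical in-memory stand-in so the example runs without a server; with the redis-py client, `save` would correspond roughly to `set(name, value, ex=ttl_seconds)` and `load` to `get(name)`.

```python
import json
import time
from typing import Callable, Optional


class SessionStore:
    """In-memory stand-in for an attached cache such as Redis or Memcached.

    Values are serialized to JSON, as they would be when sent to a real
    cache, and each entry expires ttl_seconds after it is saved.
    """

    def __init__(self, clock: Callable[[], float] = time.monotonic) -> None:
        self._items: dict[str, tuple[float, str]] = {}
        self._clock = clock

    def save(self, session_id: str, data: dict, ttl_seconds: int) -> None:
        self._items[session_id] = (self._clock() + ttl_seconds, json.dumps(data))

    def load(self, session_id: str) -> Optional[dict]:
        item = self._items.get(session_id)
        if item is None:
            return None
        expires_at, payload = item
        if self._clock() >= expires_at:
            del self._items[session_id]  # lazily evict the expired session
            return None
        return json.loads(payload)


# Usage with a fake clock so that expiry is deterministic.
now = [0.0]
store = SessionStore(clock=lambda: now[0])
store.save("sess-42", {"cart": [{"sku": "A1", "qty": 2}]}, ttl_seconds=7 * 24 * 3600)
print(store.load("sess-42"))  # {'cart': [{'sku': 'A1', 'qty': 2}]}
now[0] += 8 * 24 * 3600  # eight days later, past the one-week TTL
print(store.load("sess-42"))  # None
```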

With a caching system in place, users can be routed to any web frontend instance and the data is readily available to that instance, much as it was with an in-memory session system. This also has the added benefit of persisting the session if the user leaves the site before purchasing: data in the cache can be kept for an extended period (typically days, weeks, or months), whereas in-memory session data is typically cleared after about 20 minutes. While there is a slight performance hit from using a caching system instead of in-memory objects, the inherent scalability of the microservices approach means that the application can be scaled much more easily in response to load, so the performance bottleneck typically associated with a monolithic application largely disappears.
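The sketch below shows why any instance can serve any request: two hypothetical frontend instances, chosen round-robin, keep nothing in their own memory and read and write a shared store. A plain dict stands in for the cache here, and the instance names and session ID are illustrative assumptions.

```python
import itertools
import json

# Shared attached resource; a plain dict stands in for Redis in this sketch.
SHARED_STORE: dict[str, str] = {}


class FrontendInstance:
    """A stateless web frontend: session data lives only in the shared store."""

    def __init__(self, name: str, store: dict[str, str]) -> None:
        self.name = name
        self.store = store

    def save_shipping(self, session_id: str, address: str) -> None:
        cart = json.loads(self.store.get(session_id, "{}"))
        cart["shipping"] = address
        self.store[session_id] = json.dumps(cart)

    def render_review(self, session_id: str) -> str:
        cart = json.loads(self.store.get(session_id, "{}"))
        return f"{self.name}: ship to {cart.get('shipping', '?')}"


instances = [FrontendInstance("web-1", SHARED_STORE), FrontendInstance("web-2", SHARED_STORE)]
round_robin = itertools.cycle(instances)

next(round_robin).save_shipping("sess-42", "1 Main St")  # handled by web-1
print(next(round_robin).render_review("sess-42"))  # web-2: ship to 1 Main St
```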

The NGINX Microservices Reference Architecture implements a Redis cache, allowing session state to be saved across requests where needed.

Routing to and Load Balancing Microservices

While maintaining session state adds complexity to the system, modern web applications don’t implement user interactions only in server logic. For a variety of user-experience reasons, most web applications also implement key functionality in JavaScript in the browser. The NGINX Microservices Reference Architecture, for example, implements much of the photo uploading and display logic in JavaScript on the client. However, browser JavaScript is subject to a security restriction called the same-origin policy, which can make it difficult to access microservices directly. By default, the same-origin policy prevents JavaScript applications from accessing servers other than the one they were loaded from, known as the origin. Using NGINX Plus, we are able to overcome this limitation by routing requests to microservices through the origin.

A typical approach to implementing microservices is to give each service its own DNS entry. For example, we might name the uploader microservice in our Microservices Reference Architecture uploader.example.com and the web app pages.example.com. This makes service discovery fairly simple, because finding the endpoints requires only a DNS lookup. However, because of the same-origin policy, JavaScript applications cannot by default access hosts other than the origin. In our example, JavaScript loaded from pages.example.com can connect only to pages.example.com, not to uploader.example.com.
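A browser treats two URLs as the same origin when their scheme, host, and port all match. The simplified Python check below (the `origin` and `same_origin` helpers are hypothetical, and real browsers apply further rules) shows why a path on pages.example.com is reachable from the page while a separate subdomain is not.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Return the (scheme, host, port) triple that defines a web origin."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS[parts.scheme]  # fill in the implied port
    return (parts.scheme, parts.hostname, port)


def same_origin(a: str, b: str) -> bool:
    return origin(a) == origin(b)


page = "https://pages.example.com/albums/7"
print(same_origin(page, "https://uploader.example.com/image"))  # False
print(same_origin(page, "https://pages.example.com/uploader/image"))  # True
```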

As mentioned before, we use the PHP Symfony framework to implement the web app in the NGINX Microservices Reference Architecture. For high performance, the web app runs in a Docker container that pairs NGINX Plus with the PHP FastCGI Process Manager (PHP-FPM) engine, to which NGINX Plus passes PHP requests. Combining NGINX Plus with PHP-FPM gives us a tremendous amount of flexibility in configuring the HTTP/HTTPS side of the web interaction, as well as powerful, software-based load-balancing features. The load-balancing features are particularly important for giving JavaScript access to the microservices it needs to interact with.

By configuring NGINX Plus as the web server and load balancer, we can easily add routes to the needed microservices using location blocks and upstream server definitions. In this case, the JavaScript application requests pages.example.com/uploader instead of uploader.example.com. An added benefit is that NGINX Plus provides powerful load-balancing features, such as health checks of the services and Least Time load balancing (least_time in the configuration), across any number of instances of the uploader service. In this way, we overcome the same-origin restriction on JavaScript applications and give them full access to the microservices they need to interact with.

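```nginx
http {
    # Use local DNS and override the TTL with whatever value makes sense
    resolver ns.example.com valid=30s;

    upstream uploader {
        zone backend 64k;    # shared memory, needed for "resolve" and health checks
        least_time header;   # NGINX Plus Least Time load balancing
        # "resolve" re-resolves the name through the resolver above as DNS changes
        server uploader.example.com resolve;
    }

    server {
        listen 443 ssl;
        server_name pages.example.com;
        # Placeholder certificate paths; substitute your own
        ssl_certificate     /etc/nginx/ssl/pages.example.com.crt;
        ssl_certificate_key /etc/nginx/ssl/pages.example.com.key;

        root /www/public_html;
        status_zone pages;

        ## Default location
        location / {
            # Try to serve the file directly, fall back to app.php
            try_files $uri /app.php$is_args$args;
        }

        # Pass the Symfony front controller to PHP-FPM (the address is an assumption)
        location ~ ^/app\.php(/|$) {
            fastcgi_pass 127.0.0.1:9000;
            fastcgi_split_path_info ^(.+\.php)(/.*)$;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $realpath_root$fastcgi_script_name;
            internal;
        }

        # Reach the uploader microservice through the page's own origin
        location /uploader/image {
            proxy_pass http://uploader;
            proxy_set_header Host uploader.example.com;
            health_check;    # NGINX Plus active health checks
        }
    }
}
```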
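The routing decision that the configuration above delegates to NGINX Plus can be approximated in Python. This is a simplified sketch, not how NGINX Plus implements `least_time`: the routing table, upstream addresses, and timing figures are hypothetical, and the selection rule simply picks the lowest average header time and breaks ties by active connections.

```python
from dataclasses import dataclass


@dataclass
class Upstream:
    address: str
    avg_header_ms: float  # observed average time to first response header
    active: int = 0  # requests currently in flight


# Hypothetical routing table: path prefix on the origin -> upstream pool.
ROUTES = {
    "/uploader/image": [Upstream("10.0.0.11:80", 42.0), Upstream("10.0.0.12:80", 18.5)],
}


def pick_least_time(pool: list[Upstream]) -> Upstream:
    # Simplified rule: lowest average header time, then fewest active requests.
    return min(pool, key=lambda u: (u.avg_header_ms, u.active))


def route(path: str) -> str:
    # Longest matching prefix wins, roughly like nginx prefix locations.
    matches = [prefix for prefix in ROUTES if path.startswith(prefix)]
    if not matches:
        return "local"  # handled by the web app itself (static file or app.php)
    pool = ROUTES[max(matches, key=len)]
    return pick_least_time(pool).address


print(route("/uploader/image/upload"))  # 10.0.0.12:80
print(route("/albums/7"))  # local
```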

Conclusion

Implementing web application components in microservices apps is challenging because they don’t fit neatly into the standard microservices component architecture. They typically cross service boundaries and require both server logic and browser-based display logic. These characteristics call for careful solutions to work properly in a microservices environment. The simplest way to approach this is to:

- Implement the web app using an MVC framework to clearly separate logical control from the data models and display views.

- Use a high-speed caching attached resource to maintain session state.

- Use NGINX Plus for routing to and load balancing microservices, to provide browser-based JavaScript logic with access to the microservices it needs to interact with.

This approach maintains microservices best practices while providing the rich web features needed for a world-class web frontend. Web frontends created using this methodology enjoy the scalability and development benefits of a microservices approach.

Be sure to attend our webinar for more insights into how to build a web frontend using microservices and NGINX Plus.

For in-depth information about many aspects of microservices, check out our blog series from industry thought leader Chris Richardson.

The post Building a Web Frontend with Microservices and NGINX Plus appeared first on NGINX.

Source: Building a Web Frontend with Microservices and NGINX Plus