Deploying Application Services in Kubernetes, Part 1

If we’ve observed just one change that has come with the growth of Kubernetes and cloud‑native architectures, it’s that DevOps teams and application owners are taking more direct control over how their applications are deployed, managed, and delivered.

Modern applications benefit from an increasingly sophisticated set of supporting “application services” to ensure their successful operation in production. The separation between the application and its supporting services has become blurred, and DevOps engineers are discovering that they need to influence or own these services.

Let’s look at a couple of specific examples:

- Canary and blue‑green deployments – DevOps teams are pushing applications into production, sometimes multiple times per day. They actively use the traffic‑steering capabilities of the load balancer or application delivery controller (ADC) to validate new application instances with small quantities of traffic before switching all traffic over to them from the old instances.

- DevSecOps pipelines – DevOps teams are building security policies, such as web application firewall (WAF) rule sets, directly into their CI/CD pipelines. Policies are handled as just another application artifact and deployed automatically into test, pre‑production, and production environments. Although a SecOps team may define the security policy, DevOps performs the actual deployment into production.
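
To make the canary example concrete, here is a minimal Python sketch of percentage‑based traffic steering. It is an illustration only, not how any particular load balancer or ADC implements it: the names `pick_backend` and `rollout`, the `client-N` request keys, and the 5/25/50/100 rollout steps are all assumptions made for this example.

```python
import hashlib


def pick_backend(request_key: str, canary_percent: int) -> str:
    """Return 'canary' or 'stable' for a request (hypothetical helper).

    The request key is hashed so that the same client consistently lands
    in the same bucket while the canary percentage stays unchanged.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # value in 0..99
    return "canary" if bucket < canary_percent else "stable"


def rollout(request_keys, steps=(5, 25, 50, 100)):
    """Yield (target_percent, observed_share) for each assumed rollout step."""
    for percent in steps:
        hits = sum(pick_backend(key, percent) == "canary" for key in request_keys)
        yield percent, hits / len(request_keys)


if __name__ == "__main__":
    keys = [f"client-{i}" for i in range(10_000)]
    for percent, share in rollout(keys):
        print(f"target {percent:3d}% -> observed canary share {share:.1%}")
```

Because the bucket for a given key never changes, raising the percentage only moves more clients onto the new instances; a client already on the canary stays there, which keeps the validation traffic stable as the rollout widens.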

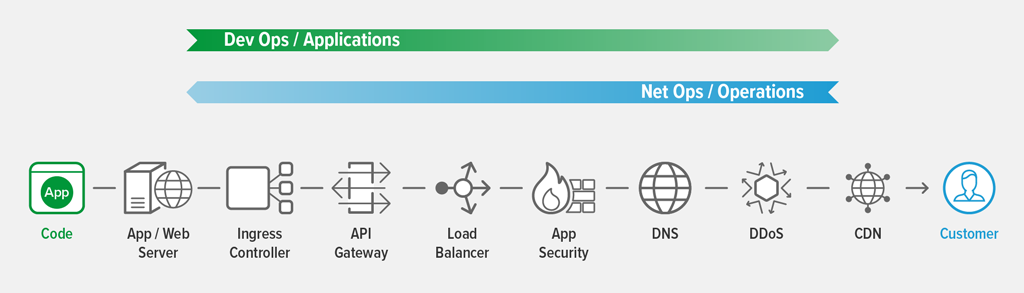

These examples each relate to what we refer to as “application services” – capabilities that are not part of an application’s functional requirements but are necessary to ensure its successful operation. They can include caching, load balancing, authentication, WAF, and denial-of-service (DoS) protection, and they provide scale, performance acceleration, or security.

NetOps and DevOps Both Have an Interest in Application Services

The rise of DevOps in no way takes away from the role of NetOps teams, who still have responsibility for the operation of the entire platform and its required application services. It remains vital for NetOps to control these global‑scale services. In fact, where both NetOps and DevOps have an interest in an application service such as an ADC or WAF, we often see duplication of that service. This is not an inefficiency, but rather reflects the differing needs and goals of the parties as they each make use of that service:

- NetOps and SecOps are responsible for the overall security, performance, and availability of the corporate infrastructure. Their goals are measured in terms of reliability, uptime, and latency, and in their ability to establish a secure perimeter. The tools they use are typically multi‑tenant (managing traffic for many applications and many lines of business), infrastructure‑centric, and long‑lasting. The monitoring and alerting tools they use are similarly infrastructure‑focused.

- DevOps and DevSecOps are responsible for individual applications that their associated line of business needs to operate. Their goals are met when they can iterate quickly, bring new services to market easily, and respond to changing business needs. Things are expected to break, and reliability and uptime take second place to the ability to rapidly troubleshoot and resolve the inevitable, unforeseen production issues. The tools they use tend to be software‑based (often open source), easily automatable via config files and APIs, and rapidly deployed and scaled as needed.

Paradoxically, Sometimes Duplication Leads to Operational Efficiency

Why is duplication of functionality not an inefficiency? Simply put, because both NetOps and DevOps need to make use of certain functionality but have very different goals, metrics, and ways of operating. We have seen many cases where expecting DevOps and NetOps to share a common ADC (for example) creates conflict and introduces inefficiencies of its own.

NetOps and Operations teams care most about the global services that tend to be located at the front door of the infrastructure, closer to customers. DevOps and Applications teams care most about the application‑specific services that are deployed closer to the application code. Their interests often overlap in the middle.

You can create operational efficiency by providing the right tools for each team according to its needs. For example, F5’s BIG‑IP ADC infrastructure meets the needs of NetOps very effectively, having been refined to that purpose over many years of product development. NGINX’s software ADC meets the needs of DevOps users very well, as a software form factor that is easily deployed and automated through CI/CD pipelines.

The careful placement of ownership and responsibility for each application service is at the heart of building operational efficiency. For example, when you deploy two tiers of ADC or load balancer:

- NetOps has the ability to manage all network traffic, apply security policies, optimize routing, and monitor the health and performance of the infrastructure. A steady, expertly managed configuration minimizes the risk from changes and maximizes the opportunities for uptime. Placement at the front door of the data center allows NetOps to create a secure, managed perimeter that all traffic must pass through.

- DevOps teams have full control over per‑application ADCs, giving them the freedom to optimize and adapt the ADC configuration for their applications and operational processes. They can fine‑tune the parameters necessary to cache and accelerate the app, and optimize health checks, timeouts, and error handling. They can safely operate blue‑green and other deployments without fear of interrupting other, unrelated services running on the same infrastructure.

Locating ADC capabilities in two places – at the front door of the infrastructure and close to the application – allows for the specialization and control that improves the efficiency of how you deploy and operate the services your business depends on.
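
The division of ownership described above can be modeled in a few lines of Python. This is a simplified sketch under stated assumptions, not a representation of BIG‑IP or NGINX behavior: the class names `FrontDoorADC` and `AppADC`, the hostnames, and the IP addresses are hypothetical and exist only to show which team controls which setting.

```python
from dataclasses import dataclass


@dataclass
class AppADC:
    """Per-application tier, owned by one DevOps team (hypothetical model)."""
    name: str
    timeout_s: float = 5.0   # tuned per application
    active: str = "blue"     # deployment currently receiving traffic

    def switch(self) -> None:
        """Blue-green cutover; affects only this application."""
        self.active = "green" if self.active == "blue" else "blue"

    def route(self, path: str) -> str:
        return f"{self.name}:{self.active}{path}"


@dataclass
class FrontDoorADC:
    """Shared perimeter tier, owned by NetOps (hypothetical model)."""
    blocked_ips: set
    apps: dict  # hostname -> AppADC

    def handle(self, client_ip: str, host: str, path: str) -> str:
        # The perimeter policy applies to all traffic, whatever the application.
        if client_ip in self.blocked_ips:
            return "403 blocked at perimeter"
        app = self.apps.get(host)
        if app is None:
            return "404 unknown host"
        # Everything application-specific is delegated to the per-app tier.
        return app.route(path)


if __name__ == "__main__":
    shop, blog = AppADC("shop", timeout_s=2.0), AppADC("blog")
    front = FrontDoorADC(
        blocked_ips={"203.0.113.9"},
        apps={"shop.example.com": shop, "blog.example.com": blog},
    )
    shop.switch()  # the shop team cuts over; the blog is untouched
    print(front.handle("198.51.100.1", "shop.example.com", "/cart"))
    print(front.handle("198.51.100.1", "blog.example.com", "/posts"))
    print(front.handle("203.0.113.9", "shop.example.com", "/cart"))
```

In this model the front‑door configuration changes rarely and covers every hostname, while each `AppADC` can be retuned or switched between blue and green without touching the perimeter or any other application.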

Kubernetes Highlights the Need to Intelligently Locate Application Services

Enterprises are rapidly adopting Kubernetes, often as part of DevOps‑centric digital transformation initiatives. Kubernetes provides DevOps engineers with an application platform that is easily automatable, offers a consistent runtime, and is highly scalable.

Many of the application services that traditionally sit at the front door of the data center can be deployed or automated from Kubernetes. This further highlights the involvement that DevOps teams have in managing the operation of their applications in production, and it introduces more options when you consider how and where vital application services are to be deployed.

In the second article in this series, we’ll look at some well‑established practices for deploying services such as WAF for applications that are running in Kubernetes. We’ll consider the trade‑offs between different options, and the criteria that matter most to help you make the best decisions.

Want to try the NGINX Plus Ingress Controller as a load balancer in your Kubernetes environment, along with NGINX App Protect to secure your applications? Start your free 30-day trial today or contact us to discuss your use cases.

The post Deploying Application Services in Kubernetes, Part 1 appeared first on NGINX.
