Ensuring Application Availability with F5 DNS Load Balancer Cloud Service and NGINX Plus

Application downtime is a big deal, costing Fortune 1000 companies between $1.25 billion and $2.5 billion annually according to an IDC report. An outage can impact customer confidence and bring your business to a standstill.

To reduce downtime and mitigate its costs, you can start by removing points of failure from your existing infrastructure with a modern, scalable application platform like NGINX’s. You can further optimize performance and improve the availability of your applications by employing DNS load balancing.

This post focuses on how NGINX Plus and the F5 DNS Load Balancer Cloud Service work together to increase your application’s availability.

How Can DNS Minimize Downtime?

DNS is fundamental to every request made on the Internet. It makes the first – and hence most critical – decision about every request: how to route it to the right application.

By steering clients away from non‑responsive application instances, DNS load balancing, also known as global server load balancing (GSLB), eliminates the downtime your users experience when their requests go to a down server.

A modern DNS load‑balancing solution uses application health monitors and information about client locations to route requests correctly every time and optimize the end‑user experience.
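This post doesn't describe how DNS Load Balancer weighs health and location internally, so the following is only a generic sketch of the idea. It drops every endpoint whose most recent health check failed, then answers with the endpoint closest to the client. The Endpoint type, the coordinates, and the use of great‑circle distance as the measure of "closest" are illustrative assumptions, not the service's algorithm.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Endpoint:
    name: str
    lat: float      # hypothetical latitude of the endpoint's region
    lon: float      # hypothetical longitude of the endpoint's region
    healthy: bool   # result of the most recent health check


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometers."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def pick_endpoint(endpoints: list[Endpoint], client_lat: float,
                  client_lon: float) -> Optional[Endpoint]:
    """Return the closest endpoint that passed its health check, or None if none did."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda e: haversine_km(client_lat, client_lon, e.lat, e.lon))


endpoints = [
    Endpoint("us-west", 37.77, -122.42, healthy=True),
    Endpoint("us-east", 38.90, -77.04, healthy=True),
]
print(pick_endpoint(endpoints, 40.71, -74.01).name)  # us-east: closest to a New York client
endpoints[1].healthy = False
print(pick_endpoint(endpoints, 40.71, -74.01).name)  # us-west: us-east failed its check
```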

NGINX Plus and F5 DNS Load Balancer

NGINX is a lightweight, flexible, high‑performance, all-in-one software load balancer, WAF, reverse proxy, web server, content cache, and API gateway, powering more of the busiest 100,000 sites than any other web server. NGINX software is available both as open source and as NGINX Plus, an enterprise‑grade version with additional features. This post takes advantage of active health checks, which are exclusive to NGINX Plus.

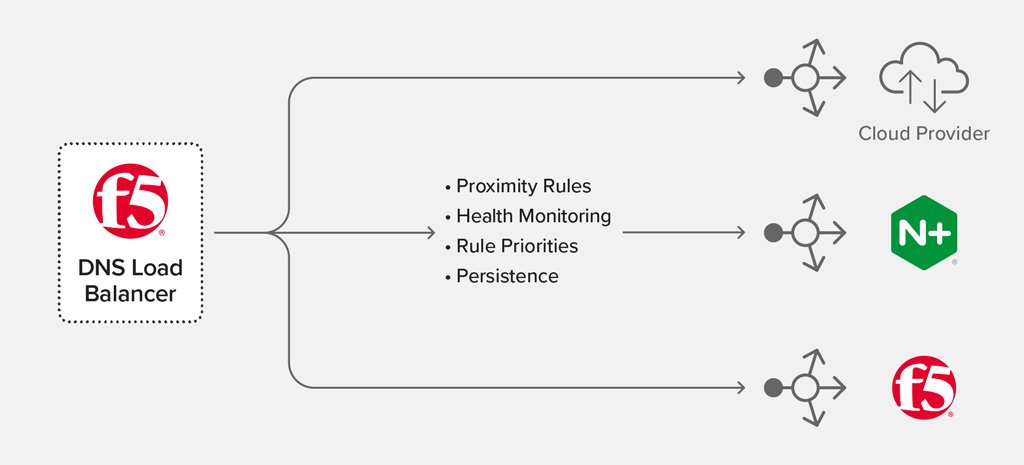

F5’s DNS Load Balancer Cloud Service is a scalable, global traffic management service for optimizing application performance and availability utilizing intelligent load‑management capabilities.

With NGINX Plus and DNS Load Balancer acting together, traffic is routed automatically around impacted servers and locations when one or more servers go down within a single region or globally. The servers are automatically added back to the load‑balanced pool when they come back up.

Application Load Balancing Across Multiple Regions and Geographies

For simplicity, in this post we use a sample topology with two NGINX Plus instances located in separate cloud regions in the United States, both serving the same application. A real‑world deployment is likely to have application instances (and the accompanying NGINX Plus reverse‑proxy instances) in multiple regions around the world.

When users request www.example.com in their browser, the primary DNS service directs the browser to request information for www.gslb.example.com. The DNS Load Balancer service is configured for global traffic management, responding to all DNS queries in the *.gslb.example.com subdomain.
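The post doesn't say how the primary DNS service hands www.example.com over to www.gslb.example.com. A CNAME record is the common way to do it and is assumed here. The toy resolver below uses hypothetical zone data, with a stub standing in for DNS Load Balancer, to show the two‑step lookup a client's resolver performs.

```python
# Hypothetical data: the primary DNS service for example.com holds a CNAME
# record pointing www at the subdomain served by DNS Load Balancer.
PRIMARY_CNAMES = {"www.example.com": "www.gslb.example.com"}
GSLB_SUFFIX = ".gslb.example.com"


def gslb_answer(name: str) -> str:
    """Stub for DNS Load Balancer; the real service picks an address per query."""
    if not name.endswith(GSLB_SUFFIX):
        raise KeyError(name)
    return "192.0.2.100"


def resolve(name: str, max_hops: int = 8) -> list[str]:
    """Follow CNAMEs until the GSLB subdomain answers; return every step."""
    chain = []
    for _ in range(max_hops):
        if name.endswith(GSLB_SUFFIX):
            chain.append(gslb_answer(name))
            return chain
        name = PRIMARY_CNAMES[name]  # KeyError if the name is unknown
        chain.append(name)
    raise RuntimeError("CNAME chain too long")


print(resolve("www.example.com"))  # ['www.gslb.example.com', '192.0.2.100']
```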

DNS Load Balancer sends advanced health monitor probes to determine application health, and based on the response directs clients to the application instances in a region only if they are available. In our sample topology, for example, if the backend application proxied by the NGINX Plus instance in the us-west region is unavailable, then DNS Load Balancer steers all requests towards the application instances hosted in us-east, and vice versa.

Configuring Load Balancing Records

To configure DNS Load Balancer to route traffic to your application, you define an intelligent DNS record called a load balancing record (LBR). In the LBR, you specify the hosts (IP endpoints) to route traffic among, and the rules for selecting the best response for each end‑user request.

When a client request comes in, DNS Load Balancer matches it against the list of hosts in the LBR. Further, it can determine the geographic location of the requesting client and use priority scoring to direct the client to the closest application instances.
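The objects in an LBR refer to one another by name. A proximity rule names a pool, and a pool member names a virtual server and a monitor, so a single typo leaves a dangling reference. The hypothetical helper below is one way to catch that locally before submitting a configuration. It is not part of any F5 tool, and it assumes the field names used in the example configuration in this post.

```python
import json


def find_dangling_refs(config: dict) -> list[str]:
    """Report names referenced in an LBR-style config that are never defined."""
    pools = config.get("pools", {})
    servers = config.get("virtual_servers", {})
    monitors = config.get("monitors", {})
    problems = []

    for rec_name, rec in config.get("load_balanced_records", {}).items():
        for rule in rec.get("proximity_rules", []):
            if rule["pool"] not in pools:
                problems.append(f"record {rec_name}: unknown pool {rule['pool']}")

    for pool_name, pool in pools.items():
        for member in pool.get("members", []):
            if member["virtual_server"] not in servers:
                problems.append(f"pool {pool_name}: unknown virtual server {member['virtual_server']}")
            if "monitor" in member and member["monitor"] not in monitors:
                problems.append(f"pool {pool_name}: unknown monitor {member['monitor']}")

    for vs_name, vs in servers.items():
        if "monitor" in vs and vs["monitor"] not in monitors:
            problems.append(f"virtual server {vs_name}: unknown monitor {vs['monitor']}")

    return problems


# Trimmed example with a deliberate typo ("ngnix") in the second pool member
config = json.loads("""
{
  "load_balanced_records": {"WWW": {"proximity_rules": [{"pool": "nginx_us_pool"}]}},
  "pools": {"nginx_us_pool": {"members": [
    {"virtual_server": "us_west_1_nginx", "monitor": "http_adv_monitor"},
    {"virtual_server": "us_east_1_ngnix", "monitor": "http_adv_monitor"}
  ]}},
  "virtual_servers": {
    "us_west_1_nginx": {"monitor": "http_adv_monitor"},
    "us_east_1_nginx": {"monitor": "http_adv_monitor"}
  },
  "monitors": {"http_adv_monitor": {}}
}
""")
print(find_dangling_refs(config))
# ['pool nginx_us_pool: unknown virtual server us_east_1_ngnix']
```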

The following example uses DNS Load Balancer’s declarative API to define an LBR containing these configuration objects:

- IP endpoints (under virtual_servers) – The IP addresses and related information for the hosts advertised by DNS Load Balancer to clients of your application. In our example, the two IP endpoints are the NGINX Plus instances in the us-east and us-west regions.
- Pools (under pools) – Logical groupings of IP endpoints and the load‑balancing algorithm used to distribute traffic among them. In our example, nginx_us_pool defines round‑robin load balancing between the two NGINX Plus instances. When adapting the configuration to your deployment, just change the address fields in the blocks under virtual_servers to the IP addresses of your NGINX Plus instances. A real‑world topology probably has multiple pools, each grouping together IP endpoints located in a different geographic area.
- Health monitors (under monitors) – An advanced HTTP check to determine if the hosts serving your application are available. In our example, the monitor checks the status of the NGINX Plus instances. It interprets an HTTP response code from NGINX Plus in the 200–499 range to mean that the proxied application is available. A 5xx response indicates that the application is unavailable; DNS Load Balancer marks that region as down and responds to client requests with the address of the IP endpoint in the other region. The receive value of the monitor supports any valid regular expression string, which allows you to confirm whether dynamically generated content is present in the HTTP response.
- Load balancing record (under load_balanced_records) – A DNS record that determines how traffic is routed to your pools. In our example, we define a DNS A record for www.
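The health‑monitor item's description of the receive pattern HTTP/1.. (2|3|4).{2} can be checked offline. The sketch below applies the pattern with Python's re.search. It assumes the monitor looks for the pattern anywhere in the response, as re.search does; F5's regular‑expression engine may differ in edge cases.

```python
import re

# receive pattern from the example monitor: "HTTP/1", any two characters
# (such as ".1"), a space, then a status code starting with 2, 3, or 4
RECEIVE = re.compile(r"HTTP/1.. (2|3|4).{2}")


def monitor_marks_up(response: str) -> bool:
    """True if the response matches the receive pattern anywhere."""
    return RECEIVE.search(response) is not None


for status_line in ("HTTP/1.1 200 OK", "HTTP/1.1 404 Not Found",
                    "HTTP/1.1 502 Bad Gateway"):
    print(status_line, "->", "up" if monitor_marks_up(status_line) else "down")
# HTTP/1.1 200 OK -> up
# HTTP/1.1 404 Not Found -> up
# HTTP/1.1 502 Bad Gateway -> down
```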

```json
{
  "zone": "gslb.example.com",
  "load_balanced_records": {
    "WWW": {
      "aliases": [
        "www"
      ],
      "rr_type": "A",
      "display_name": "www",
      "enable": true,
      "persistence": false,
      "proximity_rules": [
        {
          "region": "global",
          "pool": "nginx_us_pool",
          "score": 1
        }
      ]
    }
  },
  "pools": {
    "nginx_us_pool": {
      "display_name": "NGINX US Pool",
      "enable": true,
      "remark": "",
      "rr_type": "A",
      "ttl": 60,
      "load_balancing_mode": "round-robin",
      "max_answers": 1,
      "members": [
        {
          "virtual_server": "us_west_1_nginx",
          "monitor": "http_adv_monitor"
        },
        {
          "virtual_server": "us_east_1_nginx",
          "monitor": "http_adv_monitor"
        }
      ]
    }
  },
  "virtual_servers": {
    "us_west_1_nginx": {
      "virtual_server_type": "cloud",
      "display_name": "US West NGINX Instance 1",
      "port": 80,
      "address": "192.0.2.100",
      "monitor": "http_adv_monitor"
    },
    "us_east_1_nginx": {
      "virtual_server_type": "cloud",
      "display_name": "US East NGINX Instance 1",
      "port": 80,
      "address": "198.51.100.50",
      "monitor": "http_adv_monitor"
    }
  },
  "monitors": {
    "http_adv_monitor": {
      "display_name": "HTTP Monitor",
      "monitor_type": "https_advanced",
      "target_port": 443,
      "receive": "HTTP/1.. (2|3|4).{2}",
      "send": "GET / HTTP/1.0"
    }
  }
}
```

Configuring NGINX Plus Active Health Checks

Next, we configure each NGINX Plus instance to periodically check the health of the application servers in the backend upstream group in its region. If all servers fail the health check, NGINX Plus automatically returns 502 (Bad Gateway) and a custom error page, error_fail.html. As noted just above, DNS Load Balancer interprets the 502 code to mean the backend application is unavailable in that region.

Note that the match block assumes that the backend application responds to health‑check requests for /healthcheck with status 200 and the indicated response body.
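The decision made by the match block and the health check can be modeled in a few lines of Python. This is a simplification, not how NGINX Plus is implemented. It judges each server by its latest probe only, which corresponds to the health_check defaults fails=1 and passes=1, and it ignores passive health checks. The probe results are hypothetical.

```python
import re
from dataclasses import dataclass

# Same pattern as the "match server_ok" body check, with braces escaped
HEALTHY_BODY = re.compile(r'\{"HealthCheck":"OK"\}')


@dataclass
class Probe:
    status: int  # HTTP status returned for /healthcheck
    body: str    # response body returned for /healthcheck


def passes_server_ok(probe: Probe) -> bool:
    """Both conditions in the match block must hold: status 200 and a matching body."""
    return probe.status == 200 and HEALTHY_BODY.search(probe.body) is not None


def healthy_servers(latest: dict[str, Probe]) -> list[str]:
    """Servers that stay in rotation, judged by their most recent probe."""
    return sorted(name for name, probe in latest.items() if passes_server_ok(probe))


def client_gets_502(latest: dict[str, Probe]) -> bool:
    """With no healthy server left, NGINX Plus answers client requests with 502."""
    return not healthy_servers(latest)


latest = {
    "backend1.example.com": Probe(200, '{"HealthCheck":"OK"}'),
    "backend2.example.com": Probe(503, ""),
    "backend3.example.com": Probe(200, '{"HealthCheck":"DEGRADED"}'),
}
print(healthy_servers(latest))   # ['backend1.example.com']
print(client_gets_502(latest))   # False
```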

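```nginx
upstream backend {
    zone backend 64k;

    server backend1.example.com;
    server backend2.example.com;
    server backend3.example.com;
}

# Match successful backend app health checks
match server_ok {
    status 200;
    body ~ '{"HealthCheck":"OK"}';
}

server {
    listen 80;
    status_zone backend;

    # Serve the custom error page for errors NGINX Plus generates itself, such
    # as 502 when no upstream server is available. Without "=" the original
    # status code is kept, so the DNS Load Balancer monitor still sees the 502.
    error_page 500 502 503 504 /error_fail.html;

    location / {
        proxy_pass http://backend;
    }

    location = /error_fail.html {
        root /usr/share/nginx/html;  # assumed directory containing error_fail.html
        internal;
    }

    # Active health checks: probe /healthcheck on each server every 30 seconds
    location @healthcheck {
        internal;
        proxy_pass http://backend;
        health_check uri=/healthcheck match=server_ok interval=30s;
    }
}
```

Testing the Configurations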

With the DNS Load Balancer and NGINX Plus configurations in place, you can use a tool like curl or HTTPie (as here) to test your application:

```
$ http --headers https://www.example.com
HTTP/1.1 200 OK
...
```

To determine where DNS Load Balancer is directing clients, you can use a DNS troubleshooting tool like dig. Output like the following – expected in normal circumstances – indicates that DNS Load Balancer is alternately returning the addresses of the two NGINX Plus instances (192.0.2.100 is US West NGINX Instance 1 and 198.51.100.50 is US East NGINX Instance 1).

```
$ dig +short www.example.com
192.0.2.100
$ dig +short www.example.com
198.51.100.50
$ dig +short www.example.com
192.0.2.100
...
```

Handling Application Failures

As previously mentioned, application outages are a significant drag on customer confidence. Even if your application has the world’s best error page, why send your customers to an application instance that is experiencing an outage?

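Here we’ve configured our app deployment to prevent such a situation. When NGINX Plus returns a 502 error to the health monitor, DNS Load Balancer answers all new DNS queries with the address of the other, available region. Clients whose resolvers cached the earlier answer keep using it until its TTL (60 seconds in our pool configuration) expires.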
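Both sets of dig output in this post are consistent with a simple model of nginx_us_pool. The model rotates round‑robin through the members, returns one address per query (max_answers is 1), and skips any member whose monitor currently reports it down. How DNS Load Balancer implements this internally isn't described here, so the class below is a hypothetical illustration.

```python
from itertools import count
from typing import Optional


class RoundRobinPool:
    """Toy pool: round-robin over members that are up, one answer per query."""

    def __init__(self, members: dict[str, str]):
        self.members = members                       # virtual server name -> address
        self.up = {name: True for name in members}   # latest monitor verdict
        self._queries = count()

    def answer(self) -> Optional[str]:
        available = [name for name in self.members if self.up[name]]
        if not available:
            return None                              # no healthy member to return
        return self.members[available[next(self._queries) % len(available)]]


pool = RoundRobinPool({"us_west_1_nginx": "192.0.2.100",
                       "us_east_1_nginx": "198.51.100.50"})
print([pool.answer() for _ in range(3)])   # alternates between both regions
pool.up["us_east_1_nginx"] = False         # monitor sees a 5xx from us-east
print([pool.answer() for _ in range(3)])   # only 192.0.2.100
pool.up["us_east_1_nginx"] = True          # us-east recovers and rejoins
print([pool.answer() for _ in range(3)])   # both regions again
```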

With the application in the us-east region down, the dig tool now shows that DNS Load Balancer is returning only the address of US West NGINX Instance 1 (192.0.2.100):

```
$ dig +short www.example.com
192.0.2.100
$ dig +short www.example.com
192.0.2.100
$ dig +short www.example.com
192.0.2.100
...
```

As soon as at least one of the backend servers in the unavailable region comes back online and passes its health checks, DNS Load Balancer automatically directs traffic to that region once again.
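"As soon as" is not instantaneous. A rough upper bound adds up the delays in the chain. These are NGINX Plus's health‑check interval times the number of passes it needs, the DNS Load Balancer monitor's probe interval, and the DNS TTL during which resolvers may keep serving the old answer. The interval=30s and 60‑second TTL come from the configurations in this post, and passes=1 is the NGINX Plus default. The DNS Load Balancer monitor interval isn't given here, so the 30 seconds used below is an assumption.

```python
def recovery_upper_bound_s(nginx_interval_s: float, nginx_passes: int,
                           dns_monitor_interval_s: float, dns_ttl_s: float) -> float:
    """Rough worst case, in seconds, before clients are sent back to a recovered region.

    Ignores probe timeouts, any consecutive-success requirement on the DNS side,
    and resolvers that don't honor the TTL.
    """
    nginx_detect = nginx_interval_s * nginx_passes   # NGINX Plus marks a server healthy again
    dns_detect = dns_monitor_interval_s              # DNS Load Balancer monitor sees a non-5xx reply
    cache_expiry = dns_ttl_s                         # resolvers drop the cached single-region answer
    return nginx_detect + dns_detect + cache_expiry


# 30 s interval and 60 s TTL from this post's configs; passes=1 is the NGINX Plus
# default; the 30 s DNS monitor interval is an ASSUMPTION.
print(recovery_upper_bound_s(30, 1, 30, 60))  # 120
```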

Summary

We’ve shown how simple it is to scale your application to multiple regions and use DNS Load Balancer as a modern global traffic management solution to provide increased availability to your customers.

Take advantage of our DNS Load Balancer free tier offering to see for yourself how NGINX software and F5 SaaS solutions can boost the performance and manageability of your applications.

You can also request access to our upcoming NGINX Plus Integration Early Access Preview for DNS Load Balancer. This integration enables you to configure your NGINX Plus instances to share their configurations and performance information with DNS Load Balancer for optimization of global application traffic management.

The post Ensuring Application Availability with F5 DNS Load Balancer Cloud Service and NGINX Plus appeared first on NGINX.
