Introducing NGINX Service Mesh

We are pleased to introduce a development release of NGINX Service Mesh (NSM), a fully integrated lightweight service mesh that leverages a data plane powered by NGINX Plus to manage container traffic in Kubernetes environments. NSM is available for free download. We hope you will try it out in your development and test environments, and look forward to your feedback on GitHub.

Adopting microservices methodologies comes with challenges as deployments scale and become more complex. Communication between the services is intricate, debugging problems can be harder, and more services imply more resources to manage.

NSM addresses these challenges by enabling you to centrally provision:

- Security – Security is more critical now than ever. Data breaches can cost organizations millions of dollars every year in lost revenue and reputation. NSM encrypts all communication between services with mTLS, so sensitive data is not sent over the wire in plaintext for attackers to steal. Access controls let you define policies about which services can talk to each other.

- Traffic management – When deploying a new version of an application, you might want to limit the amount of traffic it receives at first, in case there is a bug. With NSM's intelligent container traffic management, you can specify policies that limit traffic to new services and gradually increase it over time. Features like rate limiting and circuit breakers give you fine‑grained control over the traffic flowing through your services.

- Visualization – Managing thousands of services can be a debugging and visibility nightmare. NSM helps to rein that in with a built‑in Grafana dashboard displaying the full suite of metrics available in NGINX Plus. In addition, the OpenTracing integration enables fine‑grained transaction tracing.

- Hybrid deployments – If your enterprise is like most, not all of your infrastructure runs in Kubernetes. NSM ensures legacy applications are not left out. With the NGINX Kubernetes Ingress Controller integration, legacy services can communicate with mesh services and vice versa.

NSM secures applications in a zero‑trust environment by seamlessly applying encryption and authentication to container traffic. It delivers observability and insights into transactions to help organizations deploy applications and troubleshoot problems quickly and accurately. It also provides fine‑grained traffic control, enabling DevOps teams to deploy and optimize application components while empowering Dev teams to build and easily connect their distributed applications.

What Is NGINX Service Mesh?

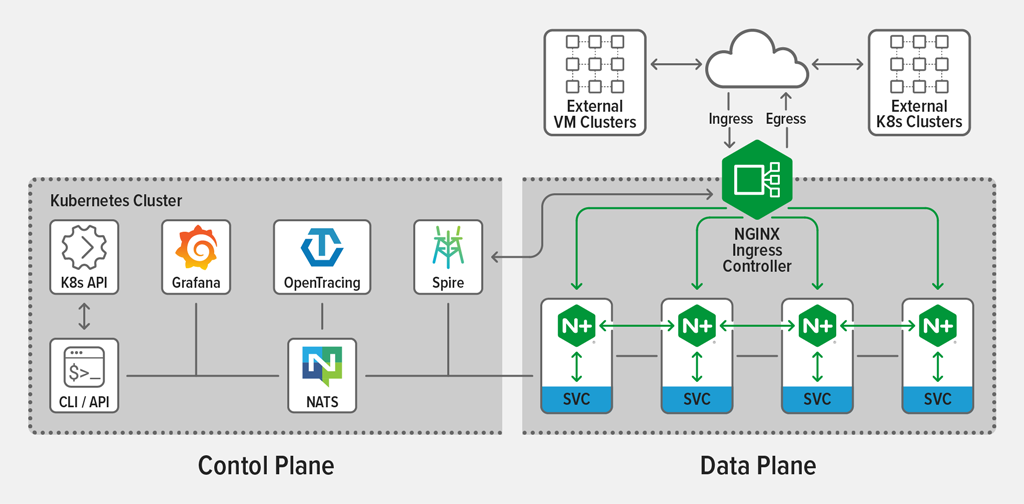

NSM consists of a unified data plane for east‑west (service-to-service) traffic and a native integration of the NGINX Plus Ingress Controller for north‑south traffic, managed by a single control plane.

The control plane is designed and optimized for the NGINX Plus data plane and defines the traffic management rules that are distributed to the NGINX Plus sidecars.

With NSM, sidecar proxies are deployed alongside every service in the mesh. NSM integrates with the following open source solutions:

- Grafana – Visualization of Prometheus metrics; the built‑in NSM dashboard helps you get started

- Kubernetes Ingress controllers – Management of ingress and egress traffic for the mesh

- SPIRE – Certificate Authority for managing, distributing, and rotating certificates for the mesh

- NATS – Scalable messaging plane for delivering messages, such as route updates, from the control plane to the sidecars

- OpenTracing – Distributed tracing (both Zipkin and Jaeger are supported)

- Prometheus – Collection and storage of metrics from the NGINX Plus sidecar, such as the number of requests, connections, and SSL handshakes

Features and Components

NGINX Plus as the data plane spans the sidecar proxy (east‑west traffic) and the Ingress controller (north‑south traffic) while intercepting and managing container traffic between services. Features include:

- Mutual TLS (mTLS) authentication

- Load balancing

- High availability

- Rate limiting

- Circuit breaking

- Blue‑green and canary deployments

- Access controls

Getting Started with NGINX Service Mesh

To get started with NSM, you first need:

- Access to a Kubernetes environment. NGINX Service Mesh is supported on several Kubernetes platforms, including Amazon Elastic Container Service for Kubernetes (EKS), the Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), VMware vSphere, and stand‑alone bare‑metal clusters.

- The `kubectl` command line utility installed on the machine where you are installing NSM.

- Access to the NGINX Service Mesh release package. The package includes NSM images that need to be pushed to a private container registry accessible by the Kubernetes cluster. The package also includes the `nginx-meshctl` binary used to deploy NSM.
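Before deploying, the release-package images must be available in a registry that the cluster can pull from. The following is a minimal sketch of that step, not a procedure taken from the NSM documentation. It assumes two things you should check against your package: the four images named in the deploy command below (nginx-mesh-api, nginx-mesh-sidecar, nginx-mesh-init, nginx-mesh-metrics) are already loaded locally as `<component>:<version>`, and you have already run `docker login` against your registry. If the package ships the images as tar archives, `docker load -i <archive>` is the usual way to load them first.

```shell
#!/usr/bin/env bash
# Sketch: tag and push the NSM images to a private registry.
# Assumptions (hypothetical, check against your release package):
#   - each image is already loaded locally as <component>:<version>
#   - you are logged in to the target registry (docker login)
NSM_COMPONENTS="nginx-mesh-api nginx-mesh-sidecar nginx-mesh-init nginx-mesh-metrics"

# Print the image reference the cluster will pull for each component.
nsm_image_refs() {
  local registry="$1" version="$2"
  for component in $NSM_COMPONENTS; do
    printf '%s/%s:%s\n' "$registry" "$component" "$version"
  done
}

# Retag each local image with the registry prefix and push it,
# stopping at the first failure.
push_nsm_images() {
  local registry="$1" version="$2"
  for component in $NSM_COMPONENTS; do
    docker tag "${component}:${version}" "${registry}/${component}:${version}" || return 1
    docker push "${registry}/${component}:${version}" || return 1
  done
}
```

For example, `push_nsm_images registry.example.com 0.6.0` pushes the images to a hypothetical registry, and `nsm_image_refs registry.example.com 0.6.0` prints the four references that the `--nginx-mesh-*-image` options of `nginx-meshctl deploy` expect.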

To deploy NSM with default settings, run the following command, replacing `your-Docker-registry` with the address of the private registry that holds the NSM images. As deployment proceeds, the output confirms that each mesh component was deployed and, finally, that NSM is running in its own namespace:

```
$ DOCKER_REGISTRY=your-Docker-registry ; MESH_VER=0.6.0 ; \
  ./nginx-meshctl deploy \
    --nginx-mesh-api-image "${DOCKER_REGISTRY}/nginx-mesh-api:${MESH_VER}" \
    --nginx-mesh-sidecar-image "${DOCKER_REGISTRY}/nginx-mesh-sidecar:${MESH_VER}" \
    --nginx-mesh-init-image "${DOCKER_REGISTRY}/nginx-mesh-init:${MESH_VER}" \
    --nginx-mesh-metrics-image "${DOCKER_REGISTRY}/nginx-mesh-metrics:${MESH_VER}"

Created namespace "nginx-mesh".
Created SpiffeID CRD.
Waiting for Spire pods to be running...done.
Deployed Spire.
Deployed NATS server.
Created traffic policy CRDs.
Deployed Mesh API.
Deployed Metrics API Server.
Deployed Prometheus Server nginx-mesh/prometheus-server.
Deployed Grafana nginx-mesh/grafana.
Deployed tracing server nginx-mesh/zipkin.
All resources created. Testing the connection to the Service Mesh API Server...
Connected to the NGINX Service Mesh API successfully.
NGINX Service Mesh is running.
```

For additional command options, including nondefault settings, run:

```
$ nginx-meshctl deploy -h
```

To verify that the NSM control plane is running properly in the nginx-mesh namespace, run:

```
$ kubectl get pods -n nginx-mesh
NAME                                 READY   STATUS    RESTARTS   AGE
grafana-6cc6958cd9-dccj6             1/1     Running   0          2d19h
mesh-api-6b95576c46-8npkb            1/1     Running   0          2d19h
nats-server-6d5c57f894-225qn         1/1     Running   0          2d19h
prometheus-server-65c95b788b-zkt95   1/1     Running   0          2d19h
smi-metrics-5986dfb8d5-q6gfj         1/1     Running   0          2d19h
spire-agent-5cf87                    1/1     Running   0          2d19h
spire-agent-rr2tt                    1/1     Running   0          2d19h
spire-agent-vwjbv                    1/1     Running   0          2d19h
spire-server-0                       2/2     Running   0          2d19h
zipkin-6f7cbf5467-ns6wc              1/1     Running   0          2d19h
```

By default, NGINX Plus sidecar proxies are automatically injected into deployed applications; deployment options let you choose between automatic and manual injection. To learn how to disable automatic injection, see our documentation.
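The walkthrough applies a `sleep.yaml` manifest whose contents aren't shown in this post. If you don't have a copy, the following sketch writes a hypothetical minimal stand-in. It defines a one-replica Deployment named `sleep` in the default namespace whose only container idles, which is enough to see an injected sidecar appear next to it. The image (`busybox:1.36`) and the idle loop are assumptions, not part of the NSM release.

```shell
#!/usr/bin/env bash
# Sketch: write a hypothetical minimal sleep.yaml (not the file used in this post).
# The container just loops on `sleep`, so the Pod stays Running indefinitely.
cat > sleep.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sleep
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sleep
  template:
    metadata:
      labels:
        app: sleep
    spec:
      containers:
      - name: sleep
        image: busybox:1.36  # assumption: any small image with sh and sleep works
        command: ["sh", "-c", "while true; do sleep 3600; done"]
EOF
```

With automatic injection enabled, the resulting Pod should report two containers: this one and the NGINX Plus sidecar.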

To see automatic sidecar injection in action, we deploy the sleep application in the default namespace and then examine the Pod. Two containers are running, the sleep application and the associated NGINX Plus sidecar, as the 2/2 in the READY column shows:

```
$ kubectl apply -f sleep.yaml
$ kubectl get pods -n default
NAME                     READY   STATUS    RESTARTS   AGE
sleep-674f75ff4d-gxjf2   2/2     Running   0          5h23m
```

You can also monitor the sleep application with the native NGINX Plus dashboard. Run this command to expose the sidecar to your local machine:

```
$ kubectl port-forward sleep-674f75ff4d-gxjf2 8080:8886
```

Then navigate to http://localhost:8080/dashboard.html in your browser. You can also connect to the Prometheus server to monitor the sleep application.
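In both `kubectl get pods` listings in this walkthrough, a pod is healthy when its READY column shows all containers ready (1/1 for most control-plane pods, 2/2 for spire-server and the sidecar-injected sleep pod) and its STATUS is Running. The following is a minimal sketch for scripting that check. It assumes the default table output (NAME READY STATUS RESTARTS AGE) and is a helper of our own, not part of `nginx-meshctl`.

```shell
#!/usr/bin/env bash
# Sketch: read a default `kubectl get pods` table on stdin, print the name of
# every pod whose containers are not all ready or whose STATUS is not Running,
# and return non-zero if there is at least one such pod.
check_pods_ready() {
  awk '
    NR == 1 { next }                   # skip the header row
    NF >= 3 {
      split($2, n, "/")                # READY column, e.g. "1/1" or "2/2"
      if (n[1] != n[2] || $3 != "Running") {
        print $1                       # name of the pod that is not ready
        failed = 1
      }
    }
    END { exit (failed ? 1 : 0) }
  '
}
```

Usage: `kubectl get pods -n nginx-mesh | check_pods_ready && echo "control plane ready"`. Pods that finish normally, such as completed Jobs, would be reported too, so the check suits long-running components like the ones listed here. If you only need to block until pods are ready, `kubectl wait --for=condition=Ready pods --all -n nginx-mesh --timeout=120s` does that without any parsing.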

You can use custom resources in Kubernetes to configure traffic policies like access control, rate limiting, and circuit breaking. For more information, see the documentation.
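The deployment output reports "Created traffic policy CRDs.", so one way to find the resource types you can configure is to list the CRDs installed in the cluster. The following is a minimal sketch. The `smi-metrics` pod in the control-plane listing suggests that NSM builds on the Service Mesh Interface (SMI), so the filter matches `smi` by default. That pattern is an assumption; pass your own if it matches nothing.

```shell
#!/usr/bin/env bash
# Sketch: filter CRD names read on stdin by a case-insensitive pattern.
# Assumption: the mesh's traffic-policy CRDs contain "smi" in their API group;
# pass a different pattern as $1 if that guess does not hold for your version.
filter_crds() {
  grep -i -- "${1:-smi}"
}

# List the names of all installed CRDs (one per line) and filter them.
list_mesh_crds() {
  kubectl get crd --no-headers -o custom-columns=NAME:.metadata.name | filter_crds "$1"
}
```

After `list_mesh_crds` shows the relevant CRDs, `kubectl api-resources | grep -i smi` gives their kind names. If a CRD publishes a schema, `kubectl explain <kind>` then prints the fields that a policy of that kind accepts.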

Summary

NGINX Service Mesh is freely available for download at the F5 portal. Please try it out in your development and test environments, and submit your feedback on GitHub.

To try out the NGINX Plus Ingress Controller, start your free 30-day trial today or contact us to discuss your use cases.

The post Introducing NGINX Service Mesh appeared first on NGINX.

Source: Introducing NGINX Service Mesh