NGINX Load Balancing Deployment Scenarios

We are increasingly seeing NGINX Plus used as a load balancer or application delivery controller (ADC) in a number of deployment scenarios, and this post describes some of the most common ones. We discuss why customers choose each scenario, what to consider when using it, and some likely migration paths from one scenario to another. If you’re ready to get started or have any questions about how NGINX Plus can best meet your needs, contact us and speak with a Technical Solutions Architect.

Introduction

Many companies are looking to move away from hardware load balancers and to NGINX Plus to deliver their applications. There can be many drivers for this decision: to give the application teams more control of application load balancing; to move to virtualization or the cloud, where it is not possible to use hardware appliances; to employ DevOps tools, which can’t be used with hardware load balancers; to move to an elastic, scaled‑out infrastructure, not feasible with hardware appliances; and more.

If you are deploying a load balancer for the first time, NGINX Plus might be the ideal platform for your application. If you are adding NGINX Plus to an existing environment that already has hardware load balancers in place, you can deploy NGINX Plus in parallel or in series.

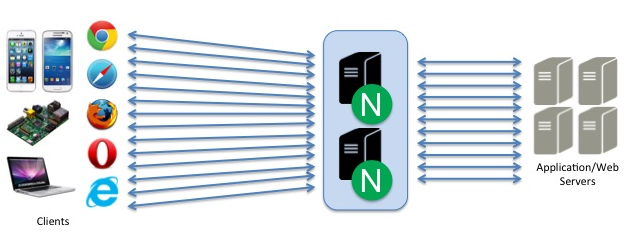

Scenario 1: NGINX Plus Does All Load Balancing

The simplest deployment scenario is where NGINX Plus handles all the load balancing duties. NGINX Plus might be the first load balancer in the environment, or it might be replacing a legacy hardware‑based load balancer. Clients connect directly to NGINX Plus, which then acts as a reverse proxy, load balancing requests to pools of backend servers.
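As a rough illustration of this reverse proxy arrangement, the following minimal sketch defines one pool of backend servers and proxies client requests to it. The pool name `app_backend`, the hostname, and the server addresses are placeholders invented for the example, not values from any real deployment.

```nginx
# Goes inside the http {} context of nginx.conf.

# Hypothetical pool of backend servers (placeholder addresses).
upstream app_backend {
    least_conn;                     # optional; the default method is round robin
    server 10.0.0.11:8080;
    server 10.0.0.12:8080;
    server 10.0.0.13:8080 backup;   # used only when the other servers are unavailable
}

server {
    listen 80;
    server_name app.example.com;    # placeholder hostname

    location / {
        # Load balance requests across the pool defined above.
        proxy_pass http://app_backend;

        # Pass the original host and client address to the backends.
        proxy_set_header Host $host;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```

Additional `upstream` blocks and `location` or `server` blocks can be added in the same way when there is more than one pool of backend servers.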

This scenario has the benefit of simplicity with just one platform to manage, and can be the end result of a migration process that starts with one of the other deployment scenarios discussed below.

If SSL/TLS is being used, NGINX Plus can offload SSL/TLS processing from the backend servers. This not only frees up resources on the backend servers, but centralizing SSL/TLS processing also increases SSL/TLS session reuse. Creating SSL/TLS sessions is the most CPU‑intensive part of SSL/TLS processing, so increasing session reuse can have a major positive impact on performance.
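A minimal sketch of SSL/TLS offload with a shared session cache might look like the following. The certificate paths, hostname, backend address, and the cache size and timeout are illustrative assumptions and should be adjusted for a real deployment.

```nginx
# Goes inside the http {} context of nginx.conf.

server {
    listen 443 ssl;
    server_name app.example.com;                 # placeholder hostname

    # Placeholder certificate and key locations.
    ssl_certificate     /etc/nginx/ssl/app.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/app.example.com.key;

    # Session cache shared by all worker processes, so returning
    # clients can resume a session instead of doing a full handshake.
    ssl_session_cache   shared:SSL:10m;
    ssl_session_timeout 10m;

    location / {
        # Traffic to the backend is plain HTTP; TLS ends at NGINX Plus.
        proxy_pass http://10.0.0.11:8080;        # placeholder backend address
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```

The `X-Forwarded-Proto` header is included so that backends can tell the original request arrived over HTTPS; whether an application actually reads it depends on the application.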

Both NGINX and NGINX Plus can be used as a cache for static and dynamic content, and NGINX Plus adds the ability to purge items from the cache, especially useful for dynamic content.
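The sketch below shows one way caching and cache purging could be configured. The cache path, zone name `app_cache`, sizes, validity period, and backend address are assumptions made for the example. The `proxy_cache_purge` directive is available only in NGINX Plus; the rest works in NGINX Open Source as well.

```nginx
# Goes inside the http {} context of nginx.conf.

# On-disk cache with a 10 MB shared memory zone for keys (illustrative sizes).
proxy_cache_path /var/cache/nginx keys_zone=app_cache:10m max_size=1g inactive=60m;

# Set $purge_method to 1 only for requests that use the PURGE method.
map $request_method $purge_method {
    PURGE   1;
    default 0;
}

server {
    listen 80;

    location / {
        proxy_pass http://10.0.0.11:8080;       # placeholder backend address
        proxy_cache app_cache;
        proxy_cache_valid 200 10m;              # cache successful responses for 10 minutes

        # NGINX Plus only: a PURGE request removes the matching cache entry.
        proxy_cache_purge $purge_method;

        # Expose HIT/MISS/EXPIRED and similar states for troubleshooting.
        add_header X-Cache-Status $upstream_cache_status;
    }
}
```

In practice, purge requests would normally be restricted to trusted client addresses; that restriction is left out here to keep the example short.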

NGINX Plus offers additional ADC functions, such as application health checks, session persistence, response rate limiting, bandwidth limiting, connection limiting, and more.
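Several of these functions can be combined in a single configuration. The following is a sketch under assumed values: the zone names, limits, cookie name `srv_id`, and server addresses are all hypothetical. The `health_check` and `sticky` directives require NGINX Plus, while the `limit_*` directives are also available in NGINX Open Source.

```nginx
# Goes inside the http {} context of nginx.conf.

# Shared memory zones that track request rate and connections per client IP.
limit_req_zone  $binary_remote_addr zone=req_per_ip:10m rate=10r/s;
limit_conn_zone $binary_remote_addr zone=conn_per_ip:10m;

upstream app_backend {
    zone app_backend 64k;            # shared memory zone, required for active health checks
    server 10.0.0.11:8080;           # placeholder addresses
    server 10.0.0.12:8080;

    # NGINX Plus session persistence: pin a client to a server with a cookie.
    sticky cookie srv_id expires=1h path=/;
}

server {
    listen 80;

    location / {
        proxy_pass http://app_backend;

        # NGINX Plus active health check: probe every 5 seconds, mark a server
        # down after 3 failures and up again after 2 successes.
        health_check interval=5s fails=3 passes=2;

        limit_req  zone=req_per_ip burst=20 nodelay;   # request rate limiting
        limit_conn conn_per_ip 20;                     # connection limiting
        limit_rate 500k;                               # bandwidth limit, bytes per second per connection
    }
}
```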

Supporting high availability (HA) in this scenario requires clustering of the NGINX Plus instances. [Editor – the HA solution originally discussed here has been deprecated. For details about the HA solution introduced in NGINX Plus Release 6, see High Availability Support for NGINX Plus in the NGINX Plus Admin Guide.]

Scenario 2: NGINX Plus Works in Parallel with a Legacy Hardware‑Based Load Balancer

In this scenario, NGINX Plus is introduced to load balance new applications in an environment where a legacy hardware appliance continues to load balance existing applications.

This scenario can be applied in a data center where both the hardware load balancers and NGINX Plus reside, or the hardware load balancers might be in a legacy data center while NGINX Plus is deployed in a new data center or a cloud.

NGINX Plus and the hardware‑based load balancer are not connected in any way, so from an NGINX Plus perspective, this scenario is very similar to the first one, where NGINX Plus is the only load balancer.

Clients connect directly to NGINX Plus, which can offload SSL/TLS termination, cache static and dynamic content, and perform other advanced ADC functions.

If high availability is important, the same solution as in the previous scenario can be used.

The usual reason for deploying NGINX Plus in this way is that a company wants to move to a more modern software‑based platform but does not want to rip and replace all of its legacy hardware load balancers. By putting all new applications behind NGINX Plus, an enterprise can start to implement a software‑based platform and then over time migrate the legacy applications from the hardware load balancer to NGINX Plus.

Scenario 3: NGINX Plus Sits Behind a Legacy Hardware‑Based Load Balancer

As in the previous scenario, NGINX Plus is added to an environment with a legacy hardware‑based load balancer, but here it sits behind the legacy load balancer. Clients connect to the hardware‑based load balancer, which accepts their requests and load balances them to a pool of NGINX Plus instances. The NGINX Plus instances in turn load balance the requests across the group of actual backend servers. In this scenario NGINX Plus performs all Layer‑7 application load balancing and caching.

Because the NGINX Plus instances are being load balanced by the hardware load balancer, HA can be achieved by having the hardware load balancer do health checks on the NGINX Plus instances and stop sending traffic to instances that are down.
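On the NGINX Plus side, this arrangement could be supported by a configuration along the following lines. It is only a sketch: the `/lb-health` path, the load balancer address `192.0.2.10`, and the backend address are invented for the example, and it assumes the hardware load balancer adds an `X-Forwarded-For` header and that the realip module is present in the build.

```nginx
# Goes inside the http {} context of nginx.conf.

server {
    listen 80;

    # Trust X-Forwarded-For only when the request comes from the
    # hardware load balancer (placeholder address).
    set_real_ip_from 192.0.2.10;
    real_ip_header   X-Forwarded-For;

    # Lightweight endpoint that the hardware load balancer's health
    # monitor can poll; the path is hypothetical.
    location = /lb-health {
        access_log off;
        return 200 "OK";
    }

    location / {
        proxy_pass http://10.0.0.11:8080;   # placeholder backend address
        proxy_set_header Host $host;
    }
}
```

A health endpoint like this confirms only that the NGINX Plus instance itself is answering requests, not that the backend servers behind it are healthy.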

The Problem with Large Multi‑Tenant Hardware Load Balancers

There can be multiple reasons for deploying NGINX Plus in this way. One is because of corporate structure. In a multi‑tenant environment where many internal application teams share a device or set of devices, the hardware load balancers are often owned and managed by the network team. The application teams probably would like access to the load balancers to add application‑specific logic, but the complexity of true multi‑tenancy means that even sophisticated solutions can still not provide complete isolation between one application and another. If the application teams were given free access to shared devices, one team might make configuration changes that negatively impact other teams.

To avoid these potential problems, the network team often retains sole control over the hardware load balancers. The application teams have to submit requests to make any configuration changes. In addition, because of the potential for configuration conflicts between teams, the network team is likely to limit which advanced ADC features are exposed, meaning that application teams can’t take advantage of all the functionality available on the hardware load balancer.

Why Using Multiple Proxy Layers Makes Sense

One solution to this problem is to deploy a set of smaller load balancers, such as NGINX Plus, so that each application team can have its own. Completely isolated from one another, the application teams can each take full advantage of all the features they need without risking negative consequences for other teams. It’s not cost effective to give each application team a set of hardware appliances, so this is a great use case for a software‑based load balancer like NGINX Plus.

The hardware load balancers remain in place, still owned and managed by the network team, but they no longer have to deal with complex multi‑tenant issues or application logic; their only job is to get the requests to the right NGINX Plus instances where the application logic resides, and NGINX Plus routes the requests to the right backend servers. This provides the network team with the control they need while also enabling the application teams to take full advantage of the ADC functionality.
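To make the division of labor concrete, here is a sketch of what one application team's own NGINX Plus instance might contain. The team, hostname, upstream names, paths, and addresses are all hypothetical. The hardware load balancer only needs to deliver requests for this hostname to the instance; the routing logic lives in the team's own configuration.

```nginx
# Goes inside the http {} context of nginx.conf on one team's instance.

# Hypothetical backend pools owned by a single application team.
upstream orders_api {
    server 10.0.1.11:8080;
    server 10.0.1.12:8080;
}

upstream orders_web {
    server 10.0.1.21:8080;
}

server {
    listen 80;
    server_name orders.example.com;     # placeholder hostname

    # Application-specific routing that the team can change on its own,
    # without touching the shared hardware load balancer.
    location /api/ {
        proxy_pass http://orders_api;
    }

    location / {
        proxy_pass http://orders_web;
    }
}
```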

Summary

The flexibility inherent in a software‑based load balancer makes it easy to deploy in almost any environment and scenario. The IT world is clearly moving to software‑based platforms, and with NGINX Plus you don’t need to rip and replace what you have. You can install NGINX Plus into your existing environment or a new environment as you migrate to the architecture of the future.

Further Reading

- Reverse Proxy Using NGINX Plus

- NGINX Reverse Proxy in the NGINX Plus Admin Guide

- Documentation at nginx.org

- NGINX and NGINX Plus Feature Matrix

- NGINX Plus Technical Specifications
