NGINX Tutorial: Improve Uptime and Resilience with a Canary Deployment

Note: This tutorial is part of Microservices March 2022: Kubernetes Networking.

- Reduce Kubernetes Latency with Autoscaling

- Protect Kubernetes APIs with Rate Limiting

- Protect Kubernetes Apps from SQL Injection

- Improve Uptime and Resilience with a Canary Deployment (this post)

Your organization is successfully delivering apps in Kubernetes and now the team is ready to roll out v2 of a backend service. But there are valid concerns about traffic interruptions (a.k.a. downtime) and the possibility that v2 might be unstable. As the Kubernetes engineer, you need to find a way to ensure v2 can be tested and rolled out with little to no impact on customers.

You decide to implement a gradual, controlled migration using the traffic splitting technique “canary deployment” because it provides a safe and agile way to test the stability of a new feature or version. Your use case involves traffic moving between two Kubernetes services, so you choose to use NGINX Service Mesh because it’s easy and delivers reliable results. You send 10% of your traffic to v2 with the remaining 90% still routed to v1. Stability looks good, so you gradually transition larger and larger percentages of traffic to v2 until you reach 100%. Problem solved!

The easiest way to do this lab is to register for Microservices March 2022 and use the browser-based lab that’s provided. If you want to do it as a tutorial in your own environment, you need a machine with:

- 2 CPUs or more

- 2 GB of free memory

- 20 GB of free disk space

- Internet connection

- Container or virtual machine manager, such as Docker, HyperKit, Hyper-V, KVM, Parallels, Podman, VirtualBox, or VMware Fusion/Workstation

- minikube installed

- Helm installed

Note: This blog is written for minikube running on a desktop/laptop that can launch a browser window. If you’re in an environment where that’s not possible, then you’ll need to troubleshoot how to get to the services via a browser.

To get the most out of the lab and tutorial, we recommend that before beginning you:

- Watch the recording of the livestreamed conceptual overview

- Review the background blogs, webinar, and video

- Watch the video summary of the lab

This tutorial uses these technologies:

- NGINX Service Mesh

- Helm

- Jaeger

- minikube

- Two sample apps

This tutorial includes three challenges:

- Deploy a Cluster and NGINX Service Mesh

- Deploy Two Apps (a Frontend and a Backend)

- Use NGINX Service Mesh to Implement a Canary Deployment

Challenge 1: Deploy a Cluster and NGINX Service Mesh

Deploy a minikube cluster. After a few seconds, a message confirms the deployment was successful.

$ minikube start \
    --extra-config=apiserver.service-account-signing-key-file=/var/lib/minikube/certs/sa.key \
    --extra-config=apiserver.service-account-key-file=/var/lib/minikube/certs/sa.pub \
    --extra-config=apiserver.service-account-issuer=kubernetes/serviceaccount \
    --extra-config=apiserver.service-account-api-audiences=api

🏄 Done! kubectl is now configured to use "minikube" cluster and "default" namespace by default

Did you notice this minikube command looks different from other Microservices March tutorials?

- Some extra configs are required to enable Service Account Token Volume Projection – a necessary item for NGINX Service Mesh.

- With this feature enabled, the kubelet mounts a service account token into each pod.

- From this point, the pod can be identified uniquely in the cluster and the kubelet takes care of rotating the token at a regular interval.

- To learn more, read Authentication Between Microservices Using Kubernetes Identities.

Deploy NGINX Service Mesh

NGINX Service Mesh is maintained by F5 NGINX and uses NGINX Plus as the sidecar. Although NGINX Plus is a commercial product, you get to use it for free as part of NGINX Service Mesh.

There are two options for installation:

- Using Helm

- Downloading and using the command-line utility

Helm is the simplest and fastest method, so it’s what we use in this tutorial.

- Download and install NGINX Service Mesh:

$ helm install nms ./nginx-service-mesh --namespace nginx-mesh --create-namespace

- Confirm that the NGINX Service Mesh pods are deployed, as indicated by the value Running in the STATUS column.

$ kubectl get pods --namespace nginx-mesh

NAME READY STATUS

grafana-7c6c88b959-62r72 1/1 Running

jaeger-86b56bf686-gdjd8 1/1 Running

nats-server-6d7b6779fb-j8qbw 2/2 Running

nginx-mesh-api-7864df964-669s2 1/1 Running

nginx-mesh-metrics-559b6b7869-pr4pz 1/1 Running

prometheus-8d5fb5879-8xlnf 1/1 Running

spire-agent-9m95d 1/1 Running

spire-server-0 2/2 Running

It may take 1.5 minutes for all pods to deploy. In addition to the NGINX Service Mesh pods, there are pods for Grafana, Jaeger, NATS, Prometheus, and Spire. Check out the docs for information on how these tools work with NGINX Service Mesh.
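If you would rather not scan the STATUS column by eye, a small filter can do the check for you. The sketch below is our own illustration, not something shipped with NGINX Service Mesh: `not_running` is a hypothetical helper that reads `kubectl get pods` output on stdin and prints the name of every pod whose STATUS is not Running. It assumes the default column order (NAME, READY, STATUS).

```shell
#!/bin/sh
# not_running: hypothetical helper (not part of kubectl or NGINX Service Mesh).
# Reads `kubectl get pods` output on stdin, skips the header row, and prints
# the NAME of every pod whose STATUS column (third field) is not "Running".
not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1 }'
}

# Example usage against the live cluster:
#   kubectl get pods --namespace nginx-mesh | not_running
# No output means every listed pod reports Running.
```

As an alternative, `kubectl wait --for=condition=Ready pods --all --namespace nginx-mesh --timeout=120s` blocks until the pods are ready; the 120-second timeout is an assumed value, so adjust it for your machine.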

Challenge 2: Deploy Two Apps (a Frontend and a Backend)

The app deployment consists of two microservices:

- frontend: The web app that serves a UI to your visitors. It deconstructs complex requests and sends calls to numerous backend apps.

- backend-v1: A business logic app that serves data to frontend via the Kubernetes API.

Install the Backend-v1 App

- Using the text editor of your choice, create a YAML file called 1-backend-v1.yaml with the following contents:

apiVersion: v1
kind: ConfigMap
metadata:
  name: backend-v1
data:
  nginx.conf: |-
    events {}
    http {
        server {
            listen 80;
            location / {
                return 200 '{"name":"backend","version":"1"}';
            }
        }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-v1
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backend
      version: "1"
  template:
    metadata:
      labels:
        app: backend
        version: "1"
      annotations:
    spec:
      containers:
      - name: backend-v1
        image: "nginx"
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /etc/nginx
          name: nginx-config
      volumes:
      - name: nginx-config
        configMap:
          name: backend-v1
---
apiVersion: v1
kind: Service
metadata:
  name: backend-svc
  labels:
    app: backend
spec:
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: backend

- Deploy backend-v1:

$ kubectl apply -f 1-backend-v1.yaml
configmap/backend-v1 created
deployment.apps/backend-v1 created
service/backend-svc created

- Confirm that the backend-v1 pod and services deployed, as indicated by the value Running in the STATUS column.

$ kubectl get pods,services
NAME                              READY   STATUS
pod/backend-v1-745597b6f9-hvqht   2/2     Running

NAME                  TYPE        CLUSTER-IP      PORT(S)
service/backend-svc   ClusterIP   10.102.173.77   80/TCP
service/kubernetes    ClusterIP   10.96.0.1       443/TCP

You may be wondering, “Why does the READY column show two containers (2/2) in the backend-v1 pod?”

- NGINX Service Mesh injects a sidecar proxy into your pods.

- This sidecar container intercepts all incoming and outgoing traffic to your pods.

- All the data collected is used for metrics, but you can also use this proxy to decide where the traffic should go.

Deploy the Frontend App

- Create a YAML file called 2-frontend.yaml with the following contents. Notice the pod uses cURL to issue a request to the backend service (backend-svc) every second.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: frontend
spec:
  selector:
    matchLabels:
      app: frontend
  template:
    metadata:
      labels:
        app: frontend
    spec:
      containers:
      - name: frontend
        image: curlimages/curl:7.72.0
        command: [ "/bin/sh", "-c", "--" ]
        args: [ "sleep 10; while true; do curl -s http://backend-svc/; sleep 1 && echo ' '; done" ]

- Deploy frontend:

$ kubectl apply -f 2-frontend.yaml
deployment.apps/frontend created

- Confirm that the frontend pod deployed, as indicated by the value Running in the STATUS column. Again, note that each pod reports two containers (2/2) because NGINX Service Mesh injected a sidecar into it.

$ kubectl get pods
NAME                         READY   STATUS    RESTARTS
backend-v1-5cdbf9586-s47kx   2/2     Running   0
frontend-6c64d7446-mmgpv     2/2     Running   0

Check Logs

Next, you will inspect the logs to verify that traffic is flowing from frontend to backend-v1. The command to retrieve logs requires you to piece it together using this format:

kubectl logs -c frontend <insert the full pod id displayed in your Terminal>

The full pod ID is available in the previous step (frontend-6c64d7446-mmgpv) and is unique to your deployment. When you submit the command, the logs should report that all traffic is routing to backend-v1, which is expected since it’s your only backend.

$ kubectl logs -c frontend frontend-6c64d7446-mmgpv

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}

Inspect the Dependency Graph with Jaeger

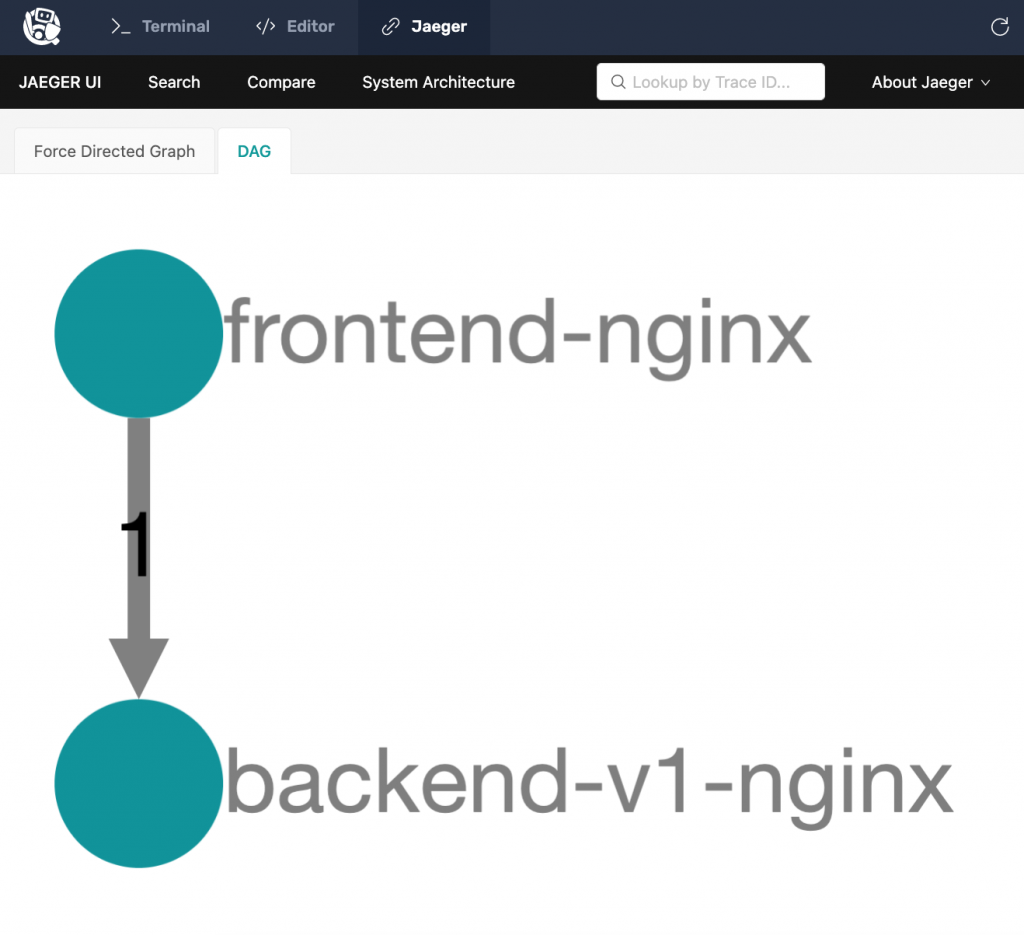

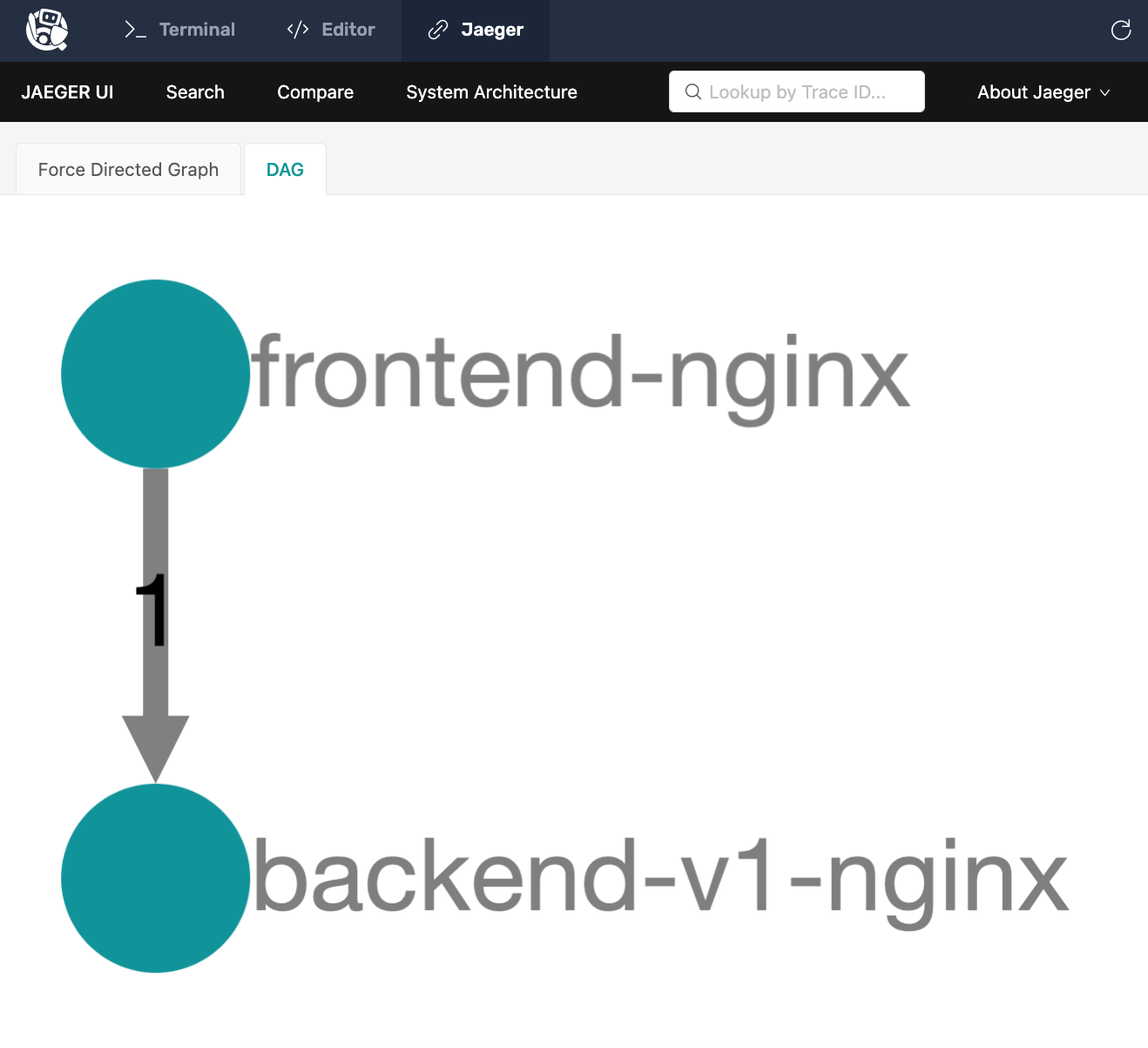

What’s more interesting is that the NGINX Service Mesh sidecars deployed alongside the two apps are collecting metrics as the traffic flows. You can use this data to derive a dependency graph of your architecture with Jaeger.

- Use minikube service jaeger to open the Jaeger dashboard in a browser.

- Click the “System Architecture” tab, where you should see a very simple architecture (hover your cursor over the graph to get the labels). The DAG tab provides a magnified view. Imagine if you had dozens or even hundreds of backend services being accessed by frontend – this would be a very interesting graph!

Add Backend-v2

You will now deploy a second backend app – backend-v2 – that will also serve frontend. As the version number suggests, backend-v2 is a new version of backend-v1.

- Create a YAML file called 3-backend-v2.yaml with the following contents, and notice:

  - How the deployment shares an app: backend label with the previous deployment.

  - Because the service selector is app: backend, you should expect the traffic to be distributed evenly between backend-v1 and backend-v2.

apiVersion: v1
kind: ConfigMap
metadata:
  name: backend-v2
data:
  nginx.conf: |-
    events {}
    http {
        server {
            listen 80;
            location / {
                return 200 '{"name":"backend","version":"2"}';
            }
        }
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-v2
spec:
  replicas: 1
  selector:
    matchLabels:
      app: backend
      version: "2"
  template:
    metadata:
      labels:
        app: backend
        version: "2"
      annotations:
    spec:
      containers:
      - name: backend-v2
        image: "nginx"
        ports:
        - containerPort: 80
        volumeMounts:
        - mountPath: /etc/nginx
          name: nginx-config
      volumes:
      - name: nginx-config
        configMap:
          name: backend-v2

- Deploy backend-v2:

$ kubectl apply -f 3-backend-v2.yaml
configmap/backend-v2 created
deployment.apps/backend-v2 created

- Confirm that the backend-v2 pod and services deployed, as indicated by the value Running in the STATUS column.

Inspect the Logs

Using the same command as earlier, inspect the logs. You should now see evenly distributed responses from both backend versions.

$ kubectl logs -c frontend frontend-6c64d7446-mmgpv

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

{"name":"backend","version":"1"}
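Reading the split off a scrolling log gets harder as the sample grows, so it can help to count the responses instead. The following is a minimal sketch under our own assumptions: `tally_versions` is a hypothetical helper that relies on the exact JSON shape these sample backends return (`"version":"<digits>"`), and it is not part of kubectl or NGINX Service Mesh.

```shell
#!/bin/sh
# tally_versions: hypothetical helper for this tutorial's log format.
# Reads frontend log lines on stdin and prints one line per backend
# version with the number of responses seen, sorted by version label.
tally_versions() {
  awk 'match($0, /"version":"[0-9]+"/) {
         # The matched text is "version":"N" with the quotes included:
         # 11 characters precede the digits and 1 follows them.
         v = substr($0, RSTART + 11, RLENGTH - 12)
         count[v]++
       }
       END { for (v in count) print "v" v, count[v] }' | sort
}

# Example usage (substitute your own frontend pod ID):
#   kubectl logs -c frontend frontend-6c64d7446-mmgpv | tally_versions
```

With only a service selector in place, the two counts should come out roughly equal. The same helper can be reused later in the tutorial to check a weighted split.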

Return to Jaeger

Return to the Jaeger tab to see if NGINX Service Mesh was able to correctly map both backend versions.

- It can take a few minutes for Jaeger to recognize that a second backend was added.

- If it doesn’t show up after a minute, just continue to the next challenge and check back on the dependency chart a little later.

Challenge 3: Use NGINX Service Mesh to Implement a Canary Deployment

So far in this tutorial, you deployed two versions of backend: v1 and v2. While you could immediately move all traffic to v2, it’s best practice to test stability before entrusting a new version with production traffic. A canary deployment is a perfect technique for this use case.

What is a Canary Deployment?

As discussed in the blog How to Improve Resilience in Kubernetes with Advanced Traffic Management, a canary deployment is a type of traffic split that provides a safe and agile way to test the stability of a new feature or version. A typical canary deployment starts with a high share (say, 99%) of your users on the stable version and moves a tiny group (the other 1%) to the new version. If the new version fails, for example crashing or returning errors to clients, you can immediately move the test group back to the stable version. If it succeeds, you can switch users from the stable version to the new one, either all at once or (as is more common) in a gradual, controlled migration.
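The promote-or-roll-back decision described above can be written down as a simple rule. The sketch below is purely illustrative: `canary_decision` is a hypothetical helper, and the error counts and the 1% threshold in the usage comments are assumed values, not recommendations from NGINX. In practice you would feed it numbers from your own monitoring.

```shell
#!/bin/sh
# canary_decision: hypothetical helper, not part of NGINX Service Mesh.
# Arguments: <errors> <total requests> <max error percentage>.
# Prints "rollback" when the canary's error rate exceeds the threshold,
# "promote" when it does not, and "wait" when there is no traffic yet.
canary_decision() {
  errors=$1
  total=$2
  max_pct=$3
  if [ "$total" -eq 0 ]; then
    echo "wait"
    return 0
  fi
  # Integer-only comparison: errors/total > max_pct/100
  # is equivalent to errors*100 > max_pct*total.
  if [ $((errors * 100)) -gt $((max_pct * total)) ]; then
    echo "rollback"
  else
    echo "promote"
  fi
}

# Example usage with assumed numbers:
#   canary_decision 0 200 1    # no errors in 200 requests
#   canary_decision 5 200 1    # 2.5% errors against a 1% threshold
```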

This diagram depicts a canary deployment using an Ingress controller to split traffic.

Canary Deployments Between Services

In this tutorial, you’re going to set up a traffic split so 90% of the traffic goes to backend-v1 and 10% goes to backend-v2.

While an Ingress controller is used to split traffic when it’s flowing from clients to a Kubernetes service, it can’t be used to split traffic between services. There are two options for implementing this type of canary deployment:

Option 1: The Hard Way

You could instruct a proxy on the frontend pod to send 9 out of 10 requests to backend-v1. But imagine if you have dozens of replicas of frontend. Do you really want to be manually updating all those proxies? No! It would be error-prone and time-consuming.

Option 2: The Better Way

Observability and control are excellent reasons to use a service mesh. And you can do so much more. A service mesh is also the ideal tool for implementing a traffic split between services because you can apply a single policy to all of your frontend replicas served by the mesh!

Using NGINX Service Mesh for Traffic Splits

NGINX Service Mesh implements the Service Mesh Interface (SMI), a specification that defines a standard interface for service meshes on Kubernetes, with typed resources such as TrafficSplit, TrafficTarget, and HTTPRouteGroup. With these standard Kubernetes configurations, NGINX Service Mesh and the NGINX SMI extensions make traffic splitting policies, like canary deployment, simple to deploy with minimal interruption to production traffic.

In this diagram from the blog How Do I Choose? API Gateway vs. Ingress Controller vs. Service Mesh, you can see how NGINX Service Mesh implements a canary deployment between services with conditional routing based on HTTP/S criteria.

NGINX Service Mesh’s architecture – like all meshes – has a data plane and a control plane. Because NGINX Service Mesh leverages NGINX Plus for the data plane, it’s able to perform advanced deployment scenarios.

- Data plane: Made up of containerized NGINX Plus proxies – called sidecars – that are responsible for offloading functions required by all apps within the mesh, as well as implementing deployment patterns like canary deployments.

- Control plane: Manages the data plane. While the sidecars route application traffic and provide other data plane services, the control plane injects sidecars into pods and performs administrative tasks, such as renewing mTLS certificates and pushing them to the appropriate sidecars.

Create the Canary Deployment

The NGINX Service Mesh control plane can be controlled with Kubernetes Custom Resource Definitions (CRDs). It uses the Kubernetes services to retrieve a list of pod IP addresses and ports. Then, it combines the instructions from the CRD and informs the sidecars to route traffic directly to the pods.

- Using the text editor of your choice, create a YAML file called 5-split.yaml that defines the traffic split using the TrafficSplit CRD.

apiVersion: split.smi-spec.io/v1alpha3
kind: TrafficSplit
metadata:
  name: backend-ts
spec:
  service: backend-svc
  backends:
  - service: backend-v1
    weight: 90
  - service: backend-v2
    weight: 10

Notice how there are three services defined in the CRD (and you have created only one so far):

- backend-svc is the service that targets all pods.

- backend-v1 is the service that selects pods from the backend-v1 deployment.

- backend-v2 is the service that selects pods from the backend-v2 deployment.

- Create a YAML file called 4-services.yaml that defines the backend-v1 and backend-v2 services:

apiVersion: v1
kind: Service
metadata:
  name: backend-v1
  labels:
    app: backend
    version: "1"
spec:
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: backend
    version: "1"
---
apiVersion: v1
kind: Service
metadata:
  name: backend-v2
  labels:
    app: backend
    version: "2"
spec:
  ports:
  - port: 80
    targetPort: 80
  selector:
    app: backend
    version: "2"

- Deploy the services:

$ kubectl apply -f 4-services.yaml
service/backend-v1 created
service/backend-v2 created

Observe the Logs

Before you implement the traffic split, check the logs to see how traffic is flowing without the TrafficSplit CRD in place. You should see that traffic is being evenly split between v1 and v2.

$ kubectl logs -c frontend frontend-6c64d7446-mmgpv

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

Implement the Canary Deployment

- Apply the TrafficSplit CRD.

$ kubectl apply -f 5-split.yaml

trafficsplit.split.smi-spec.io/backend-ts created

- Observe the logs again. Now, you should see 90% of traffic being delivered to v1, as defined in 5-split.yaml.

$ kubectl logs -c frontend frontend-6c64d7446-mmgpv

{"name":"backend","version":"1"}

{"name":"backend","version":"2"}

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}

{"name":"backend","version":"1"}
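A handful of log lines will rarely show exactly 90/10; the proportion only settles down over many requests. To get a feel for that, here is a rough simulation of weighted random selection. It is an assumption made for illustration only and does not describe how the NGINX Plus sidecar actually picks a backend. `simulate_split` is a hypothetical helper that takes the two weights and a request count.

```shell
#!/bin/sh
# simulate_split: hypothetical illustration, NOT the sidecar's algorithm.
# Arguments: <backend-v1 weight> <backend-v2 weight> <number of requests>.
# Assigns each simulated request to a backend with probability
# proportional to its weight and prints the resulting counts.
simulate_split() {
  awk -v w1="$1" -v w2="$2" -v n="$3" 'BEGIN {
    srand(1)                 # fixed seed so reruns on the same awk agree
    v1 = 0; v2 = 0
    for (i = 0; i < n; i++) {
      # rand() is uniform in [0, 1); scale it to the total weight.
      if (rand() * (w1 + w2) < w1) v1++; else v2++
    }
    print "backend-v1", v1
    print "backend-v2", v2
  }'
}

# Example usage: compare a short sample with a long one.
#   simulate_split 90 10 5
#   simulate_split 90 10 10000
```

A run of five requests can easily land on 5/0 or 3/2, while a run of ten thousand should sit close to the configured weights. The same applies to the frontend logs: let them accumulate before judging the split.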

Execute a Rollover to V2


It’s rare that you’ll want to do a 90/10 traffic split and then immediately move all your traffic over to the new version. Instead, best practice is to move traffic incrementally. For example: 0%, 5%, 10%, 25%, 50%, and 100%. To illustrate how easy it can be to implement an incremental rollover, you’ll change the weighting to 20/80 and then 0/100.
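Editing the file by hand for every stage works, but the manifest differs only in its two weights, so it can also be generated. The sketch below is one possible approach, not the tutorial's official method: `render_split` is a hypothetical helper that prints the same TrafficSplit manifest used in 5-split.yaml for a given backend-v1 weight and gives backend-v2 the remainder of 100. The stage values and the pause between stages in the usage comments are assumptions.

```shell
#!/bin/sh
# render_split: hypothetical helper that prints a TrafficSplit manifest.
# Argument: <backend-v1 weight, 0-100>. backend-v2 receives 100 minus that.
render_split() {
  v1_weight=$1
  if [ "$v1_weight" -lt 0 ] || [ "$v1_weight" -gt 100 ]; then
    echo "weight must be between 0 and 100" >&2
    return 1
  fi
  v2_weight=$((100 - v1_weight))
  cat <<EOF
apiVersion: split.smi-spec.io/v1alpha3
kind: TrafficSplit
metadata:
  name: backend-ts
spec:
  service: backend-svc
  backends:
  - service: backend-v1
    weight: $v1_weight
  - service: backend-v2
    weight: $v2_weight
EOF
}

# Example usage: walk backend-v2 through 5%, 10%, 25%, 50%, and 100%.
#   for w in 95 90 75 50 0; do
#     render_split "$w" | kubectl apply -f -
#     sleep 60   # assumed soak time; check logs and metrics before moving on
#   done
```

Piping to `kubectl apply -f -` applies the manifest from standard input, so no intermediate file is needed. In a real rollout you would gate each step on the health of backend-v2 rather than on a fixed sleep.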

- Edit 5-split.yaml so that backend-v1 gets 20% of traffic and backend-v2 gets the remaining 80%.

apiVersion: split.smi-spec.io/v1alpha3
kind: TrafficSplit
metadata:
  name: backend-ts
spec:
  service: backend-svc
  backends:
  - service: backend-v1
    weight: 20
  - service: backend-v2
    weight: 80

- Apply the changes:

$ kubectl apply -f 5-split.yaml
trafficsplit.split.smi-spec.io/backend-ts configured

- Observe the logs to see the changes in action:

$ kubectl logs -c frontend frontend-6c64d7446-mmgpv
{"name":"backend","version":"2"}
{"name":"backend","version":"1"}
{"name":"backend","version":"2"}
{"name":"backend","version":"2"}
{"name":"backend","version":"2"}

- To complete the rollover, edit 5-split.yaml so that backend-v1 gets 0% of the traffic and backend-v2 gets 100%.

apiVersion: split.smi-spec.io/v1alpha3
kind: TrafficSplit
metadata:
  name: backend-ts
spec:
  service: backend-svc
  backends:
  - service: backend-v1
    weight: 0
  - service: backend-v2
    weight: 100

- Apply the changes:

$ kubectl apply -f 5-split.yaml
trafficsplit.split.smi-spec.io/backend-ts configured

- Observe the logs to see the change in action. All responses have shifted to backend-v2, which means your rollover is complete!

$ kubectl logs -c frontend frontend-6c64d7446-mmgpv
{"name":"backend","version":"2"}
{"name":"backend","version":"2"}
{"name":"backend","version":"2"}
{"name":"backend","version":"2"}
{"name":"backend","version":"2"}

Next Steps

You can use this blog to implement the tutorial in your own environment or try it out in our browser-based lab (register here). To learn more on the topic of exposing Kubernetes services, follow along with the other activities in Unit 4: Advanced Kubernetes Deployment Strategies:

- Watch the high-level overview webinar

- Review the collection of technical blogs and videos

NGINX Service Mesh is completely free. You can download it using Helm (the method leveraged in this tutorial) or through F5 Downloads.

The post NGINX Tutorial: Improve Uptime and Resilience with a Canary Deployment appeared first on NGINX.
