NGINX vs. Avi: Performance in the Cloud

Slow is the new down – according to Pingdom, the bounce rate for a website that takes 5 seconds to load is close to 40%. So what does that mean? If your site takes that long to load, roughly two in five of your visitors abandon it and go elsewhere. Users constantly expect faster applications and better digital experiences, so improving your performance is crucial to growing your business. The last thing you want is to offer a product or service the market wants while lacking the ability to deliver a fast, seamless experience to your customer base.

In this post we analyze the performance of two software application delivery controllers (ADCs), the Avi Vantage platform and the NGINX Application Platform. We measure the latency of client requests, an important metric for keeping clients engaged.

Testing Protocol and Metrics Collected

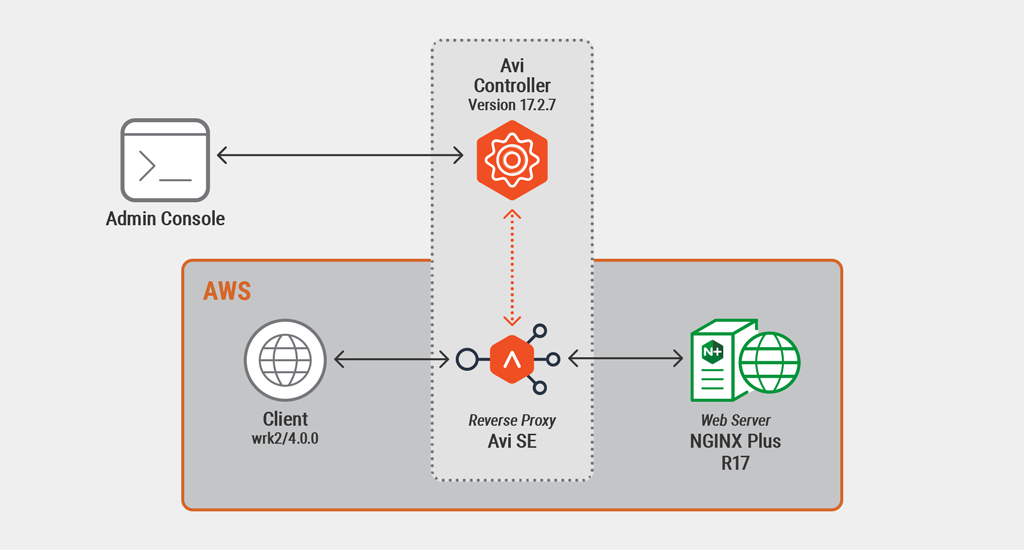

We used the load generation program wrk2 to emulate a client, making continuous requests over HTTPS for files during a defined period of time. The ADC data plane under test – the Avi Service Engine (SE) or NGINX Plus – acted as a reverse proxy, forwarding the requests to a backend web server and returning the response generated by the web server (a file) to the client. Across various test runs, we emulated real‑world traffic patterns by varying the number of requests per second (RPS) made by the client as well as the size of the requested file.

During the tests we collected two performance metrics:

- Mean latency – Latency is defined as the amount of time between the client generating the request and receiving the response. The mean (average) is calculated by adding together the response times for all requests made during the testing period, then dividing by the number of requests.

- 95th percentile latency – The latency measurements collected during the testing period are sorted from highest (most latency) to lowest. The highest 5% are discarded, and the highest remaining value is the 95th percentile latency.
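The two definitions above can be sketched in a few lines of Python. This is only an illustration of the definitions as worded in this post, not the calculation wrk2 performs internally; the function names and the sample values are hypothetical.

```python
def mean_latency(samples):
    """Add together all response times, then divide by the number of requests."""
    return sum(samples) / len(samples)


def p95_latency(samples):
    """Sort from highest to lowest, discard the highest 5%,
    and return the highest remaining value."""
    ordered = sorted(samples, reverse=True)
    discard = (len(ordered) * 5) // 100  # how many samples make up the top 5%
    return ordered[discard]


# Hypothetical latencies in milliseconds, for illustration only.
example_ms = [float(n) for n in range(1, 21)]
print(mean_latency(example_ms))
print(p95_latency(example_ms))
```

With 20 samples, the top 5% is a single measurement, so the 95th percentile is the second-highest value.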

Testing Methodology

Client

We ran the following script on an Amazon Elastic Compute Cloud (EC2) instance:

    wrk2 -t1 -c50 -d180s -Rx --latency https://server.example.com:443/

(For the specs of all EC2 instances used, including the client, see the Appendix.) To simulate multiple clients accessing a web‑based application at the same time, in each test the script spawned one wrk2 thread and established 50 connections with the ADC data plane, then continuously requested a static file for 3 minutes (the file size varied across test runs). These parameters correspond to the following wrk2 options:

- `-t` option – Number of threads to create (1).

- `-c` option – Number of TCP connections to create (50).

- `-d` option – Number of seconds in the testing period (180, or 3 minutes).

- `-Rx` option – Number of requests per second issued by the client (also referred to as client RPS). The x was replaced by the appropriate client RPS rate for the test run.

- `--latency` option – Includes detailed latency percentile information in the output.

As detailed below, we varied the size of the requested file depending on the size of the EC2 instance: 1 KB with the smaller instance, and both 10 KB and 100 KB with the larger instance. We incremented the RPS rate by 1,000 with the 1 KB and 10 KB files, and by 100 with the 100 KB file. For each combination of RPS rate and file size, we conducted a total of 20 test runs. The graphs below report the average of the 20 runs.
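As a rough sketch of how such a sweep could be scripted, the Python below builds the wrk2 command line shown earlier for each RPS rate and run. It only constructs and prints the commands; the helper names, the starting and ending rates, and the step passed to `sweep` are assumptions for illustration, since the post does not publish its test harness or the upper RPS limit of each sweep.

```python
def build_wrk2_command(rps, url, threads=1, connections=50, duration_s=180):
    """Return the wrk2 argument list for one test run at the given client RPS."""
    return [
        "wrk2",
        f"-t{threads}",
        f"-c{connections}",
        f"-d{duration_s}s",
        f"-R{rps}",
        "--latency",
        url,
    ]


def sweep(start_rps, stop_rps, step_rps, url, runs_per_rate=20):
    """Yield (rps, run number, command) for every run at every RPS rate."""
    for rps in range(start_rps, stop_rps + 1, step_rps):
        for run in range(1, runs_per_rate + 1):
            yield rps, run, build_wrk2_command(rps, url)


# Hypothetical sweep limits; the URL is the placeholder used in the post.
URL = "https://server.example.com:443/"
for rps, run, command in sweep(1000, 3000, 1000, URL, runs_per_rate=2):
    print(rps, run, " ".join(command))
```

Each yielded command could then be passed to a process runner, with the latency output of the runs at each rate averaged afterwards.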

All requests were made over HTTPS. We used ECC with a 256‑bit key size and Perfect Forward Secrecy; the SSL cipher was ECDHE-ECDSA-AES256-GCM-SHA384.

Avi Reverse Proxy: Configuration and Versioning

We deployed Avi Vantage version 17.2.7 from the AWS Marketplace. Avi allows you to choose the AWS instance type for the SE, but not the underlying operating system. The Avi Controller preselects the OS on which it deploys SEs; at the time of testing, it was Ubuntu 14.04.5 LTS.

NGINX Reverse Proxy: Configuration and Versioning

Unlike the Avi Vantage platform, which bundles the control plane (its Controller component) and data plane (the SE), the NGINX control plane (NGINX Controller) is completely decoupled from the data plane (NGINX Plus). You can update NGINX Plus instances to any release, or change to any supported OS, without updating NGINX Controller. This gives you the flexibility to choose the release, OS, and OpenSSL version that provide optimum performance, making updates painless. Taking advantage of this flexibility, we deployed an NGINX Plus R17 instance running Ubuntu 16.04.

We configured NGINX Controller to deploy the NGINX Plus reverse proxy with a cache that accommodates 100 upstream connections. This improves performance by enabling keepalive connections between the reverse proxy and the upstream servers.

NGINX Plus Web Server: Configuration and Versioning

As shown in the preceding topology diagrams, we used NGINX Plus R17 as the web server in all tests.

Performance Results

Latency on a t2.medium Instance

For the first test, we deployed Avi SE via Avi Controller and NGINX Plus via NGINX Controller, each on a t2.medium instance. The requested file was 1 KB in size. The graphs show the client RPS rate on the X axis, and the latency in seconds on the Y axis. Lower latency at each RPS rate is better.

The pattern of results for the two latency measurements is basically the same, differing only in the time scale on the Y axis. Measured by both mean and 95th percentile latency, NGINX Plus served nearly 2.5 times as many RPS as Avi SE (6,000 vs. 2,500) before latency increased above a negligible level. Why did Avi SE incur the dramatic increase in latency at such a low RPS rate? To answer this, let’s take a closer look at the mean latency and 95th percentile latency for each of the 20 consecutive test runs on Avi SE with client RPS at 2,500.

As shown in the following table, on the 17th consecutive test run on the Avi SE at 2,500 RPS, mean latency spikes dramatically to more than 14 seconds, while 95th percentile latency spikes to more than 35 seconds.

| Test Run | Mean Latency (ms) | 95th Percentile Latency (ms) |

|---|---|---|

| 1 | 1.926 | 3.245 |

| 2 | 6.603 | 10.287 |

| 3 | 2.278 | 3.371 |

| 4 | 1.943 | 3.227 |

| 5 | 2.015 | 3.353 |

| 6 | 6.633 | 10.167 |

| 7 | 1.932 | 3.277 |

| 8 | 1.983 | 3.301 |

| 9 | 1.955 | 3.333 |

| 10 | 7.223 | 10.399 |

| 11 | 2.048 | 3.353 |

| 12 | 2.021 | 3.375 |

| 13 | 1.930 | 3.175 |

| 14 | 1.960 | 3.175 |

| 15 | 6.980 | 10.495 |

| 16 | 1.934 | 3.289 |

| 17 | 14020 | 35350 |

| 18 | 27800 | 50500 |

| 19 | 28280 | 47500 |

| 20 | 26400 | 47800 |
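Because the graphs report the average of the 20 runs, a handful of throttled runs dominates the plotted value. The following Python sketch recomputes that average from the mean latency column of the table above; the variable names are illustrative only.

```python
# Mean latency (ms) for test runs 1-20 on Avi SE at 2,500 RPS, copied from the table.
mean_latency_ms = [
    1.926, 6.603, 2.278, 1.943, 2.015, 6.633, 1.932, 1.983, 1.955, 7.223,
    2.048, 2.021, 1.930, 1.960, 6.980, 1.934, 14020, 27800, 28280, 26400,
]

before_spike = mean_latency_ms[:16]  # runs 1-16, before the spike
average_before_spike = sum(before_spike) / len(before_spike)
average_all_runs = sum(mean_latency_ms) / len(mean_latency_ms)

print(f"Average of runs 1-16: {average_before_spike:.2f} ms")
print(f"Average of all 20 runs: {average_all_runs:.0f} ms")
```

The first 16 runs average a little over 3 ms, but including the last four runs pushes the 20-run average to nearly 5 seconds.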

To understand the reason for the sudden spike, it’s important first to understand that t2 instances are “burstable performance” instances. This means that they are allowed to consume a baseline amount of the available vCPU at all times (40% for our t2.medium instances). As they run, they also accrue CPU credits, each equivalent to 1 vCPU running at 100% utilization for 1 minute. To use more than its baseline vCPU allocation (to burst), the instance has to pay with credits, and when those are exhausted the instance is throttled back to its baseline CPU allocation.
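The credit mechanism can be approximated with simple arithmetic. The Python sketch below is a simplified model, not AWS's exact accounting: it assumes credits accrue at the baseline rate (0.4 vCPU-minutes per minute for the 40% baseline quoted above) and are spent at the instance's actual vCPU usage. The function name, starting balance, and usage level are hypothetical.

```python
def minutes_until_throttled(credit_balance, usage_vcpus, baseline_vcpus=0.4):
    """Estimate how long an instance can burst before its credits run out.

    One credit is 1 vCPU at 100% utilization for 1 minute. In this simplified
    model the instance earns `baseline_vcpus` credits per minute and spends
    `usage_vcpus` credits per minute. Returns None if usage stays at or below
    the baseline, because the balance then never shrinks.
    """
    net_spend_per_minute = usage_vcpus - baseline_vcpus
    if net_spend_per_minute <= 0:
        return None
    return credit_balance / net_spend_per_minute


# Hypothetical numbers: 30 credits banked, 0.9 vCPU of sustained usage.
print(minutes_until_throttled(30, 0.9))
# Usage below the 40% baseline never exhausts the balance.
print(minutes_until_throttled(30, 0.3))
```

Under this model, a data plane that needs less CPU per request spends credits more slowly at any given RPS rate, which is why the point of exhaustion arrives later.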

This output from htop, running in detailed mode after the latency spike occurs during the 17th test run, shows the throttling graphically:

The lines labeled 1 and 2 correspond to the t2.medium instance’s 2 CPUs and depict the proportion of each CPU that’s being used for different purposes: green for user processes, red for kernel processes, and so on. The cyan is of particular interest to us, accounting as it does for most of the overall usage. It represents the CPU steal time, which in a generalized virtualization context is defined as the “percentage of time a virtual CPU waits for a real CPU while the hypervisor is servicing another virtual processor”. For EC2 burstable performance instances, it’s the CPU capacity the instance is not allowed to use because it has exhausted its CPU credits.

At RPS rates lower than 2,500, Avi SE can complete all 20 test runs without exceeding its baseline allocation and CPU credits. At 2,500 RPS, however, it runs out of credits during the 17th test run. Mean latency jumps from a few milliseconds to more than 14 seconds because Avi can’t use the baseline CPU allocation efficiently enough to process requests as fast as they’re coming in. NGINX Plus uses CPU much more efficiently than Avi SE, so it doesn’t exhaust its allocation and credits until 6,000 RPS.

Latency on a c4.large Instance

Burstable performance instances are most suitable for small, burstable workloads, but their performance degrades quickly when CPU credits become exhausted. In a real‑world deployment, it usually makes more sense to choose an instance type that consumes CPU in a consistent manner, not subject to CPU credit exhaustion. Amazon says its compute optimized instances are suitable for high‑performance web servers, so we repeated our tests with the Avi SE and NGINX Plus running on c4.large instances.

The testing setup and methodology are the same as for the t2.medium instance, except that the client requested larger files – 10 KB and 100 KB instead of 1 KB.

Latency for the 10 KB File

The following graphs show the mean and 95th percentile latency when the client requested a 10 KB file. As before, lower latency at each RPS rate is better.

As in the tests on t2.medium instances, the pattern of results for the two latency measurements is basically the same, differing only in the time scale on the Y axis. NGINX Plus again outperforms Avi SE, here by more than 70% – it doesn’t experience increased latency until about 7,200 RPS, whereas Avi SE can handle only 4,200 RPS before latency spikes.

As shown in the following table, our tests also revealed that Avi SE incurred more latency than NGINX Plus at every RPS rate, even before hitting the RPS rate (4,200) at which Avi SE’s latency spiked. At the RPS rate where the two products’ latency was the closest (2,000 RPS), Avi SE’s mean latency was still 23x NGINX Plus’ (54 ms vs. 2.3 ms), and its 95th percentile latency was 79x (317 ms vs. 4.0 ms).

At 4,000 RPS, just below the latency spike for Avi SE, the multiplier grew to 69x for mean latency (160 ms vs. 2.3 ms) and 128x for 95th percentile latency (526 ms vs. 4.1 ms). At RPS rates above the latency spike for Avi SE, the multiplier exploded, with the largest difference at 6,000 RPS: 7,666x for mean latency (23 seconds vs. 3.0 ms) and 8,346x for 95th percentile latency (43.4 seconds vs. 5.2 ms).

Avi SE’s performance came closest to NGINX Plus’ after NGINX Plus experienced its own latency spike, at 7,200 RPS. Even so, at its best Avi SE’s latency was never less than 2x NGINX Plus’ (93.5 seconds vs. 45.0 seconds for 95th percentile latency at 10,000 RPS).

| Client RPS | Mean Latency (Avi SE) | Mean Latency (NGINX Plus) | 95th Percentile Latency (Avi SE) | 95th Percentile Latency (NGINX Plus) |

|---|---|---|---|---|

| 1000 | 84 ms | 1.7 ms | 540 ms | 2.6 ms |

| 2000 | 54 ms | 2.3 ms | 317 ms | 4.0 ms |

| 3000 | 134 ms | 2.2 ms | 447 ms | 4.2 ms |

| 4000 | 160 ms | 2.3 ms | 526 ms | 4.1 ms |

| 5000 | 8.8 s | 2.7 ms | 19.6 s | 5.1 ms |

| 6000 | 23.0 s | 3.0 ms | 43.4 s | 5.2 ms |

| 7000 | 33.0 s | 4.3 ms | 61 s | 14.7 ms |

| 8000 | 40.0 s | 6.86 s | 74.2 s | 13.8 s |

| 9000 | 46.8 s | 16.6 s | 85.0 s | 31.1 s |

| 10000 | 51.6 s | 24.4 s | 93.5 s | 45.0 s |
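The multipliers quoted in the text can be rechecked directly from the table. This Python sketch copies four rows (converted to milliseconds) and divides the Avi SE value by the NGINX Plus value; the names are illustrative only.

```python
# Rows copied from the table above, converted to milliseconds:
# (Avi SE mean, NGINX Plus mean, Avi SE 95th percentile, NGINX Plus 95th percentile)
rows_ms = {
    2000: (54.0, 2.3, 317.0, 4.0),
    4000: (160.0, 2.3, 526.0, 4.1),
    6000: (23_000.0, 3.0, 43_400.0, 5.2),
    10000: (51_600.0, 24_400.0, 93_500.0, 45_000.0),
}


def multipliers(rps):
    """Return (mean multiplier, 95th percentile multiplier) of Avi SE over NGINX Plus."""
    avi_mean, nginx_mean, avi_p95, nginx_p95 = rows_ms[rps]
    return avi_mean / nginx_mean, avi_p95 / nginx_p95


for rps in rows_ms:
    mean_x, p95_x = multipliers(rps)
    print(f"{rps} RPS: mean {mean_x:.1f}x, 95th percentile {p95_x:.1f}x")
```

The figures in the text are these ratios with the fractional part dropped.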

Latency for the 100 KB File

In the next set of test runs, we increased the size of the requested file to 100 KB.

For this file size, RPS drops dramatically for both products but NGINX Plus still outperforms Avi SE, here by nearly 40% – NGINX Plus doesn’t experience significantly increased latency until about 720 RPS, whereas Avi SE can handle only 520 RPS before latency spikes.

As in the tests with 10 KB files, Avi SE also incurs more latency than NGINX Plus at every RPS rate. The following table shows that the multipliers are not as large as for 10 KB files, but are still significant. The lowest multiplier before Avi SE’s spike is 17x, for mean latency at 400 RPS (185 ms vs. 10.7 ms).

| Client RPS | Mean Latency (Avi SE) | Mean Latency (NGINX Plus) | 95th Percentile Latency (Avi SE) | 95th Percentile Latency (NGINX Plus) |

|---|---|---|---|---|

| 100 | 100 ms | 5.0 ms | 325 ms | 6.5 ms |

| 200 | 275 ms | 9.5 ms | 955 ms | 12.5 ms |

| 300 | 190 ms | 7.9 ms | 700 ms | 10.3 ms |

| 400 | 185 ms | 10.7 ms | 665 ms | 14.0 ms |

| 500 | 500 ms | 8.0 ms | 1.9 s | 10.4 ms |

| 600 | 1.8 s | 9.3 ms | 6.3 s | 12.4 ms |

| 700 | 15.6 s | 2.2 ms | 32.3 s | 3 ms |

| 800 | 25.5 s | 2.4 s | 48.4 s | 8.1 s |

| 900 | 33.1 s | 12.9 s | 61.2 s | 26.5 s |

| 1000 | 39.2 s | 20.8 s | 71.9 s | 40 s |

CPU Usage on a c4.large Instance

Our final test runs focused on overall CPU usage by Avi SE and NGINX Plus on c4.large instances. We tested with both 10 KB and 100 KB files.

With the 10 KB file, Avi SE hits 100% CPU usage at roughly 5,000 RPS and NGINX Plus at roughly 8,000 RPS, representing 60% better performance by NGINX Plus. For both products, there is a clear correlation between hitting 100% CPU usage and the latency spike, which occurs at 4,200 RPS for Avi SE and 7,200 RPS for NGINX Plus.

The results are even more striking with 100 KB files. Avi SE handled a maximum of 520 RPS because at that point it hit 100% CPU usage. NGINX Plus’ performance was nearly 40% better, with a maximum rate of 720 RPS*. But notice that NGINX Plus was using less than 25% of the available CPU at that point – the rate was capped at 720 RPS not because of processing limits in NGINX Plus but because of network bandwidth limits in the test environment. In contrast to Avi SE, which maxed out the CPU on the EC2 instance, NGINX Plus delivered reliable performance with plenty of CPU cycles still available for other tasks running on the instance.

* The graph shows the maximum values as 600 and 800 RPS, but that is an artifact of the graphing software – we tested in increments of 100 RPS and the maxima occurred during the test runs at those rates.

Summary

We extensively tested both NGINX and Avi Vantage in a realistic test case where the client continuously generates traffic for roughly 12 hours, steadily increasing the rate of requests.

The results can be summarized as follows:

- NGINX uses CPU more efficiently, and as a result incurs less latency.

- Running on burstable performance instances (t2.medium in our testing), Avi exhausted the CPU credit balance faster than NGINX.

- Running on stable instances with no CPU credit limits (c4.large in our testing) and under heavy, constant client load, NGINX handled more RPS before experiencing a latency spike – roughly 70% more RPS with the 10 KB file and roughly 40% more RPS with the 100 KB file.

- With the 100 KB file, 100% CPU usage directly correlated with the peak RPS that Avi could process. In contrast, NGINX Plus never hit 100% CPU – instead it was limited only by the available network bandwidth.

- NGINX outperformed Avi in every latency test on the c4.large instances, even when the RPS rate was below the level at which latency spiked for Avi.
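The percentage comparisons in this post follow from the RPS thresholds reported in the results sections. A minimal Python check, with a hypothetical helper name:

```python
def percent_more(nginx_rps, avi_rps):
    """Percentage by which the NGINX Plus threshold exceeds the Avi SE threshold."""
    return (nginx_rps - avi_rps) / avi_rps * 100


# Thresholds quoted in the results sections for c4.large instances.
print(f"10 KB latency spike: {percent_more(7200, 4200):.0f}% more RPS")
print(f"100 KB latency spike: {percent_more(720, 520):.0f}% more RPS")
print(f"10 KB 100% CPU point: {percent_more(8000, 5000):.0f}% more RPS")
```

These work out to about 71%, 38%, and 60%, matching the "roughly 70%", "nearly 40%", and "60%" figures in the text.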

As we mentioned previously, Avi Vantage does not allow you to select the OS for Avi SE instances. As a consequence, if a security vulnerability is found in the OS, you can’t upgrade to a patched version provided by the OS vendor; you have to wait for Avi to release a new version of Avi Controller that fixes the security issue. This can leave all your applications exposed for potentially long periods.

Appendix: Amazon EC2 Specs

The tables summarize the specs for the Amazon EC2 instances used in the test environment.

In each case, the instances are in the same AWS region.

Testing on t2.medium Instances

| Role | Instance Type | vCPUs | RAM (GB) |

|---|---|---|---|

| Client | t2.micro | 1 | 1 |

| Reverse Proxy (Avi SE or NGINX Plus) | t2.medium | 2 | 4 |

| NGINX Web Server | t2.micro | 1 | 1 |

Testing on c4.large Instances

| Role | Instance Type | vCPUs | RAM (GB) |

|---|---|---|---|

| Client | c4.large | 2 | 3.75 |

| Reverse Proxy (Avi SE or NGINX Plus) | c4.large | 2 | 3.75 |

| NGINX Web Server | c4.large | 2 | 3.75 |

The post NGINX vs. Avi: Performance in the Cloud appeared first on NGINX.