Securing the Perimeter: Achieving Zero Trust with NGINX Plus and SSO/Rest

The move to cloud computing means that more of our data, our operations, and our business are being repositioned to face the public Internet; sensitive assets are no longer necessarily tucked away in the corporate data center, accessible only to on‑site personnel. The new risks resulting from this paradigm shift are increasingly rendering existing access control models insufficient and are rapidly driving the security community to embrace the “Zero Trust” approach to security as the gold standard for resource protection.

In a Zero Trust architecture, there is no longer a trusted network inside a corporate perimeter and an untrusted world outside. Instead, “microperimeters” are constructed around data and resources, allowing fine‑grained access control policies to be enforced at all locations. (For a detailed discussion of Zero Trust, see the Appendix.)

Now, with the introduction of IDF Connect’s SSO/Rest Plug‑In for NGINX Plus, the world’s most widely used web server for high‑volume sites is able to function as a key component of a Zero Trust security architecture.

With SSO/Rest, NGINX Plus Becomes a Key Component of Zero Trust Web Access Management

To be fully effective, Zero Trust web access management (WAM) needs to begin at the frontmost line of your infrastructure: at the load balancers and web servers. This frontend web layer forms the natural boundary of your environment, which makes it the right place for access enforcement to begin and makes NGINX Plus, which so often occupies that layer, a natural enforcement point.

But extending this protection all the way to the periphery can be a challenge. Several of the major WAM vendors still do not support NGINX and NGINX Plus, so organizations have had either to forgo making full use of NGINX Plus as part of their access enforcement perimeter or even to keep legacy web servers in place simply to maintain the protection of their enterprise WAM platforms. Until now, no enterprise WAM tool has been able to work smoothly with cloud‑deployed applications.

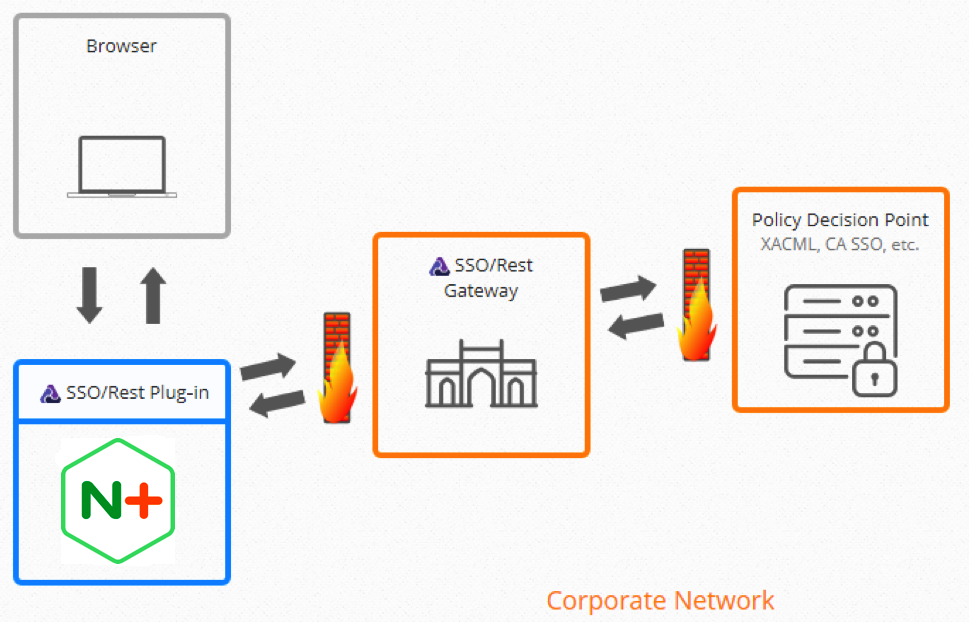

Fortunately, this is no longer the case. IDF Connect’s SSO/Rest Plug‑In for NGINX Plus now lets you integrate the full capabilities of your WAM platform with NGINX Plus. An NGINX Plus Certified Module, the plug‑in is simple to install and configure and is transparent to both end users and administrators. Ultra‑lightweight and engineered for the cloud, it replaces the heavy, chatty, and frequently NGINX Plus‑incompatible WAM agents that until now have been required for complete WAM integration. The plug‑in works in conjunction with the SSO/Rest Gateway, a hardened server that sits in the DMZ, to communicate securely with authorization Policy Decision Points deep in the data center.
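The division of labor described above follows the familiar enforcement‑point/decision‑point pattern. The following minimal Python sketch illustrates that pattern only; it is not the SSO/Rest protocol or API. The enforcement point (the role the plug‑in plays inside NGINX Plus) holds no policy and simply acts on the answer from a decision function (standing in for the Gateway and the Policy Decision Points behind it, which in reality would be a network call). Every name here (`AccessRequest`, `evaluate`, `enforce`, the `/login` URL, the sample policy) is hypothetical.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, Optional, Tuple
from urllib.parse import quote


class Decision(Enum):
    ALLOW = "allow"   # pass the request through to the protected app
    LOGIN = "login"   # no valid session: send the user to the login form
    DENY = "deny"     # authenticated, but not authorized for this resource


@dataclass(frozen=True)
class AccessRequest:
    path: str
    session_user: Optional[str]  # None when the request carries no valid session


@dataclass
class EnforcementResult:
    status: int  # 200 here stands for "forward to the upstream app"
    headers: Dict[str, str] = field(default_factory=dict)


# Hypothetical policy held by the decision point, not by the enforcement point.
PUBLIC_PREFIXES: Tuple[str, ...] = ("/login",)
USER_PREFIXES: Dict[str, Tuple[str, ...]] = {"alice": ("/testweb/",)}


def evaluate(req: AccessRequest) -> Decision:
    """Stand-in for the remote decision point (Gateway plus PDP)."""
    if req.path.startswith(PUBLIC_PREFIXES):
        return Decision.ALLOW
    if req.session_user is None:
        return Decision.LOGIN
    allowed = USER_PREFIXES.get(req.session_user, ())
    # str.startswith(()) is False, so users with no entry are denied.
    return Decision.ALLOW if req.path.startswith(allowed) else Decision.DENY


def enforce(req: AccessRequest) -> EnforcementResult:
    """Enforcement point: acts on the decision and holds no policy of its own."""
    decision = evaluate(req)
    if decision is Decision.ALLOW:
        return EnforcementResult(200)
    if decision is Decision.LOGIN:
        # Remember where the user was going so the login form can send them back.
        target = quote(req.path, safe="")
        return EnforcementResult(302, {"Location": "/login?target=" + target})
    return EnforcementResult(403)
```

For example, an anonymous request for `/testweb/index.html` yields a 302 redirect to the login form, while the same request from `alice` is passed through. Keeping all policy behind `evaluate` is what lets a thin enforcement point run anywhere, including in the cloud.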

Together, the SSO/Rest Plug‑In and Gateway solve the cloud‑incompatibility problems plaguing all traditional enterprise WAM tools. This means that enterprises can now move their applications to the cloud with the full power and capabilities of their existing WAM platforms – single sign‑on, authentication, and access control enforcement. This neatly solves a central problem that faces organizations with well‑established WAM solutions: how to adapt to the new requirements of cloud‑based applications without the expensive process of ripping out and replacing their well‑functioning, on‑premises IAM solutions.

Under the Hood: Configuring the SSO/Rest Plug‑In for NGINX Plus

Shown here is a sample server‑level configuration file for the SSO/Rest Plug‑In for NGINX Plus, followed by descriptions of the SSO/Rest‑specific directives.

```nginx
server {
    listen 80 default_server;
    server_name nginx.idfconnect.net;

    # Accept request header names that contain underscores
    # (NGINX ignores such headers by default)
    underscores_in_headers on;

    error_log /etc/nginx/log/debug.log debug;

    # SSO/Rest Plug-In Configuration
    SSORestEnabled on;
    SSORestGatewayUrl https://www.idfconnect.net/ssorest3/service/gateway/evaluate;
    SSORestPluginId nginxtest;
    SSORestSecretKey ********;   # redacted; supplied by your SSO administrator
    SSORestUseServerNameAsDefault on;
    SSORestSSOZone IDFC;         # our session cookies are IDFCSESSION
    SSORestIgnoreExt .txt .png .css .jpeg .jpg .gif;
    SSORestIgnoreUrl /ignoreurl1.html /ignoreurl2.html;
}
```

- `SSORestEnabled` – `on` or `off`, to enable or disable the plug‑in. When it is disabled, the web app is no longer protected.
- `SSORestGatewayUrl` – The URL of the SSO/Rest Gateway.
- `SSORestPluginId` – The identifier of this plug‑in instance. This value is provided by your SSO administrator.
- `SSORestSecretKey` – The secret key of the plug‑in. This value is provided by your SSO administrator. Key usage is optional.
- `SSORestUseServerNameAsDefault` – `on` or `off`, to tell the plug‑in whether to use the server name (as set by the NGINX `server_name` directive) as the default hostname when a request has no `Host` header. This keeps warning messages from flooding the logs when, for instance, frontend load balancers or network monitors send polling requests to NGINX without a `Host` header.
- `SSORestSSOZone` – Tells the plug‑in to send to the Gateway only those request cookies whose names begin with the specified prefix; in the sample configuration, `IDFC` matches the `IDFCSESSION` session cookie. Separate multiple zone prefixes with commas.
- `SSORestIgnoreExt` – An optional space‑delimited list of file extensions that the plug‑in ignores (and auto‑authorizes).
- `SSORestIgnoreUrl` – An optional space‑delimited list of URLs that the plug‑in ignores (and auto‑authorizes).
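Several of these directives imply a little per‑request logic that runs before the plug‑in contacts the Gateway. The Python sketch below models that logic as we read it from the directive descriptions: skip ignored extensions and URLs, fall back to `server_name` when there is no `Host` header, and forward only cookies whose names start with an SSO zone prefix. It is an illustration, not the plug‑in’s code; `PluginConfig`, `should_bypass`, `effective_host`, `zone_cookies`, and `parse_zones` are hypothetical names, and details such as case‑insensitive extension matching and ignoring the query string are assumptions.

```python
import posixpath
from dataclasses import dataclass
from typing import Dict, Optional, Tuple
from urllib.parse import urlsplit


def parse_zones(value: str) -> Tuple[str, ...]:
    """Zone prefixes are comma-separated, e.g. "IDFC, ACME"."""
    return tuple(z.strip() for z in value.split(",") if z.strip())


@dataclass(frozen=True)
class PluginConfig:
    # Defaults mirror the sample server block above.
    server_name: str = "nginx.idfconnect.net"
    use_server_name_as_default: bool = True
    sso_zones: Tuple[str, ...] = ("IDFC",)
    ignore_ext: Tuple[str, ...] = (".txt", ".png", ".css", ".jpeg", ".jpg", ".gif")
    ignore_url: Tuple[str, ...] = ("/ignoreurl1.html", "/ignoreurl2.html")


def should_bypass(cfg: PluginConfig, uri: str) -> bool:
    """True if the request is auto-authorized without consulting the Gateway."""
    path = urlsplit(uri).path  # assumption: the query string is not matched
    if path in cfg.ignore_url:
        return True
    ext = posixpath.splitext(path)[1].lower()  # assumption: case-insensitive
    return ext in cfg.ignore_ext


def effective_host(cfg: PluginConfig, host_header: Optional[str]) -> Optional[str]:
    """Hostname to report; falls back to server_name when that is enabled."""
    if host_header:
        return host_header
    return cfg.server_name if cfg.use_server_name_as_default else None


def zone_cookies(cfg: PluginConfig, cookies: Dict[str, str]) -> Dict[str, str]:
    """Keep only cookies whose names start with one of the SSO zone prefixes."""
    return {name: value for name, value in cookies.items()
            if name.startswith(cfg.sso_zones)}
```

With the sample settings, a request for `/images/logo.png` never reaches the Gateway, and of the cookies `IDFCSESSION` and `_ga` only `IDFCSESSION` is forwarded.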

NGINX Plus and the SSO/Rest Plug‑In in Operation

Here we walk through defining the SSO/Rest Plug‑In for NGINX Plus, then accessing and logging in to an application that the plug‑in and NGINX Plus server are protecting. Our sample website uses CA SSO (aka “SiteMinder”) as its backend access manager, backed by an LDAP user directory.

We start with a basic NGINX Plus server:

Next, we define the plug‑in, just as we would do for any other access control agent:

The salient points here are, first, that the access manager sees NGINX Plus as it would any other on‑premises web server, even if the NGINX Plus server is cloud‑based; and second, that SSO/Rest can either be your access manager or work with your existing access manager.

Here we see that NGINX Plus is the front door to our test app, testweb:

We click the Log In link, and, as expected, NGINX Plus issues a redirect to the login form, which is also served by NGINX Plus:

After we successfully log in, we can see the headers, username, and other attributes asserted by the plug‑in to let the application know the identity of the user and other information about the user’s session:
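Header‑based identity assertion has two sides, and a minimal Python sketch makes both explicit. It assumes a hypothetical `X-SSO-` header prefix; the header names SSO/Rest actually asserts depend on your WAM configuration and are not shown here. On the enforcement side, any identity headers sent by the client are discarded before the asserted ones are added, so the application can trust that these values came from the plug‑in. On the application side, the app simply reads them. `assert_identity` and `current_user` are illustrative names.

```python
from typing import Dict, Mapping, Optional

IDENTITY_PREFIX = "x-sso-"  # hypothetical prefix; compare header names lowercased


def assert_identity(client_headers: Mapping[str, str],
                    attributes: Mapping[str, str]) -> Dict[str, str]:
    """Enforcement side: build the header set forwarded to the upstream app."""
    # Drop anything the client tried to pass off as an asserted identity header.
    forwarded = {k: v for k, v in client_headers.items()
                 if not k.lower().startswith(IDENTITY_PREFIX)}
    # Add the attributes asserted for the authenticated session.
    for name, value in attributes.items():
        forwarded["X-SSO-" + name] = value
    return forwarded


def current_user(headers: Mapping[str, str]) -> Optional[str]:
    """Application side: read the asserted username (case-insensitive lookup)."""
    for k, v in headers.items():
        if k.lower() == IDENTITY_PREFIX + "user":
            return v
    return None
```

If a client sends its own `X-SSO-User: mallory` header, it is stripped, and the application sees only the username asserted for the real session.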

Strengthen, Secure, Simplify

SSO/Rest significantly improves NGINX Plus’s potential to help organizations achieve Zero Trust WAM:

- It enables NGINX Plus to work with all major enterprise WAM platforms, thereby allowing NGINX Plus to play a central role in extending these tools’ full security functionality to systems outside the data center.

- SSO/Rest positions NGINX Plus load balancers, sitting in front of the web servers, as obvious candidates for bolstering an organization’s authorization perimeter.

- SSO/Rest gives organizations an attractive way to greatly simplify their infrastructure. With no need to keep legacy web servers in place only to maintain compatibility with a WAM platform, everything can now be NGINX Plus: a single tool that functions as a load balancer, a web server, and a front‑facing authorization enforcement point.

Appendix: Zero Trust WAM

The Zero Trust approach to security was introduced in 2010 by John Kindervag of Forrester Research. There are three central principles to a Zero Trust architecture.[1][2] First and foremost: ensure that all resources are accessed securely, regardless of location – no access to a resource is allowed until both the requester has been authenticated and the request for access has been authorized. Second: adopt the principle of least privilege and strictly enforce access control. Third: inspect and log all traffic in real time and continually re‑evaluate every request.
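The three principles can be read as requirements on a single access check. The minimal Python sketch below is hypothetical throughout (the grant table, the `Request` fields, and the in‑memory `AUDIT_LOG` are invented for the example): a request is denied unless the requester is authenticated, the session is still within its centrally set limit, and an explicit least‑privilege grant covers the action; every request is evaluated from scratch, and every decision, allow or deny, is recorded.

```python
import time
from dataclasses import dataclass
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical least-privilege grants: each user may perform only the listed
# (action, resource) pairs; anything not listed is denied.
GRANTS: Dict[str, Set[Tuple[str, str]]] = {"alice": {("read", "/reports/q3")}}

# Stand-in for a central audit log; a real deployment would ship these
# records to a log pipeline instead of keeping them in memory.
AUDIT_LOG: List[dict] = []


@dataclass(frozen=True)
class Request:
    user: Optional[str]         # None if the requester has not authenticated
    session_expires_at: float   # epoch seconds, set by central session policy
    action: str
    resource: str


def authorize(req: Request, now: Optional[float] = None) -> bool:
    """Decide one request from scratch; no decision is cached between requests."""
    now = time.time() if now is None else now
    if req.user is None:
        allowed, reason = False, "unauthenticated"
    elif now >= req.session_expires_at:
        allowed, reason = False, "session expired"
    elif (req.action, req.resource) in GRANTS.get(req.user, set()):
        allowed, reason = True, "explicit grant"
    else:
        allowed, reason = False, "no matching grant"  # default deny
    AUDIT_LOG.append({"user": req.user, "action": req.action,
                      "resource": req.resource, "allowed": allowed,
                      "reason": reason, "at": now})
    return allowed
```

Note that the default branch denies: access is the exception that must be granted, not the rule that must be revoked.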

In the context of WAM, Zero Trust means:

- Every request must be vetted before it ever touches an app. This is the standard against which every web‑based access control solution must be measured. When an authenticated user attempts to access a resource (a file, a page, a piece of data, a tool, and so on), is the request checked against the user’s privileges? Is the user authorized to see the requested resource? And if not, is the request denied?

  To fully achieve the microperimeters called for by Zero Trust, the run‑time enforcement of this authorization process must be performed in front of the app rather than by the app itself (that is, “programmatically”).

  A direct corollary of this central requirement is that session duration and timeouts must be centrally managed. When authorization expires, does the session end when it is supposed to?

- Strict, “least privilege” access control policies must be enforced and centrally maintained. Traditionally this meant using a Role‑Based Access Control (RBAC) system, but modern access control systems frequently use the more flexible Attribute‑Based Access Control (ABAC) model. Whatever the system, the definition and maintenance of access control rules needs to be centralized, not distributed among individual applications.

- All authentications, access requests, and authorizations must be centrally logged. This enables two crucial security mechanisms: comprehensive audits and risk analytics. In risk analytics, each access request is assigned a risk score, which is then evaluated against risk policies to determine the appropriate access control response (for example, block, challenge for stronger authentication, and so on).
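Risk analytics, as described in the last item above, reduces each request to a score and then maps the score to a response through a policy. The Python sketch below shows that two‑step shape under loudly hypothetical assumptions: the four signals, their weights, and the thresholds are invented for illustration, and real products derive scores from far richer data.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Tuple


class Response(Enum):
    ALLOW = "allow"
    CHALLENGE = "challenge"  # e.g. require a stronger authentication factor
    BLOCK = "block"


@dataclass(frozen=True)
class Signals:
    new_device: bool
    unusual_location: bool
    failed_logins_last_hour: int
    sensitive_resource: bool


# Hypothetical weights for the boolean signals, plus a per-failure penalty.
WEIGHTS = {"new_device": 25, "unusual_location": 30, "sensitive_resource": 20}
PER_FAILED_LOGIN = 10


def risk_score(s: Signals) -> int:
    """Combine signals into a score capped at 100."""
    score = PER_FAILED_LOGIN * s.failed_logins_last_hour
    for name, weight in WEIGHTS.items():
        if getattr(s, name):
            score += weight
    return min(score, 100)


# Hypothetical risk policy: (exclusive upper bound, response), checked in order;
# anything at or above the last bound is blocked.
POLICY: List[Tuple[int, Response]] = [(40, Response.ALLOW), (70, Response.CHALLENGE)]


def respond(s: Signals) -> Response:
    score = risk_score(s)
    for limit, response in POLICY:
        if score < limit:
            return response
    return Response.BLOCK
```

Keeping the score and the policy separate means the thresholds can be tuned centrally without touching how signals are collected.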

Fundamentally, Zero Trust WAM calls for centralizing the definitional and logging components of access control while distributing its enforcement components. The underlying motive that reconciles these two apparently contradictory moves is the goal of eliminating reliance on application‑based, or programmatic, access control.
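Central definition with distributed enforcement can be illustrated with a minimal, hypothetical Python sketch. One `PolicyStore` holds the rules and the decision log; several `EnforcementPoint` instances (think of NGINX Plus instances in different locations) hold no rules and only ask the store for decisions. The attribute names and the single ABAC‑style rule are invented for the example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Attributes = Dict[str, Any]
Rule = Callable[[Attributes, Attributes], bool]  # (subject, resource) -> allowed?


def can_read(subject: Attributes, resource: Attributes) -> bool:
    """ABAC-style rule: decided from attributes of the user and the resource."""
    return (subject.get("department") == resource.get("owner_department")
            and subject.get("clearance", 0) >= resource.get("sensitivity", 0))


@dataclass
class PolicyStore:
    """Central part: the one place rules are defined and decisions are logged."""
    rules: Dict[str, Rule] = field(default_factory=dict)
    log: List[Tuple[str, Any, str, Any, bool]] = field(default_factory=list)

    def decide(self, point: str, action: str,
               subject: Attributes, resource: Attributes) -> bool:
        rule = self.rules.get(action)
        allowed = rule is not None and rule(subject, resource)  # no rule -> deny
        self.log.append((point, subject.get("id"), action, resource.get("id"), allowed))
        return allowed


@dataclass
class EnforcementPoint:
    """Distributed part: sits in front of an app and holds no rules of its own."""
    name: str
    store: PolicyStore

    def handle(self, action: str, subject: Attributes, resource: Attributes) -> int:
        return 200 if self.store.decide(self.name, action, subject, resource) else 403
```

Because both enforcement points share one store, changing a rule in one place changes behavior everywhere, every decision lands in one log, and no application contains its own access control code.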

Zero Trust WAM is what today’s world requires. Fortunately, it is also what enterprise WAM tools (such as CA Single Sign‑On, Oracle Access Manager, and IBM Tivoli Access Manager) have, for years, been able to guarantee for on‑site, integrated applications. Unfortunately, these WAM tools are, for the most part, aging “legacy” tools that often do not adequately support major requirements of modern architectures, such as cloud deployment and containerization. The challenge lies in extending the perimeter to encompass applications and resources that do not lie deep within the protected environment.

[1] Getting Started with a Zero Trust Approach to Network Security. Palo Alto Networks, 2016.

[2] The Eight Business and Security Benefits of Zero Trust. Chase Cunningham and Jeff Pollard, Forrester Research, August 24, 2018.

The post Securing the Perimeter: Achieving Zero Trust with NGINX Plus and SSO/Rest appeared first on NGINX.
