The mTLS Architecture in NGINX Service Mesh

One thing I’ve learned over the past few years is that our data is one of the most valuable things we own. Hackers know this and will do anything to get at it, and the automated attacks they launch make it easier than ever. I once made the mistake of leaving an SSH port on my Raspberry Pi open on the Internet. When I looked at the logs months later, I noticed multiple IP addresses had been hammering it with brute‑force password guessing scripts. I immediately closed off the port and made a note to check logs more frequently. Though a breach of my Raspberry Pi wouldn’t have got the hackers much, a data breach of a company or government can cost millions of dollars and have effects that last for years.

As we adopt microservices and Kubernetes, we also leave a potential attack surface open. Service-to-service communication among microservices puts more data on the wire than a monolith, which does all of its communication in memory. Cleartext data on the wire is a prime candidate for passive monitoring, even if the communicating services are behind a firewall.

One of the biggest reasons to adopt a service mesh is to govern service-to-service traffic. The combination of sidecars and the control plane enables centralized management and control of both ends of the connection with mutual TLS (mTLS) to encrypt and authenticate data on the wire. mTLS extends standard TLS by having the client as well as the server present a certificate and mutually authenticate each other. With a service mesh, mTLS can be implemented in a “zero‑touch” manner, meaning developers do not have to retrofit their applications with certificates or even know that mutual authentication is taking place.

In this blog we provide an overview of how we implement mTLS in NGINX Service Mesh.

Overview

NGINX Service Mesh employs the open source SPIRE software as its central certificate authority (CA). SPIRE handles the full lifecycle of a certificate: creation, distribution, and rotation. Within the service mesh, NGINX Plus uses SPIRE‑issued certificates to establish mTLS sessions and encrypt traffic.

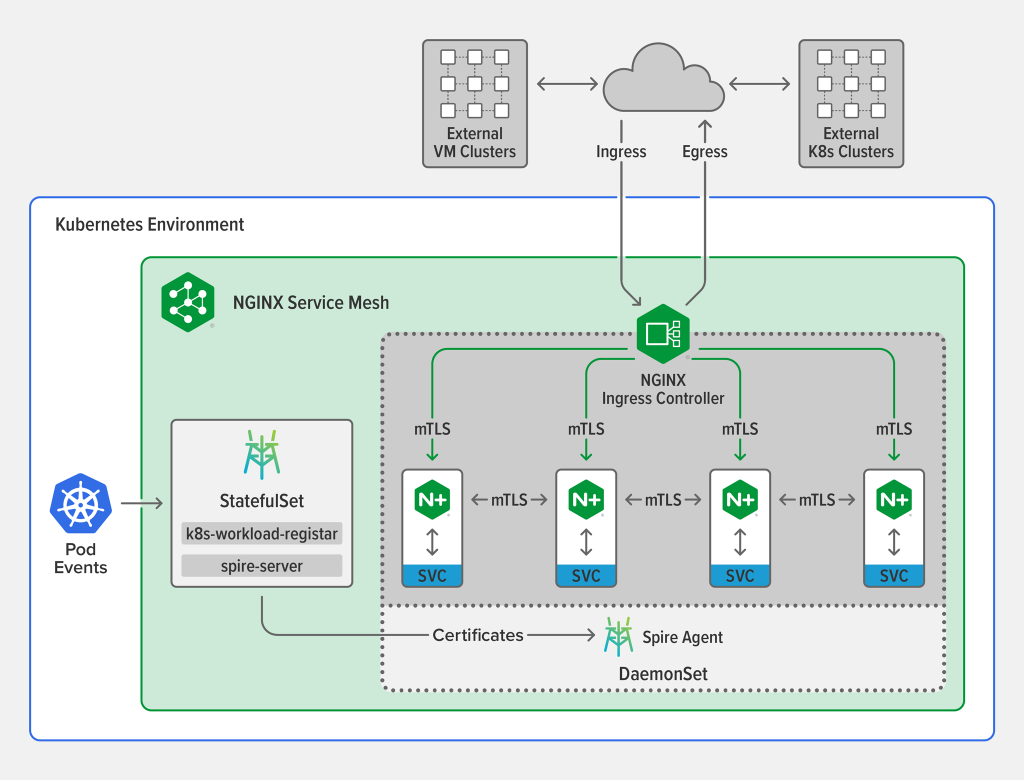

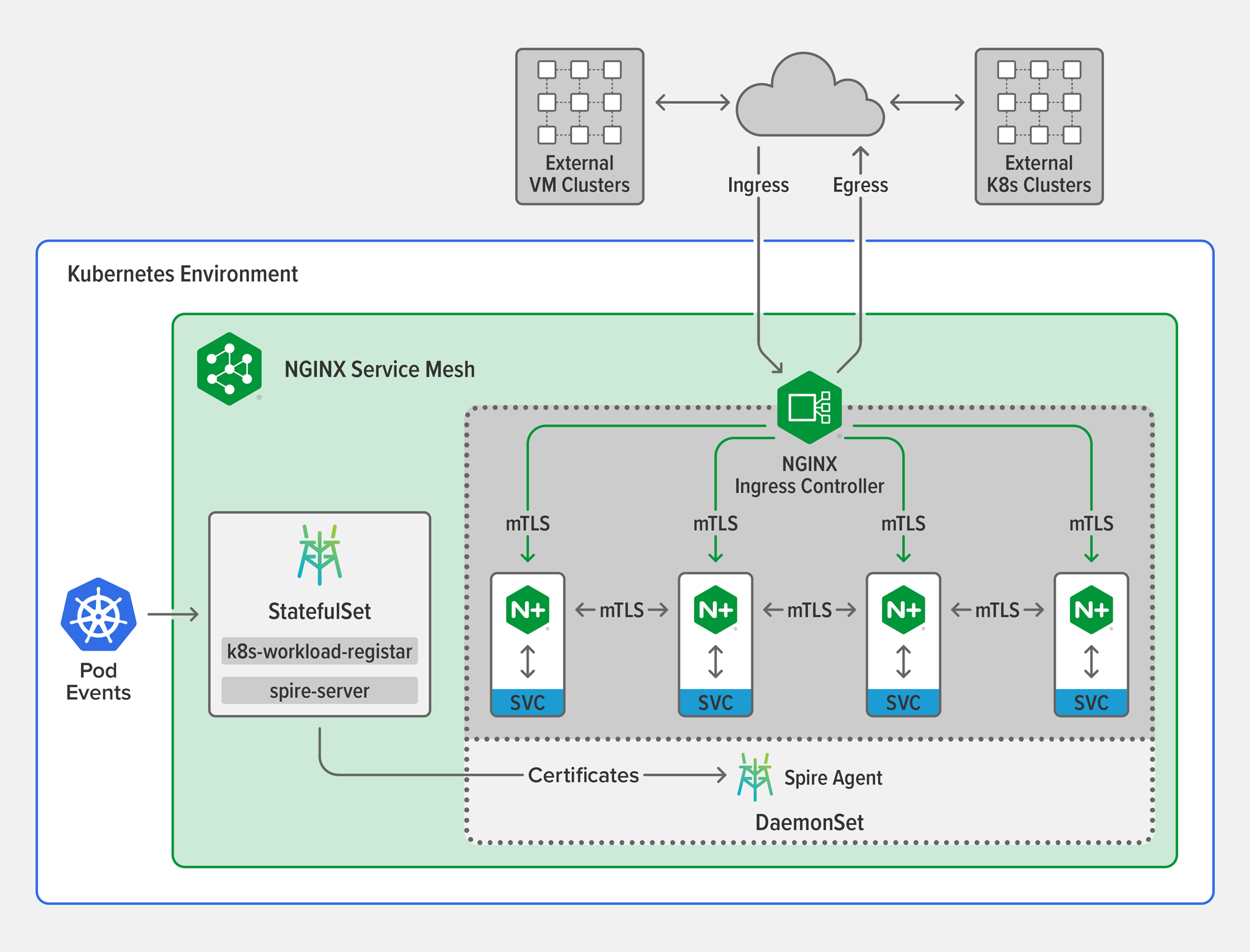

The key components in the diagram are:

- SPIRE Server – The SPIRE Server runs as a Kubernetes StatefulSet. It's composed of two containers, the actual spire-server and the k8s-workload-registrar:

  - spire-server – The heart of the NGINX Service Mesh mTLS architecture, spire-server is the CA that issues certificates for workloads and pushes them to the SPIRE Agent (see below). It can be the root CA for all services in the mesh or an intermediate CA in the trust chain.

  - k8s-workload-registrar – When a new pod is created, k8s-workload-registrar makes API calls to request that spire-server generate a new certificate. It communicates with spire-server through a Unix socket. The k8s-workload-registrar container is based on a Kubernetes Custom Resource Definition (CRD).

- SPIRE Agent – Runs as a Kubernetes DaemonSet, meaning one copy runs per node. A SPIRE Agent has two main functions:

  - Receives certificates from the SPIRE Server and stores them in a cache.

  - “Attests” each pod that comes up, meaning that it asks the Kubernetes system to provide information about the pod – including its UID, name, and namespace – which it then uses to look up the corresponding certificate.

- NGINX Plus sidecar – Consumes the certificates generated and distributed by SPIRE and handles the entire mTLS workflow, exchanging and verifying certificates.

The Certificate Lifecycle in NGINX Service Mesh

With an understanding of the key components in NGINX Service Mesh’s mTLS architecture, we’re ready to look at how they work together to create a self‑contained mTLS system.

Creation

The first stage in the mTLS workflow is creating the actual certificate. Let’s step through what happens when you deploy a Kubernetes pod in NGINX Service Mesh:

- The pod is deployed.

- The NGINX Service Mesh control plane injects the sidecar into the pod spec using a mutating webhook.

- In response to a “pod created” event notification, k8s-workload-registrar gathers the information needed to create the certificate, such as the ServiceAccount name.

- k8s-workload-registrar makes an API call to spire-server over a Unix socket to request a certificate for the pod.

- spire-server mints a certificate for the pod.
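The registration request in the last steps can be pictured as a small data structure. What follows is a minimal sketch, not the k8s-workload-registrar code: `PodInfo`, `RegistrationRequest`, `build_registration`, the `example.org` trust domain, and the SPIFFE ID layout are all assumptions made for illustration. Only the use of the ServiceAccount name and the pod UID comes from this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PodInfo:
    """Facts gathered about a newly created pod (hypothetical type)."""
    uid: str
    namespace: str
    service_account: str


@dataclass(frozen=True)
class RegistrationRequest:
    """What a registrar might ask the CA to issue (hypothetical type)."""
    spiffe_id: str      # identity to embed in the certificate
    selectors: tuple    # properties a pod must have to receive it


def build_registration(pod, trust_domain="example.org"):
    # Assumed identity layout: namespace plus ServiceAccount name.
    spiffe_id = f"spiffe://{trust_domain}/ns/{pod.namespace}/sa/{pod.service_account}"
    # The pod UID ties the certificate to exactly one pod.
    selectors = (f"k8s:pod-uid:{pod.uid}",)
    return RegistrationRequest(spiffe_id=spiffe_id, selectors=selectors)
```

Keying the selector on the pod UID means a replacement pod, even one with the same name and ServiceAccount, needs its own registration.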

Distribution

The new certificate needs to be securely distributed to the correct pod:

- The SPIRE Agents fetch the new certificate and store it in their caches. The SPIRE Agents and Server use gRPC to communicate, as detailed in Bootstrapping.

- The injected pod comes up, with the NGINX Plus sidecar injected.

- The sidecar connects through a Unix socket to the SPIRE Agent running on the same node.

- The SPIRE Agent attests the pod, gathers the correct certificate, and sends it to the sidecar through the Unix socket (for details, see Pod Attestation).
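One reason a Unix socket suits this hand-off is that, on Linux, the listening side can ask the kernel which process is on the other end instead of trusting anything the caller says. The sketch below illustrates that general mechanism with Python's standard library. It is Linux only, it is not the SPIRE Agent's code or wire protocol, and `peer_credentials`, `serve_certificate`, and the placeholder certificate string are hypothetical names used for the demo.

```python
import os
import socket
import struct


def peer_credentials(conn):
    """Return (pid, uid, gid) of the process at the other end (Linux only)."""
    raw = conn.getsockopt(socket.SOL_SOCKET, socket.SO_PEERCRED,
                          struct.calcsize("3i"))
    return struct.unpack("3i", raw)


def serve_certificate(conn, lookup):
    """Send the caller the certificate that `lookup` maps to its PID."""
    pid, _uid, _gid = peer_credentials(conn)
    certificate = lookup(pid)
    if certificate is None:
        raise PermissionError(f"no certificate registered for pid {pid}")
    conn.sendall(certificate.encode())


# Demo: both ends live in this process, so the peer PID is our own PID.
agent_end, sidecar_end = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)
cache = {os.getpid(): "PLACEHOLDER-CERTIFICATE"}  # stand-in for the agent cache
serve_certificate(agent_end, cache.get)
received = sidecar_end.recv(1024).decode()
agent_end.close()
sidecar_end.close()
```

In the mesh, the lookup step would go from the PID to the pod and its selectors before a certificate is chosen, as described in Pod Attestation.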

Enforcement

NGINX Plus now has access to the certificate and can use it to perform mTLS. Let’s step through how it’s used when the pod tries to connect to a server that also has a certificate issued by SPIRE.

- The application running in the pod initiates a connection to a service.

- The NGINX Plus sidecar intercepts the connection using iptables NAT redirect.

- NGINX Plus initiates a TLS connection to the sidecar of the destination service.

- The server‑side NGINX Plus sends its certificate to the client along with a request for the client’s certificate.

- The client NGINX Plus validates the server certificate up to the trust root and sends its own certificate to the server.

- The server validates the client certificate up to the trust root.

- Both sides having mutually authenticated, standard TLS key exchange can now occur.
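The difference between ordinary TLS and mTLS in the steps above comes down to one setting on the server side: whether a client certificate is required. In NGINX Service Mesh this is handled by NGINX Plus, so the following Python sketch only illustrates the idea using the standard `ssl` module. `server_context` and `client_context` are hypothetical helpers, and the file arguments are optional so the sketch runs without real certificates.

```python
import ssl


def server_context(cert_file=None, key_file=None, ca_file=None):
    """TLS settings for the side that accepts connections."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Plain TLS servers default to CERT_NONE; mTLS demands a client cert.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)    # certificate we present
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)   # trust root for peers
    return ctx


def client_context(cert_file=None, key_file=None, ca_file=None):
    """TLS settings for the side that initiates connections."""
    # PROTOCOL_TLS_CLIENT already verifies the server certificate.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)    # sent when the server asks
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

Both sides load the same trust root, which is what lets each validate the other's certificate "up to the trust root" as the steps describe.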

Rotation

To keep traffic flowing smoothly, certificates must be rotated before they expire. When a certificate is close to expiring, spire-server issues a new certificate and triggers the rotation process. The new certificate is pushed to the SPIRE Agent, which in turn pushes it through the Unix socket to the NGINX Plus sidecar.
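"Close to expiring" has to be turned into a concrete rule somewhere. The sketch below shows one plausible rule: rotate once a configurable fraction of the certificate's lifetime is all that remains. The function name `needs_rotation` and the default of one half are assumptions for illustration, not values taken from NGINX Service Mesh or SPIRE configuration.

```python
from datetime import datetime, timedelta, timezone


def needs_rotation(not_before, not_after, now, remaining_fraction=0.5):
    """Return True once only `remaining_fraction` of the lifetime is left."""
    lifetime = not_after - not_before
    remaining = not_after - now
    return remaining <= lifetime * remaining_fraction


# Example: a certificate valid for one hour.
issued = datetime(2024, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(hours=1)
early = needs_rotation(issued, expires, issued + timedelta(minutes=20))
late = needs_rotation(issued, expires, issued + timedelta(minutes=40))
```

Rotating well before expiry leaves time to retry if the new certificate cannot be delivered on the first attempt.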

Public Key Infrastructure

SPIRE can be the trust root in your NGINX Service Mesh deployment or plug in to your existing public key infrastructure (PKI). For more information, see Deploy Using an Upstream Root CA in the NGINX Service Mesh documentation.

Conclusion

Much of the communication that happens in memory with a monolith is instead exposed on the wire with a microservices application. To prevent attackers from snooping this data, you need to encrypt it. NGINX Service Mesh provides a zero‑touch mTLS architecture that encrypts service-to-service communication without requiring modifications to your services. To get started with NGINX Service Mesh, see Introducing NGINX Service Mesh on our blog.

Appendix

Bootstrapping

The SPIRE Server and Agents use SSL/TLS to communicate, raising the question of how we can use certificates to establish secure communication between services that are themselves responsible for generating and distributing certificates. The SPIRE Server accomplishes this with a Notifier plug‑in called k8sbundle.

When the SPIRE Server boots up, it pushes the latest root CA certificate to a Kubernetes ConfigMap resource. ConfigMaps are used to store data that pods can mount as a file volume, as done by the SPIRE Agents. When the SPIRE Agent boots up, it finds the root CA certificate and uses that to validate the certificate presented by the SPIRE Server.

The SPIRE Server uses the process of Node Attestation to validate the SPIRE Agent.

Selectors

For a pod to be issued a particular certificate, it must have a set of properties called selectors. Each certificate issued by SPIRE is associated with a set of selectors that identify the pod it belongs to. NGINX Service Mesh relies on the pod UID to uniquely identify a pod. After a pod comes up, during pod attestation the SPIRE Agent looks up the unique certificate for the pod using the UID as the selector.
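Selector matching can be sketched as a subset test: a certificate is eligible for a pod when every selector attached to the certificate is among the properties the agent attested for that pod. The code below is an illustrative model only. `entry_matches`, `find_certificate`, the `k8s:pod-uid:` string format, and the certificate labels are hypothetical.

```python
def entry_matches(entry_selectors, attested_selectors):
    """True when the pod has every selector the certificate requires."""
    return set(entry_selectors) <= set(attested_selectors)


def find_certificate(entries, attested_selectors):
    """Return the first cached certificate whose selectors the pod satisfies."""
    for entry_selectors, certificate in entries:
        if entry_matches(entry_selectors, attested_selectors):
            return certificate
    return None


# Cache of (selectors, certificate) pairs, keyed on pod UID as in this post.
entries = [
    ({"k8s:pod-uid:00000000-0000-0000-0000-000000000000"}, "cert-for-other-pod"),
    ({"k8s:pod-uid:5beeffac-756e-11ea-91e1-42010a8a008f"}, "cert-for-productpage"),
]

# A pod usually has more attested properties than the certificate requires.
attested = {
    "k8s:pod-uid:5beeffac-756e-11ea-91e1-42010a8a008f",
    "k8s:ns:default",
    "k8s:sa:bookinfo-productpage",
}
```

Because the pod UID is unique, at most one entry in the cache can match a given pod.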

Pod Attestation

Attestation is only one step of the process for distributing a certificate to the correct pod (the fourth step in Distribution), but is a key part of the overall mTLS architecture. Attestation means that the SPIRE Agent makes a request to Kubernetes for all the information available about the pod. A snippet from the logs of the SPIRE Agent shows the information it collects on the pod.

"PID attested to have selectors" pid=1226861 selectors="[type:"unix" value:"uid:2102" type:"unix" value:"gid:1000" type:"k8s" value:"sa:bookinfo-productpage" type:"k8s" value:"ns:default" type:"k8s" value:"pod-uid:5beeffac-756e-11ea-91e1-42010a8a008f" type:"k8s" value:"pod-name:productpage-v1-c7765c886-fvqqj" type:"k8s" value:"container-name:proxy" type:"k8s" value:"pod-label:spire-workload:productpage-v1" type:"k8s" value:"pod-label:version:v1" type:"k8s" value:"pod-label:app:productpage" ]"

Here, for example, the SPIRE Agent collects the pod’s UID (pod‑uid, here 5bee...008f), namespace (ns, here default), and ServiceAccount (sa, here bookinfo‑productpage). These then become selectors to find the correct certificate to issue to the pod. For more information, see Workload Attestation in the SPIRE documentation.

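When debugging attestation, it can help to pull the selectors out of a log line like the one in Pod Attestation. The following is a small sketch that assumes the `type:"…" value:"…"` layout shown in that snippet. `parse_selectors` and `first_value` are hypothetical helpers, and the sample line is shortened from the one in this post.

```python
import re

# Matches pairs such as: type:"k8s" value:"ns:default"
SELECTOR_RE = re.compile(r'type:"(?P<type>[^"]+)"\s+value:"(?P<value>[^"]+)"')


def parse_selectors(line):
    """Return a list of (type, key, value) tuples found in a log line."""
    selectors = []
    for match in SELECTOR_RE.finditer(line):
        # Split on the first colon only: label values contain colons too.
        key, _, value = match["value"].partition(":")
        selectors.append((match["type"], key, value))
    return selectors


def first_value(selectors, sel_type, key):
    """Return the first value recorded for a selector type and key, or None."""
    for found_type, found_key, value in selectors:
        if found_type == sel_type and found_key == key:
            return value
    return None


LOG_LINE = (
    '"PID attested to have selectors" pid=1226861 selectors="['
    'type:"unix" value:"uid:2102" '
    'type:"k8s" value:"sa:bookinfo-productpage" '
    'type:"k8s" value:"ns:default" '
    'type:"k8s" value:"pod-uid:5beeffac-756e-11ea-91e1-42010a8a008f" '
    'type:"k8s" value:"pod-label:app:productpage" ]"'
)

selectors = parse_selectors(LOG_LINE)
pod_uid = first_value(selectors, "k8s", "pod-uid")
```

Keeping the result as a list rather than a dictionary preserves repeated keys such as `pod-label`, which appears once per label.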
The post The mTLS Architecture in NGINX Service Mesh appeared first on NGINX.