What Customers Tell Us They Need for Modern API Management

As a Sales lead for NGINX at F5, I often interact with customers who are using more than one API management framework. One customer I spoke with has five at last count! Why is this? There can be many reasons, but one situation we come across again and again is that as customers transform their applications from monolithic architectures to more microservices‑based architectures, they’re finding that their traditional API management framework no longer satisfies all their requirements.

Before we examine the ways these traditional frameworks are no longer adequate, let’s take a look back at how we got to where we are today…

The Evolution of Software Integration Architecture

During the early 2000s we saw a growing need for an integration layer able to connect various front‑end services to backend services such as mainframes, message queues, and datastores. Middleware tools solved these integration challenges with protocol and message transformation, greatly reducing the complexity of connecting to backend systems. This middleware became known as the enterprise service bus (ESB), and over time ESBs began to offer additional functionality such as authentication, authorization, and auditing. To connect and integrate internal business functions, ESB vendors created APIs that typically used the SOAP messaging protocol and XML‑formatted data. Over time the ESB became a critical infrastructure component for many organizations, which consequently tended to treat it like a “pet” in their infrastructure: a carefully tended, hard‑to‑replace system rather than a disposable one.

Organizations were forced to rethink their business strategies and infrastructure models as Internet traffic grew exponentially and consumers began to use services in new ways, a prominent example being the use of mobile devices for shopping, Internet banking, and social media. Time‑to‑market became a key business driver for many organizations, inspiring lines of business to look for more agile ways to bring new products and services to market. I am sure we have all heard the maxim that it is no longer large companies that eat small ones, but fast ones that eat slow ones!

The rise of mobile devices and the adoption of modern web frameworks led developers to replace SOAP/XML APIs with newer, lighter‑weight REST APIs and JSON‑formatted data. APIs have become such an instrumental way for our devices and applications to communicate that in 2019 Akamai found that 83% of web traffic was API calls. As ESBs became less relevant to the “digital natives” adopting new technologies, ESB vendors tried to hold on to market share by offering API functionality for both legacy SOAP and more modern REST web services. This gave the ESB vendors a quick win, but the market was still moving rapidly towards the microservices era.

Many of the API management frameworks on the market today started life as ESBs. They were built long before the public cloud became mainstream, and predate the rapid adoption of microservices and of practices such as DevOps and CI/CD. This explains why organizations today are finding that traditional API management frameworks no longer satisfy all their requirements: these frameworks were designed before customers were building distributed, microservices‑based architectures that often run inside Kubernetes clusters, in both public and private cloud environments.

Challenges of Traditional API Management Frameworks

As our customers are adopting modern application architectures, they’re finding that their traditional API management frameworks are creating problems rather than solving them. Based on research into human cognition and input from our customers, we at NGINX recently determined that API calls must be processed within 30 milliseconds (ms) to deliver the “real‑time” performance that consumers demand when they interact with your apps.

The architecture of many traditional API frameworks unfortunately makes it difficult to achieve latency below this 30ms threshold. In particular, traditional frameworks typically route API calls through the control plane on their way to the data plane and the API endpoints. This not only adds latency, but also means that the tightly coupled control and data planes must be connected at all times for API calls to be processed. Furthermore, the control plane is often made up of many moving parts, requiring third‑party modules, scripts, and a database just to manage, monitor, and analyze APIs.

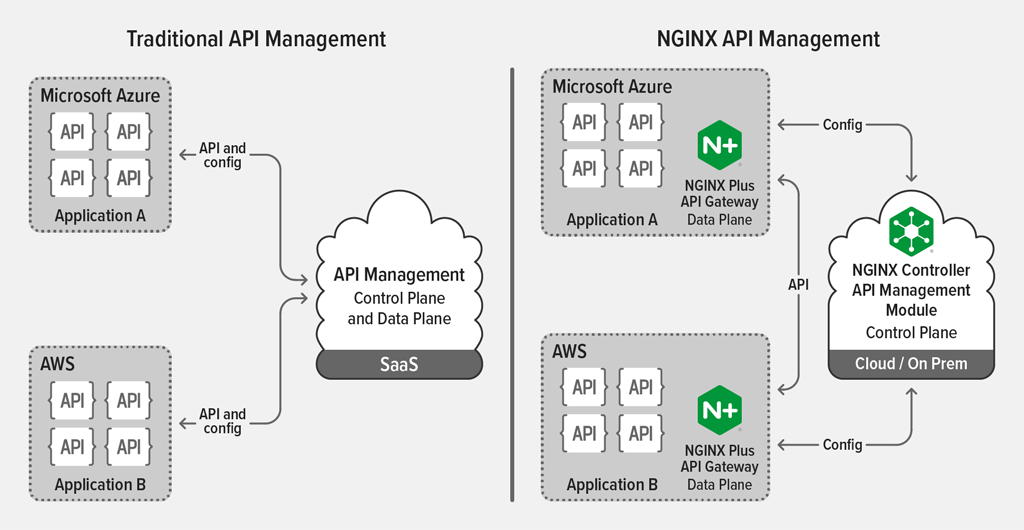

Traditional frameworks are particularly ill‑suited to modern applications that are distributed in nature, composed of microservices that can be located in multiple physical locations or cloud environments. If, for example, you’ve adopted a cloud strategy that involves running workloads in both public and private cloud environments and are using a SaaS‑based or self‑managed traditional API management framework, you probably struggle to achieve low latencies simply because all API traffic has to be authenticated and routed across the network to pass through the tightly coupled control and data planes. This detour out to the framework and back is sometimes referred to as tromboning.
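To make the cost of tromboning concrete, here is a minimal Python sketch that adds up hop latencies along a request path and compares the round trip with the 30ms target. The location names and per‑hop latencies are illustrative assumptions, not measurements from any real deployment; the point is only that every extra traversal of the network is paid twice, once on the request and once on the response.

```python
from typing import Sequence

# Illustrative one-way latencies in milliseconds between hypothetical locations.
# These values are assumptions chosen for the example, not measured data.
HOP_LATENCY_MS = {
    ("public_cloud_app", "saas_api_mgmt"): 12.0,     # out to the SaaS framework
    ("saas_api_mgmt", "private_cloud_api"): 10.0,    # back into the private cloud
    ("public_cloud_app", "local_gateway"): 2.0,      # gateway running near the app
    ("local_gateway", "private_cloud_api"): 1.0,
}

REAL_TIME_BUDGET_MS = 30.0  # the 30ms threshold discussed above


def hop_ms(a: str, b: str) -> float:
    """Return the one-way latency of a hop, assuming links are symmetric."""
    if (a, b) in HOP_LATENCY_MS:
        return HOP_LATENCY_MS[(a, b)]
    return HOP_LATENCY_MS[(b, a)]  # raises KeyError for an unknown hop


def round_trip_ms(path: Sequence[str]) -> float:
    """Latency for a request along `path` plus a response that retraces it."""
    one_way = sum(hop_ms(a, b) for a, b in zip(path, path[1:]))
    return 2 * one_way


if __name__ == "__main__":
    paths = {
        "tromboned via SaaS": ["public_cloud_app", "saas_api_mgmt", "private_cloud_api"],
        "local data plane": ["public_cloud_app", "local_gateway", "private_cloud_api"],
    }
    for name, path in paths.items():
        rtt = round_trip_ms(path)
        print(f"{name}: {rtt:.1f} ms, within budget: {rtt <= REAL_TIME_BUDGET_MS}")
```

With these assumed numbers the tromboned path takes 44.0 ms and the local data plane 6.0 ms. Real figures will differ, but the comparison has the same shape: the detour through the framework is paid on every call, in both directions.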

SaaS‑based offerings create another challenge for some organizations. If you have a zero‑trust network security policy, there is probably a firewall in place between the API management framework and the backend service that exposes the API. Potentially every change to the backend service requires a change to the firewall. I’m not aware of many organizations that allow their AppDev teams to make automated changes on the corporate firewall, so there’s a significant amount of operational overhead that’s very frustrating for dev teams that are aiming to be agile but are continuously blocked and slowed down by corporate‑wide security policies. Routing even internal API calls through a cloud outside the corporate network is especially inefficient and adversely impacts API performance as well as the security posture of the entire organization.

One clear outcome of the rise of public clouds is the adoption of Infrastructure as Code and the placement of infrastructure closer to applications. There is a clear trend towards decentralized infrastructure ownership where, for example, a line of business or a DevOps team can operate and manage its own infrastructure. This is problematic with traditional API management frameworks, which are inherently designed to be operated and managed by one central team. DevOps teams can’t even deploy their own gateways for test, dev, and sandbox environments as part of their CI/CD pipeline. Traditional frameworks also make it next to impossible to take advantage of technologies such as Docker.

As stated earlier, many traditional API management frameworks are made up of many moving parts and rely on third‑party modules, scripts, and a database to manage, monitor, and analyze the APIs. This can make troubleshooting difficult, not only because there are so many moving parts, but also because of the lack of visibility into the internals of the framework. Many of my customers complain that they spend hours in triage when there is an outage or they are experiencing poor performance.

The final challenge posed by these traditional API management frameworks is cost. There is a plethora of different cost models out there including per‑administrator, per‑API, per‑gateway, and combinations of these. All of them have pros and cons, but one thing they have in common is that it is very difficult to forecast what your expenditure will be for the coming year. With SaaS‑based frameworks that charge per API call, I have heard of customers suffering severe sticker shock when they realize they are paying even for malformed calls and during DDoS attacks.
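The sticker‑shock effect of pure per‑call pricing comes down to simple arithmetic: the meter counts every request that reaches the gateway, including malformed calls and attack traffic. The sketch below uses a hypothetical price of $3.50 per million calls and made‑up traffic volumes purely for illustration; it does not reflect any vendor’s actual pricing.

```python
# Hypothetical rate for illustration only; not any vendor's real price.
PRICE_PER_MILLION_CALLS = 3.50


def monthly_bill(valid: int, malformed: int, attack: int,
                 price_per_million: float = PRICE_PER_MILLION_CALLS) -> float:
    """Bill under pure per-call metering: every call that reaches the gateway is charged."""
    billed_calls = valid + malformed + attack
    return billed_calls / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Assumed volumes: a normal month vs. the same month with a DDoS attack.
    normal = monthly_bill(valid=200_000_000, malformed=5_000_000, attack=0)
    ddos = monthly_bill(valid=200_000_000, malformed=5_000_000, attack=2_000_000_000)
    print(f"normal month: ${normal:,.2f}")  # normal month: $717.50
    print(f"DDoS month:   ${ddos:,.2f}")    # DDoS month:   $7,717.50
```

Nothing about the legitimate workload changed between the two months, yet under these assumptions the bill grew more than tenfold, which is why per‑call metering makes annual spend so hard to forecast.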

Modern API Management Requirements for Today’s Needs

Organizations that are modernizing their applications and facing the problems we’ve discussed with traditional API management frameworks clearly need a new breed of API management tools. Here are some of the features my customers have told me they need:

- Decoupled control and data planes that do not require constant connectivity. This allows customers to run the data plane (API gateways) closer to their applications, and greatly reduces latency because API calls no longer have to be routed via the control plane. This is particularly important in containerized environments, where there is a significant amount of east‑west (interservice) traffic.

- An architecture designed for multi‑cloud. Suppose Application A running in Microsoft Azure communicates via API with Application B running in AWS, and the SaaS‑based traditional API management system is running in yet another cloud, or on premises. The API traffic flow is App A → SaaS → App B → SaaS → App A, with an inevitable and significant amount of latency. With a decoupled architecture, you can run the data plane right next to each application and the traffic flow is App A → App B → App A; the location of the control plane is irrelevant because API traffic doesn’t pass through it. This scenario also greatly reduces the additional operational overhead imposed by having a firewall between the SaaS‑based API management framework and App A and App B.

- Self‑service API deployment and management. With a decoupled architecture, each line of business or DevOps team can own its own API gateways while NetOps or SecOps teams are still in control of security policy on the control plane. This requirement is becoming increasingly popular as organizations move towards more agile development methodologies.

We have developed the NGINX API management solution to address these needs based on what we have heard from our customers. What are your API management requirements? We’d love to hear from you in the comments below. In the meantime, get started with a free 30-day trial today or contact us to discuss your use cases.

The post What Customers Tell Us They Need for Modern API Management appeared first on NGINX.

Source: What Customers Tell Us They Need for Modern API Management