Announcing NGINX Plus R16


We are pleased to announce that NGINX Plus Release 16 (R16) is now available. Today’s release is one of the most important in advancing NGINX’s technical vision. With R16, NGINX Plus now functions as a single, elastic ingress and egress tier for all of your applications. What this means is that you can consolidate the functionality of a load balancer, API gateway, and WAF into a single software package that spans any cloud.

Many companies we talk to today have each of these components, but often from different vendors. This drives up cost and complexity as operations teams have to manage each point solution separately. It also reduces performance and reliability as each extra hop adds additional latency and points of failure.

With new features in NGINX Plus R16 you can begin to consolidate and simplify your infrastructure, moving towards architecting an elastic ingress/egress tier for both legacy and modern applications. NGINX Plus R16 includes new clustering features, enhanced UDP load balancing, and DDoS mitigation that make it a more complete replacement for costly F5 BIG-IP hardware and other load‑balancing infrastructure. New support for global rate limiting further makes NGINX Plus a lightweight alternative to many API gateway solutions. Finally, new support for Random with Two Choices load balancing makes NGINX Plus the ideal choice for load balancing microservices traffic in scaled or distributed environments, such as Kubernetes.

Summary of New NGINX Plus R16 Features

New features in NGINX Plus R16 include:

- Cluster‑aware rate limiting – In NGINX Plus R15 we introduced the state sharing module, allowing data to be synchronized across an NGINX Plus cluster. In this release state sharing is extended to the rate limiting feature, enabling you to specify global rate limits across an NGINX Plus cluster. Global rate limiting is an important feature for API gateways and is an extremely popular use case for NGINX Plus.

- Cluster‑aware key‑value store – In NGINX Plus R13 we introduced a key‑value store which can be used, for example, to create a dynamic IP address blacklist or manage HTTP redirects. In this release the key‑value store can now be synchronized across a cluster and includes a new timeout parameter, so that individual key‑value pairs can be automatically removed. With the new synchronization support, the key‑value store can now be used to provide dynamic DDoS mitigation, distribute authenticated session tokens, or build a distributed content cache (CDN).

- Random with Two Choices load balancing – When clustering load balancers, it is important to use a load‑balancing algorithm that does not inadvertently overload individual backend servers. Random with Two Choices load balancing is extremely efficient for scaled clusters and will be the default method in the next release of the NGINX Ingress Controller for Kubernetes.

- Enhanced UDP load balancing – In NGINX Plus R9 we first introduced UDP load balancing. This initial implementation was limited to UDP applications that expect a single UDP packet from the client for each interaction with the server (such as DNS and RADIUS). NGINX Plus R16 can handle multiple UDP packets from the client, enabling us to support more complex UDP protocols such as OpenVPN, voice over IP (VoIP), and virtual desktop infrastructure (VDI).

- AWS PrivateLink support – AWS PrivateLink is Amazon’s technology for creating secure VPN tunnels into a Virtual Private Cloud. With this release you can now authenticate, route, load balance traffic, and optimize how traffic flows within an AWS PrivateLink data center. This functionality is built on top of the new support for PROXY protocol v2.

Additional enhancements include support for OpenID Connect opaque session tokens, the Encrypted Session dynamic module, updates to the NGINX JavaScript module, and much more. NGINX Plus R16 is based on NGINX Open Source 1.15.2 and includes all features in the open source release.

During the development of NGINX Plus R16, we also added a number of significant enhancements to the official NGINX Ingress Controller for Kubernetes, a prominent use case for NGINX Plus.

Learn more about NGINX Plus R16 and all things NGINX at NGINX Conf 2018. We’ll have dedicated sessions, demos, and experts on hand to go deep on all the new capabilities.

Important Changes in Behavior

- The Upstream Conf and Status APIs, replaced and deprecated by the unified NGINX Plus API, have been removed. In August 2017, NGINX Plus R13 introduced the all‑new NGINX Plus API for dynamic reconfiguration of upstream groups and monitoring metrics, replacing the Upstream Conf and Status APIs that previously implemented those functions. As announced at the time, the deprecated APIs continued to be available and supported for a significant period of time, which is now over. If your configuration includes the upstream_conf and/or status directives, you must replace them with the api directive as part of the upgrade to R16.

  For advice and assistance in migrating to the new NGINX Plus API, please see the transition guide on our blog, or contact our support team.

- Discontinued support for end‑of‑life OS versions – NGINX Plus is no longer supported on Ubuntu 17.10 (Artful), FreeBSD 10.3, or FreeBSD 11.0.

  For more information about supported platforms see the technical specifications for NGINX Plus and dynamic modules.

- The NGINX New Relic plug‑in has been updated to use the new NGINX Plus API and is now an open source project. The updated plug‑in works with all versions of NGINX Plus from R13 onward but is no longer supported or maintained by NGINX, Inc.
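
As a rough illustration of the first change above, a configuration that previously used the upstream_conf and status directives might instead expose the NGINX Plus API with the api directive. This is only a sketch for the http context: the listen port and the access rules are assumptions, not taken from the transition guide, and should be adapted to your environment.

```nginx
server {
    listen 8080;              # hypothetical management port

    location /api {
        api write=on;         # takes the place of the removed upstream_conf and status directives
        allow 127.0.0.1;      # hypothetical access control: local clients only
        deny  all;
    }
}
```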

New Features in Detail

Cluster-Aware Rate Limiting

NGINX Plus R15 introduced the zone_sync module which enables runtime state to be shared across all NGINX Plus nodes in a cluster.

The first synchronized feature was sticky‑learn session persistence. NGINX Plus R16 extends state sharing to the rate limiting feature. When deployed in a cluster, NGINX Plus can now apply a consistent rate limit to incoming requests regardless of which member node of the cluster the request arrives at. Commonly known as global rate limiting, applying a consistent rate limit across a cluster is particularly relevant to API gateway use cases, delivering APIs to a service level agreement (SLA) that prevents clients from exceeding a specified rate limit.

NGINX Plus state sharing does not require a primary or master node – all nodes are peers and exchange data in a full mesh topology. An NGINX Plus state‑sharing cluster must meet three requirements:

- Network connectivity between all cluster nodes

- Synchronized clocks

- Configuration on every node that enables the zone_sync module, as in the following snippet.

```nginx
stream {
    resolver 10.0.0.53 valid=20s;

    server {
        listen 9000;
        zone_sync;
        zone_sync_server nginx-cluster.example.com:9000 resolve;
    }
}
```

The zone_sync directive enables synchronization of shared memory zones in a cluster. The zone_sync_server directive identifies the other NGINX Plus instances in the cluster. NGINX Plus supports DNS service discovery so cluster nodes can be identified by hostname, making the configuration identical on all nodes. Note that this is a minimal configuration, without the necessary security controls for production deployment. For more details, see the NGINX Plus R15 announcement and the reference documentation for the zone_sync module.
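
To give an idea of what those security controls might look like, the sketch below adds TLS and address‑based access control to the synchronization listener. The certificate paths and the cluster subnet are hypothetical, and a production deployment would probably also want peer certificate verification; treat this as a starting point rather than a recommended configuration.

```nginx
stream {
    resolver 10.0.0.53 valid=20s;

    server {
        listen 9000 ssl;                                  # encrypt incoming sync connections
        ssl_certificate     /etc/ssl/nginx/cluster.crt;   # hypothetical certificate path
        ssl_certificate_key /etc/ssl/nginx/cluster.key;   # hypothetical key path

        allow 10.0.0.0/24;                                # hypothetical cluster subnet
        deny  all;                                        # reject all other peers

        zone_sync;
        zone_sync_server nginx-cluster.example.com:9000 resolve;
        zone_sync_ssl on;                                 # use TLS for outgoing sync connections
    }
}
```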

Once this configuration is in place on all nodes, rate limits can be applied across a cluster simply by adding the new sync parameter to the limit_req_zone directive. With the following configuration, NGINX Plus imposes a rate limit of 100 requests per second to each client, based on the client’s IP address.

```nginx
limit_req_zone $remote_addr zone=per_ip:1M rate=100r/s sync; # Cluster-aware

server {
    listen 80;

    location / {
        limit_req zone=per_ip; # Apply rate limit here
        proxy_pass http://my_backend;
    }
}
```

Additionally, the state‑sharing clustering solution is independent from the keepalived‑based high‑availability solution for network resilience. Therefore, unlike that solution a state‑sharing cluster can span physical locations.

Cluster-Aware Key-Value Store

NGINX Plus R13 introduced a lightweight, in‑memory key‑value store as a native NGINX module. This module ships with NGINX Plus, enabling solutions that require simple database storage to be configured without installing additional software components. Furthermore, the key‑value store is controlled through the NGINX Plus API so that entries can be created, modified, and deleted through a REST interface.

Use cases for the NGINX Plus key‑value store include dynamic IP address blacklisting, dynamic bandwidth limiting, and caching of authentication tokens.

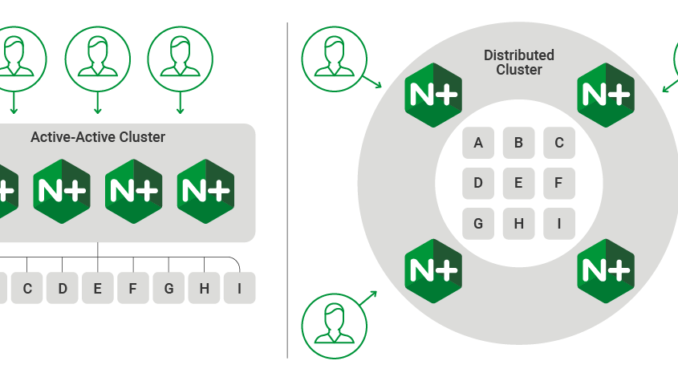

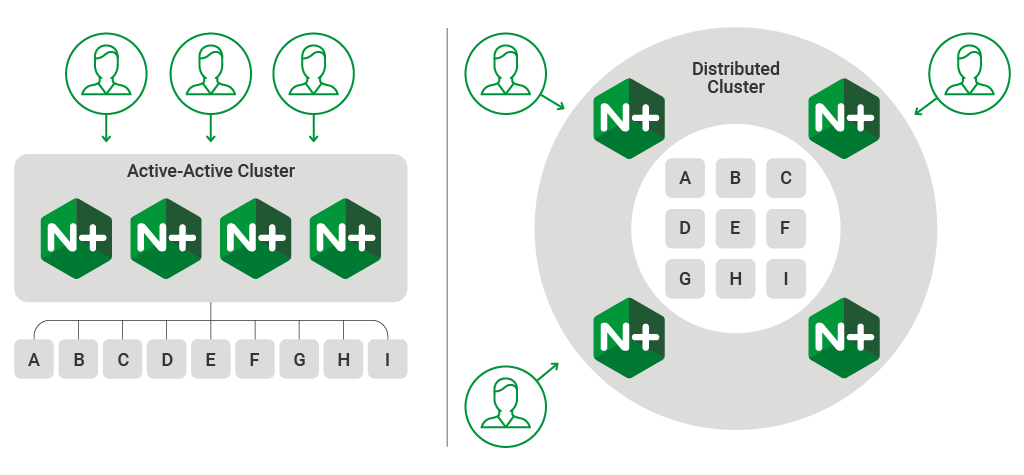

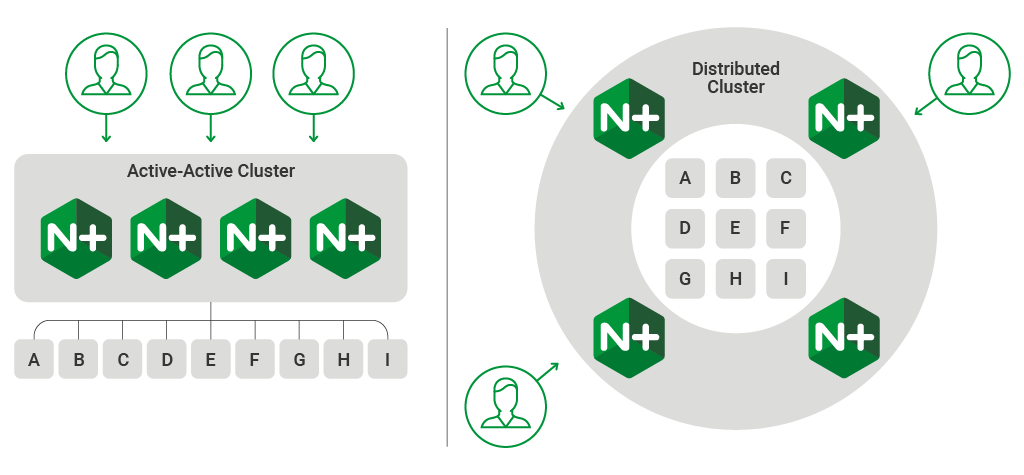

With NGINX Plus R16 the key‑value store is now cluster‑aware so that entries are synchronized across all nodes in a cluster. This means that solutions using the NGINX Plus key‑value store can be deployed to all kinds of clustered environments – active‑passive, active‑active, and also widely distributed.

Like the other cluster‑aware features, you configure synchronization of the key‑value store simply by adding the sync parameter to the keyval_zone directive for a cluster that has already been configured for state sharing (with the zone_sync and zone_sync_server directives).

The key‑value store has also been extended with the ability to set a timeout value for each key‑value pair added to the key‑value store. Such entries automatically expire and are removed from the key‑value store when the specified timeout period elapses. The timeout parameter is mandatory when configuring a synchronized key‑value store.
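
As a minimal sketch of a synchronized key‑value store with a timeout, the following uses the store to manage HTTP redirects. The zone name, size, one‑hour timeout, and the $new_uri variable are illustrative assumptions, and the snippet presumes that zone_sync is already configured on every node.

```nginx
keyval_zone zone=redirects:1M timeout=1h sync;  # hypothetical zone; entries expire after 1 hour
keyval $uri $new_uri zone=redirects;            # look up the request URI in the store

server {
    listen 80;

    location / {
        if ($new_uri) {
            return 301 $new_uri;                # redirect when a matching entry exists
        }
        proxy_pass http://my_backend;
    }
}
```

Entries added through the NGINX Plus API on any one node would then be visible to the others until the timeout removes them.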

Using the NGINX Plus Key-Value Store for Dynamic DDoS Protection

You can combine the new clustering capabilities of NGINX Plus to build a sophisticated solution to protect against DDoS attacks. When a pool of NGINX Plus instances is deployed in an active‑active cluster, or distributed across a wide area network, malicious traffic can arrive at any one of those instances. This solution uses a synchronized rate limit and a synchronized key‑value store to dynamically respond to DDoS attacks and mitigate their effect, as follows.

- A cluster‑aware rate limit catches clients that send more than 100 requests per second, regardless of which NGINX Plus node receives the request.

- When a client exceeds the rate limit, its IP address is added to a “sin bin” key‑value store by making a call to the NGINX Plus API. The sin bin is synchronized across the cluster.

- Further requests from clients in the sin bin are subject to a very low bandwidth limit, regardless of which NGINX Plus node receives them. Limiting bandwidth is preferable to rejecting requests outright, because it does not clearly signal to the client that DDoS mitigation is in effect.

- After ten minutes the client is automatically removed from the sin bin.

The following configuration shows a simplified implementation of this dynamic DDoS mitigation solution.

```nginx
limit_req_zone $remote_addr zone=per_ip:1M rate=100r/s sync; # Cluster-aware rate limit
limit_req_status 429;

keyval_zone zone=sinbin:1M timeout=600 sync; # Cluster-aware "sin bin" with
                                             # 10-minute TTL
keyval $remote_addr $in_sinbin zone=sinbin;  # Populate $in_sinbin with
                                             # matched client IP addresses

server {
    listen 80;

    location / {
        if ($in_sinbin) {
            set $limit_rate 50; # Restrict bandwidth of bad clients
        }

        limit_req zone=per_ip;            # Apply the rate limit here
        error_page 429 = @send_to_sinbin; # Excessive clients are moved to
                                          # this location
        proxy_pass http://my_backend;
    }

    location @send_to_sinbin {
        rewrite ^ /api/3/http/keyvals/sinbin break; # Set the URI of the
                                                    # "sin bin" key-val
        proxy_method POST;
        proxy_set_body '{"$remote_addr":"1"}';
        proxy_pass http://127.0.0.1:80;
    }

    location /api/ {
        api write=on;
        # directives to control access to the API
    }
}
```

Random with Two Choices Load Balancing

It is increasingly common for both application delivery and API delivery environments to use a scaled or distributed load‑balancing tier to receive client requests and pass them to a shared application environment. When multiple load balancers pass requests to the same set of backend servers it is important to use a load‑balancing method that does not inadvertently overload the individual backend servers.

NGINX Plus clusters deployed in an active‑active configuration, or distributed environments with multiple entry points, are common scenarios that can challenge traditional load‑balancing methods because each load balancer cannot have full knowledge of all the requests that have been sent to the backend servers.

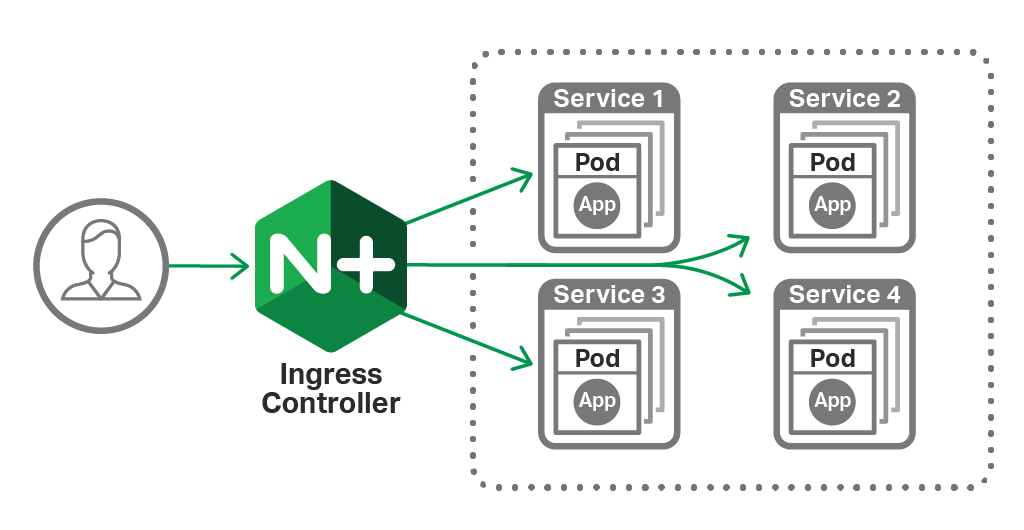

Load balancing inside a containerized cluster has the same characteristics as a scaled‑out active‑active deployment. Kubernetes Ingress controllers deployed in a replica set with multiple instances of the Ingress resource also face the challenge of how to effectively distribute load to the pods delivering each of the services in the cluster.

Workload variance has a huge impact on the effectiveness of distributed load balancing. When each request imposes the same load on the backend server, measuring how quickly each server has responded to previous requests is an effective way to decide where to send the next request. Exclusive to NGINX Plus is the Least Time load‑balancing method, which does just that. But when the load on the backend server is variable (because it includes both read and write operations, for example), past performance is a poor indicator of future performance.

The simplest way to balance the load in a distributed environment is to choose a backend server at random. Over time the load averages out, but it’s a crude approach that is likely to send spikes of traffic to individual servers from time to time.

A simple variation on random load balancing, yet one which is proven to improve load distribution, is to select two backend servers at random and then send the request to the one with the lowest load. The goal of comparing two random choices is to avoid making a bad load‑balancing decision, even if the decision made isn’t optimal. By avoiding the backend server with the greater load, each load balancer can operate independently and still avoid sending spikes of traffic to individual backend servers. As a result, this method has the benefits and computational efficiency of random load balancing but with demonstrably better load distribution.

NGINX Plus R16 introduces two new load‑balancing methods, Random and Random with Two Choices. For the latter, you can further specify which load‑indicating metric NGINX Plus compares to decide which of the two selected backends receives the request. The following table lists the available metrics.

| HTTP Load Balancing | TCP/UDP Load Balancing |
|---|---|
| Number of active connections | Number of active connections |
| Response time to receive the HTTP header* | Response time to receive the first byte* |
| Response time to receive the last byte* | Response time to receive the last byte* |
| | Time to establish network connection* |

* All time‑based metrics are exclusive to NGINX Plus

The following snippet shows a simple example of Random with Two Choices load‑balancing configuration with the random two directive (HTTP, Stream).

```nginx
upstream my_backend {
    zone my_backend 64k;
    server 10.0.0.1;
    server 10.0.0.2;
    server 10.0.0.3;
    #...

    random two least_time=last_byte; # Choose the quicker response time from two random choices
}

server {
    listen 80;

    location / {
        proxy_pass http://my_backend; # Load balance to upstream group
    }
}
```

Note that the Random with Two Choices method is suitable only for distributed environments where multiple load balancers are passing requests to the same set of backends. Do not use it when NGINX Plus is deployed on a single host or in an active‑passive deployment. In those cases the single load balancer has a full view of all requests, and the Round Robin, Least Connections, and Least Time methods give the best results.
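
For TCP and UDP traffic the same method is configured in the stream context, where the time‑based metrics from the table above apply. The following is a sketch only: the upstream name, server addresses, and listen port are hypothetical, and the choice of the first‑byte metric is just one of the options listed.

```nginx
stream {
    upstream tcp_backend {                  # hypothetical upstream group
        zone tcp_backend 64k;
        server 10.0.0.1:5432;
        server 10.0.0.2:5432;
        server 10.0.0.3:5432;

        random two least_time=first_byte;   # of two random servers, pick the one
                                            # that has been quicker to send its first byte
    }

    server {
        listen 5432;
        proxy_pass tcp_backend;
    }
}
```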

Enhanced UDP Load Balancing

NGINX Plus R9 introduced support for proxying and load balancing UDP traffic, enabling NGINX Plus to sit in front of popular UDP applications such as DNS, syslog, NTP, and RADIUS to provide high availability and scalability of the application servers. The initial implementation was limited to UDP applications that expect a single UDP packet from the client for each interaction with the server.

With NGINX Plus R16, multiple incoming packets are supported, which means that more complex UDP applications can be proxied and load balanced. These include OpenVPN, VoIP, virtual desktop solutions, and Datagram Transport Layer Security (DTLS) sessions. Furthermore, NGINX Plus’ handling of all UDP applications – simple or complex – is also significantly faster.
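
As an illustration, a multi‑packet UDP application such as OpenVPN could be load balanced with a stream configuration along these lines. The upstream name, server addresses, and timeout value are assumptions made for the example, not settings recommended by the release.

```nginx
stream {
    upstream openvpn_servers {        # hypothetical upstream group
        zone openvpn_servers 64k;
        server 10.0.0.11:1194;
        server 10.0.0.12:1194;
    }

    server {
        listen 1194 udp;              # accept UDP datagrams from clients
        proxy_pass openvpn_servers;
        proxy_timeout 60s;            # hypothetical idle timeout between packets in a session
    }
}
```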

NGINX Plus is the only software load balancer that load balances four of the most popular Web protocols – UDP, TCP, HTTP, and HTTP/2 – on the same instance and at the same time.

PROXY Protocol v2 Support

NGINX Plus R16 adds support for the PROXY protocol v2 (PPv2) header, and the ability to inspect custom type-length-value (TLV) values in the header.

The PROXY protocol is used by Layer 7 proxies such as NGINX Plus and Amazon’s load balancers to transmit connection information to upstream servers that are located behind another set of Layer 7 proxies, or NAT devices. It is necessary because some connection information, such as the source IP address and port, can be lost when proxies relay connections, and it’s not always possible to rely on HTTP header injection to transmit this information. Previous versions of NGINX Plus supported the PPv1 header; NGINX Plus R16 adds support for the PPv2 header.

Using PPv2 with Amazon Network Load Balancer

One use case for the PPv2 header is connections that are relayed by Amazon’s Network Load Balancer (NLB), which may appear to originate from a private IP address on the load balancer. NLB prefixes each connection with a PPv2 header that identifies the true source IP address and port of the client’s connection. The true source can be obtained from the $proxy_protocol_addr variable, and you can use the realip module to overwrite NGINX’s internal source variables with the values from the PPv2 header.
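
A minimal sketch of this setup is shown below, assuming the NLB nodes connect from a private address range (the range here is made up) and that my_backend is an upstream group defined elsewhere.

```nginx
server {
    listen 80 proxy_protocol;             # expect a PROXY protocol header on each connection

    set_real_ip_from 10.0.0.0/16;         # hypothetical address range of the NLB nodes
    real_ip_header   proxy_protocol;      # realip module: take the client address from the header

    location / {
        proxy_set_header X-Real-IP $proxy_protocol_addr;  # pass the true client address upstream
        proxy_pass http://my_backend;
    }
}
```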

Using PPv2 with AWS PrivateLink

AWS PrivateLink is Amazon’s technology for creating secure VPN tunnels into a Virtual Private Cloud (VPC). It is commonly used to publish a provider service (running in the Provider VPC) and make it available to one or more Client VPCs. Connections to the provider service originate from an NLB running in the provider VPC.

In many cases, the Provider Service needs to know where each connection originates from, but the source IP address in the PPv2 header is essentially meaningless, corresponding to an internal, unrouteable address in the Client VPC. The AWS PrivateLink NLB adds the source VPC ID to the header using the PPv2 TLV record 0xEA.

NGINX Plus can inspect PPv2 TLV records, and can extract the source VPC ID for AWS PrivateLink connections using the $proxy_protocol_tlv_0xEA variable. This makes it possible to authenticate, route, and control traffic in an AWS PrivateLink data center.
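
One way this might be used is to admit only connections from a known source, as in the sketch below. The identifier is a made‑up placeholder and the $source_allowed variable is introduced purely for illustration; it is worth logging $proxy_protocol_tlv_0xEA first to see the exact value your NLB sends, since the example matches it loosely with a regular expression.

```nginx
map $proxy_protocol_tlv_0xEA $source_allowed {
    default                    0;
    ~vpce-0123456789abcdef0    1;   # hypothetical identifier of a permitted client
}

server {
    listen 80 proxy_protocol;

    location / {
        if ($source_allowed = 0) {
            return 403;             # reject connections from unknown sources
        }
        proxy_pass http://my_backend;
    }
}
```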

Other New Features in NGINX Plus R16

OpenID Connect Opaque Session Tokens

The NGINX Plus OpenID Connect reference implementation has been extended to support opaque session tokens. In this use case, the JWT identity token is not sent to the client. Instead it is stored in the NGINX Plus key‑value store and a random string is sent in its place. When the client presents the random string it is exchanged for the JWT and validated. This use case has been upgraded from prototype/experimental to production‑grade, now that entries in the key‑value store can have a timeout value, and be synchronized across a cluster.

Encrypted Session Dynamic Module

The Encrypted Session community module provides encryption and decryption support for NGINX variables based on AES‑256 with MAC. It is now available in the NGINX Plus repository as a supported dynamic module for NGINX Plus.

NGINX JavaScript Module Enhancements

The NGINX JavaScript modules in R16 include a number of extensions to the scope of JavaScript language support. The HTTP JavaScript module has been simplified so that a single object (r) now accesses both request and response attributes associated with each request. Previously there were separate request (req) and response (res) objects. This simplification makes the HTTP JavaScript module consistent with the Stream JavaScript module which has a single session (s) object. This change is fully backwards compatible – existing JavaScript code will continue to work as is – but we recommend that you modify existing JavaScript code to take advantage of this simplification, as well as using it in code you write in future.

JavaScript language support now includes:

- String methods: bytesFrom(), padStart(), and padEnd()

- Additional methods: getrandom() and getentropy()

- Binary literals

The full list of changes to the JavaScript module is documented in the changelog.

New $ssl_preread_protocol Variable

The new $ssl_preread_protocol variable allows you to distinguish between SSL/TLS and other protocols when forwarding traffic using a TCP (stream) proxy. The variable contains the highest SSL/TLS protocol version supported by the client. This is useful if you want to avoid firewall restrictions by (for example) running SSL/TLS and SSH services on the same port.

For more details, see Running SSL and Non‑SSL Protocols over the Same Port on our blog.
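
As a sketch of the idea, the configuration below sends connections that do not begin with an SSL/TLS handshake to an SSH server and everything else to an SSL/TLS backend. The upstream names and addresses are hypothetical.

```nginx
stream {
    upstream ssh_backend { server 10.0.0.21:22; }    # hypothetical SSH server
    upstream tls_backend { server 10.0.0.22:443; }   # hypothetical SSL/TLS server

    map $ssl_preread_protocol $upstream {
        ""      ssh_backend;      # variable is empty: no SSL/TLS handshake was seen
        default tls_backend;      # any SSL/TLS version
    }

    server {
        listen 443;
        ssl_preread on;           # inspect the ClientHello without terminating SSL/TLS
        proxy_pass $upstream;
    }
}
```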

Kubernetes Ingress Controller Enhancements

NGINX Plus can manage traffic in a broad range of environments, and one significant use case is as an Ingress Load Balancer for Kubernetes. NGINX develops an Ingress controller solution that automatically configures NGINX Plus, and this integration is fully supported for all NGINX Plus subscribers. (All NGINX Open Source users and NGINX Plus customers can find the open source implementation on GitHub, and formal releases are made periodically.)

During the development of NGINX Plus R16, a number of significant enhancements have been added to the official NGINX Ingress Controller for Kubernetes:

- Prometheus support enables users to export NGINX and NGINX Plus metrics directly to Prometheus on Kubernetes.

- Support for Helm charts simplifies installation and management of the Ingress controller.

- Support for load‑balancing gRPC traffic provides high availability and scalability for gRPC‑based applications. (Added in release 1.2.0.)

- Active health checks of Kubernetes pods quickly detect failures in pods and in network connectivity.

- Multi‑tenant, mergeable configuration allows for separation of concerns, letting teams independently manage different paths of the same web application, while operations retains control of the frontend listener and SSL configuration.

- Fine‑grained JWT authentication, on a per‑path basis, gives deeper control over authentication for rich applications.

- Direct management of configuration templates makes it much easier to develop and test custom changes to the NGINX configuration.

The NGINX Ingress Controller for Kubernetes can be further extended using ConfigMaps (to embed NGINX configuration) or through editing the base templates, allowing users to access all NGINX capabilities when they create traffic management policies for their applications running on Kubernetes.

For more details, see Announcing NGINX Ingress Controller for Kubernetes Release 1.3.0 on our blog.

Upgrade or Try NGINX Plus

NGINX Plus R16 includes additional clustering capabilities, global rate limiting, a new load‑balancing algorithm, and several bug fixes. If you’re running NGINX Plus, we strongly encourage you to upgrade to R16 as soon as possible. You’ll pick up a number of fixes and improvements, and it will help NGINX, Inc. to help you when you need to raise a support ticket.

Please carefully review the new features and changes in behavior described in this blog post before proceeding with the upgrade.

If you haven’t tried NGINX Plus, we encourage you to try it out – for web acceleration, load balancing, and application delivery, or as a fully supported web server with enhanced monitoring and management APIs. You can get started for free today with a 30‑day free trial. See for yourself how NGINX Plus can help you deliver and scale your applications.

The post Announcing NGINX Plus R16 appeared first on NGINX.
