Validating OAuth 2.0 Access Tokens with NGINX and NGINX Plus

There are many options for authenticating API calls, from X.509 client certificates to HTTP Basic authentication. In recent years, however, a de facto standard has emerged in the form of OAuth 2.0 access tokens. These are authentication credentials passed from client to API server, typically carried in an HTTP header.

OAuth 2.0, however, is a maze of interconnecting standards. Issuing, presenting, and validating access tokens in an OAuth 2.0 authentication flow often relies on several related standards; at the time of writing there are eight OAuth 2.0 standards. Access tokens are a case in point: the OAuth 2.0 core specification (RFC 6749) does not specify a format for them. In the real world, there are two formats in common usage:

- JSON Web Token (JWT) as defined by RFC 7519

- Opaque tokens that are little more than a unique identifier for an authenticated client

After authentication, a client presents its access token with each HTTP request to gain access to protected resources. Validation of the access token is required to ensure that it was indeed issued by a trusted identity provider (IdP) and that it has not expired. Because IdPs cryptographically sign the JWTs they issue, JWTs can be validated “offline” without a runtime dependency on the IdP. Typically, a JWT also includes an expiry date which can also be checked. The NGINX Plus auth_jwt module performs offline JWT validation.
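To make "offline" validation concrete, here is a minimal sketch in plain JavaScript (Node.js) of checking a JWT's signature and expiry without contacting the IdP. It is not how the auth_jwt module is implemented. The function names, the shared secret, and the use of the HS256 algorithm are assumptions made for illustration; IdPs commonly sign with asymmetric keys instead.

```javascript
const crypto = require("crypto");

// Encode a JavaScript object as one base64url JSON segment of a JWT
function encodeSegment(obj) {
  return Buffer.from(JSON.stringify(obj)).toString("base64url");
}

// Hypothetical helper standing in for the IdP: issue an HS256-signed JWT
function issueJwt(claims, secret) {
  const signingInput =
    encodeSegment({ alg: "HS256", typ: "JWT" }) + "." + encodeSegment(claims);
  const signature = crypto
    .createHmac("sha256", secret)
    .update(signingInput)
    .digest("base64url");
  return signingInput + "." + signature;
}

// Validate signature and expiry locally, with no call to the IdP
function validateJwtOffline(token, secret, nowSeconds) {
  const parts = String(token).split(".");
  if (parts.length !== 3) return false;

  let header, claims;
  try {
    header = JSON.parse(Buffer.from(parts[0], "base64url").toString("utf8"));
    claims = JSON.parse(Buffer.from(parts[1], "base64url").toString("utf8"));
  } catch (e) {
    return false; // Header or payload is not valid JSON
  }
  if (!header || header.alg !== "HS256") return false; // Only the assumed algorithm
  if (!claims || typeof claims !== "object") return false;

  // Recompute the signature and compare in constant time
  const expected = crypto
    .createHmac("sha256", secret)
    .update(parts[0] + "." + parts[1])
    .digest();
  const actual = Buffer.from(parts[2], "base64url");
  if (actual.length !== expected.length) return false;
  if (!crypto.timingSafeEqual(actual, expected)) return false;

  // "exp" is seconds since the epoch (RFC 7519); reject at or after expiry
  if (typeof claims.exp === "number" && nowSeconds >= claims.exp) return false;
  return true;
}
```

Passing the current time as a parameter keeps the check deterministic; a caller would typically supply `Math.floor(Date.now() / 1000)`.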

Opaque tokens, on the other hand, must be validated by sending them back to the IdP that issued them. However, this has the advantage that such tokens can be revoked by the IdP, for example as part of a global logout operation, without leaving previously logged‑in sessions still active. Global logout might also make it necessary to validate JWTs with the IdP.

In this blog we describe how NGINX and NGINX Plus can act as an OAuth 2.0 Relying Party, sending access tokens to the IdP for validation and only proxying requests that pass the validation process. We discuss the various benefits of using NGINX and NGINX Plus for this task, and how the user experience can be improved by caching validation responses for a short time. For NGINX Plus, we also show how the cache can be distributed across a cluster of NGINX Plus instances, by updating the key‑value store with the JavaScript module, as introduced in NGINX Plus R18.

Except where noted, the information in this blog applies to both NGINX Open Source and NGINX Plus. References to NGINX Plus apply only to that product.

Token Introspection

The standard method for validating access tokens with an IdP is called token introspection. RFC 7662, OAuth 2.0 Token Introspection, is now a widely supported standard that describes a JSON/REST interface that a Relying Party uses to present a token to the IdP, and describes the structure of the response. It is supported by many of the leading IdP vendors and cloud providers.

Regardless of which token format is used, performing validation at each backend service or application results in a lot of duplicated code and unnecessary processing. Various error conditions and edge cases need to be accounted for, and doing so in each backend service is a recipe for inconsistency in implementation and consequently an unpredictable user experience. Consider how each backend service might handle the following error conditions:

- Missing access token

- Extremely large access token

- Invalid or unexpected characters in access token

- Multiple access tokens presented

- Clock skew across backend services
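As a rough illustration of what handling these cases consistently involves, the following sketch performs the checks once, before any validation call is made. The function name, the length limit, the chosen status codes, and the use of the RFC 6750 token character set are all assumptions for illustration. Clock skew is not covered, because it affects expiry checks rather than token syntax.

```javascript
// Assumed limit for illustration; tune for the tokens your IdP issues
const MAX_TOKEN_LENGTH = 4096;
// Characters permitted in a bearer token by RFC 6750 ("b64token")
const TOKEN_PATTERN = /^[A-Za-z0-9\-._~+\/]+=*$/;

// headerValues: every value received for the token header (array of strings).
// Returns { status, reason } on failure, or { status: 0, token } when the
// token is syntactically acceptable and ready to be validated.
function checkAccessToken(headerValues) {
  if (!headerValues || headerValues.length === 0 || headerValues[0] === "") {
    return { status: 401, reason: "missing access token" };
  }
  if (headerValues.length > 1) {
    return { status: 400, reason: "multiple access tokens presented" };
  }
  const token = headerValues[0];
  if (token.length > MAX_TOKEN_LENGTH) {
    return { status: 400, reason: "access token too large" };
  }
  if (!TOKEN_PATTERN.test(token)) {
    return { status: 400, reason: "unexpected characters in access token" };
  }
  return { status: 0, token: token };
}
```

Centralizing checks like these in one place is what makes the behavior predictable; each backend implementing its own variant is where inconsistencies creep in.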

Using the NGINX auth_request Module to Validate Tokens

To avoid code duplication and the resulting problems, we can use NGINX to validate access tokens on behalf of backend services. This has a number of benefits:

- Requests reach the backend services only when the client has presented a valid token

- Existing backend services can be protected with access tokens, without requiring code changes

- Only the NGINX instance (not every app) need be registered with the IdP

- Behavior is consistent for every error condition, including missing or invalid tokens

With NGINX acting as a reverse proxy for one or more applications, we can use the auth_request module to trigger an API call to an IdP before proxying a request to the backend. As we’ll see in a moment, the following solution has a fundamental flaw, but it introduces the basic operation of the auth_request module, which we will expand on in later sections.

The auth_request directive (line 5) specifies the location for handling API calls. Proxying to the backend (line 6) happens only if the auth_request response is successful. The auth_request location is defined on line 9. It is marked as internal to prevent external clients from accessing it directly.

Lines 11–14 define various attributes of the request so that it conforms to the token introspection request format. Note that the access token sent in the introspection request is a component of the body defined in line 14. Here token=$http_apikey indicates that the client must supply the access token in the apikey request header. Of course, the access token can be supplied in any attribute of the request, in which case we use a different NGINX variable.
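For readers unfamiliar with the introspection request format, the sketch below assembles the same pieces in plain JavaScript: a POST with a form-encoded body that carries the token. It is an illustration, not part of the NGINX configuration. The function name, the endpoint URL, and the secret are placeholders, and authenticating with a bearer credential in the Authorization header is an assumption; RFC 7662 defines the `token` and optional `token_type_hint` parameters.

```javascript
// Build the parts of an RFC 7662 token introspection request.
// endpoint and clientSecret are placeholders supplied by the caller.
function buildIntrospectionRequest(endpoint, clientSecret, accessToken) {
  // URLSearchParams percent-encodes characters such as "+" and "/"
  const body = new URLSearchParams({
    token: accessToken,
    token_type_hint: "access_token", // Optional hint defined by RFC 7662
  });
  return {
    method: "POST",
    url: endpoint,
    headers: {
      "Authorization": "Bearer " + clientSecret,
      "Content-Type": "application/x-www-form-urlencoded",
    },
    body: body.toString(),
  };
}

const request = buildIntrospectionRequest(
  "https://idp.example.com/oauth/introspect", // Hypothetical endpoint
  "SecretForIdP",                             // Hypothetical credential
  "tQ7AfuEFvI1yI-XNPNhjT38vg_reGkpDFA"
);
console.log(request.body);
```

Because the body is form-encoded, tokens containing reserved characters need percent-encoding, which is worth bearing in mind when a token is interpolated directly from a request header.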

Extending auth_request with the JavaScript Module

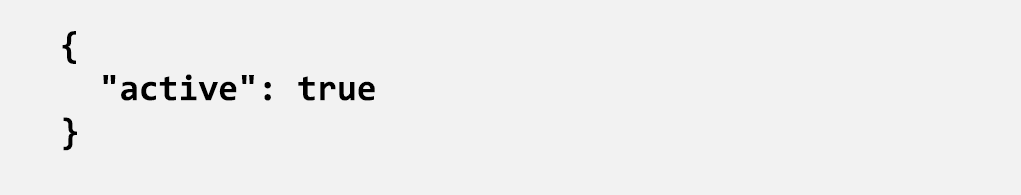

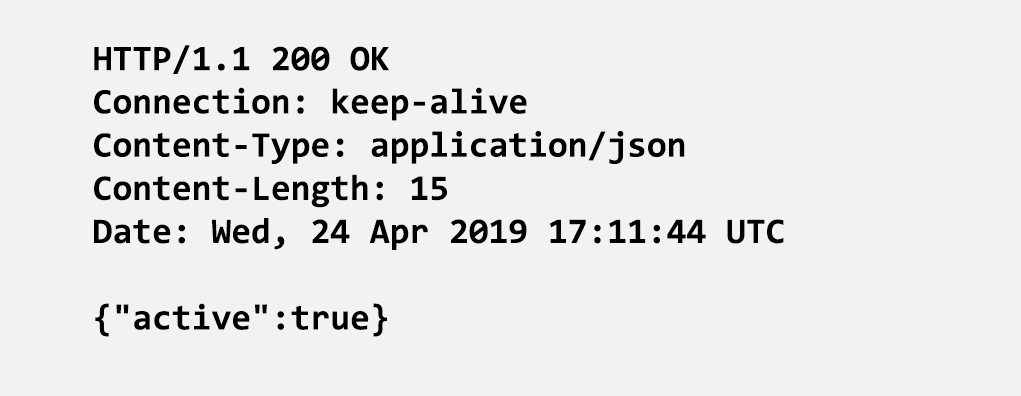

As mentioned, using the auth_request module in this way is not a complete solution. The auth_request module uses the HTTP status code of the subrequest to determine success: a 2xx response allows the request, 401 or 403 denies it, and any other status is treated as an error. However, OAuth 2.0 token introspection responses encode success or failure in a JSON object, and return HTTP status code 200 (OK) in both cases.

What we need is a JSON parser to convert the IdP’s introspection response to the appropriate HTTP status code so that the auth_request module can correctly interpret that response.

Thankfully, JSON parsing is a trivial task for the NGINX JavaScript module (njs). So instead of defining a location block to perform the token introspection request, we tell the auth_request module to call a JavaScript function.

Note: This solution requires the JavaScript module to be loaded as a dynamic module with the load_module directive in nginx.conf. For instructions, see the NGINX Plus Admin Guide.

The js_content directive on line 13 specifies a JavaScript function, introspectAccessToken, as the auth_request handler. The handler function is defined in oauth2.js:

Notice that the introspectAccessToken function makes an HTTP subrequest (line 2) to another location (/_oauth2_send_request), which is defined in the configuration snippet below. The JavaScript code then parses the response (line 5) and sends the appropriate status code back to the auth_request module based on the value of the active field. Valid (active) tokens return HTTP 204 (No Content), which the auth_request module treats as success, and invalid tokens return HTTP 403 (Forbidden). Error conditions return HTTP 401 (Unauthorized) so that errors can be distinguished from invalid tokens.
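A handler with the behavior just described might look like the following minimal sketch. It is a reconstruction from the description, not the original oauth2.js listing, so its line numbers do not match those cited in the text. It assumes the njs request object `r` with `r.subrequest()` and `r.return()`; recent njs releases expose the subrequest body as `responseText`, and the fallback to `responseBody` is an assumption for older releases.

```javascript
function introspectAccessToken(r) {
  // Ask the internal location to send the introspection request to the IdP
  r.subrequest("/_oauth2_send_request", function (reply) {
    if (reply.status !== 200) {
      r.return(401); // Unexpected response from the IdP
      return;
    }
    // Older njs releases name this property responseBody (assumption)
    var body = reply.responseText !== undefined
      ? reply.responseText
      : reply.responseBody;
    try {
      var response = JSON.parse(body);
      // 204 tells auth_request the token is valid; 403 that it is not
      r.return(response.active === true ? 204 : 403);
    } catch (e) {
      r.return(401); // Response body is not usable JSON
    }
  });
}
```

In an njs module file the function would also need to be exported so that the js_content directive can reference it.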

Note: This code is provided as a proof of concept only, and is not production quality. A complete solution with comprehensive error handling and logging is provided below.

The subrequest target location defined in line 2 looks very much like our original auth_request configuration.

All of the configuration to construct the token introspection request is contained within the /_oauth2_send_request location. Authentication (line 19), the access token itself (line 21), and the URL for the token introspection endpoint (line 22) are typically the only necessary configuration items. Authentication is required for the IdP to accept token introspection requests from this NGINX instance. The OAuth 2.0 Token Introspection specification mandates authentication, but does not specify the method. In this example, we use a bearer token in the Authorization header.

With this configuration in place, when NGINX receives a request, it passes it to the JavaScript module, which makes a token introspection request against the IdP. The response from the IdP is inspected, and authentication is deemed successful when the active field is true. This solution is a compact and efficient way of performing OAuth 2.0 token introspection with NGINX, and can easily be adapted for other authentication APIs.

But we’re not quite done. The single biggest challenge with token introspection in general is that it adds latency to each and every HTTP request. This can become a significant issue when the IdP in question is a hosted solution or cloud provider. NGINX and NGINX Plus can mitigate this drawback by caching the introspection responses.

Optimization 1: Caching by NGINX

OAuth 2.0 token introspection is provided by the IdP at a JSON/REST endpoint, and so the standard response is a JSON body with HTTP status 200. When this response is keyed against the access token it becomes highly cacheable.

NGINX can be configured to cache a copy of the introspection response for each access token so that the next time the same access token is presented, NGINX serves the cached introspection response instead of making an API call to the IdP. This vastly improves overall latency for subsequent requests. We can control for how long cached responses are used, to mitigate the risk of accepting an expired or recently revoked access token. For example, if an API client typically makes a burst of several API calls over a short period of time, then a cache validity of 10 seconds might be sufficient to provide a measurable improvement in user experience.
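The effect of a short validity period can be modeled in a few lines of plain JavaScript. This is a conceptual model only: NGINX implements the behavior with its content cache, not with code like this, and the function names and injected clock are assumptions made so that the example is deterministic.

```javascript
// Conceptual model of a per-token cache with a fixed validity period.
// introspect: function(token) that performs the (slow) call to the IdP
// ttlMs: how long a cached response may be reused, in milliseconds
// now: function returning the current time in milliseconds
function createIntrospectionCache(introspect, ttlMs, now) {
  const entries = new Map(); // access token -> { response, expiresAt }
  return function lookup(token) {
    const hit = entries.get(token);
    if (hit && now() < hit.expiresAt) {
      return hit.response; // Served from cache, no call to the IdP
    }
    const response = introspect(token); // Cache miss or expired entry
    entries.set(token, { response: response, expiresAt: now() + ttlMs });
    return response;
  };
}
```

With a validity of 10 seconds, a burst of calls with the same token costs one introspection request, and a revoked token can be accepted for at most 10 seconds after revocation.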

Caching is enabled by specifying its storage – a directory on disk for the cache (introspection responses) and a shared memory zone for the keys (access tokens).

The proxy_cache_path directive allocates the necessary storage: /var/cache/nginx/oauth for the introspection responses and a memory zone called token_responses for the keys. It is configured in the http context and so appears outside the server and location blocks. Caching itself is then enabled inside the location block where the token introspection responses are processed:

Caching is enabled for this location with the proxy_cache directive (line 26). By default NGINX caches based on the URI but in our case we want to cache the response based on the access token presented in the apikey request header (line 27).

On line 28 we use the proxy_cache_lock directive to tell NGINX that if concurrent requests arrive with the same cache key, it needs to wait until the first request has populated the cache before responding to the others. The proxy_cache_valid directive (line 29) tells NGINX how long to cache the introspection response. Without this directive NGINX determines the caching time from the cache‑control headers sent by the IdP; however, these are not always reliable, which is why we also tell NGINX to ignore headers that would otherwise affect how we cache responses (line 30).

With caching now enabled, a client presenting an access token suffers only the latency cost of making the token introspection request once every 10 seconds.

Optimization 2: Distributed Caching with NGINX Plus

Combining content caching with token introspection is a highly effective way to improve overall application performance with a negligible impact on security. However, if NGINX is deployed in a distributed fashion – for example, across multiple data centers, cloud platforms, or an active‑active cluster – then cached token introspection responses are available only to the NGINX instance that performed the introspection request.

With NGINX Plus we can use the keyval module – an in‑memory key‑value store – to cache token introspection responses. Moreover, we can also synchronize those responses across a cluster of NGINX Plus instances by using the zone_sync module. This means that no matter which NGINX Plus instance performed the token introspection request, the response is available at all of the NGINX Plus instances in the cluster.

Note: Configuration of the zone_sync module for runtime state sharing is outside the scope of this blog. For further information on sharing state in an NGINX Plus cluster, see the NGINX Plus Admin Guide.

In NGINX Plus R18 and later, the key‑value store can be updated by modifying the variable that is declared in the keyval directive. As the JavaScript module has access to all of the NGINX variables, this allows for introspection responses to be populated in the key‑value store during processing of the response.

Like the NGINX filesystem cache, the key‑value store is enabled by specifying its storage, in this case a memory zone that stores the key (access token) and value (introspection response).

Note that with the timeout parameter to the keyval_zone directive we specify the same 10‑second validity period for cached responses as on line 29 of auth_request_cache.conf, so that each member of the NGINX Plus cluster independently removes the response when it expires. Line 2 specifies the key‑value pair for each entry: the key being the access token supplied in the apikey request header, and the value being the introspection response as evaluated by the $token_data variable.

Now, for each request that includes an apikey request header, the $token_data variable is populated with the previous token introspection response, if any. Therefore we update the JavaScript code to check if we already have a token introspection response.

Line 2 tests whether there is already a key‑value store entry for this access token. Because there are two paths by which an introspection response can be obtained (from the key‑value store, or from an introspection response), we move the validation logic into the following separate function, tokenResult:
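The two functions might be sketched as follows. This is a reconstruction from the description rather than the original listing, so line numbers do not correspond. It assumes the njs request object `r`, with the keyval variable available as `r.variables.token_data`; the fallback from `responseText` to `responseBody` is an assumption for older njs releases.

```javascript
function introspectAccessToken(r) {
  if (r.variables.token_data) {
    tokenResult(r); // Response already present in the key-value store
    return;
  }
  r.subrequest("/_oauth2_send_request", function (reply) {
    if (reply.status !== 200) {
      r.return(401); // Unexpected response from the IdP
      return;
    }
    // Assigning to the keyval variable creates the key-value store entry
    r.variables.token_data = reply.responseText !== undefined
      ? reply.responseText
      : reply.responseBody;
    tokenResult(r);
  });
}

// Shared validation logic for both the cached and the fresh response
function tokenResult(r) {
  try {
    var response = JSON.parse(r.variables.token_data);
    r.return(response.active === true ? 204 : 403);
  } catch (e) {
    r.return(401); // Stored value is not usable JSON
  }
}
```

Note that this sketch stores whatever the IdP returned with status 200; a production version would likely validate the body before writing it to the store.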

Now, each token introspection response is saved to the key‑value store, and synchronized across all other members of the NGINX Plus cluster. The following example shows a simple HTTP request with a valid access token, followed by a query to the NGINX Plus API to show the contents of the key‑value store.

$ curl -IH "apikey: tQ7AfuEFvI1yI-XNPNhjT38vg_reGkpDFA" http://localhost/

HTTP/1.1 200 OK

Date: Wed, 24 Apr 2019 17:41:34 GMT

Content-Type: application/json

Content-Length: 612

$ curl http://localhost/api/4/http/keyvals/access_tokens

{"tQ7AfuEFvI1yI-XNPNhjT38vg_reGkpDFA":"{\"active\":true}"}

Note that the key‑value store uses JSON format itself, so the token introspection response automatically has escaping applied to quotation marks.
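The escaping is ordinary JSON string escaping and can be reproduced in plain JavaScript. The snippet below is only an illustration of that point; it does not call the NGINX Plus API, and the variable names are ours.

```javascript
const token = "tQ7AfuEFvI1yI-XNPNhjT38vg_reGkpDFA";
const introspectionResponse = '{"active":true}'; // Stored as a string value

// Serializing a JSON string inside JSON escapes its quotation marks
const keyvalDump = JSON.stringify({ [token]: introspectionResponse });
console.log(keyvalDump);

// A client reading the store therefore has to parse twice
const restored = JSON.parse(JSON.parse(keyvalDump)[token]);
console.log(restored.active);
```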

Optimization 3: Extracting Attributes from the Introspection Response

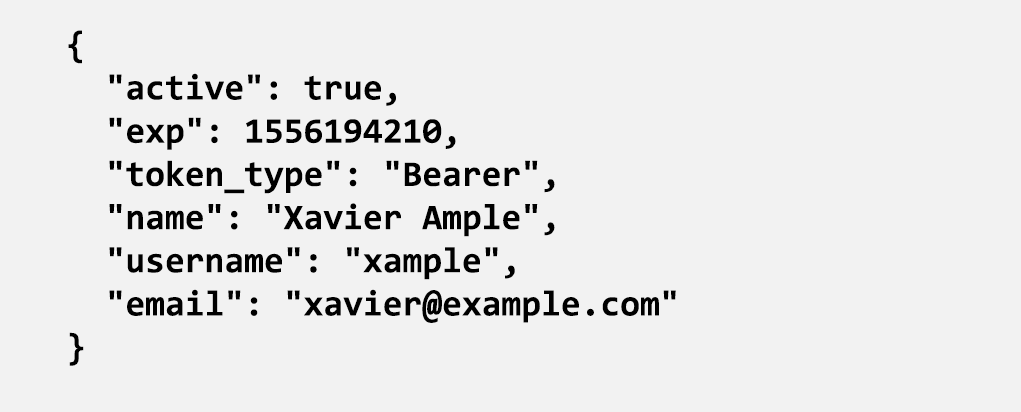

A useful capability of OAuth 2.0 token introspection is that the response can contain information about the token in addition to its active status. Such information includes the token expiry date and attributes of the associated user: username, email address, and so on.

This additional information can be very useful. It can be logged, used to implement fine‑grained access control policies, or provided to backend applications. We can export each of these attributes to the auth_request module by sending them as additional response headers with a successful (HTTP 204) response.

We iterate over each attribute of the introspection response (line 23) and send it back to the auth_request module as a response header. Each header name is prefixed with Token- to avoid conflicts with standard response headers (line 26). These response headers can now be converted into NGINX variables and used as part of regular configuration.
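A minimal sketch of that loop is shown below. It is not the original listing, so the cited line numbers do not apply. The function name `exportTokenAttributes` is hypothetical, and the code assumes the njs request object `r` with `headersOut`, `status`, `sendHeader()`, and `finish()`, plus an introspection response that has already been parsed.

```javascript
// response: the parsed introspection response (a plain object)
function exportTokenAttributes(r, response) {
  if (!response || response.active !== true) {
    r.return(403); // Invalid tokens export nothing
    return;
  }
  for (var p in response) {
    if (!Object.prototype.hasOwnProperty.call(response, p)) continue;
    var value = response[p];
    // Header values must be strings; serialize nested values as JSON
    r.headersOut["Token-" + p] =
      typeof value === "object" ? JSON.stringify(value) : String(value);
  }
  r.status = 204; // Success, with the attributes carried as headers
  r.sendHeader();
  r.finish();
}
```

Setting the headers and status explicitly, rather than calling `r.return(204)`, is what allows the extra Token- headers to accompany the successful response.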

In this example, we convert the username attribute into a new variable, $username (line 11). The auth_request_set directive enables us to export the context of the token introspection response into the context of the current request. The response header for each attribute (added by the JavaScript code) is available as $sent_http_token_attribute, where attribute is the name of the attribute in the introspection response. Line 12 then includes the value for $username as a request header that is proxied to the backend. We can repeat this configuration for any of the attributes returned in the token introspection response.

Production Configuration

The code and configuration examples above are functional, and suitable for proof-of-concept testing or customizing for a specific use case. For production use, we strongly recommend additional error handling, logging, and flexible configuration. You can find a more robust and verbose implementation for NGINX and NGINX Plus on GitHub:

- OAuth 2.0 Token Introspection with NGINX (disk caching)

- OAuth 2.0 Token Introspection with NGINX Plus (key‑value caching)

Summary

In this blog we have shown how to use the NGINX auth_request module in conjunction with the JavaScript module to perform OAuth 2.0 token introspection on client requests. In addition, we have extended that solution with caching, and extracted attributes from the introspection response for use in the NGINX configuration.

We also described how the NGINX Plus key‑value store can be used as a distributed cache for introspection responses, suitable for production deployments across a cluster of NGINX Plus instances.

Try out OAuth 2.0 token introspection with NGINX Plus for yourself – start your free 30-day trial today or contact us to discuss your use cases.

The post Validating OAuth 2.0 Access Tokens with NGINX and NGINX Plus appeared first on NGINX.
