Using the NGINX Plus Ingress Controller for Kubernetes with OpenID Connect Authentication from Azure AD

NGINX Open Source is already the default Ingress resource for Kubernetes, but NGINX Plus provides additional enterprise‑grade capabilities, including JWT validation, session persistence, and a large set of metrics. In this blog we show how to use NGINX Plus to perform OpenID Connect (OIDC) authentication for applications and resources behind the Ingress in a Kubernetes environment, in a setup that simplifies scaled rollouts.

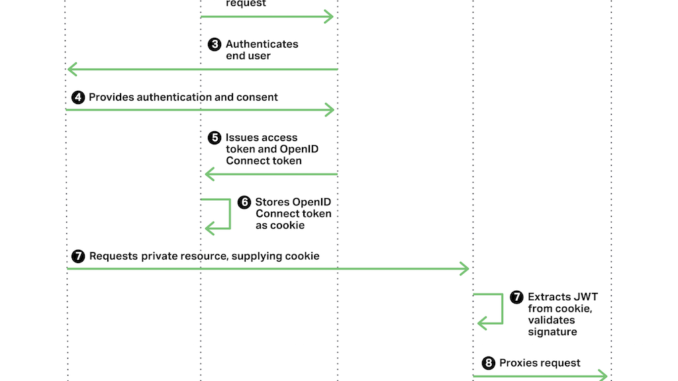

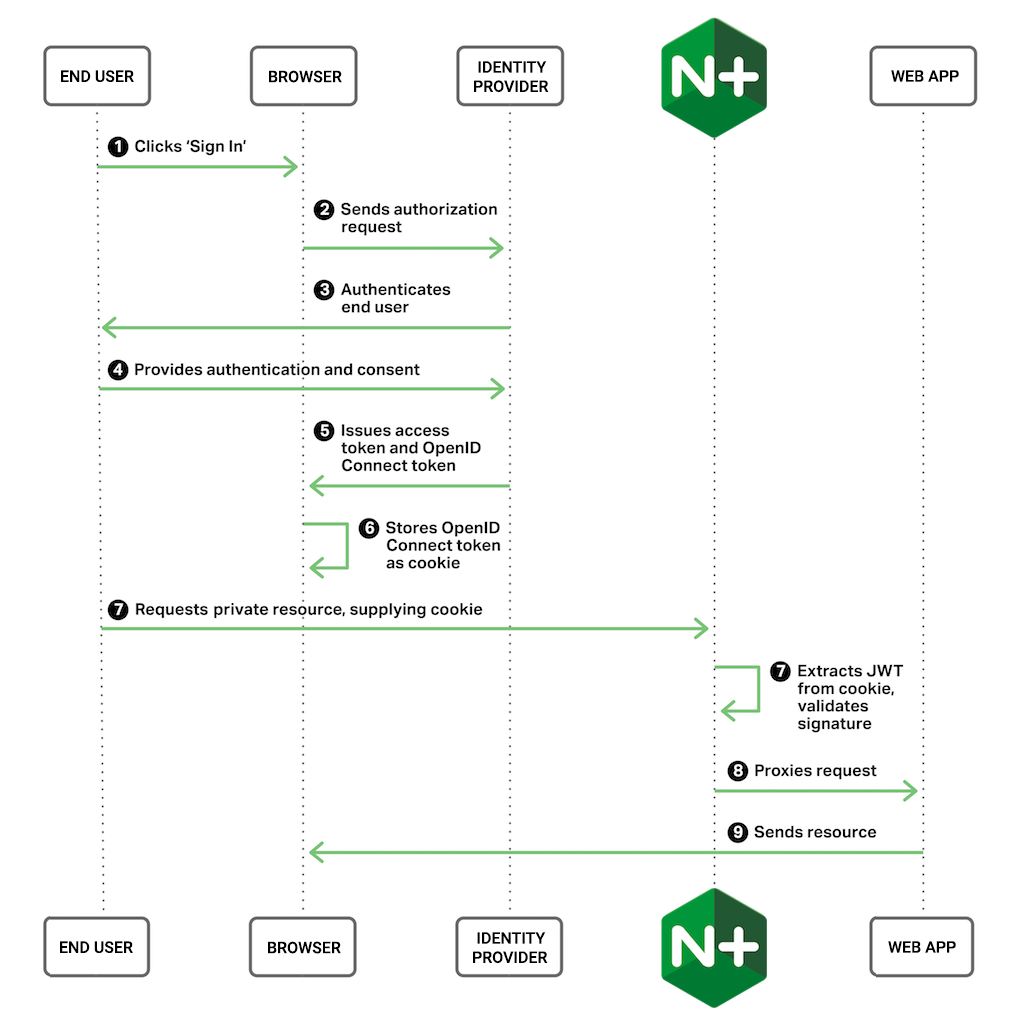

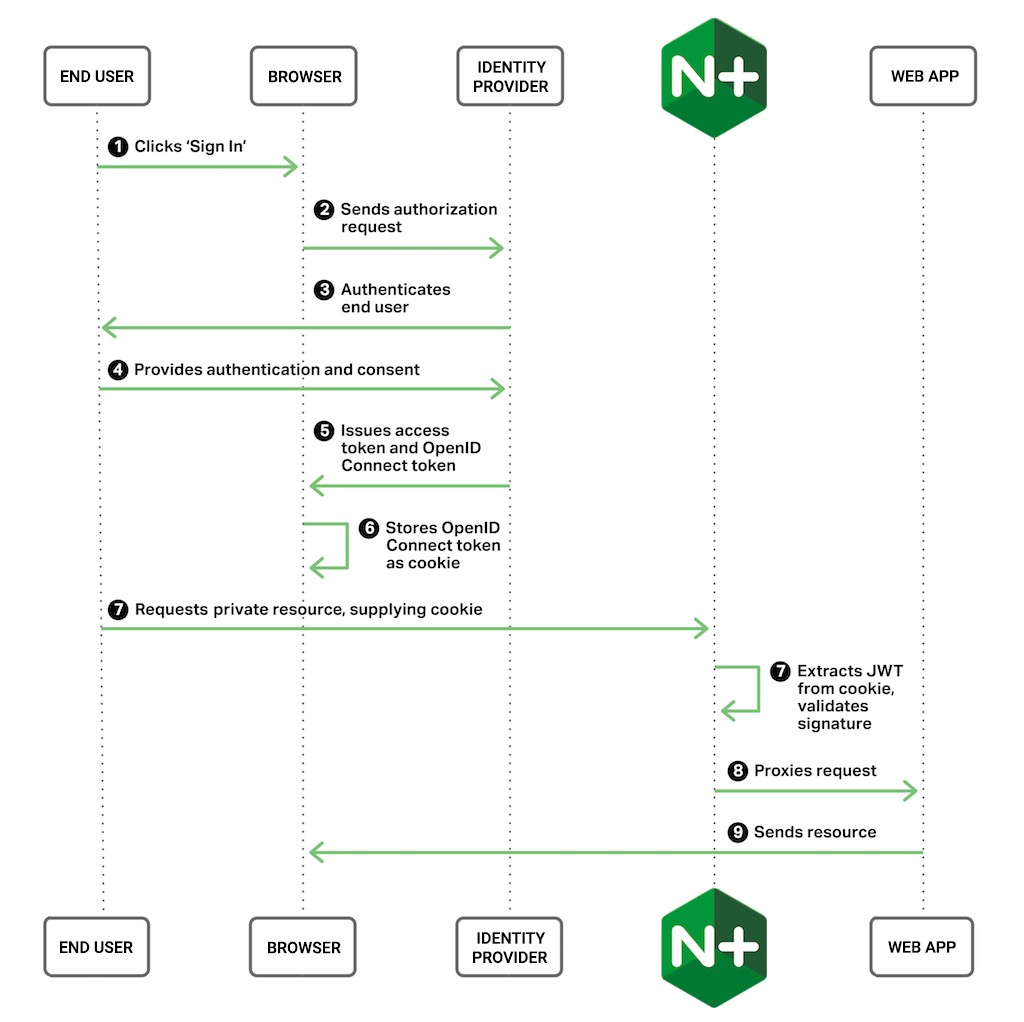

The following graphic depicts the authentication process with this setup:

To create the setup, perform the steps in these sections:

- Obtaining Credentials from the OIDC Identity Provider (Azure Active Directory)

- Installing and Configuring Kubernetes

- Creating a Docker Image for the NGINX Plus Ingress Controller

- Installing and Customizing the NGINX Plus Ingress Controller

- Setting Up the Sample Application to Use OpenID Connect

Notes:

- This blog is for demonstration and testing purposes only, as an illustration of how to use NGINX Plus for authentication in Kubernetes using OIDC credentials. The setup is not necessarily covered by your NGINX Plus support contract, nor is it suitable for production workloads without modifications that address your organization’s security and governance requirements.

- Several NGINX colleagues collaborated on this blog and I thank them for their contributions. I particularly want to thank the NGINX colleague (he modestly wishes to remain anonymous) who first came up with this use case!

Obtaining Credentials from the OpenID Connect Identity Provider (Azure Active Directory)

The purpose of OpenID Connect (OIDC) is to use established, well‑known user identities without increasing the attack surface of the identity provider (IdP, in OIDC terms). Our application trusts the IdP, so when it calls the IdP to authenticate a user, it is then willing to use the proof of authentication to control authorized access to resources.

In this example, we’re using Azure Active Directory (AD) as the IdP, but you can choose any of the many OIDC IdPs operating today. For example, our earlier blog post Authenticating Users to Existing Applications with OpenID Connect and NGINX Plus uses Google.

To use Azure AD as the IdP, perform the following steps, replacing the sample values with the ones appropriate for your application:

-

If you don’t already use Azure, create an account.

-

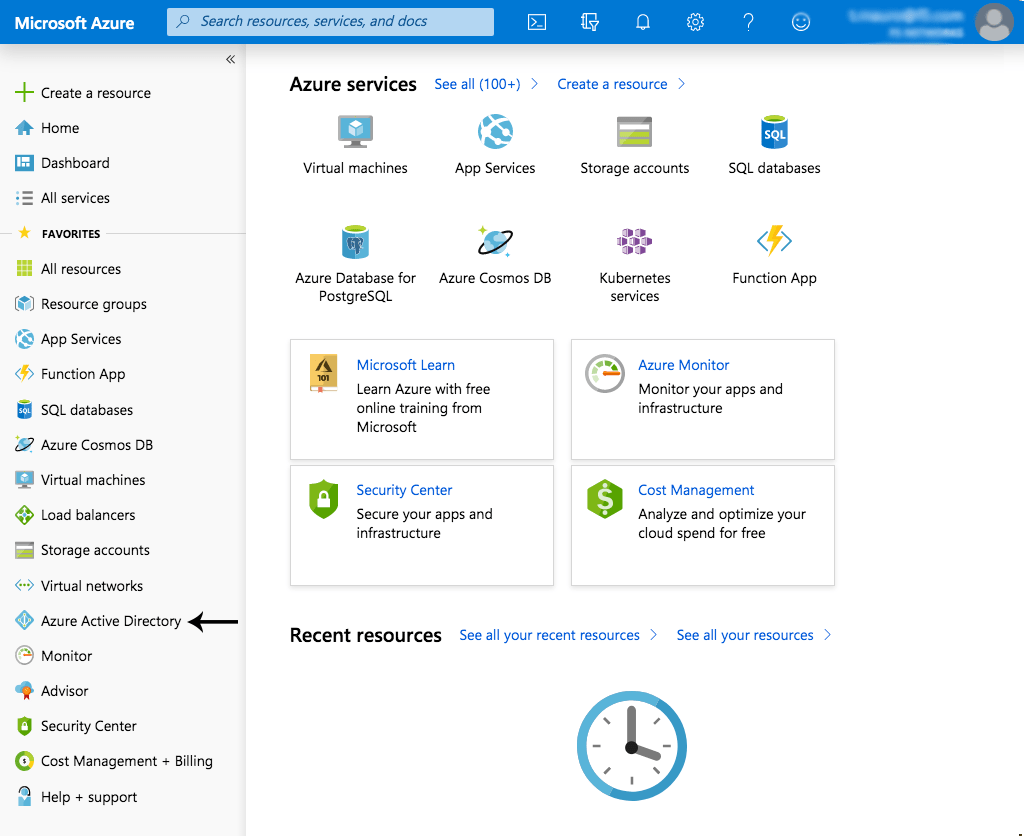

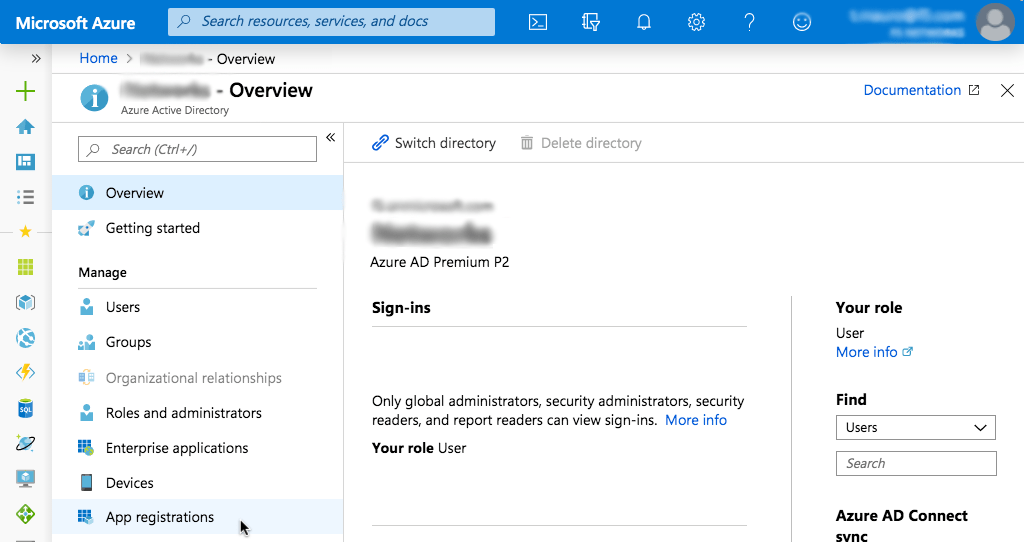

Navigate to the Azure portal and click Azure Active Directory in the left navigation column.

In this blog we’re using features that are available in the Premium version of AD and not the standard free version. If you don’t already have the Premium version (as is the case for new accounts), you can start a free trial as prompted on the AD Overview page.

-

Click App registrations in the left navigation column (we have minimized the global navigation column in the screenshot).

-

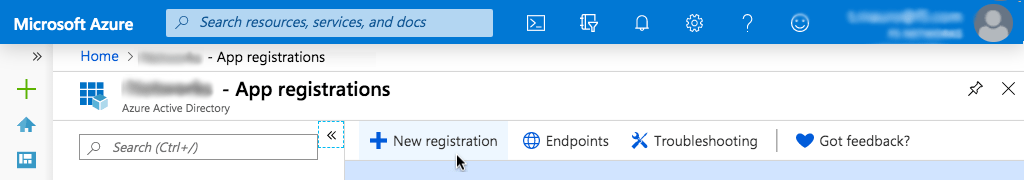

On the App registrations page, click New registration.

-

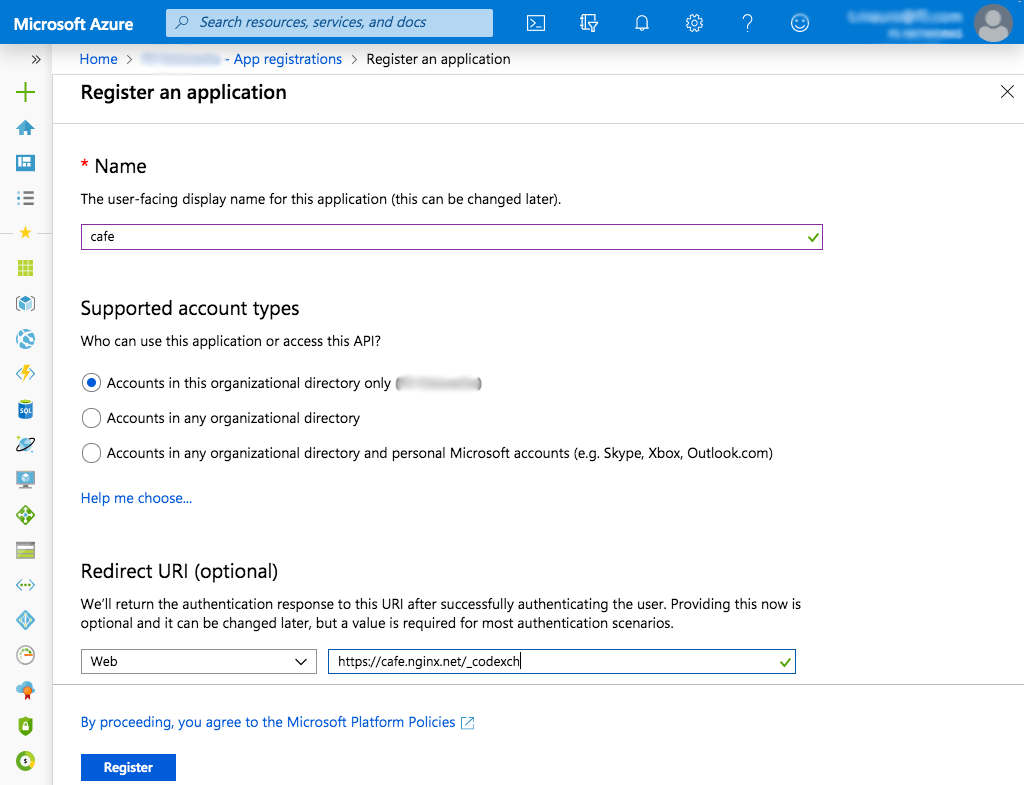

On the Register an application page that opens, enter values in the Name and Redirect URI fields, click the appropriate radio button in the Supported account types section, and then click the Register button. We’re using the following values:

- Name – cafe

- Supported account types – Account in this organizational directory only

- Redirect URI (optional) – Web: https://cafe.nginx.net/_codexch

-

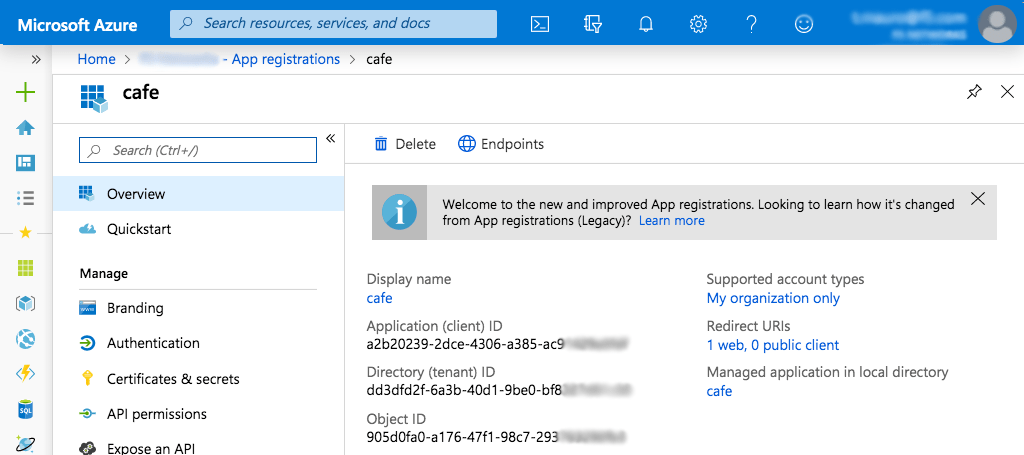

Make note of the values in the Application (client) ID and Directory (tenant) ID fields on the cafe confirmation page that opens. We’ll add them to the cafe-ingress.yaml file we create in Setting Up the Sample Application to Use OpenID Connect.

-

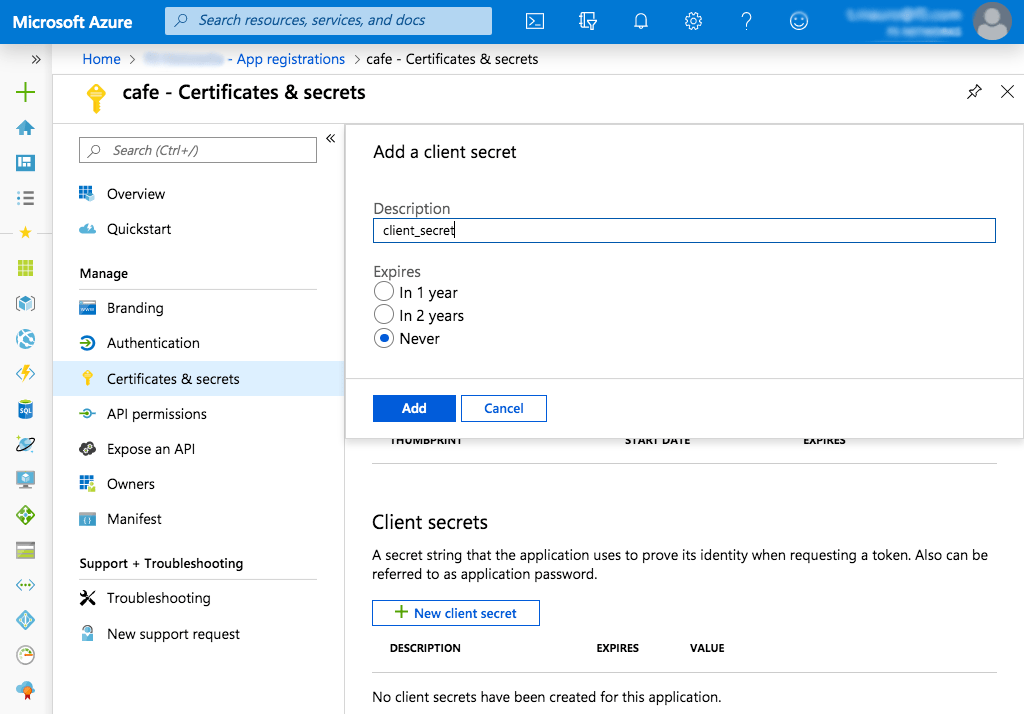

In the Manage section of the left navigation bar, click Certificates & secrets (see the preceding screenshot). On the page that opens, click the New client secret button.

-

In the Add a client secret pop‑up window, enter the following values and click the Add button:

- Description – client_secret

- Expires – Never

-

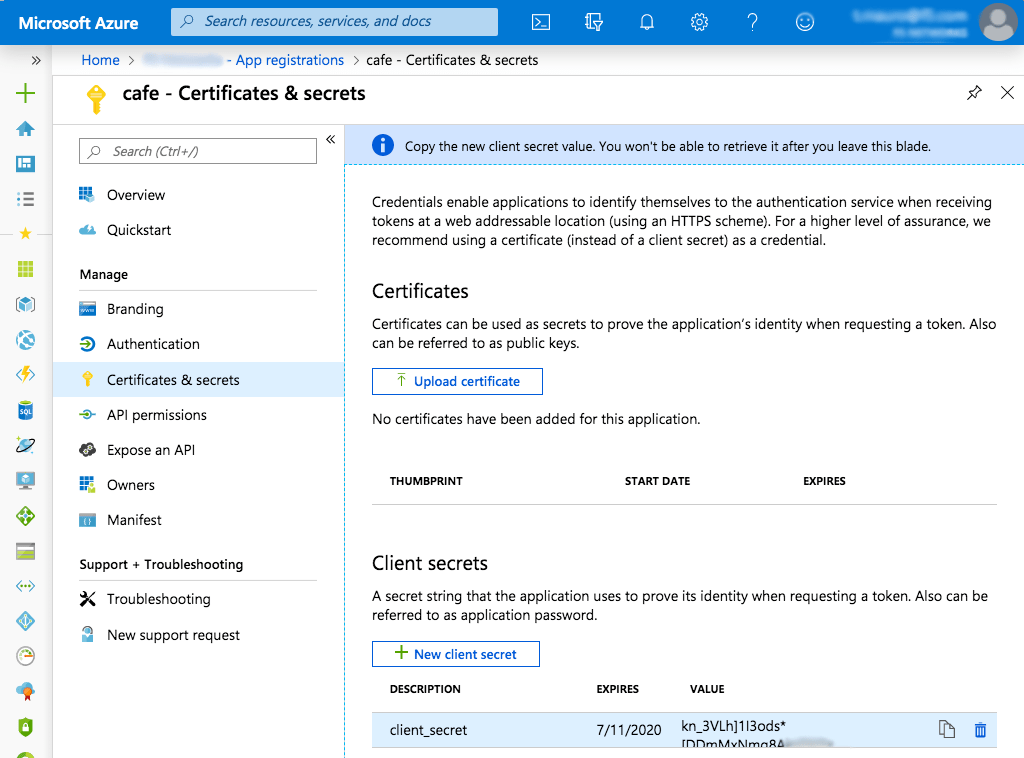

Copy the value for client_secret that appears, because it will not be recoverable after you close the window. In our example it is

kn_3VLh]1I3ods*[DDmMxNmg8xxx.

-

URL‑encode the client secret. There are a number of ways to do this but for a non‑production example we can use the urlencoder.org website. Paste the secret in the upper gray box, click the > ENCODE < button, and the encoded value appears in the lower gray box. Copy the encoded value for use in configuration files. In our example it is

kn_3VLh%5D1I3ods%2A%5BDDmMxNmg8xxx.
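If you would rather not paste a client secret into a third‑party website, the URL‑encoding step can also be done locally. The following is a minimal bash sketch, not part of the original setup: the function name `urlencode` is our own, and it assumes the secret contains only ASCII characters. It leaves the RFC 3986 unreserved characters untouched and percent‑encodes everything else.

```shell
#!/usr/bin/env bash
# urlencode STRING
# Hypothetical helper (not from the NGINX repos): percent-encodes every
# character except the RFC 3986 unreserved set A-Z a-z 0-9 . _ ~ -
# Assumes ASCII input.
urlencode() {
  local LC_ALL=C              # byte-wise matching for the character ranges
  local input="$1" output="" char i
  for (( i = 0; i < ${#input}; i++ )); do
    char="${input:i:1}"
    case "$char" in
      [a-zA-Z0-9._~-]) output+="$char" ;;
      # "'$char" makes printf emit the numeric code of the character
      *) output+=$(printf '%%%02X' "'$char") ;;
    esac
  done
  printf '%s\n' "$output"
}
```

Calling `urlencode 'kn_3VLh]1I3ods*[DDmMxNmg8xxx'` with the sample secret from Step 9 should print the encoded value shown in Step 10.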

Installing and Configuring Kubernetes

There are many ways to install and configure Kubernetes, but for this example we’ll use one of my favorite installers, Kubespray. You can install Kubespray from the GitHub repo.

You can create the Kubernetes cluster on any platform you wish. Here we’re using a MacBook. We’ve previously used VMware Fusion to create four virtual machines (VMs) on the MacBook. We’ve also created a custom network that supports connection to external networks using Network Address Translation (NAT). To enable NAT in Fusion, navigate to Preferences > Network, create a new custom network, and enable NAT by expanding the Advanced section and checking the option for NAT.

The VMs have the following properties:

| Name | OS | IP Address | Alias IP Address | Memory | Disk Size |

|---|---|---|---|---|---|

| node1 | CentOS 7.6 | 172.16.186.101 | 172.16.186.100 | 4 GB | 20 GB |

| node2 | CentOS 7.6 | 172.16.186.102 | – | 2 GB | 20 GB |

| node3 | CentOS 7.6 | 172.16.186.103 | – | 2 GB | 20 GB |

| node4 | CentOS 7.6 | 172.16.186.104 | – | 2 GB | 20 GB |

Note that we set a static IP address for each node and created an alias IP address on node1. In addition we satisfied the following requirements for Kubernetes nodes:

- Disabling swap

- Allowing IP address forwarding

- Copying the ssh key from the host running Kubespray (the MacBook) to each of the four VMs, to enable connecting over ssh without a password

- Modifying the sudoers file on each of the four VMs to allow `sudo` without a password (use the `visudo` command and make the following changes):

      ## Allows people in group wheel to run all commands
      # %wheel ALL=(ALL) ALL

      ## Same thing without a password
      %wheel ALL=(ALL) NOPASSWD: ALL
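Before running Kubespray it can help to confirm the first two prerequisites on each VM. The sketch below is our own illustration, not part of Kubespray: the function names are hypothetical, and it assumes a Linux node that exposes the standard `/proc/swaps` and `/proc/sys/net/ipv4/ip_forward` files. Each function accepts an alternative file path so it can be tried against sample data.

```shell
#!/usr/bin/env bash
# swap_is_off [FILE]
# Succeeds when the swaps table (default /proc/swaps) lists no active swap
# area. The first line of the table is a header, so only later lines count.
swap_is_off() {
  local swaps_file="${1:-/proc/swaps}"
  [ "$(tail -n +2 "$swaps_file" | grep -c .)" -eq 0 ]
}

# ip_forwarding_on [FILE]
# Succeeds when the kernel flag file (default
# /proc/sys/net/ipv4/ip_forward) contains 1.
ip_forwarding_on() {
  local flag_file="${1:-/proc/sys/net/ipv4/ip_forward}"
  [ "$(cat "$flag_file")" = "1" ]
}

# Example use on a node (prints nothing when both checks pass):
#   swap_is_off      || echo "swap is still enabled"
#   ip_forwarding_on || echo "IP forwarding is disabled"
```

If a check fails, `sudo swapoff -a` and `sudo sysctl -w net.ipv4.ip_forward=1` change the running state; making either setting survive a reboot requires further changes (for example in /etc/fstab and the sysctl configuration) that we don’t cover here.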

We disabled firewalld on the VMs but for production you likely want to keep it enabled and define the ports through which the firewall accepts traffic. We have SELinux in enforcing mode.

On the MacBook we also satisfied all the Kubespray prerequisites, including installation of an Ansible version supported by Kubespray.

Kubespray comes with a number of configuration files. We’re replacing the values in several fields in two of them:

- group_vars/all/all.yml

      # adding the ability to call upstream DNS
      upstream_dns_servers:
        - 8.8.8.8
        - 8.8.4.4

- group_vars/k8s-cluster/k8s-cluster.yml

      kube_network_plugin: flannel

      # Make sure the following subnets aren't used by active networks
      kube_service_addresses: 10.233.0.0/18
      kube_pods_subnet: 10.233.64.0/18

      # change the cluster name to whatever you plan to use
      cluster_name: k8s.nginx.net

      # add so we get kubectl and the config files locally
      kubeconfig_localhost: true
      kubectl_localhost: true

We also create a new hosts.yml file with the following contents:

    all:
      hosts:
        node1:
          ansible_host: 172.16.186.101
          ip: 172.16.186.101
          access_ip: 172.16.186.101
        node2:
          ansible_host: 172.16.186.102
          ip: 172.16.186.102
          access_ip: 172.16.186.102
        node3:
          ansible_host: 172.16.186.103
          ip: 172.16.186.103
          access_ip: 172.16.186.103
        node4:
          ansible_host: 172.16.186.104
          ip: 172.16.186.104
          access_ip: 172.16.186.104
      children:
        kube-master:
          hosts:
            node1:
        kube-node:
          hosts:
            node1:
            node2:
            node3:
            node4:
        etcd:
          hosts:
            node1:
        k8s-cluster:
          children:
            kube-master:
            kube-node:
        calico-rr:
          hosts: {}

Now we run the following command to create a four‑node Kubernetes cluster with node1 as the single master. (Kubernetes recommends three master nodes in a production environment, but one node is sufficient for our example and eliminates any possible issues with synchronization.)

    $ ansible-playbook -i inventory/mycluster/hosts.yml -b cluster.yml

Creating a Docker Image for the NGINX Plus Ingress Controller

NGINX publishes a Docker image for the open source NGINX Ingress Controller, but we’re using NGINX Plus and so need to build a private Docker image with the certificate and key associated with our NGINX Plus subscription. We’re following the instructions at the GitHub repo for the NGINX Ingress Controller, but replacing the contents of the Dockerfile provided in that repo, as detailed below.

Note: Be sure to store the image in a private Docker Hub repository, not a standard public repo; otherwise your NGINX Plus credentials are exposed and subject to misuse. A free Docker Hub account entitles you to one private repo.

Replace the contents of the standard Dockerfile provided in the kubernetes-ingress repo with the following text. One important difference is that we include the NGINX JavaScript (njs) module in the Docker image by adding the nginx-plus-module-njs argument to the second apt-get install command.

    FROM debian:stretch-slim

    LABEL maintainer="NGINX Docker Maintainers "

    ENV NGINX_PLUS_VERSION 18-1~stretch
    ARG IC_VERSION

    # Download certificate and key from the customer portal (https://cs.nginx.com)
    # and copy to the build context
    COPY nginx-repo.crt /etc/ssl/nginx/
    COPY nginx-repo.key /etc/ssl/nginx/

    # Make sure the certificate and key have correct permissions
    RUN chmod 644 /etc/ssl/nginx/*

    # Install NGINX Plus
    RUN set -x \
      && apt-get update \
      && apt-get install --no-install-recommends --no-install-suggests -y apt-transport-https ca-certificates gnupg1 \
      && \
      NGINX_GPGKEY=573BFD6B3D8FBC641079A6ABABF5BD827BD9BF62; \
      found=''; \
      for server in \
        ha.pool.sks-keyservers.net \
        hkp://keyserver.ubuntu.com:80 \
        hkp://p80.pool.sks-keyservers.net:80 \
        pgp.mit.edu \
      ; do \
        echo "Fetching GPG key $NGINX_GPGKEY from $server"; \
        apt-key adv --keyserver "$server" --keyserver-options timeout=10 --recv-keys "$NGINX_GPGKEY" && found=yes && break; \
      done; \
      test -z "$found" && echo >&2 "error: failed to fetch GPG key $NGINX_GPGKEY" && exit 1; \
      echo "Acquire::https::plus-pkgs.nginx.com::Verify-Peer \"true\";" >> /etc/apt/apt.conf.d/90nginx \
      && echo "Acquire::https::plus-pkgs.nginx.com::Verify-Host \"true\";" >> /etc/apt/apt.conf.d/90nginx \
      && echo "Acquire::https::plus-pkgs.nginx.com::SslCert \"/etc/ssl/nginx/nginx-repo.crt\";" >> /etc/apt/apt.conf.d/90nginx \
      && echo "Acquire::https::plus-pkgs.nginx.com::SslKey \"/etc/ssl/nginx/nginx-repo.key\";" >> /etc/apt/apt.conf.d/90nginx \
      && echo "Acquire::https::plus-pkgs.nginx.com::User-Agent \"k8s-ic-$IC_VERSION-apt\";" >> /etc/apt/apt.conf.d/90nginx \
      && printf "deb https://plus-pkgs.nginx.com/debian stretch nginx-plus\n" > /etc/apt/sources.list.d/nginx-plus.list \
      && apt-get update && apt-get install -y nginx-plus=${NGINX_PLUS_VERSION} nginx-plus-module-njs \
      && apt-get remove --purge --auto-remove -y gnupg1 \
      && rm -rf /var/lib/apt/lists/* \
      && rm -rf /etc/ssl/nginx \
      && rm /etc/apt/apt.conf.d/90nginx /etc/apt/sources.list.d/nginx-plus.list

    # Forward NGINX access and error logs to stdout and stderr of the Ingress
    # controller process
    RUN ln -sf /proc/1/fd/1 /var/log/nginx/access.log \
      && ln -sf /proc/1/fd/1 /var/log/nginx/stream-access.log \
      && ln -sf /proc/1/fd/1 /var/log/nginx/oidc_auth.log \
      && ln -sf /proc/1/fd/2 /var/log/nginx/error.log \
      && ln -sf /proc/1/fd/2 /var/log/nginx/oidc_error.log

    EXPOSE 80 443

    COPY nginx-ingress internal/configs/version1/nginx-plus.ingress.tmpl internal/configs/version1/nginx-plus.tmpl internal/configs/version2/nginx-plus.virtualserver.tmpl /

    RUN rm /etc/nginx/conf.d/* \
      && mkdir -p /etc/nginx/secrets

    # Uncomment the line below to add the default.pem file to the image
    # and use it as a certificate and key for the default server
    # ADD default.pem /etc/nginx/secrets/default

    ENTRYPOINT ["/nginx-ingress"]

We tag the image with 1.5.0-oidc and push it to a private repo on Docker Hub under the name nginx-plus:1.5.0-oidc. Our private repo is called magicalyak, but we’ll remind you to substitute the name of your private repo as necessary below.

To prepare the Kubernetes nodes for the custom Docker image, we run the following commands on each of them. This enables Kubernetes to place the Ingress resource on the node of its choice. (You can also run the commands on just one node and then direct the Ingress resource to run exclusively on that node.) In the final command, substitute the name of your private repo for magicalyak:

    $ sudo groupadd docker
    $ sudo usermod -aG docker $USER
    $ docker login # this prompts you to enter your Docker username and password
    $ docker pull magicalyak/nginx-plus:1.5.0-oidc

At this point the Kubernetes nodes are running.

In order to use the Kubernetes dashboard, we run the following commands. The first enables kubectl on the local machine (the MacBook in this example). The second returns the URL for the dashboard, and the third returns the token we need to access the dashboard (we’ll paste it into the token field on the dashboard login page).

    $ cp inventory/mycluster/artifacts/admin.conf ~/.kube/config
    $ kubectl cluster-info # gives us the dashboard URL
    $ kubectl -n kube-system describe secrets \
        `kubectl -n kube-system get secrets | awk '/clusterrole-aggregation-controller/ {print $1}'` \
        | awk '/token:/ {print $2}'

Installing and Customizing the NGINX Plus Ingress Controller

We now install the NGINX Plus Ingress Controller in our Kubernetes cluster and customize the configuration for OIDC by incorporating the IDs and secret generated by Azure AD in Obtaining Credentials from the OpenID Connect Identity Provider.

Cloning the NGINX Plus Ingress Controller Repo

We first clone the kubernetes-ingress GitHub repo and change directory to the deployments subdirectory. Then we run kubectl commands to create the resources needed: the namespace and service account, the default server secret, the custom resource definition, and role‑based access control (RBAC).

    $ git clone https://github.com/nginxinc/kubernetes-ingress
    $ cd kubernetes-ingress/deployments
    $ kubectl create -f common/ns-and-sa.yaml
    $ kubectl create -f common/default-server-secret.yaml
    $ kubectl create -f common/custom-resource-definitions.yaml
    $ kubectl create -f rbac/rbac.yaml

Creating the NGINX ConfigMap

Now we replace the contents of the common/nginx-config.yaml file with the following, a ConfigMap that enables the njs module and includes configuration for OIDC.

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: nginx-config
      namespace: nginx-ingress
    data:
      #external-status-address: 172.16.186.101
      main-snippets: |
        load_module modules/ngx_http_js_module.so;
      ingress-template: |
        # configuration for {{.Ingress.Namespace}}/{{.Ingress.Name}}
        {{- if index $.Ingress.Annotations "custom.nginx.org/enable-oidc"}}
        {{$oidc := index $.Ingress.Annotations "custom.nginx.org/enable-oidc"}}
        {{- if eq $oidc "True"}}
        {{- $kv_zone_size := index $.Ingress.Annotations "custom.nginx.org/keyval-zone-size"}}
        {{- $refresh_time := index $.Ingress.Annotations "custom.nginx.org/refresh-token-timeout"}}
        {{- $session_time := index $.Ingress.Annotations "custom.nginx.org/session-token-timeout"}}
        {{- if not $kv_zone_size}}{{$kv_zone_size = "1M"}}{{end}}
        {{- if not $refresh_time}}{{$refresh_time = "8h"}}{{end}}
        {{- if not $session_time}}{{$session_time = "1h"}}{{end}}
        keyval_zone zone=opaque_sessions:{{$kv_zone_size}} state=/var/lib/nginx/state/opaque_sessions.json timeout={{$session_time}};
        keyval_zone zone=refresh_tokens:{{$kv_zone_size}} state=/var/lib/nginx/state/refresh_tokens.json timeout={{$refresh_time}};
        keyval $cookie_auth_token $session_jwt zone=opaque_sessions;
        keyval $cookie_auth_token $refresh_token zone=refresh_tokens;
        keyval $request_id $new_session zone=opaque_sessions;
        keyval $request_id $new_refresh zone=refresh_tokens;
        proxy_cache_path /var/cache/nginx/jwk levels=1 keys_zone=jwk:64k max_size=1m;
        map $refresh_token $no_refresh {
          "" 1;
          "-" 1;
          default 0;
        }
        log_format main_jwt '$remote_addr $jwt_claim_sub $remote_user [$time_local] "$request" $status '
                            '$body_bytes_sent "$http_referer" "$http_user_agent" "$http_x_forwarded_for"';
        js_include conf.d/openid_connect.js;
        js_set $requestid_hash hashRequestId;
        {{end}}{{end -}}
        {{range $upstream := .Upstreams}}
        upstream {{$upstream.Name}} {
          zone {{$upstream.Name}} 256k;
          {{if $upstream.LBMethod }}{{$upstream.LBMethod}};{{end}}
          {{range $server := $upstream.UpstreamServers}}
          server {{$server.Address}}:{{$server.Port}} max_fails={{$server.MaxFails}} fail_timeout={{$server.FailTimeout}}
            {{- if $server.SlowStart}} slow_start={{$server.SlowStart}}{{end}}{{if $server.Resolve}} resolve{{end}};{{end}}
          {{if $upstream.StickyCookie}}
          sticky cookie {{$upstream.StickyCookie}};
          {{end}}
          {{if $.Keepalive}}keepalive {{$.Keepalive}};{{end}}
          {{- if $upstream.UpstreamServers -}}
          {{- if $upstream.Queue}}
          queue {{$upstream.Queue}} timeout={{$upstream.QueueTimeout}}s;
          {{- end -}}
          {{- end}}
        }
        {{- end}}
        {{range $server := .Servers}}
        server {
          {{if not $server.GRPCOnly}}
          {{range $port := $server.Ports}}
          listen {{$port}}{{if $server.ProxyProtocol}} proxy_protocol{{end}};
          {{- end}}
          {{end}}
          {{if $server.SSL}}
          {{- range $port := $server.SSLPorts}}
          listen {{$port}} ssl{{if $server.HTTP2}} http2{{end}}{{if $server.ProxyProtocol}} proxy_protocol{{end}};
          {{- end}}
          ssl_certificate {{$server.SSLCertificate}};
          ssl_certificate_key {{$server.SSLCertificateKey}};
          {{if $server.SSLCiphers}}
          ssl_ciphers {{$server.SSLCiphers}};
          {{end}}
          {{end}}
          {{range $setRealIPFrom := $server.SetRealIPFrom}}
          set_real_ip_from {{$setRealIPFrom}};{{end}}
          {{if $server.RealIPHeader}}real_ip_header {{$server.RealIPHeader}};{{end}}
          {{if $server.RealIPRecursive}}real_ip_recursive on;{{end}}
          server_tokens "{{$server.ServerTokens}}";
          server_name {{$server.Name}};
          status_zone {{$server.StatusZone}};
          {{if not $server.GRPCOnly}}
          {{range $proxyHideHeader := $server.ProxyHideHeaders}}
          proxy_hide_header {{$proxyHideHeader}};{{end}}
          {{range $proxyPassHeader := $server.ProxyPassHeaders}}
          proxy_pass_header {{$proxyPassHeader}};{{end}}
          {{end}}
          {{if $server.SSL}}
          {{if not $server.GRPCOnly}}
          {{- if $server.HSTS}}
          set $hsts_header_val "";
          proxy_hide_header Strict-Transport-Security;
          {{- if $server.HSTSBehindProxy}}
          if ($http_x_forwarded_proto = 'https') {
          {{else}}
          if ($https = on) {
          {{- end}}
            set $hsts_header_val "max-age={{$server.HSTSMaxAge}}; {{if $server.HSTSIncludeSubdomains}}includeSubDomains; {{end}}preload";
          }
          add_header Strict-Transport-Security "$hsts_header_val" always;
          {{end}}
          {{- if $server.SSLRedirect}}
          if ($scheme = http) {
            return 301 https://$host:{{index $server.SSLPorts 0}}$request_uri;
          }
          {{- end}}
          {{end}}
          {{- end}}
          {{- if $server.RedirectToHTTPS}}
          if ($http_x_forwarded_proto = 'http') {
            return 301 https://$host$request_uri;
          }
          {{- end}}
          {{with $jwt := $server.JWTAuth}}
          auth_jwt_key_file {{$jwt.Key}};
          auth_jwt "{{.Realm}}"{{if $jwt.Token}} token={{$jwt.Token}}{{end}};
          {{- if $jwt.RedirectLocationName}}
          error_page 401 {{$jwt.RedirectLocationName}};
          {{end}}
          {{end}}
          {{- if $server.ServerSnippets}}
          {{range $value := $server.ServerSnippets}}
          {{$value}}{{end}}
          {{- end}}
          {{- range $healthCheck := $server.HealthChecks}}
          location @hc-{{$healthCheck.UpstreamName}} {
            {{- range $name, $header := $healthCheck.Headers}}
            proxy_set_header {{$name}} "{{$header}}";
            {{- end }}
            proxy_connect_timeout {{$healthCheck.TimeoutSeconds}}s;
            proxy_read_timeout {{$healthCheck.TimeoutSeconds}}s;
            proxy_send_timeout {{$healthCheck.TimeoutSeconds}}s;
            proxy_pass {{$healthCheck.Scheme}}://{{$healthCheck.UpstreamName}};
            health_check {{if $healthCheck.Mandatory}}mandatory {{end}}uri={{$healthCheck.URI}} interval=
              {{- $healthCheck.Interval}}s fails={{$healthCheck.Fails}} passes={{$healthCheck.Passes}};
          }
          {{end -}}
          {{- range $location := $server.JWTRedirectLocations}}
          location {{$location.Name}} {
            internal;
            return 302 {{$location.LoginURL}};
          }
          {{end -}}
          {{- if index $.Ingress.Annotations "custom.nginx.org/enable-oidc"}}
          {{- $oidc_resolver := index $.Ingress.Annotations "custom.nginx.org/oidc-resolver-address"}}
          {{- if not $oidc_resolver}}{{$oidc_resolver = "8.8.8.8"}}{{end}}
          resolver {{$oidc_resolver}};
          subrequest_output_buffer_size 32k;
          {{- $oidc_jwt_keyfile := index $.Ingress.Annotations "custom.nginx.org/oidc-jwt-keyfile"}}
          {{- $oidc_logout_redirect := index $.Ingress.Annotations "custom.nginx.org/oidc-logout-redirect"}}
          {{- $oidc_authz_endpoint := index $.Ingress.Annotations "custom.nginx.org/oidc-authz-endpoint"}}
          {{- $oidc_token_endpoint := index $.Ingress.Annotations "custom.nginx.org/oidc-token-endpoint"}}
          {{- $oidc_client := index $.Ingress.Annotations "custom.nginx.org/oidc-client"}}
          {{- $oidc_client_secret := index $.Ingress.Annotations "custom.nginx.org/oidc-client-secret"}}
          {{ $oidc_hmac_key := index $.Ingress.Annotations "custom.nginx.org/oidc-hmac-key"}}
          set $oidc_jwt_keyfile "{{$oidc_jwt_keyfile}}";
          set $oidc_logout_redirect "{{$oidc_logout_redirect}}";
          set $oidc_authz_endpoint "{{$oidc_authz_endpoint}}";
          set $oidc_token_endpoint "{{$oidc_token_endpoint}}";
          set $oidc_client "{{$oidc_client}}";
          set $oidc_client_secret "{{$oidc_client_secret}}";
          set $oidc_hmac_key "{{$oidc_hmac_key}}";
          {{end -}}
          {{range $location := $server.Locations}}
          location {{$location.Path}} {
            {{with $location.MinionIngress}}
            # location for minion {{$location.MinionIngress.Namespace}}/{{$location.MinionIngress.Name}}
            {{end}}
            {{if $location.GRPC}}
            {{if not $server.GRPCOnly}}
            error_page 400 @grpcerror400;
            error_page 401 @grpcerror401;
            error_page 403 @grpcerror403;
            error_page 404 @grpcerror404;
            error_page 405 @grpcerror405;
            error_page 408 @grpcerror408;
            error_page 414 @grpcerror414;
            error_page 426 @grpcerror426;
            error_page 500 @grpcerror500;
            error_page 501 @grpcerror501;
            error_page 502 @grpcerror502;
            error_page 503 @grpcerror503;
            error_page 504 @grpcerror504;
            {{end}}
            {{- if $location.LocationSnippets}}
            {{range $value := $location.LocationSnippets}}
            {{$value}}{{end}}
            {{- end}}
            {{with $jwt := $location.JWTAuth}}
            auth_jwt_key_file {{$jwt.Key}};
            auth_jwt "{{.Realm}}"{{if $jwt.Token}} token={{$jwt.Token}}{{end}};
            {{end}}
            grpc_connect_timeout {{$location.ProxyConnectTimeout}};
            grpc_read_timeout {{$location.ProxyReadTimeout}};
            grpc_set_header Host $host;
            grpc_set_header X-Real-IP $remote_addr;
            grpc_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            grpc_set_header X-Forwarded-Host $host;
            grpc_set_header X-Forwarded-Port $server_port;
            grpc_set_header X-Forwarded-Proto $scheme;
            {{- if $location.ProxyBufferSize}}
            grpc_buffer_size {{$location.ProxyBufferSize}};
            {{- end}}
            {{if $location.SSL}}
            grpc_pass grpcs://{{$location.Upstream.Name}};
            {{else}}
            grpc_pass grpc://{{$location.Upstream.Name}};
            {{end}}
            {{else}}
            proxy_http_version 1.1;
            {{if $location.Websocket}}
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection $connection_upgrade;
            {{- else}}
            {{- if $.Keepalive}}proxy_set_header Connection "";{{end}}
            {{- end}}
            {{- if $location.LocationSnippets}}
            {{range $value := $location.LocationSnippets}}
            {{$value}}{{end}}
            {{- end}}
            {{ with $jwt := $location.JWTAuth }}
            auth_jwt_key_file {{$jwt.Key}};
            auth_jwt "{{.Realm}}"{{if $jwt.Token}} token={{$jwt.Token}}{{end}};
            {{if $jwt.RedirectLocationName}}
            error_page 401 {{$jwt.RedirectLocationName}};
            {{end}}
            {{end}}
            {{- if index $.Ingress.Annotations "custom.nginx.org/enable-oidc"}}
            auth_jwt "" token=$session_jwt;
            auth_jwt_key_request /_jwks_uri;
            error_page 401 @oidc_auth;
            {{end}}
            proxy_connect_timeout {{$location.ProxyConnectTimeout}};
            proxy_read_timeout {{$location.ProxyReadTimeout}};
            client_max_body_size {{$location.ClientMaxBodySize}};
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Host $host;
            proxy_set_header X-Forwarded-Port $server_port;
            proxy_set_header X-Forwarded-Proto {{if $server.RedirectToHTTPS}}https{{else}}$scheme{{end}};
            proxy_buffering {{if $location.ProxyBuffering}}on{{else}}off{{end}};
            {{- if $location.ProxyBuffers}}
            proxy_buffers {{$location.ProxyBuffers}};
            {{- end}}
            {{- if $location.ProxyBufferSize}}
            proxy_buffer_size {{$location.ProxyBufferSize}};
            {{- end}}
            {{- if $location.ProxyMaxTempFileSize}}
            proxy_max_temp_file_size {{$location.ProxyMaxTempFileSize}};
            {{- end}}
            {{if $location.SSL}}
            proxy_pass https://{{$location.Upstream.Name}}{{$location.Rewrite}};
            {{else}}
            proxy_pass http://{{$location.Upstream.Name}}{{$location.Rewrite}};
            {{end}}
            {{end}}
          }{{end}}
          {{if $server.GRPCOnly}}
          error_page 400 @grpcerror400;
          error_page 401 @grpcerror401;
          error_page 403 @grpcerror403;
          error_page 404 @grpcerror404;
          error_page 405 @grpcerror405;
          error_page 408 @grpcerror408;
          error_page 414 @grpcerror414;
          error_page 426 @grpcerror426;
          error_page 500 @grpcerror500;
          error_page 501 @grpcerror501;
          error_page 502 @grpcerror502;
          error_page 503 @grpcerror503;
          error_page 504 @grpcerror504;
          {{end}}
          {{if $server.HTTP2}}
          location @grpcerror400 { default_type application/grpc; return 400 "\n"; }
          location @grpcerror401 { default_type application/grpc; return 401 "\n"; }
          location @grpcerror403 { default_type application/grpc; return 403 "\n"; }
          location @grpcerror404 { default_type application/grpc; return 404 "\n"; }
          location @grpcerror405 { default_type application/grpc; return 405 "\n"; }
          location @grpcerror408 { default_type application/grpc; return 408 "\n"; }
          location @grpcerror414 { default_type application/grpc; return 414 "\n"; }
          location @grpcerror426 { default_type application/grpc; return 426 "\n"; }
          location @grpcerror500 { default_type application/grpc; return 500 "\n"; }
          location @grpcerror501 { default_type application/grpc; return 501 "\n"; }
          location @grpcerror502 { default_type application/grpc; return 502 "\n"; }
          location @grpcerror503 { default_type application/grpc; return 503 "\n"; }
          location @grpcerror504 { default_type application/grpc; return 504 "\n"; }
          {{end}}
          {{- if index $.Ingress.Annotations "custom.nginx.org/enable-oidc" -}}
          include conf.d/openid_connect.server_conf;
          {{- end}}
        }{{end}}

Now we deploy the ConfigMap in Kubernetes, and change directory back up to kubernetes-ingress.

    $ kubectl create -f common/nginx-config.yaml
    $ cd ..

Incorporating OpenID Connect into the NGINX Plus Ingress Controller

Since we are using OIDC resources, we’re taking advantage of the OIDC reference implementation provided by NGINX on GitHub. After cloning the nginx-openid-connect repo inside our existing kubernetes-ingress repo, we create ConfigMaps from the openid_connect.js and openid_connect.server_conf files.

    $ git clone https://github.com/nginxinc/nginx-openid-connect
    $ cd nginx-openid-connect
    $ kubectl create configmap -n nginx-ingress openid-connect.js --from-file=openid_connect.js
    $ kubectl create configmap -n nginx-ingress openid-connect.server-conf --from-file=openid_connect.server_conf

Now we incorporate the two files into our Ingress controller deployment as Kubernetes volumes of type ConfigMap, by adding the following directives to the existing nginx-plus-ingress.yaml file in the deployments/deployment subdirectory of our kubernetes-ingress repo:

    volumes:
    - name: openid-connect-js
      configMap:
        name: openid-connect.js
    - name: openid-connect-server-conf
      configMap:
        name: openid-connect.server-conf

We also add the following directives to nginx-plus-ingress.yaml to make the files accessible in the /etc/nginx/conf.d directory of our deployment:

    volumeMounts:
    - name: openid-connect-js
      mountPath: /etc/nginx/conf.d/openid_connect.js
      subPath: openid_connect.js
    - name: openid-connect-server-conf
      mountPath: /etc/nginx/conf.d/openid_connect.server_conf
      subPath: openid_connect.server_conf

Here’s the complete nginx-plus-ingress.yaml file for our deployment. If using it as the basis for your own deployment, replace magicalyak with the name of your private registry.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-ingress
      namespace: nginx-ingress
      labels:
        app: nginx-ingress
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-ingress
      template:
        metadata:
          labels:
            app: nginx-ingress
          #annotations:
          #  prometheus.io/scrape: "true"
          #  prometheus.io/port: "9113"
        spec:
          containers:
          - image: magicalyak/nginx-plus:1.5.0-oidc
            imagePullPolicy: IfNotPresent
            name: nginx-plus-ingress
            args:
              - -nginx-plus
              - -nginx-configmaps=$(POD_NAMESPACE)/nginx-config
              - -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret
              - -report-ingress-status
              #- -v=3 # Enables extensive logging. Useful for troubleshooting.
              #- -external-service=nginx-ingress
              #- -enable-leader-election
              #- -enable-prometheus-metrics
              #- -enable-custom-resources
            env:
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            ports:
            - name: http
              containerPort: 80
            - name: https
              containerPort: 443
            #- name: prometheus
            #  containerPort: 9113
            volumeMounts:
            - name: openid-connect-js
              mountPath: /etc/nginx/conf.d/openid_connect.js
              subPath: openid_connect.js
            - name: openid-connect-server-conf
              mountPath: /etc/nginx/conf.d/openid_connect.server_conf
              subPath: openid_connect.server_conf
          serviceAccountName: nginx-ingress
          volumes:
          - name: openid-connect-js
            configMap:
              name: openid-connect.js
          - name: openid-connect-server-conf
            configMap:
              name: openid-connect.server-conf

Creating the Kubernetes Service

We also need to define a Kubernetes service by creating a new file called nginx-plus-service.yaml in the deployments/service subdirectory of our kubernetes-ingress repo. We set the `externalIPs` field to the alias IP address (172.16.186.100) we assigned to node1 in Installing and Configuring Kubernetes, but you could use NodePorts or other options instead.

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx-ingress
      namespace: nginx-ingress
      labels:
        svc: nginx-ingress
    spec:
      type: ClusterIP
      clusterIP:
      externalIPs:
      - 172.16.186.100
      ports:
      - name: http
        port: 80
        targetPort: http
        protocol: TCP
      - name: https
        port: 443
        targetPort: https
        protocol: TCP
      selector:
        app: nginx-ingress

Deploying the Ingress Controller

With all of the YAML files in place, we run the following commands to deploy the Ingress controller and service resources in Kubernetes:

    $ cd ../deployments
    $ kubectl create -f deployment/nginx-plus-ingress.yaml
    $ kubectl create -f service/nginx-plus-service.yaml
    $ cd ..

At this point our Ingress controller is installed and we can focus on creating the sample resource for which we’re using OIDC authentication.

Setting Up the Sample Application to Use OpenID Connect

To test our OIDC authentication setup, we’re using a very simple application called cafe, which has tea and coffee service endpoints. It’s included in the examples/complete-example directory of the kubernetes-ingress repo on GitHub, and you can read more about it in NGINX and NGINX Plus Ingress Controllers for Kubernetes Load Balancing on our blog.

We need to make some modifications to the sample app, however – specifically, we need to insert the values we obtained from Azure AD into the YAML file for the application, cafe-ingress.yaml in the examples/complete-example directory.

We’re making two sets of changes, as shown in the full file below:

- We’re adding an `annotations` section. The file below uses the `{client_key}`, `{tenant_key}`, and `{client_secret}` variables to represent the values obtained from an IdP. To make it easier to track which values we’re referring to, in the list we’ve specified the literal values we obtained from Azure AD in the indicated step in Obtaining Credentials from the OpenID Connect Identity Provider. When creating your own deployment, substitute the values you obtain from Azure AD (or other IdP).

  - `{client_key}` – The value in the Application (client) ID field on the Azure AD confirmation page. For our deployment, it’s `a2b20239-2dce-4306-a385-ac9xxx`, as reported in Step 6.
  - `{tenant_key}` – The value in the Directory (tenant) ID field on the Azure AD confirmation page. For our deployment, it’s `dd3dfd2f-6a3b-40d1-9be0-bf8xxx`, as reported in Step 6.
  - `{client_secret}` – The URL-encoded version of the value in the Client secrets section in Azure AD. For our deployment, it’s `kn_3VLh%5D1I3ods%2A%5BDDmMxNmg8xxx`, as noted in Step 9.

  In addition, note that the value in the `custom.nginx.org/oidc-hmac-key` field is just an example. Substitute your own unique value that ensures nonce values are unpredictable.

- We’re changing the value in the `hosts` and `host` fields to cafe.nginx.net, and adding an entry for that domain to the /etc/hosts file on each of the four Kubernetes nodes, specifying the IP address from the `externalIPs` field in nginx-plus-service.yaml. In our deployment, this is the alias IP address 172.16.186.100 that we assigned to node1 in Installing and Configuring Kubernetes.
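The tenant‑specific annotation values and the HMAC key can be generated rather than typed by hand. The sketch below is our own illustration: the function names are hypothetical, the endpoint paths are the Azure AD v2.0 paths used in this post, and it assumes the `head` and `base64` utilities are available. Encoding 18 random bytes yields a 24‑character key, the same length as the sample key in this post.

```shell
#!/usr/bin/env bash
# azure_oidc_annotations TENANT_ID
# Hypothetical helper: prints the four endpoint annotations for one
# Azure AD tenant, using the v2.0 endpoint paths shown in this post.
azure_oidc_annotations() {
  local tenant="$1"
  local base="https://login.microsoftonline.com/${tenant}"
  printf 'custom.nginx.org/oidc-jwt-keyfile: "%s/discovery/v2.0/keys"\n' "$base"
  printf 'custom.nginx.org/oidc-logout-redirect: "%s/oauth2/v2.0/logout"\n' "$base"
  printf 'custom.nginx.org/oidc-authz-endpoint: "%s/oauth2/v2.0/authorize"\n' "$base"
  printf 'custom.nginx.org/oidc-token-endpoint: "%s/oauth2/v2.0/token"\n' "$base"
}

# new_hmac_key
# Hypothetical helper: 18 random bytes, base64-encoded, which gives a
# 24-character value with no padding.
new_hmac_key() {
  head -c 18 /dev/urandom | base64
}
```

For example, `azure_oidc_annotations dd3dfd2f-6a3b-40d1-9be0-bf8xxx` prints lines that can be pasted under `annotations`, and `new_hmac_key` prints a fresh value for `custom.nginx.org/oidc-hmac-key`.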

    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: cafe-ingress
      annotations:
        custom.nginx.org/enable-oidc: "True"
        custom.nginx.org/keyval-zone-size: "1m" #(default 1m)
        custom.nginx.org/refresh-token-timeout: "8h" #(default 8h)
        custom.nginx.org/session-token-timeout: "1h" #(default 1h)
        custom.nginx.org/oidc-resolver-address: "8.8.8.8" #(default 8.8.8.8)
        custom.nginx.org/oidc-jwt-keyfile: "https://login.microsoftonline.com/{tenant_key}/discovery/v2.0/keys"
        custom.nginx.org/oidc-logout-redirect: "https://login.microsoftonline.com/{tenant_key}/oauth2/v2.0/logout"
        custom.nginx.org/oidc-authz-endpoint: "https://login.microsoftonline.com/{tenant_key}/oauth2/v2.0/authorize"
        custom.nginx.org/oidc-token-endpoint: "https://login.microsoftonline.com/{tenant_key}/oauth2/v2.0/token"
        custom.nginx.org/oidc-client: "{client_key}"
        custom.nginx.org/oidc-client-secret: "{client_secret}"
        custom.nginx.org/oidc-hmac-key: "vC5FabzvYvFZFBzxtRCYDYX+"
    spec:
      tls:
      - hosts:
        - cafe.nginx.net
        secretName: cafe-secret
      rules:
      - host: cafe.nginx.net
        http:
          paths:
          - path: /tea
            backend:
              serviceName: tea-svc
              servicePort: 80
          - path: /coffee
            backend:
              serviceName: coffee-svc
              servicePort: 80

We now create the cafe resource in Kubernetes:

    $ cd examples/complete-example
    $ kubectl create -f cafe-secret.yaml
    $ kubectl create -f cafe.yaml
    $ kubectl create -f cafe-ingress.yaml
    $ cd ../..

To verify that the OIDC authentication process is working, we navigate to https://cafe.nginx.net/tea in a browser. It prompts for our login credentials, authenticates us, and displays some basic information generated by the tea service. For an example, see NGINX and NGINX Plus Ingress Controllers for Kubernetes Load Balancing.
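The same check can be made from the command line. The sketch below is our own and rests on an assumption about the setup above: that an unauthenticated request to a protected path is answered with a 302 redirect to the Azure AD authorization endpoint. The function name `classify_oidc_response` is hypothetical; it only interprets a status code and redirect URL, and the commented lines show one way to obtain them with curl.

```shell
#!/usr/bin/env bash
# classify_oidc_response STATUS [REDIRECT_URL]
# Hypothetical helper: interprets the response to an unauthenticated request.
classify_oidc_response() {
  local status="$1" redirect_url="${2:-}"
  case "$status" in
    302)
      case "$redirect_url" in
        https://login.microsoftonline.com/*)
          echo "redirected to the Azure AD login page" ;;
        *)
          echo "redirected elsewhere: ${redirect_url}" ;;
      esac ;;
    200)
      echo "served without a redirect (is OIDC enabled for this Ingress?)" ;;
    *)
      echo "unexpected status: ${status}" ;;
  esac
}

# Example use from a host that resolves cafe.nginx.net (-k skips certificate
# verification, which suits only a test certificate):
#   read -r status url < <(curl -sk -o /dev/null \
#       -w '%{http_code} %{redirect_url}\n' https://cafe.nginx.net/tea)
#   classify_oidc_response "$status" "$url"
```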

The post Using the NGINX Plus Ingress Controller for Kubernetes with OpenID Connect Authentication from Azure AD appeared first on NGINX.
