Maximizing Drupal 8 Performance with NGINX – Part II: Caching and Load Balancing

The following is adapted from a webinar hosted on January 20th, 2016 by Floyd Smith and Faisal Memon. This blog post is the second of two parts, and is focused on caching and using NGINX as a load balancer; the first part, focused on site architecture and configuring NGINX for Drupal 8, can be found here.

Table of Contents

22:18 Introduction

23:20 Why Cache with NGINX?

24:26 Microcaching with NGINX

26:06 Configuring NGINX for Microcaching

28:00 Optimized Microcaching with NGINX

30:48 Cache Purging with NGINX Plus

32:00 Load Balancing with NGINX

35:23 Session Persistence with NGINX Plus

38:51 SSL Offloading with NGINX

40:57 HTTP/2 With NGINX

42:30 Summary

22:18 Introduction

Faisal: Once you have a server running Drupal 8 and NGINX properly, you can start looking at caching to improve performance. Caching provides many benefits to your site, but there are two main ones.

First, by caching the content, you’re moving it closer to the user, so they can get it faster. Second, you’re offloading work from the Drupal servers: a request that would previously have caused a PHP script to be executed on a Drupal server is now intercepted and served by NGINX, so you get that performance improvement too. Another, less well-known benefit of caching is that you’re also saving compute cycles deeper in the backend.

23:20 Why Cache with NGINX?

This screenshot is from a study the BBC did with their Radix caching server. In a post on the BBC Internet blog, they laid out all the details of their configuration and what they did to get this performance difference.

In the BBC’s testing, they found that with NGINX as a drop-in replacement for Varnish, they saw five times more throughput. Varnish is an open source cousin of ours and we’re not trying to knock it, but these kinds of results show how the event-driven architecture of NGINX that Floyd touched on earlier, and the way that we handle traffic within NGINX, allow us to scale to a level that other products and projects can’t always reach.

24:26 Microcaching with NGINX

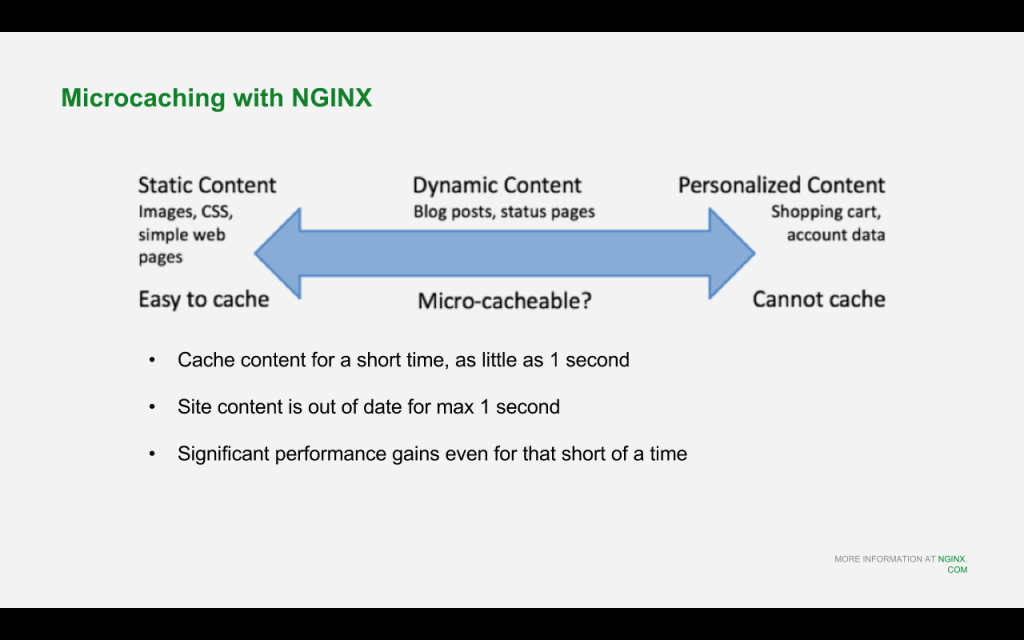

Microcaching is a term you may or may not have heard. It means caching content that sits somewhere in the middle of the scale shown here. On the left is static content, such as images and CSS, which is typically easy to cache and quite often put into some sort of CDN. All the way on the right is personalized content, such as your shopping cart or your account data, which you never want to cache.

In-between those two extremes, there’s a whole load of content, blog posts, status pages, that we consider dynamic content, but dynamic content that is cacheable. By microcaching this content, which is to say caching it for a short amount of time – as little as one second – we can get significant performance gains and reduce the load on the Drupal servers.

The nice part of microcaching is you could set your microcache validity to one second and your site content will be out of date for a maximum of one second. That takes away a lot of the typical worries you have with caching, such as accidentally presenting old stale content to your users or having to go through all the hassle of validating and purging the cache every time you make a change. If you’re doing microcaching, updates can be taken care of just by leveraging existing caching mechanisms.

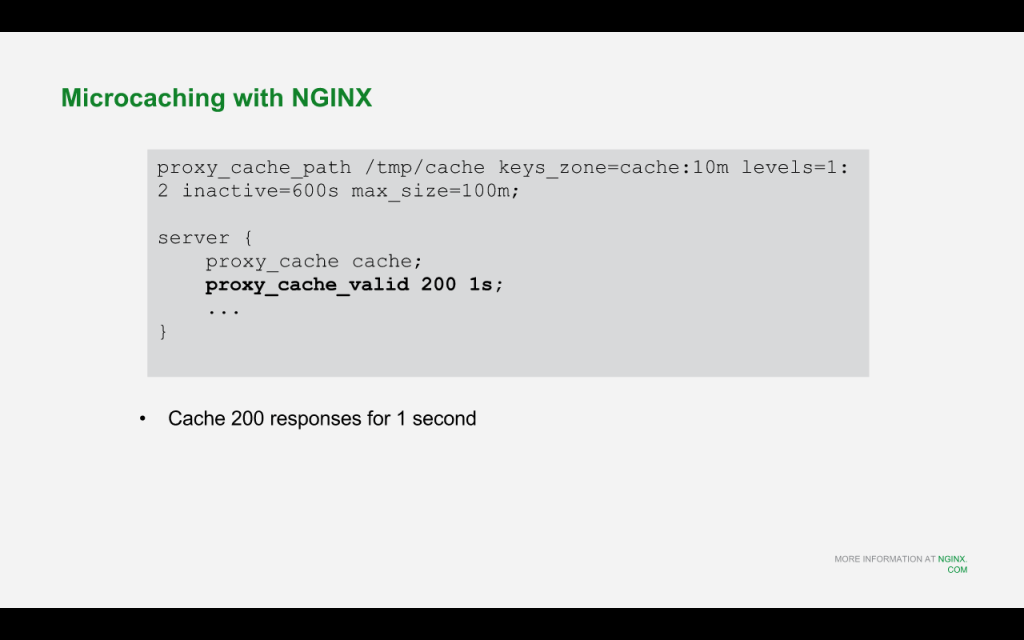

26:06 Configuring NGINX for Microcaching

Here’s a very simple NGINX configuration that enables caching. proxy_cache_valid is the magic line here: it takes all the content that is cacheable and says that we’re going to cache it for one second, and then mark it as stale.

There are some other settings at the top that say that if content is inactive for 600 seconds, or in other words no one has accessed it for 10 minutes, it’s going to be deleted from the NGINX cache, whether it’s stale or not.

So there are two separate concepts here. There’s the idea of a stale cache entry. This is set by the proxy_cache_valid directive, which marks an entry as stale but does not delete it from the NGINX cache. It just sits there as stale content, which can be desirable. We’ll see why that matters in a few minutes. And there’s the inactive parameter of the proxy_cache_path directive, which deletes content, whether or not it’s stale, if it has not been accessed by a user for a given amount of time; in this case, 10 minutes.
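The slide with this configuration is not reproduced here, so the following is only a minimal sketch of what such a setup might look like. The cache path, the zone name `cache`, the upstream name `drupal`, and the backend address are assumptions chosen for illustration; the fragment belongs inside the `http` context.

```nginx
# Illustrative values: /tmp/cache, the zone name "cache", and the
# backend address are assumptions, not taken from the webinar.
# inactive=600s removes entries nobody has requested for 10 minutes,
# whether they are stale or not.
proxy_cache_path /tmp/cache keys_zone=cache:10m levels=1:2
                 inactive=600s max_size=100m;

upstream drupal {
    server 127.0.0.1:8080;      # hypothetical Drupal backend
}

server {
    listen 80;

    proxy_cache       cache;    # use the zone defined above
    proxy_cache_valid 200 1s;   # 200 responses stay fresh for 1 second,
                                # then are marked stale (not deleted)

    location / {
        proxy_pass http://drupal;
    }
}
```

Note that `proxy_cache_valid` and `inactive` act independently: the first controls freshness, the second controls eviction.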

28:00 Optimized Microcaching with NGINX

That’s how we enable microcaching on a basic level within NGINX. Then there are some directives that we determined, based on testing, can improve performance even more in the microcaching case.

proxy_cache_lock

The first directive, proxy_cache_lock, says that if there are multiple simultaneous requests for the same uncached or stale content – in other words, content that has to be refreshed from Drupal – only the first one of those requests is going to be allowed through, and the subsequent requests for that same content are going to be queued up. That way, when that first request is satisfied, the other requests will get the content from the cache. This saves a significant amount of work on the backend.

proxy_cache_use_stale

This is a fairly powerful directive. proxy_cache_use_stale allows you to serve stale content in various scenarios when fresh content is not available. In this case, we’re restricting it to updating, so we’re instructing NGINX to serve stale content when a cached entry is in the process of being updated. So if I have a user come in for a request, and the content they’re requesting is within the cache but stale (since the one second timer has expired), NGINX is going to go ahead and refresh that content from the origin server. But, while that content is being refreshed, users that come in are going to be served the stale content.

Obviously, this is a little bit more dangerous as it could increase the amount of time that users see stale content. But since the cache is being refreshed every second, it’s not going to impact us a lot.

But proxy_cache_use_stale is a very powerful directive that goes beyond that use case. Let’s say that the origin servers have gone down, there’s a network partition, a server has crashed, or for whatever reason, NGINX can’t talk to that server to update the content. Rather than serving out an error page (typically a 502 or 504 when the backend is unreachable), NGINX can recognize that it still has this content in its cache. It’s stale, but you’d rather serve stale content than no content to your users. In this case you can configure NGINX using the proxy_cache_use_stale directive to cover for potential errors in the backend. So, proxy_cache_use_stale is a very powerful directive worth looking into for Drupal.
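As a rough sketch, the two directives discussed above could be added to a microcaching setup as follows. The cache path, zone name, upstream name, and backend address are illustrative assumptions, and the list of error conditions on the last `proxy_cache_use_stale` line is one possible choice rather than a recommendation from the webinar.

```nginx
# Illustrative names and paths (assumptions, not from the webinar).
proxy_cache_path /tmp/cache keys_zone=cache:10m levels=1:2
                 inactive=600s max_size=100m;

upstream drupal {
    server 127.0.0.1:8080;      # hypothetical Drupal backend
}

server {
    listen 80;

    proxy_cache       cache;
    proxy_cache_valid 200 1s;

    # Let only one request at a time populate a given cache entry;
    # concurrent requests for the same key wait for it.
    proxy_cache_lock on;

    # "updating": serve the stale copy while a refresh is in flight.
    # The remaining parameters also serve stale content when the
    # backend errors out, times out, or returns a 5xx response.
    proxy_cache_use_stale updating error timeout
                          http_500 http_502 http_503 http_504;

    location / {
        proxy_pass http://drupal;
    }
}
```

To restrict stale serving to the refresh case only, as described in the talk, keep just `proxy_cache_use_stale updating;`.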

That’s microcaching with NGINX in a nutshell, and if you want more details on microcaching with NGINX you can follow this link.

30:48 Cache Purging with NGINX Plus

Let’s talk quickly about cache purging in NGINX. There are different ways to accomplish this. You can compile a third-party module and include it into NGINX, and that’s fine. But for our customers with NGINX Plus, we’ve built in a cache purging module written by the same developers that made NGINX, with the backing of our support team and engineering staff.

So, for NGINX Plus it’s pre-bundled, and this is an example configuration that enables it. With this in place you can use a tool such as curl and specify the verb as PURGE instead of GET; in this case, that deletes everything under that root URL. You can also name a specific file to purge, for example, www.example.com/image.jpg. So NGINX Plus includes this powerful cache purging mechanism pre-built.
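The example configuration from the slide is not reproduced here. A sketch of a purge setup using the NGINX Plus `proxy_cache_purge` directive might look like the following; the cache path, zone name, backend address, and the `$purge_method` variable name are assumptions for illustration, and a production setup would normally also restrict which clients may send PURGE requests.

```nginx
# NGINX Plus only. Names, paths, and addresses are illustrative.
# purger=on lets a background process remove entries that match
# a wildcard purge key.
proxy_cache_path /tmp/cache keys_zone=mycache:10m levels=1:2
                 inactive=600s purger=on;

# Map the request method to a flag: 1 for PURGE, 0 for anything else.
map $request_method $purge_method {
    PURGE   1;
    default 0;
}

server {
    listen 80;
    server_name www.example.com;

    location / {
        proxy_pass        http://127.0.0.1:8080;  # hypothetical backend
        proxy_cache       mycache;
        proxy_cache_purge $purge_method;  # purge when the flag is non-zero
    }
}

# Example requests (run from a shell, not part of the config):
#   curl -X PURGE "http://www.example.com/*"          # everything under the root URL
#   curl -X PURGE "http://www.example.com/image.jpg"  # one specific file
```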

32:00 Load Balancing with NGINX

Let’s talk a little bit about using NGINX as a reverse proxy server.

Floyd has already talked about replacing Apache with NGINX, which offers many performance benefits, but there are times when that’s not feasible. You may be pretty embedded with your Apache config or it may be working well enough that you don’t want to go through the work of replacing it. That’s absolutely okay. You can use NGINX as a reverse proxy server in front of Apache and still gain the majority of the benefits of using NGINX. (Or, you can use NGINX as a reverse proxy server in front of NGINX, and get all the benefits of using NGINX.)

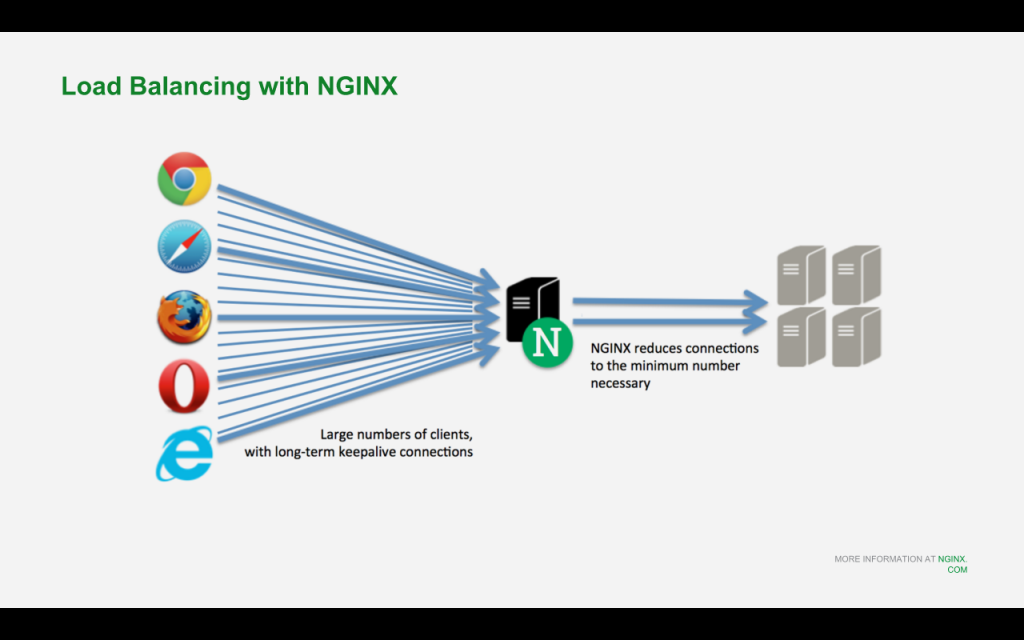

NGINX can be used as a one-to-one reverse proxy, where you put it in front of just a single Drupal server, or in a one-to-many scenario where you place NGINX in front of multiple Drupal servers that are running either Apache or NGINX as well. In that case, when it’s a one-to-many relationship, we call that load balancing. What NGINX will do is distribute the load more or less as equally as possible to the various Drupal nodes behind it.

But NGINX also does a lot of other different optimizations as well. The one highlighted in this particular slide is that you can have a large number of clients coming in with a large number of keep-alive connections on the front-end, but you don’t necessarily need or want to have a one-to-one mapping of keep-alive connections on the back end. NGINX will consolidate that down so you can have thousands of connections on the frontend and that gets down to hundreds of connections on the backend. By multiplexing connections on the backend here, we are able to make more efficient usage of the Drupal servers upstream.
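A minimal sketch of the backend connection reuse described above, assuming two hypothetical Drupal servers and an arbitrary keepalive pool size:

```nginx
# Server addresses and the pool size of 32 are illustrative assumptions.
upstream drupal {
    server 10.0.0.1:80;
    server 10.0.0.2:80;

    # Keep up to 32 idle connections to the backends open per worker
    # process, so they can be reused across many client requests.
    keepalive 32;
}

server {
    listen 80;

    location / {
        proxy_pass http://drupal;

        # Upstream keepalive needs HTTP/1.1 and an empty Connection
        # header (by default NGINX sends "Connection: close").
        proxy_http_version 1.1;
        proxy_set_header   Connection "";
    }
}
```

Client-side keepalive connections are handled independently, so the number of frontend connections does not dictate the number of backend connections.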

NGINX and NGINX Plus support a variety of load balancing algorithms, from basic round-robin load balancing, to more sophisticated algorithms that take into account the number of connections that currently exist on the backend servers, to even more sophisticated algorithms that take into account the response time of the application servers. (The response-time-based algorithm is available in NGINX Plus only.) For example, if one particular Drupal server is being bogged down, or is running on less-powerful hardware, NGINX will automatically route less traffic to that particular server, and more traffic to the other ones. So, NGINX automatically accommodates different amounts of load or different numbers of servers on the backend.
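To make the choice of algorithm concrete, here is a hedged sketch of three upstream groups, one per method. The group names and server addresses are hypothetical; round robin is the default when no method is named.

```nginx
# Round robin (the default): requests are distributed in turn.
upstream drupal_round_robin {
    server 10.0.0.1:80;
    server 10.0.0.2:80;
}

# Least connections: prefer the server with the fewest active connections.
upstream drupal_least_conn {
    least_conn;
    server 10.0.0.1:80;
    server 10.0.0.2:80;
}

# Least time (NGINX Plus only): prefer the server with the lowest
# average response time and the fewest active connections.
# "header" measures the time to receive the response header.
upstream drupal_least_time {
    least_time header;
    server 10.0.0.1:80;
    server 10.0.0.2:80;
}
```

A `server` block then selects a group with, for example, `proxy_pass http://drupal_least_conn;`.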

35:23 Session Persistence with NGINX Plus

Session persistence is something that you may need when you’re scaling out – scaling horizontally – and you have multiple Drupal servers. You may have user state stored on each of these servers, in which case you’ll need to make sure that the same user goes to the same server each time, at least for the duration of that session. We call that session persistence, while some people like to call it sticky sessions or session affinity.

There are multiple ways to implement session persistence. A nice way to do it is to have NGINX track an application session cookie. In this case, if NGINX sees this session cookie from a client, it will make sure to send that same client to the server that NGINX first saw that cookie come from. We call that session-learning persistence, and it’s a great way to do it.

A typical cookie people use on PHP sites is PHPSESSID. On Java sites, it’s JSESSIONID. So, NGINX can track that particular cookie if it’s present and make sure to send that same user to the server that originally sent that cookie out.

If there’s not a cookie that you can track, you can have NGINX insert its own cookie. Then when it sees that cookie, it’s going to send the client to the server that it sent it to before. You can also use sticky routes, which let you do persistence outside of cookies based on values in the HTTP header. There’s also IP hash, a very simple method which basically just uses the IP address. Clients from the same IP address, the same group of IP addresses, or same subnet are all sent to the same server. Session persistence is mostly an NGINX Plus feature, except for the IP hash method which is available within our open-source offering.
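The persistence methods above might be configured roughly as follows. This is a sketch, not the configuration from the webinar: the group names, server addresses, the cookie name `appsession`, the inserted cookie name `srv_id`, and the zone name are all placeholders. Substitute whatever session cookie your application actually sets. Sticky routes are omitted because they need additional `map` and `route=` settings.

```nginx
# NGINX Plus: session-learning persistence. NGINX records which server
# set the application's session cookie and sends later requests that
# carry the same cookie back to that server.
upstream drupal_learn {
    server 10.0.0.1:80;
    server 10.0.0.2:80;

    sticky learn
        create=$upstream_cookie_appsession   # cookie seen in responses
        lookup=$cookie_appsession            # cookie seen in requests
        zone=client_sessions:1m;             # shared memory for the mapping
}

# NGINX Plus: NGINX inserts its own cookie identifying the server.
upstream drupal_cookie {
    server 10.0.0.1:80;
    server 10.0.0.2:80;

    sticky cookie srv_id expires=1h;
}

# Open source NGINX: hash on the client IP address.
upstream drupal_ip_hash {
    ip_hash;
    server 10.0.0.1:80;
    server 10.0.0.2:80;
}
```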

The downside of IP hash is that since it’s based just on the IP address, it’s a bit naive. If we have multiple people within the same office accessing your Drupal application, they may be running off the same IP address, so they’re not going to be load balanced. They’re all going to be sent to the same server, so the load isn’t being distributed as well as it would be with the more elegant session persistence methods.

NGINX also supports session draining, and that means gracefully removing servers from the load balancing pool. If you need to do maintenance on a server, you don’t want to just rip it out while it’s in the middle of processing. You want to have it gracefully shut down what it’s doing. On a more technical level, we want it to stop taking in new connections, process all its existing connections until completion, and once that’s done, we’ll go ahead and move it off. That’s session draining, and with NGINX Plus we have some nice methods to support that.
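One way draining can be expressed in NGINX Plus is the `drain` parameter on an upstream server; this is a sketch under that assumption, with hypothetical addresses and cookie name. In recent NGINX Plus releases the parameter can be set in the configuration file, while older releases only allowed changing it through the API, so check the documentation for your version.

```nginx
# NGINX Plus only. Addresses and the cookie name are illustrative.
upstream drupal {
    server 10.0.0.1:80;

    # Draining: only requests already bound to this server by session
    # persistence are sent to it; it receives no new sessions. Once its
    # sessions have ended, it can be removed for maintenance.
    server 10.0.0.2:80 drain;

    sticky cookie srv_id expires=1h;
}
```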

38:51 SSL Offloading with NGINX

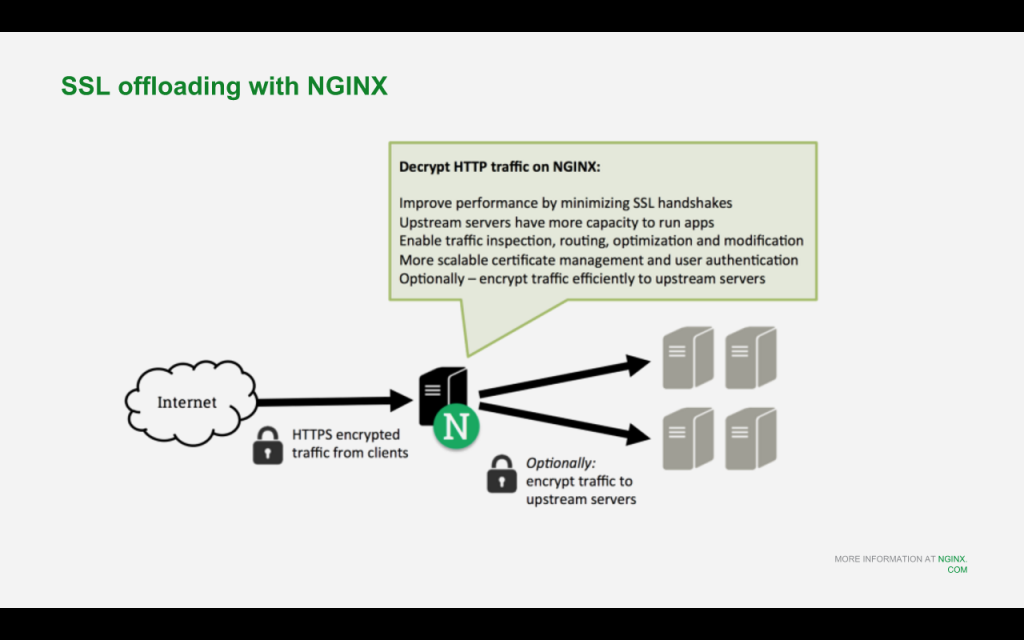

With SSL offloading, the benefits are obvious. We’re moving the computationally expensive burden of SSL processing away from the application servers, to the edge of the network. We’re placing it on the reverse proxy server, the load balancer. In this case, that’s NGINX.

Now NGINX is going to be decrypting the traffic, which gives us another benefit. Since that traffic is now decrypted, we can do stuff to it. We can do traffic inspection, routing, cookie insertion, and cookie inspection. If you wanted to do session persistence, for example, you have to decrypt the traffic first to allow NGINX to get in there and look at the cookies and look at the data. So with the traffic decrypted, NGINX can do its layer 7 work.

Then if you want extra security you can optionally encrypt the traffic when you pass it to your upstream servers. If you encrypt it back to the upstream servers, you’re losing the benefits of SSL offloading, but you’re protecting yourself against someone that has hacked into your data center, someone who has hacked into your cloud environment and has a passive probe there. You’re also protected against potential spying by governments or other entities.

Nowadays with more security threats and the amount of attacks going up, we’re seeing a lot more people opt to encrypt traffic even within their own data center. But even if you decide to encrypt that backend traffic, there’s still a benefit to decrypting traffic at NGINX so that it can do the inspections and layer 7 work.
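As a minimal sketch of SSL offloading, assuming hypothetical certificate paths, server name, and backend addresses: NGINX terminates TLS and, in this variant, passes plain HTTP to the Drupal servers.

```nginx
# Certificate paths, server name, and backend addresses are illustrative.
upstream drupal {
    server 10.0.0.1:80;
    server 10.0.0.2:80;
}

server {
    listen 443 ssl;
    server_name www.example.com;

    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;

    location / {
        # Traffic is decrypted here, so NGINX can inspect and route it.
        proxy_pass http://drupal;

        # Tell the backend what the client originally requested.
        proxy_set_header Host              $host;
        proxy_set_header X-Forwarded-Proto https;
    }
}
```

To re-encrypt traffic to the upstream servers, point `proxy_pass` at `https://drupal` and have the backends listen on an SSL port; NGINX still sees the decrypted request in between.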

40:57 HTTP/2 With NGINX

HTTP/2 was ratified in February 2015. It gives websites better performance without requiring you to re-architect or make changes on the backend, especially if you’re using it with NGINX. What NGINX does is translate traffic to and from HTTP/2. It speaks HTTP/2 on the frontend to client browsers that support it, and then on the backend it translates that to FastCGI, uWSGI, HTTP/1.1, whatever language your application servers speak now. And it does this translation work for you transparently.

What this means for the application owner is that, with NGINX, you can move to HTTP/2 and gain the performance benefits of it without refactoring your application. You’ll still be supporting clients that are using older web browsers or using older mobile devices that don’t support HTTP/2. And this all happens pretty transparently to the application owner. Moving to HTTP/2 with NGINX is a nice and easy way to boost the performance of your website without having to do a lot of work.
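A sketch of what enabling HTTP/2 on the frontend can look like, with hypothetical certificate paths and backend address. The `http2` parameter on `listen` is the syntax from the time of the webinar (NGINX 1.9.5 and later); newer releases deprecate it in favor of a separate `http2 on;` directive.

```nginx
# Certificate paths, server name, and backend address are illustrative.
server {
    # HTTP/2 for clients that support it; others fall back to HTTP/1.x
    # on the same port.
    listen 443 ssl http2;
    server_name www.example.com;

    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;

    location / {
        # The backend is unchanged: NGINX talks HTTP/1.1 to it here.
        proxy_pass         http://127.0.0.1:8080;
        proxy_http_version 1.1;
    }
}
```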

42:30 Summary

Floyd: Just to sum up some of what we’ve talked about, the Drupal world is getting more complex, and there’s a lot going on that you can look into to improve your application’s performance. There’s a lot you can look into with cloud architecture and design, information architecture. You might be using a CDN in conjunction with your site. There’s a lot to look into in the web server world, whether you go with Apache or NGINX.

Part of the fun of being at NGINX is working with a lot of really smart people, and one of the benefits of using NGINX Plus for customers is that you get access to them and get to talk with them. There are some things that are worth learning yourself. If you’re going to be doing something often, you should learn it and have that expertise in-house. But if you’re going to be tackling something once, it’s often better to get consulting or outside help, and you can do that easily with NGINX Plus.

When you talk about using NGINX, it’s good to be aware that many people use NGINX as a web server, as they get a lot of advantages out of that. But especially with Drupal, some of the best performance benefits come from using NGINX as a reverse proxy server and when you gain the architectural flexibility inherent with that. So, those are two different use cases, and when you talk to someone about NGINX, it’s important to know which use case each of you is talking about.

The other thing that can be confusing to talk about is caching. In the world of website design and DevOps and everything as a whole, when people say caching they’re often referring to caching static files. This is like the caching that takes place in your browser or a CDN. In the Drupal world, on the other hand, caching more often means microcaching, that is, caching of a page for just a second or a few seconds. Because if you try to produce that page 100 or 200 times a second, your site’s going to go down. So, microcaching for these peak periods gives a lot of benefit and is what is usually referred to when talking about caching in a Drupal context.

To reiterate: The advantage of microcaching with NGINX, or as the Drupal world might call it, caching with NGINX, is that the caching is taking place off of the server that’s running Drupal. So, that server is now completely freed up, and all it has to do is generate fresh pages when fresh pages are needed. That might even be every second, but once a second is a lot less than tens or hundreds of times per second, which could be the amount of requests you get when you’re really busy.

It may not seem like a big deal, but being clear on the vocabulary is important when talking to other people. Even if you know this stuff cold, it’s still worth being aware of how people use these terms differently so that you can communicate better and faster. A lot of the optimizations we’ve been talking about, like using multiple application servers, are going to require teams. And so, communicating these concepts clearly is an important part of getting everyone up to speed.

To sum up, as Drupal is pushing into doing more and more for very complex and very high-performance sites, NGINX and NGINX Plus offer a lot of effective ways to solve performance problems that may arise.

Adding to what Faisal said about SSL/TLS and HTTP/2, we’re finding that having a secure site is becoming an expectation for more users. What they see is, they’re spending a lot of time on the big sites, and those are secure. And that sets an expectation in the user’s mind. So, it may not seem like a big deal to secure your content or your site for instance, but it actually is becoming a checkbox for people, and they get nervous about logging into a site if they don’t see the security bar there. It’s a subtle factor, but it’s increasing, and NGINX – especially with HTTP/2 – makes it much easier to implement these more secure protocols without your site suffering a performance penalty.

We have a lot of details and information about security and HTTP/2 in particular. We have a blog post, 7 Tips to Improve HTTP/2 Performance, which talks a lot about HTTP/2. And then we also have webinars, speeches from nginx.conf, and a lot more.

High performance is a big area. There’s a lot of stuff to learn and we know it’s hard to make a Drupal site high-performance. So, we’ve got a start here on that for you now.

The post Maximizing Drupal 8 Performance with NGINX – Part II: Caching and Load Balancing appeared first on NGINX.

