Announcing NGINX Ingress Controller for Kubernetes Release 1.5.0

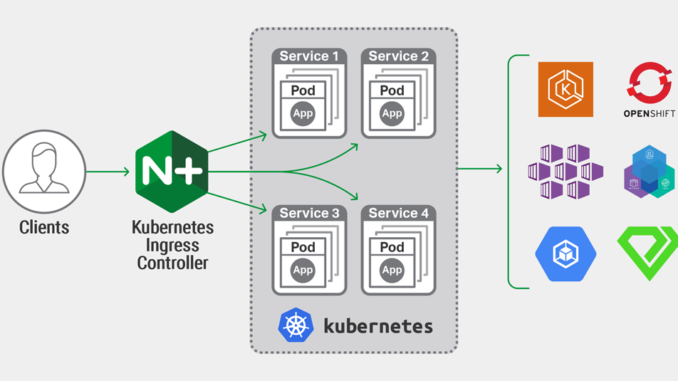

We are pleased to announce release 1.5.0 of the NGINX Ingress Controller for Kubernetes. This represents a milestone in the development of our supported solution for Ingress load balancing on Kubernetes platforms, including Amazon Elastic Container Service for Kubernetes (EKS), the Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), Red Hat OpenShift, IBM Cloud Private, Diamanti, and others.

Release 1.5.0 includes:

- A new configuration approach using NGINX custom resources to easily define ingress policies

- Additional metrics, provided by a streamlined Prometheus exporter

- Simplified configuration of complex TLS deployments

- Support for load balancing traffic to external services, using ExternalName services

- A dedicated Helm chart repository

The complete changelog for release 1.5.0, including bug fixes, improvements, and changes, is available in our GitHub repo.

What Is the NGINX Ingress Controller for Kubernetes?

The NGINX Ingress Controller for Kubernetes is a daemon that runs alongside NGINX Open Source or NGINX Plus instances in a Kubernetes environment. The daemon monitors Ingress resources and NGINX custom resources to discover requests for services that require ingress load balancing. The daemon then automatically configures NGINX or NGINX Plus to route and load balance traffic to these services.

Multiple NGINX Ingress controller implementations are available. The official NGINX implementation is high‑performance, production‑ready, and suitable for long‑term deployment. We focus on providing stability across releases, with features that can be deployed at enterprise scale. We provide full technical support to NGINX Plus subscribers at no additional cost, and NGINX Open Source users benefit from our focus on stability and supportability.

A New Configuration Approach in Release 1.5.0

The Ingress resource was introduced in Kubernetes 1.1. This API object provides a way to model a simple load‑balancing configuration, specify TLS and HTTP termination parameters, and configure routing to Kubernetes services based on a request’s Host header and path.

The Limitations of Ingress Resources

While working with Ingress resource‑based configurations, we encountered limitations to this simple approach. We extended the Ingress resource using annotations and ConfigMaps to address additional NGINX features, even letting users embed NGINX configuration verbatim using snippets in their Ingress resources. This allowed the NGINX Ingress Controller to address many more use cases, including request rewriting, authentication, rate limiting, and TCP and UDP load balancing.

Extending the Ingress resource in this way has its own limitations. Annotations and ConfigMaps are not part of the Ingress resource specification. There is no type‑safety (validation of configuration), and it’s challenging to use the same annotation feature with different parameters for different services.

The net result is that configuring the NGINX Ingress Controller is harder than we want it to be, and only a small subset of NGINX use cases can be reliably supported.

Furthermore, large‑scale enterprise deployments of Kubernetes require RBAC‑based, self‑service means of configuration. The standard Ingress resources do not provide sufficiently fine‑grained separation of concerns. We’ve had good success addressing this with our mergeable Ingress resources and we want to build on that experience in our new configuration approach.

A New Approach

In release 1.5.0, we are previewing a new approach to configuring ingress policies. We take advantage of how the Kubernetes API can be extended using custom resources. These resources look and feel like built‑in Kubernetes resources and offer a similar user experience. Using custom resources, we’re experimenting with a new configuration approach with the following goals:

- Support a wide range of real, NGINX‑powered use cases

- Avoid relying on annotations; all features must be part of the spec

- Follow the Kubernetes API style so that users get an experience that is the same as, or close to, using the Ingress resource

- Support RBAC and self‑service use cases in a scalable and predictable manner

The configuration approach in release 1.5.0 – using custom resources – is a preview of our future direction. As we develop the next release (1.6.0), we welcome feedback, criticism, and suggestions for improvement to this approach. Once we’re satisfied we have a solid configuration architecture, we’ll lock it down and regard it as stable and fully production‑ready.

We will also continue to support the existing NGINX Ingress resource in parallel with custom resources and have no plans to deprecate it. We’re very aware that many users of our NGINX Ingress Controller want to continue using the extensive third‑party and user‑created tooling available for managing Ingress resources. We will not, however, introduce any major new features to the Ingress resource based on annotations.

NGINX Ingress Controller 1.5.0 Features in Detail

NGINX Custom Resources

You can configure the NGINX Ingress Controller using two new custom resources:

- The VirtualServer resource is similar in concept to the Ingress resource. It defines the listening virtual server, including its TLS parameters. It also defines the upstreams used by these servers and the routes that select these upstreams. It can also delegate upstreams and routes to a VirtualServerRoute resource.

- The VirtualServerRoute resource is a subset of the VirtualServer resource, containing just the upstream and route definitions. It can reside in a different namespace from the VirtualServer that references it.

For full technical details, see the documentation in our GitHub repo.

Delegated, Self-Service Configuration

In a simple configuration, you use the VirtualServer resource to define the entire load‑balancing configuration.

When you operate in a multi‑role or self‑service environment, you can use the delegation capabilities of the VirtualServerRoute resource. For example, an Admin RBAC user can define the external‑facing VirtualServer, and then delegate named routes to other roles in a secure and managed RBAC fashion, giving the Application Team role permission to configure upstream services, operate blue‑green and canary deployments, and apply complex routes.

Here’s an example of a simple configuration that listens on cafe.example.com and forwards requests matching /tea to the tea‑svc Kubernetes service.

VirtualServer

    apiVersion: k8s.nginx.org/v1alpha1
    kind: VirtualServer
    metadata:
      name: cafe
      namespace: cafe-ns
    spec:
      host: cafe.example.com
      upstreams:
      - name: tea
        service: tea-svc
        port: 80
      routes:
      # Requests for /tea go straight to the tea upstream
      - path: /tea
        upstream: tea
      # Requests for /coffee are delegated to a VirtualServerRoute
      - path: /coffee
        route: coffee-ns/coffee

Observe that the /coffee path is delegated to a separate VirtualServerRoute named coffee, located in the coffee‑ns namespace.

VirtualServerRoute

    apiVersion: k8s.nginx.org/v1alpha1
    kind: VirtualServerRoute
    metadata:
      name: coffee
      namespace: coffee-ns
    spec:
      # Must match the host of the VirtualServer that delegates to this resource
      host: cafe.example.com
      upstreams:
      - name: latte
        service: latte-svc
        port: 80
      - name: espresso
        service: espresso-svc
        port: 80
      subroutes:
      - path: /coffee/latte
        upstream: latte
      - path: /coffee/espresso
        upstream: espresso

A user with RBAC access to the coffee‑ns namespace can define subroutes within the path http://cafe.example.com/coffee/, but cannot edit VirtualServer parameters such as TLS, or any other part of the URL space.
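One way to grant that access is a namespaced Role bound to the application team. The following is a minimal sketch, not a recommended policy: it assumes the VirtualServerRoute custom resource is registered under the plural name virtualserverroutes in the k8s.nginx.org API group, and the Role, RoleBinding, and group names (vsroute-editor, coffee-team) are hypothetical.

```yaml
# Role limited to VirtualServerRoute objects in coffee-ns.
# Assumption: the CRD plural is "virtualserverroutes".
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: vsroute-editor          # hypothetical name
  namespace: coffee-ns
rules:
- apiGroups: ["k8s.nginx.org"]
  resources: ["virtualserverroutes"]
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
# Bind the Role to the application team's group.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: coffee-team-vsroute-editor   # hypothetical name
  namespace: coffee-ns
subjects:
- kind: Group
  name: coffee-team                  # hypothetical group
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: vsroute-editor
  apiGroup: rbac.authorization.k8s.io
```

Because the Role exists only in coffee-ns and names only VirtualServerRoute resources, members of the group cannot modify the VirtualServer in cafe-ns.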

More Sophisticated Routing with Custom Resources

A common challenge with the Ingress resource is the limited flexibility it provides to match requests and select an upstream service. In the NGINX custom resources, we provide richer ways to route requests to an upstream.

The routes (in the VirtualServer resource) and subroutes (in the VirtualServerRoute resource) contain a list of path specifications. Requests that match a path are then handled by that path’s routing policy:

- upstream: service-name – All requests that match the path are forwarded to the named upstream.

- route: namespace/vsroute – All requests that match the path are delegated to the named VirtualServerRoute. A route policy can only be used in a VirtualServer.

- splits: weighted-upstream-list – Requests are distributed across several upstreams according to the weights, in a similar fashion to NGINX’s split_clients module. This is useful for simple A/B testing use cases.

- rules: rules-list – For sophisticated content‑based routing, the rules policy contains a list of the conditions (the values you want to select on) and a list of matches (the values you want to match in order to use the matched upstream).
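As an illustration of the splits policy, the sketch below sends roughly 90% of /tea requests to one upstream and 10% to another. The weight and upstream field names reflect the v1alpha1 schema as best understood here and should be checked against the documentation in the GitHub repo; the tea-v1 and tea-v2 upstreams and their services are hypothetical.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: VirtualServer
metadata:
  name: cafe
  namespace: cafe-ns
spec:
  host: cafe.example.com
  upstreams:
  - name: tea-v1                # hypothetical upstream
    service: tea-v1-svc         # hypothetical service
    port: 80
  - name: tea-v2                # hypothetical upstream
    service: tea-v2-svc         # hypothetical service
    port: 80
  routes:
  - path: /tea
    # Assumed field names: each entry pairs a weight with an upstream.
    # The weights here are percentages that sum to 100.
    splits:
    - weight: 90
      upstream: tea-v1
    - weight: 10
      upstream: tea-v2
```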

The GitHub‑hosted documentation describes these routing policies in more detail. The routing policies make it possible to meet use cases such as:

- API versioning – Route requests for /api/v1 and /api/v2 to different upstream services

- A/B testing – Select traffic based on a statistical split or request parameters, and then distribute it across different upstream services

- Debug routing – Route traffic to the current production upstream service, but route requests with particular parameters (?debug=1), cookies, source IP addresses, or headers to a debug upstream service

- Blue‑green deployments – Automate the A/B testing and debug routing use cases to deploy a canary (green) service instance, route a subset of traffic to it, monitor its behavior, and then switch traffic over from blue (production) to green (new)
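The debug routing case might be expressed with a rules policy along the following lines. This is a sketch under assumptions: the conditions, matches, values, and defaultUpstream field names, and the argument condition type, follow the v1alpha1 schema as best understood here and should be verified against the GitHub‑hosted documentation. The tea-debug upstream and its service are hypothetical.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: VirtualServer
metadata:
  name: cafe
  namespace: cafe-ns
spec:
  host: cafe.example.com
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  - name: tea-debug             # hypothetical upstream
    service: tea-debug-svc      # hypothetical service
    port: 80
  routes:
  - path: /tea
    rules:
      # Assumed: select on the "debug" query-string argument
      conditions:
      - argument: debug
      # Assumed: requests with ?debug=1 go to the debug upstream
      matches:
      - values:
        - "1"
        upstream: tea-debug
      # Assumed: everything else stays on production
      defaultUpstream: tea
```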

Future Enhancements

The new NGINX custom resources are a preview of our future configuration structure, and will be foundational for new features as we add them. Rate limiting, authentication, health checks, session persistence, and other features are all candidates for future implementation.

If you would like to leave feedback, please open an issue in our GitHub repo.

Please note that we will continue to support the Kubernetes Ingress resource, but do not plan to introduce any major new features based on annotations.

Improved Prometheus Support

New Metrics

Release 1.5.0 provides additional metrics that instrument how the NGINX Ingress Controller configures NGINX:

- The number of successful and unsuccessful NGINX reloads

- The status of the last reload, and the time elapsed since the reload

- The number of Ingress resources which make up the NGINX configuration

These metrics can help reveal problems in the NGINX Ingress Controller’s operation. For example, unsuccessful reloads indicate an invalid NGINX configuration, and excessively frequent reloads can degrade NGINX performance.

Simplified Installation

Previously, Prometheus metrics were exposed by an exporter process that ran as a sidecar container in the NGINX Ingress Controller pod. Starting in release 1.5.0, this functionality is fully integrated into the NGINX Ingress Controller process, so the sidecar container is no longer needed. This simplifies installation and reduces the resource utilization of the NGINX Ingress Controller.

To enable Prometheus metrics, start the NGINX Ingress Controller with the new -enable-prometheus-metrics command‑line flag.
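In a typical installation the flag is passed through the container arguments in the pod template. The fragment below is a sketch only: the container name and image tag are placeholders, and the rest of the Deployment or DaemonSet manifest is omitted.

```yaml
# Fragment of the Ingress Controller pod template (not a complete manifest)
spec:
  containers:
  - name: nginx-ingress                 # placeholder container name
    image: nginx/nginx-ingress:1.5.0    # placeholder image reference
    args:
    # Flag named in this release; other arguments omitted
    - -enable-prometheus-metrics
```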

Simpler Sharing of Wildcard Certificates

If you host multiple TLS services (foo.example.com, bar.example.com) using a wildcard certificate (*.example.com), release 1.5.0 makes it easier and more secure to publish that certificate and secret.

Previously, you had to name the wildcard certificate secret in each Ingress resource, and make it available to each namespace that required it. In some environments, that was inconvenient or even regarded as a security risk.

In release 1.5.0, you can optionally define a default TLS secret at the command line when the NGINX Ingress Controller starts. Any Ingress resource that requires a TLS certificate but does not specify a TLS secret uses the default TLS secret. This feature is useful in situations where multiple Ingress resources in different namespaces need to use the same TLS secret.

This simplifies configuration and reduces the exposure of sensitive data in a multi‑user, self‑service environment. For more information, see the example in our GitHub repo.
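With a default TLS secret in place, an Ingress resource can request TLS for a host without naming a secret. The sketch below shows what such a resource might look like; the resource, namespace, and service names are hypothetical, and the command‑line flag that sets the default secret is not shown (we believe it is -wildcard-tls-secret, but confirm this against the command‑line reference in the GitHub repo).

```yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: foo-ingress          # hypothetical name
  namespace: foo-ns          # hypothetical namespace
spec:
  tls:
  # No secretName here: the controller is expected to fall back
  # to the default TLS secret defined at startup
  - hosts:
    - foo.example.com
  rules:
  - host: foo.example.com
    http:
      paths:
      - path: /
        backend:
          serviceName: foo-svc   # hypothetical service
          servicePort: 80
```

The wildcard secret then needs to exist only in the namespace named at the command line, rather than being copied into foo-ns.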

Support for ExternalName Services

Release 1.5.0 of the NGINX Plus Ingress Controller can route requests to services of the type ExternalName. An ExternalName service is defined by a DNS name which typically resolves to IP addresses that are external to the cluster. This enables the NGINX Plus Ingress Controller to load balance requests to destinations outside of the cluster.

Support for ExternalName services makes it easier to migrate your services into a Kubernetes cluster. You can run your HTTP load balancers (NGINX Plus) within Kubernetes, then route traffic both to the services in the cluster and to services that have not yet been moved to the cluster.

This feature is available only when the NGINX Ingress Controller is used with NGINX Plus, because it relies on the dynamic DNS resolution feature of NGINX Plus. For more information, see the example in our GitHub repo.
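For reference, an ExternalName service is a standard Kubernetes Service with no selector or pods behind it. In this minimal sketch the service name, namespace, and DNS name are hypothetical.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: legacy-app-svc        # hypothetical name
  namespace: cafe-ns
spec:
  type: ExternalName
  # DNS name that resolves to addresses outside the cluster (hypothetical)
  externalName: legacy-app.example.net
```

An Ingress resource would then refer to legacy-app-svc by name, as it would for an in‑cluster service; the example in the GitHub repo covers any additional controller configuration that is required.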

Helm Chart Availability via Helm Repo

Helm is becoming the preferred way to package applications on Kubernetes. Release 1.5.0 of the NGINX Ingress Controller is available via our Helm repo.

Installing the NGINX Ingress Controller via Helm is as simple as the following:

    $ helm repo add nginx-stable https://helm.nginx.com/stable
    $ helm repo update
    $ helm install nginx-stable/nginx-ingress --name release-name

For more information, please refer to the Helm Chart documentation for NGINX Ingress Controller, also found on Helm Hub.

To try out the NGINX Ingress Controller for Kubernetes with NGINX Plus, start your free 30-day trial of NGINX Plus today or contact us to discuss your use cases.

The post Announcing NGINX Ingress Controller for Kubernetes Release 1.5.0 appeared first on NGINX.
