API Connectivity Manager Helps Dev and Ops Work Better Together

Cloud‑native applications are composed of dozens, hundreds, or even thousands of APIs connecting microservices together. Together, these services and APIs deliver the resiliency, scalability, and flexibility that are at the heart of cloud‑native applications.

Today, these underlying APIs and microservices are often built by globally distributed teams, which need to operate with a degree of autonomy and independence to deliver capabilities to market in a timely fashion.

At the same time, Platform Ops teams are responsible for ensuring the overall reliability and security of the enterprise’s apps and infrastructure, including its underlying APIs. They need visibility into API traffic and the ability to set global guardrails to ensure uniform security and compliance – all while providing an excellent API developer experience.

While the interests of these two groups can be in conflict, we don’t believe that’s inevitable. We built API Connectivity Manager as part of F5 NGINX Management Suite so that Platform Ops teams can keep things safe and running smoothly while API developers build and release new capabilities with ease.

Creating an Architecture to Support API Connectivity

As a next‑generation management plane, API Connectivity Manager is built to realize and extend the power of NGINX Plus as an API gateway. If you have used NGINX in the past, you are familiar with NGINX’s scalability, reliability, flexibility, and performance as an API gateway.

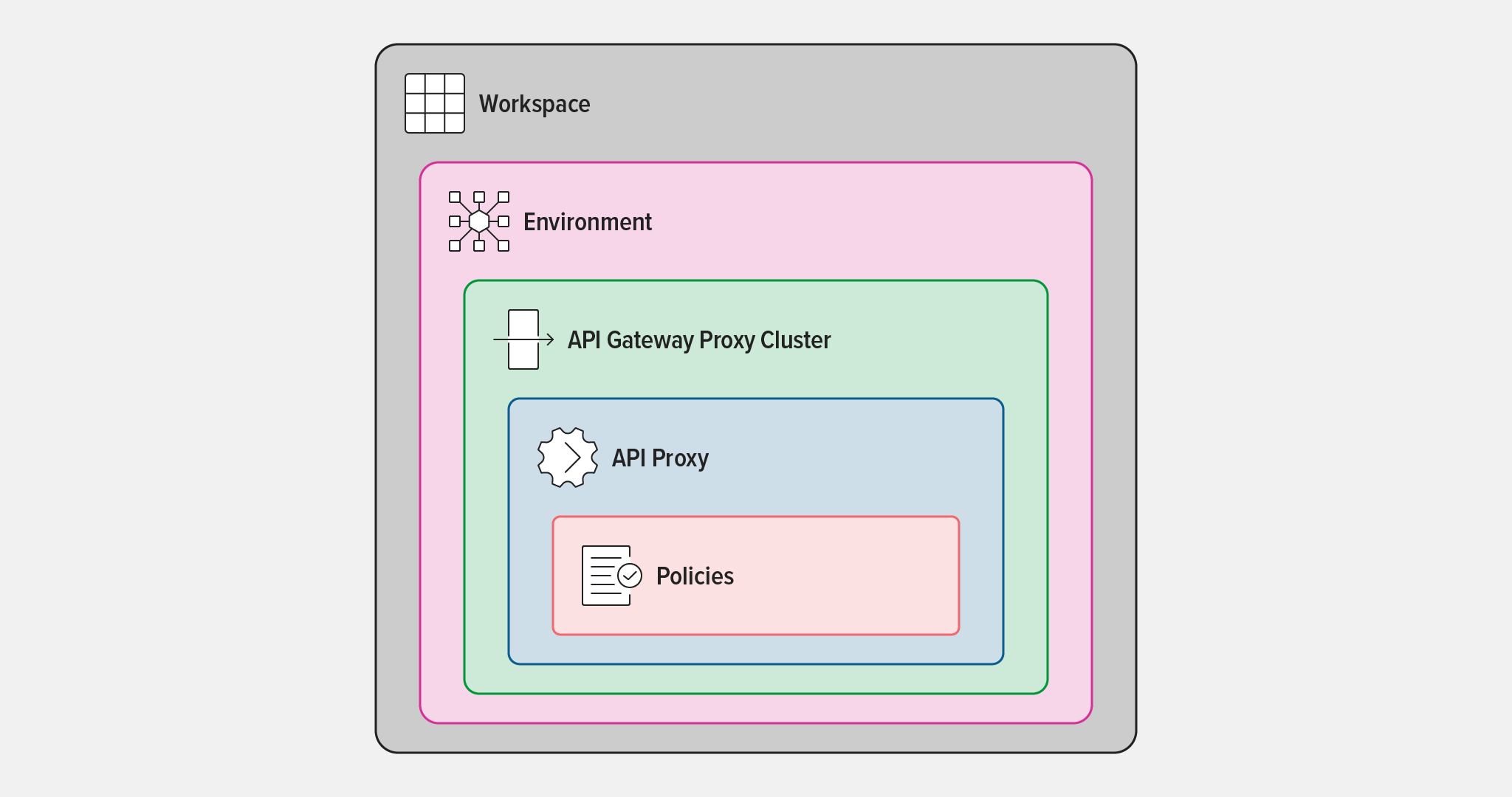

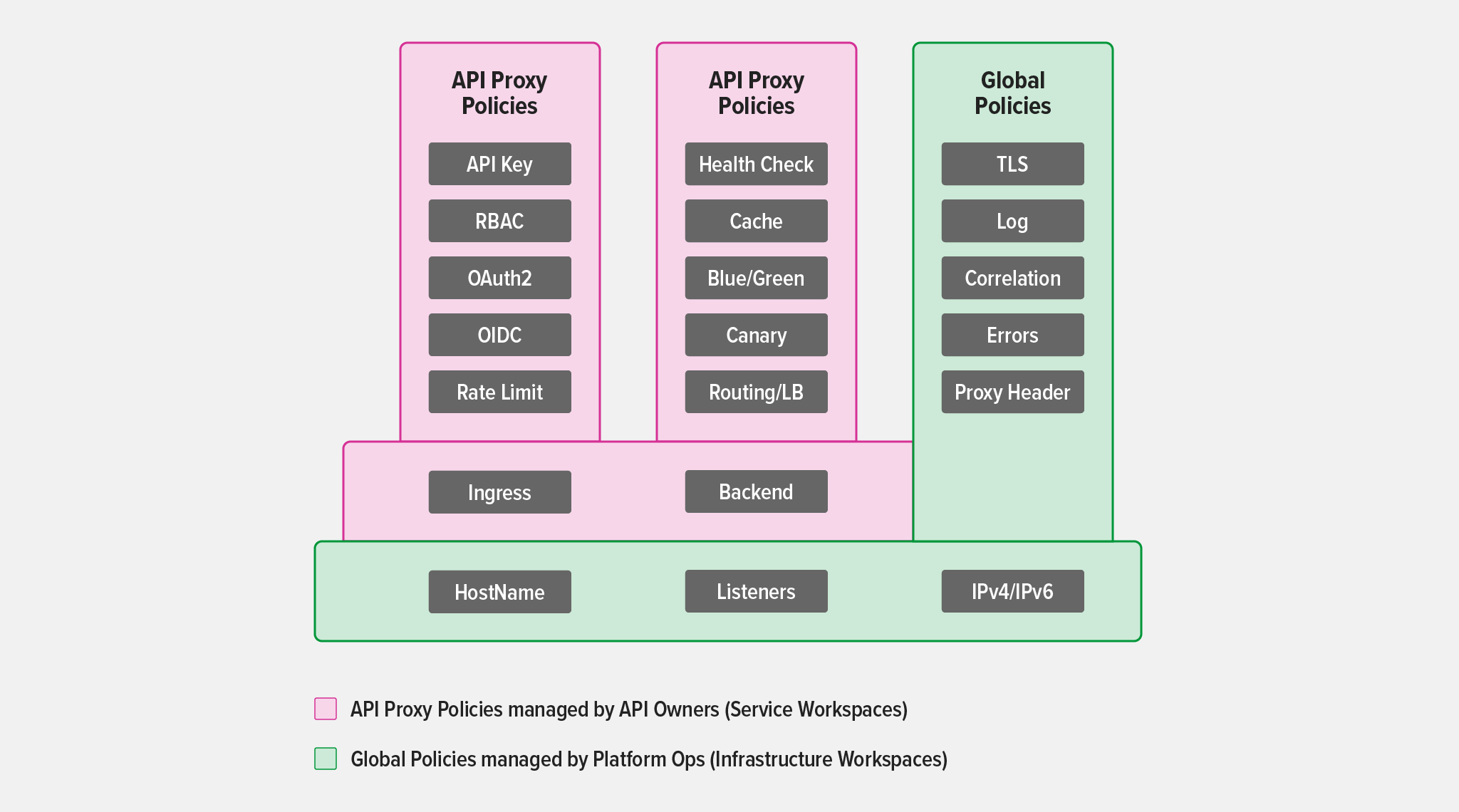

As we designed API Connectivity Manager, we developed a new architecture to enable Platform Ops teams and developers to better work together. It uses the following standard industry terms for its components (some of which differ from the names familiar to experienced NGINX users):

- Workspace – An isolated collection of infrastructure for the dedicated management and use of a single business unit or team; usually includes multiple Environments

- Environment – A logical collection of NGINX Plus instances (clusters) acting as API gateways or developer portals for a specific phase of the application life cycle, such as development, test, or production

- API Gateway Proxy Cluster – A logical representation of the NGINX API gateway that groups NGINX Plus instances and synchronizes the state between them

- API Proxy – A representation of a published instance of an API, including its routing, versioning, and other policies

- Policies – A global or local abstraction for defining specific functions of the API proxy like traffic resiliency, security, or quality of service

The following diagram illustrates how the components are nested within a Workspace:

This logical hierarchy enables a variety of important use cases that support enterprise‑wide API connectivity. For example, a Workspace can incorporate multiple types of infrastructure (such as public cloud and on premises), giving teams access to both and giving infrastructure owners visibility into API traffic across both.
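The nesting described above can be sketched as a small data model. This is only an illustration, not the API Connectivity Manager object model or API: it assumes the components nest in the order they are listed (Workspace, Environment, API Gateway Proxy Cluster, API Proxy), and every class, field, and sample name here is hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ApiProxy:
    """A published instance of an API with its version, route, and policies."""
    name: str
    version: str
    base_path: str
    policies: dict[str, dict] = field(default_factory=dict)


@dataclass
class ProxyCluster:
    """A logical group of NGINX Plus instances acting as one API gateway."""
    name: str
    instance_hostnames: list[str] = field(default_factory=list)
    proxies: list[ApiProxy] = field(default_factory=list)


@dataclass
class Environment:
    """Clusters serving one lifecycle phase, such as development or production."""
    name: str
    clusters: list[ProxyCluster] = field(default_factory=list)


@dataclass
class Workspace:
    """Isolated infrastructure for a single business unit or team."""
    name: str
    environments: list[Environment] = field(default_factory=list)

    def proxy_paths(self) -> list[str]:
        """Return 'workspace/environment/cluster/proxy' for every API proxy."""
        return [
            f"{self.name}/{env.name}/{cluster.name}/{proxy.name}"
            for env in self.environments
            for cluster in env.clusters
            for proxy in cluster.proxies
        ]


# Hypothetical example: one team, one environment, one two-node cluster.
payments = Workspace(
    name="payments",
    environments=[
        Environment(
            name="prod",
            clusters=[
                ProxyCluster(
                    name="gw-cluster",
                    instance_hostnames=["gw-1", "gw-2"],
                    proxies=[ApiProxy("invoices", "v1", "/invoices")],
                )
            ],
        )
    ],
)
print(payments.proxy_paths())
```

Walking the tree from the Workspace down is what lets an infrastructure owner enumerate every published API regardless of where its gateway cluster runs.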

We’ll look at the architectural components in more depth in future posts. For now, let’s look at how API Connectivity Manager serves the different personas that contribute to API development and delivery.

API Connectivity Manager for Platform Ops

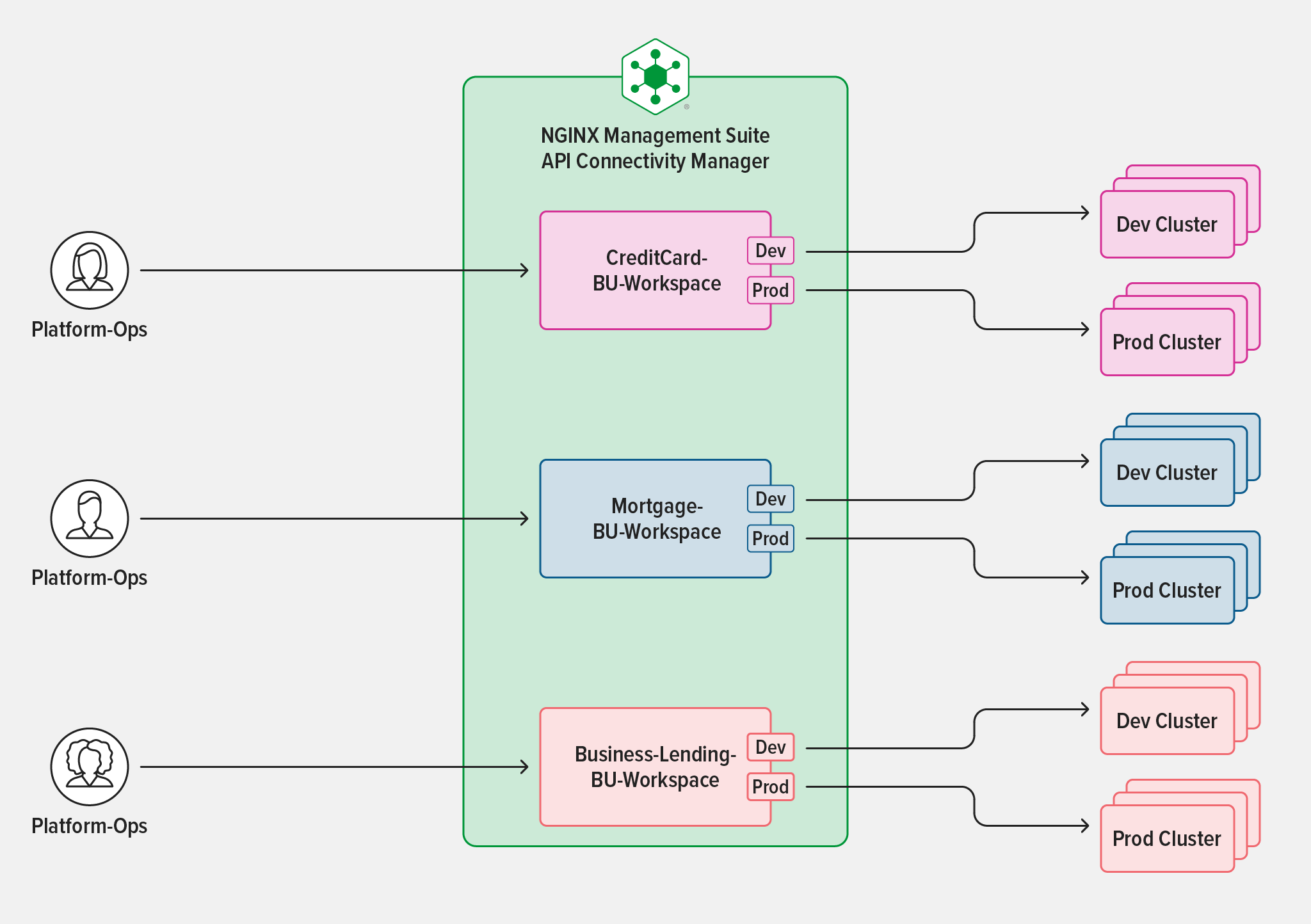

The Platform Ops team is responsible for building and managing the infrastructure lifecycle for each organization. They provide the platform on which developers build applications and services that serve customers and partners. Development teams are often decentralized, working across multiple environments and reporting to different lines of business. Meeting the needs of these dispersed groups and environments while maintaining enterprise‑wide standards is one of the biggest challenges Platform Ops teams face today.

API Connectivity Manager segregates teams and their operations by using Workspaces as logical boundaries. It also enables Platform Ops teams to manage the infrastructure lifecycle without interfering with the teams building and deploying APIs. API Connectivity Manager comes with a built‑in set of default global policies that provide basic security and configuration for NGINX Plus API gateways and developer portals. Platform Ops teams can then configure supplemental global policies, for example to require mTLS, configure log formats, or standardize proxy headers.

The global policies bring uniformity and consistency to the APIs deployed in a shared API gateway cluster. API Connectivity Manager also gives organizations the option of running decentralized data‑plane clusters to physically separate where each team or line of business deploys its APIs.

In a subsequent blog post, we will explore how Platform Ops teams can use API Connectivity Manager to ensure API security and governance while helping API developers succeed.

API Connectivity Manager for Developers

Teams from different lines of business own and operate their own sets of APIs. They need control over their API products to deliver their applications and services to market on time, which means they can’t afford to wait for other teams’ approval to use shared infrastructure. At the same time, there need to be “guardrails” in place to prevent teams from stepping on each other’s toes.

Like Platform Ops teams, developers as API owners get their own Workspaces, which segregate their APIs from those of other teams. API Connectivity Manager provides policies at the API Proxy level so that API owners can configure service‑level settings like rate limiting and additional security requirements.
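To make the relationship between the two policy levels concrete, here is a minimal sketch of how global policies set by Platform Ops and proxy‑level policies set by API owners might combine. It rests on an assumption the post does not state: that a global policy takes precedence over a proxy‑level policy of the same name. The function, policy names, and settings are all hypothetical and are not part of API Connectivity Manager.

```python
def effective_policies(global_policies: dict, proxy_policies: dict) -> dict:
    """Combine global guardrails with an API owner's proxy-level policies.

    Assumption for illustration only: when both levels define a policy
    with the same name, the global one wins.
    """
    merged = dict(proxy_policies)   # start with the API owner's settings
    merged.update(global_policies)  # global guardrails overwrite any clashes
    return merged


# Hypothetical guardrails configured once by Platform Ops.
global_policies = {
    "mtls": {"required": True},
    "log-format": {"type": "json"},
}

# Hypothetical service-level settings chosen by an API owner.
proxy_policies = {
    "rate-limit": {"requests_per_second": 10},
    "mtls": {"required": False},  # ignored under the assumption above
}

result = effective_policies(global_policies, proxy_policies)
print(result)
```

Under this assumption the API owner keeps control of service‑level settings such as rate limiting, while the mTLS requirement set by Platform Ops stays in force.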

In a subsequent blog post, we will explore how developers can use API Connectivity Manager to simplify API lifecycle management.

Get Started

Start a 30-day free trial of NGINX Management Suite, which includes API Connectivity Manager and Instance Manager.

The post API Connectivity Manager Helps Dev and Ops Work Better Together appeared first on NGINX.
