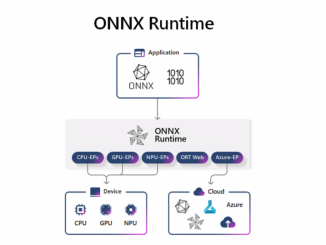

Unlocking the end-to-end Windows AI developer experience using ONNX Runtime and Olive

At the Microsoft Build 2023 conference, Panos Panay announced ONNX Runtime as the gateway to Windows AI. ONNX Runtime gives third-party developers the same tools we use internally to run AI models on Windows and other devices across CPU, GPU, NPU, or in a hybrid setup with Azure. We are also introducing Olive, a toolchain we created to ease the burden of optimizing models for the wide variety of Windows and other devices. Together, ONNX Runtime and Olive help you get your AI models deployed into apps faster.

What is ONNX Runtime?

ONNX Runtime is a cross-platform, cross-IHV (independent hardware vendor) inferencing engine. It lets developers run inference across platforms (e.g. iOS, Windows, Android, Linux), across client and cloud devices (CPU, GPU, NPU), or in a hybrid mode split between client and cloud (Azure EP). EPs [ more… ]
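To make the "one engine, many devices" idea concrete, here is a minimal Python sketch of choosing execution providers (EPs) for an ONNX Runtime session. The preference order, the helper names `pick_providers` and `create_session`, and the model path are assumptions made for illustration and do not come from the announcement. `onnxruntime.get_available_providers()` and the `providers` argument of `onnxruntime.InferenceSession` are part of the ONNX Runtime Python API.

```python
from typing import List, Sequence

# Illustrative preference order (an assumption, not a recommendation from
# the article): NPU first, then GPU providers, then CPU as the fallback.
PREFERRED_PROVIDERS = [
    "QNNExecutionProvider",   # NPU on supported Qualcomm hardware
    "DmlExecutionProvider",   # DirectML (GPU on Windows)
    "CUDAExecutionProvider",  # NVIDIA GPU
    "CPUExecutionProvider",   # CPU fallback
]


def pick_providers(
    available: Sequence[str],
    preferred: Sequence[str] = PREFERRED_PROVIDERS,
) -> List[str]:
    """Return the preferred providers that are available, in priority order.

    The CPU provider is always kept as the last entry so the session has
    a fallback.
    """
    chosen = [p for p in preferred if p in available and p != "CPUExecutionProvider"]
    chosen.append("CPUExecutionProvider")
    return chosen


def create_session(model_path: str):
    """Create an inference session on the best available hardware.

    Hypothetical helper: requires the onnxruntime package and a real
    .onnx model file at model_path.
    """
    import onnxruntime as ort  # imported lazily so the helper above has no dependency

    providers = pick_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

With this in place, something like `create_session("model.onnx")` (a placeholder path) would run the same model on whichever supported device the machine offers, without changes to the calling code.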