Automate TCP Load Balancing to On-Premises Kubernetes Services with NGINX

You are a modern app developer. You use a collection of open source and maybe some commercial tools to write, test, deploy, and manage new apps and containers. You’ve chosen Kubernetes to run these containers and pods in development, test, staging, and production environments. You’ve bought into the architectures and concepts of microservices, the Cloud Native Computing Foundation, and other modern industry standards.

On this journey, you’ve discovered that Kubernetes is indeed powerful. But you’ve probably also been surprised at how difficult, inflexible, and frustrating it can be. Implementing and coordinating changes and updates to routers, firewalls, load balancers and other network devices can become overwhelming – especially in your own data center! It’s enough to bring a developer to tears.

How you handle these challenges has a lot to do with where and how you run Kubernetes (as a managed service or on premises). This article addresses TCP load balancing, a key area where deployment choices impact ease of use.

TCP Load Balancing with Managed Kubernetes (a.k.a. the Easy Option)

If you use a managed Kubernetes service from a public cloud provider, much of the tedious networking is handled for you. With just one command (kubectl apply -f loadbalancer.yaml), the Service type LoadBalancer gives you a public IP address, a DNS record, and a TCP load balancer. For example, you could configure Amazon Elastic Load Balancing to distribute traffic to pods running NGINX Ingress Controller and, with this one command, stop worrying about what happens when the backends change. It’s so easy, we bet you take it for granted!
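To make that one command concrete, here is a minimal sketch of what a file like loadbalancer.yaml might describe. The Service name, selector label, and port numbers are assumptions chosen for illustration, not values from a real deployment. The manifest is built as a Python dict and printed as JSON, which kubectl accepts alongside YAML.

```python
import json


def loadbalancer_service(name, selector, port, target_port):
    """Build a minimal Kubernetes Service manifest of type LoadBalancer."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # On a managed cloud, this type asks the provider to provision
            # an external load balancer and assign it an external IP.
            "type": "LoadBalancer",
            "selector": selector,
            "ports": [
                {
                    "name": "https",
                    "protocol": "TCP",
                    "port": port,
                    "targetPort": target_port,
                }
            ],
        },
    }


# Hypothetical name and label; adjust to match your own Ingress Controller pods.
manifest = loadbalancer_service("nginx-ingress", {"app": "nginx-ingress"}, 443, 443)
print(json.dumps(manifest, indent=2))
```

Saving the printed output to a file and passing it to kubectl apply -f would create the Service. In a managed environment, the cloud provider then fills in the external address.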

TCP Load Balancing with On-Premises Kubernetes (a.k.a. the Hard Option)

With on-premises clusters, it’s a totally different scenario. You or your networking peers must provide the networking pieces. You might wonder, “Why is getting users to my Kubernetes apps so difficult?” The answer is simple but a bit shocking: The Service type LoadBalancer, the front door to your cluster, doesn’t actually exist on premises. Kubernetes defines the type but leaves the load balancer itself to the infrastructure provider, and in your own data center that provider is you.

To expose your apps and Services outside the cluster, your network team probably requires tickets, approvals, procedures, and perhaps even security reviews – all before they reconfigure their equipment. Or you might need to do everything yourself, slowing the pace of application delivery to a crawl. Even worse, you dare not make changes to any Kubernetes Services, for if the NodePort changes, the traffic could get blocked! And we all know how much users like getting 500 errors. Your boss probably likes it even less.

A Better Solution for On-Premises TCP Load Balancing: NGINX Loadbalancer for Kubernetes

You can turn the “hard option” into the “easy option” with our new project: NGINX Loadbalancer for Kubernetes. This free project is a Kubernetes controller that watches NGINX Ingress Controller and automatically updates an external NGINX Plus instance configured for load balancing. Its design is deliberately straightforward, so it’s simple to install and operate. With this solution in place, you can implement TCP load balancing in on-premises environments, ensuring new apps and services are immediately detected and available for traffic – with no manual reconfiguration.

Architecture and Flow

NGINX Loadbalancer for Kubernetes runs inside a Kubernetes cluster. It is registered with Kubernetes to watch the nginx-ingress Service (NGINX Ingress Controller). When the backends change, NGINX Loadbalancer for Kubernetes collects the worker node IP addresses and the NodePort TCP port numbers, then sends the resulting IP:port pairs to NGINX Plus via the NGINX Plus API. The NGINX upstream servers are updated with no reload required, and NGINX Plus load balances traffic to the correct upstream servers and Kubernetes NodePorts. Additional NGINX Plus instances can be added to achieve high availability.
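The update step in this flow can be sketched as a small reconciliation function. This is an illustration under stated assumptions, not the controller's actual code: the upstream name nginx-lb-https, API version 9, and the 192.0.2.x addresses are all hypothetical. The function only plans the requests against the NGINX Plus API stream upstream endpoints and sends nothing.

```python
def desired_servers(worker_ips, node_port):
    """Every worker node exposes the Service on the same NodePort."""
    return {f"{ip}:{node_port}" for ip in worker_ips}


def plan_updates(current, worker_ips, node_port,
                 upstream="nginx-lb-https", api_version=9):
    """Plan the API calls that bring an upstream in line with the cluster.

    current: list of dicts shaped like the entries NGINX Plus returns when
    listing upstream servers, each with an "id" and a "server" address.
    The upstream name and API version are assumptions for this sketch.
    """
    base = f"/api/{api_version}/stream/upstreams/{upstream}/servers"
    desired = desired_servers(worker_ips, node_port)
    present = {entry["server"] for entry in current}

    calls = []
    # Add servers that exist in the cluster but not yet in NGINX Plus.
    for address in sorted(desired - present):
        calls.append(("POST", base, {"server": address}))
    # Remove servers that NGINX Plus still lists but the cluster no longer has.
    for entry in current:
        if entry["server"] not in desired:
            calls.append(("DELETE", f"{base}/{entry['id']}", None))
    return calls


# Hypothetical state: one node was replaced since the last update.
current = [
    {"id": 0, "server": "192.0.2.11:30080"},
    {"id": 1, "server": "192.0.2.12:30080"},
]
calls = plan_updates(current, ["192.0.2.11", "192.0.2.13"], 30080)
for method, path, body in calls:
    print(method, path, body)
```

Because the changes go through the API rather than the configuration file, they take effect without a reload, which is the property the flow above relies on.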

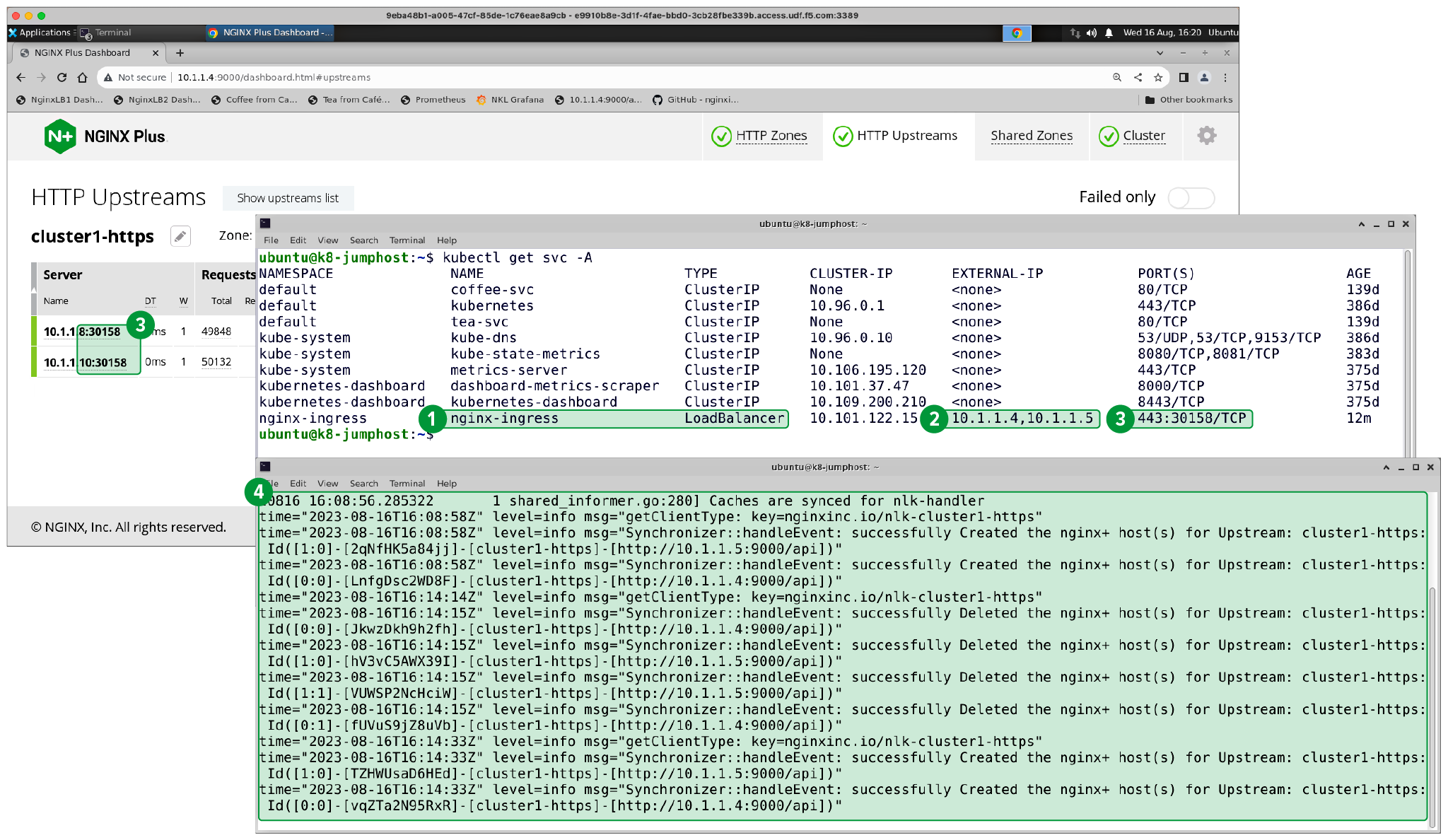

A Snapshot of NGINX Loadbalancer for Kubernetes in Action

In the screenshot below, there are two windows that demonstrate NGINX Loadbalancer for Kubernetes deployed and doing its job:

- Service Type – LoadBalancer (for nginx-ingress)

- External IP – Connects to the NGINX Plus servers

- Ports – NodePort maps to 443:30158 with matching NGINX upstream servers (as shown in the NGINX Plus real-time dashboard)

- Logs – Indicates NGINX Loadbalancer for Kubernetes is successfully sending data to NGINX Plus

Note: In this example, the Kubernetes worker nodes are 10.1.1.8 and 10.1.1.10.
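Using the values from this example, the upstream servers you would expect to see in the dashboard can be derived from the port mapping and the worker node addresses. The helper below is a hypothetical sketch written for this walkthrough; it is not part of the project.

```python
def upstream_addresses(port_mapping, worker_nodes):
    """Split a 'servicePort:nodePort' mapping and pair the NodePort with each node.

    Also tolerates a trailing protocol suffix such as '443:30158/TCP'.
    """
    ports = port_mapping.split("/")[0]
    service_port, node_port = (int(p) for p in ports.split(":"))
    return service_port, [f"{node}:{node_port}" for node in worker_nodes]


service_port, servers = upstream_addresses("443:30158", ["10.1.1.8", "10.1.1.10"])
print(service_port)  # 443
print(servers)       # ['10.1.1.8:30158', '10.1.1.10:30158']
```

If the NodePort changed, the same derivation would yield a new pair of addresses. That is the update the controller pushes to NGINX Plus so nobody has to edit the load balancer by hand.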

Get Started Today

If you’re frustrated with networking challenges at the edge of your Kubernetes cluster, take the project for a spin and let us know what you think. NGINX Loadbalancer for Kubernetes is open source (under the Apache 2.0 license), and the source code and all installation instructions are available on GitHub.

To provide feedback, drop us a comment in the repo or message us in the NGINX Community Slack.

The post Automate TCP Load Balancing to On-Premises Kubernetes Services with NGINX appeared first on NGINX.
