Building and Securely Delivering APIs with the NGINX Application Platform

The unprecedented growth of the API management market is fueled by demand for modern APIs connecting applications and devices to accelerate digital transformation, as well as for real‑time insights and analytics on API performance and usage.

According to MarketsandMarkets, the API management market is expected to grow to $5.1 billion by 2023. Yet API management is only half of what you need to do to monetize your APIs. Building fast APIs is just as important as (if not more important than) delivering them securely to your customers.

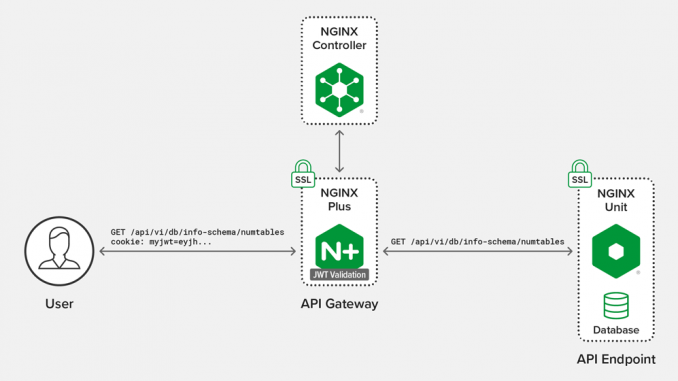

In this blog, we demonstrate how the NGINX Application Platform – the combination of NGINX Controller, NGINX Plus, and NGINX Unit – accelerates application delivery from code to customer, providing a comprehensive and unified solution for API monetization that spans end-to-end from the creation of API functions through their delivery to end users.

Consider, for example, a mobile e-commerce app that obtains inventory information by making an API call to a web application, which in turn connects to a database. To simulate this use case, we’ve constructed a simple app server that calculates the total number of tables in a database, and returns the results via an authenticated API call.

The diagram depicts the architecture of our end-to-end solution:

Using NGINX Controller 3.2, we provision NGINX Plus R20 as an API gateway, which validates JSON Web Tokens (JWTs) to authenticate users accessing the API endpoint. The API endpoint is a backend server running a FaaS (Function as a Service) written in Go 1.13.5, with NGINX Unit 1.13.0 as the application server. The API function computes the total number of tables in the information_schema database hosted on the backend server. From the user perspective, the API is accessed at a URI (/api/v1/db/info-schema/numtables) and the user needs to present a valid JWT in the myjwt cookie to access the API endpoint.

Looking for a seamless way to securely deliver your APIs to authenticated users? Let’s step through the provisioning of NGINX Controller, NGINX Plus, and NGINX Unit.

Configuring NGINX Controller and NGINX Plus

We begin by configuring the NGINX Controller API Management Module to deliver our API.

Note: There are four main tabs in the Controller GUI: Analytics, Services, Infrastructure, and Platform. To navigate among them as directed in the instructions, click the menu icon in the upper left corner of the Controller window and select the appropriate tab from the drop‑down list.

- Open an NGINX Controller session and log in.

-

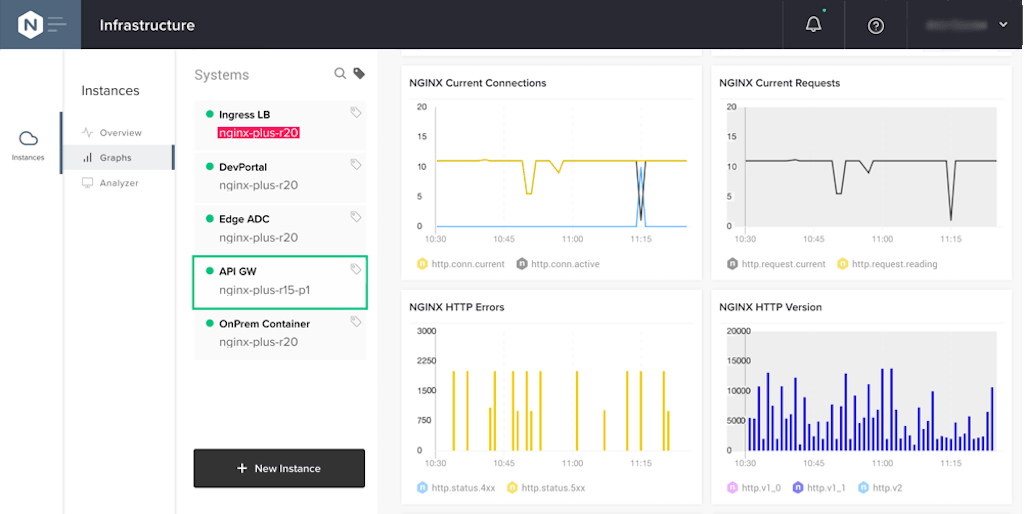

We first review the set of NGINX Plus instances being managed by Controller.

Open the Infrastructure tab and click Graphs in the Instances column. Our NGINX Plus instances are listed in the Systems column. In this screenshot, we’ve selected the NGINX Plus instance serving as our API gateway, API GW. Note that there is also a dedicated DevPortal instance that hosts the documentation for our API definitions.

- Create a Controller Environment for the API. An Environment is a logical container for a set of resources that a particular group of users (for example, an app development team) is allowed to access and manage. (For details, see https://your-Controller/docs/services/environments/manage-environments/.)

Open the Services tab and click Environments in the first column. Click +Create Environment in the My Environments column. In the Create Environment column that opens, the only required field is Name (devguest in our example); we also specify values in the other fields, as shown in the screenshot. Click the Submit button.

-

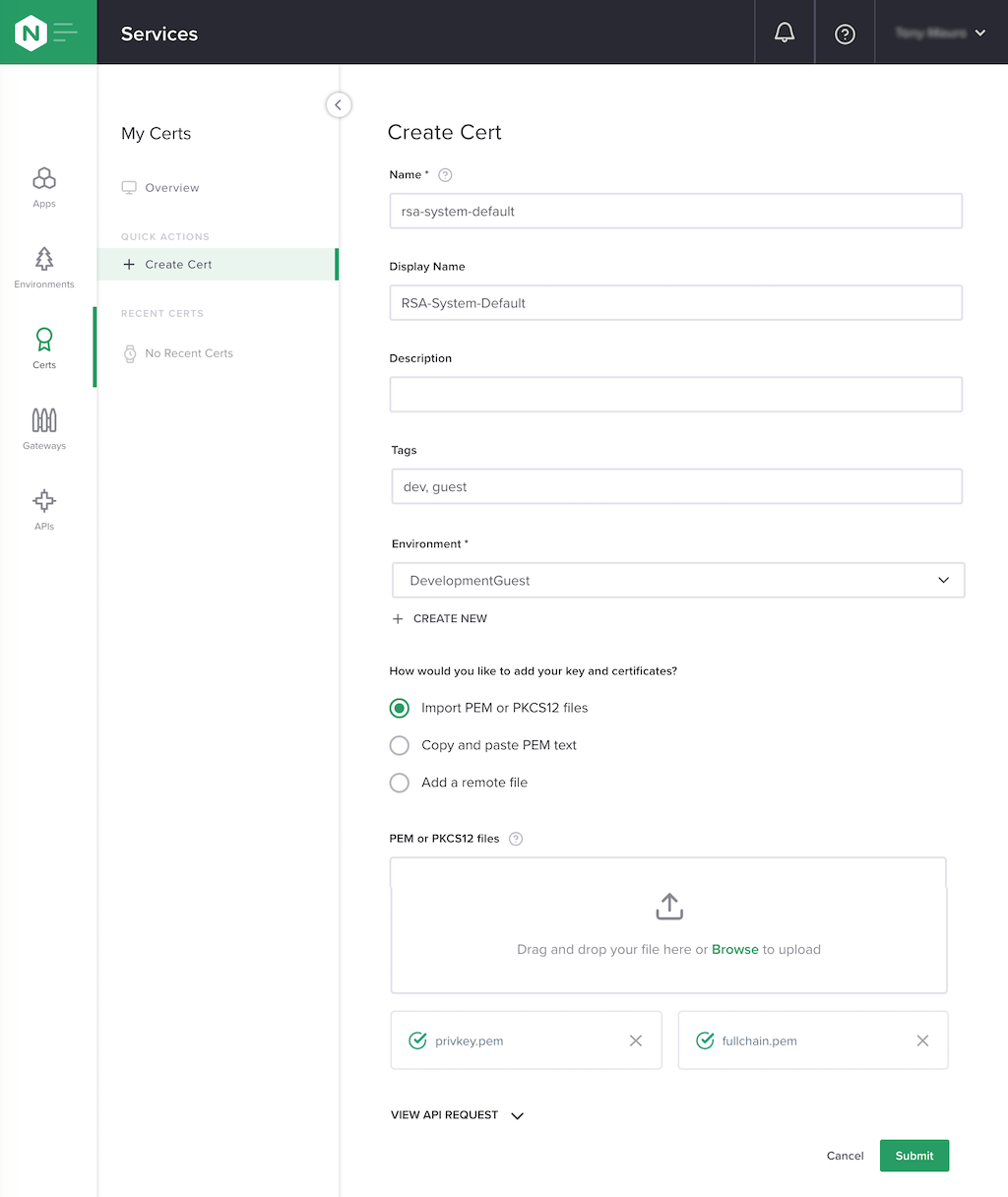

Upload a security certificate to a certificate template. Here we’ve obtained a certificate from Let’s Encrypt.

Click Certs in the first column, then +Create Cert in the My Certs column. The required fields in the Create Cert column are Name and Environment (rsa-system-default and DevelopmentGuest in our example). Drag and drop the key and cert files into the PEM or PKCS12 field (the screenshot shows the result, with the two .pem files loaded). Click the Submit button.

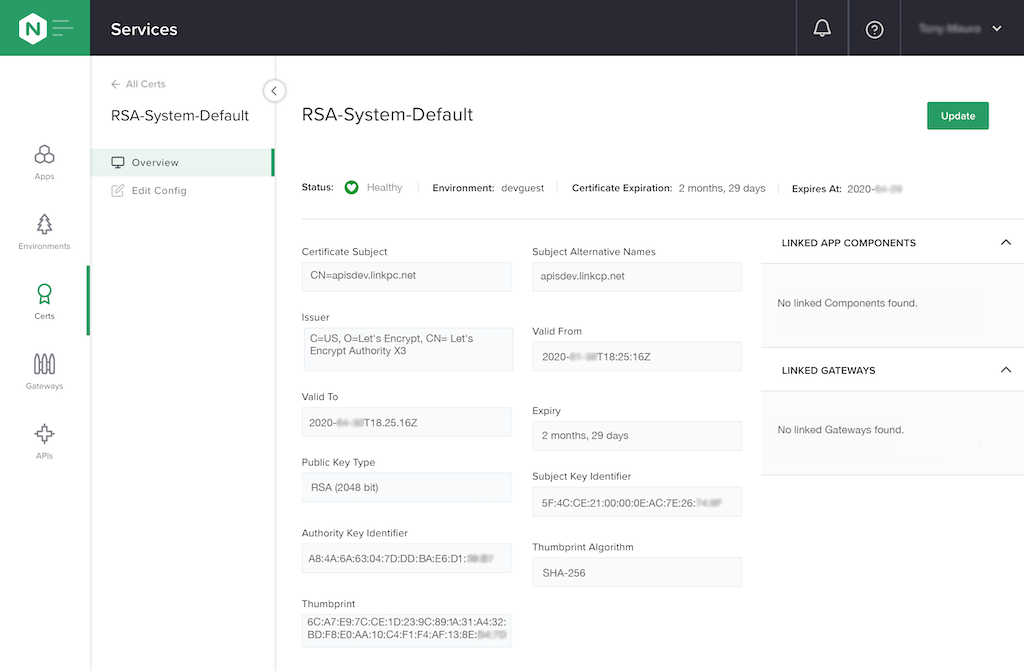

To display details about the certificate template, click its name (devguest/RSA-System-Default) in the My Certs column. In the window that opens we see that the uploaded certificate is valid for another 2 months and 29 days. When we created the certificate with Let’s Encrypt, we set the fully qualified domain name that resolves to the IP address of our API gateway, and it now appears automatically in the Certificate Subject field as CN=apisdev.linkpc.net.

- Create a TLS gateway that references the certificate template. External users who access the hostname for our API (apisdev.linkpc.net) connect to it through the TLS gateway.

Click Gateways in the first column, then +Create Gateway in the My Gateways column. In the Configuration column that opens, the required fields are Name and Environment (in this example, api-ingress-gateway and DevelopmentGuest respectively). Click the Next → button.

- Associate the gateway with an NGINX Plus instance.

In the Instance Refs field of the Placements column that opens, select API-GW from the drop‑down menu. Click the Next → button.

- Define the hostname at which users access the gateway, and the TLS cert that secures it.

In the Hostnames column that opens, click Add Hostname and enter apisdev.linkpc.net in the Hostname (URI Formatted) field that opens. In the Cert Reference field, select RSA-System-Default from the drop‑down menu. Click the Publish button.

-

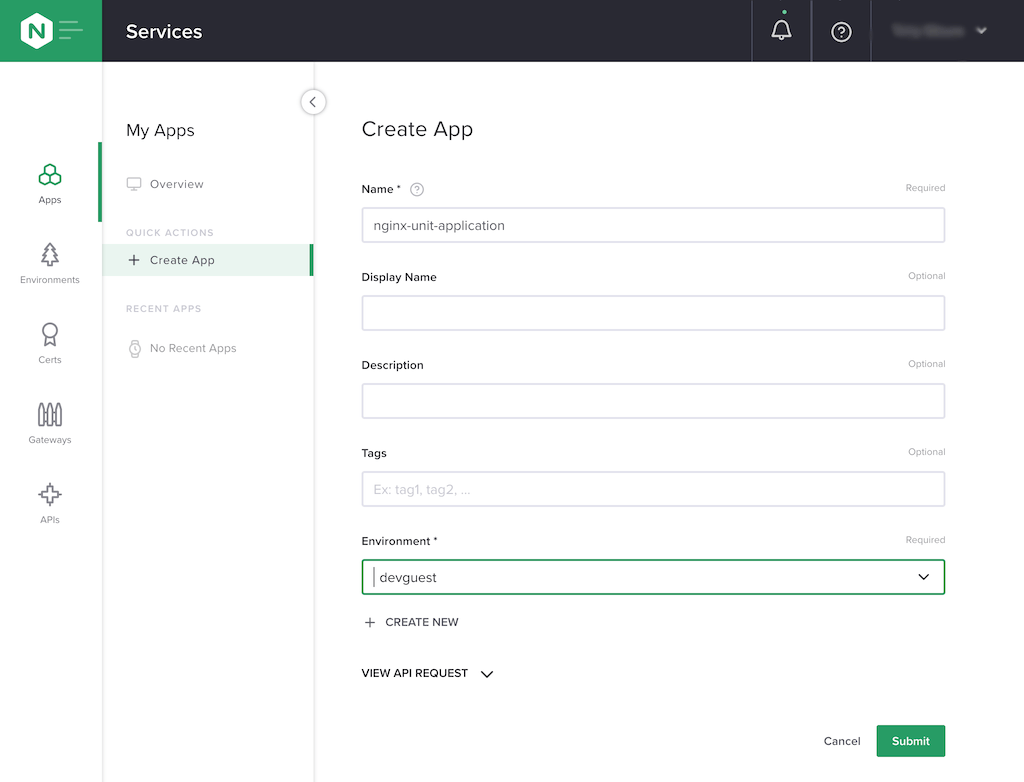

Create an application associated with our API.

Click Apps in the first column, then +Create App in the My Apps column. In the Create App column that opens, enter nginx-unit-application in the Name field and select devguest from the drop‑down menu in the Environment field. Click the Submit button.

-

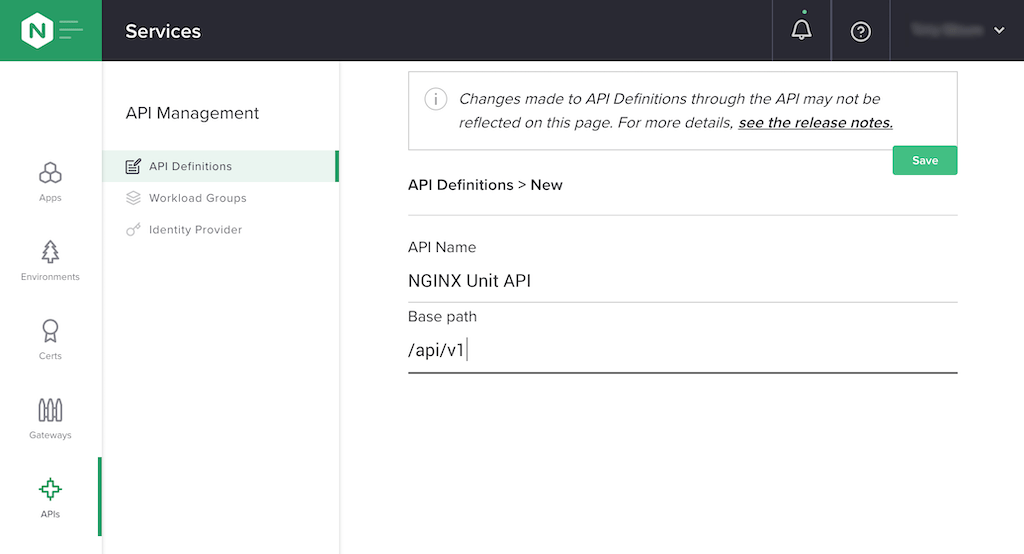

Create an API definition.

Click APIs in the first column, then the Create an API Definition button. In the API Definitions > New column than opens, enter NGINX Unit API in the API Name field and /api/v1 in the Base path field. Click the Save button.

-

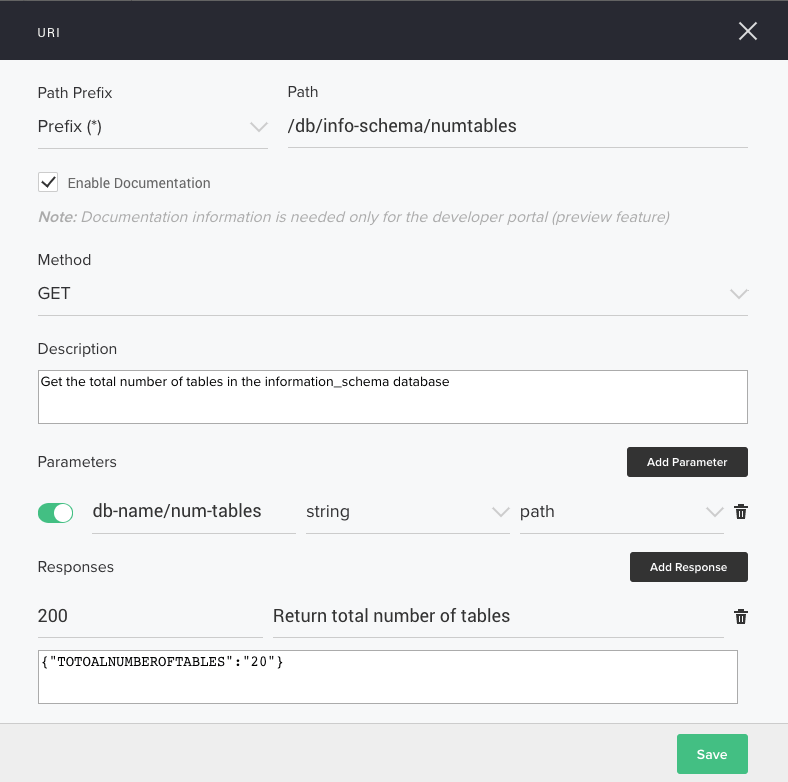

Define the URI, HTTP method, parameters, and responses for your API. As mentioned in the introduction, our API computes the total number of tables in the information_schema database hosted on the backend server.

When you click the Save button in the previous step, the URIs column opens under the Base path field. Click the Add a URI button. In the URI window that pops up, enter the values shown in the screenshot below. (The bottom four fields open when you click the Enable Documentation checkbox. The fields in the Parameters and Responses areas open when you click their respective Add buttons.) When finished, click the Save button.

-

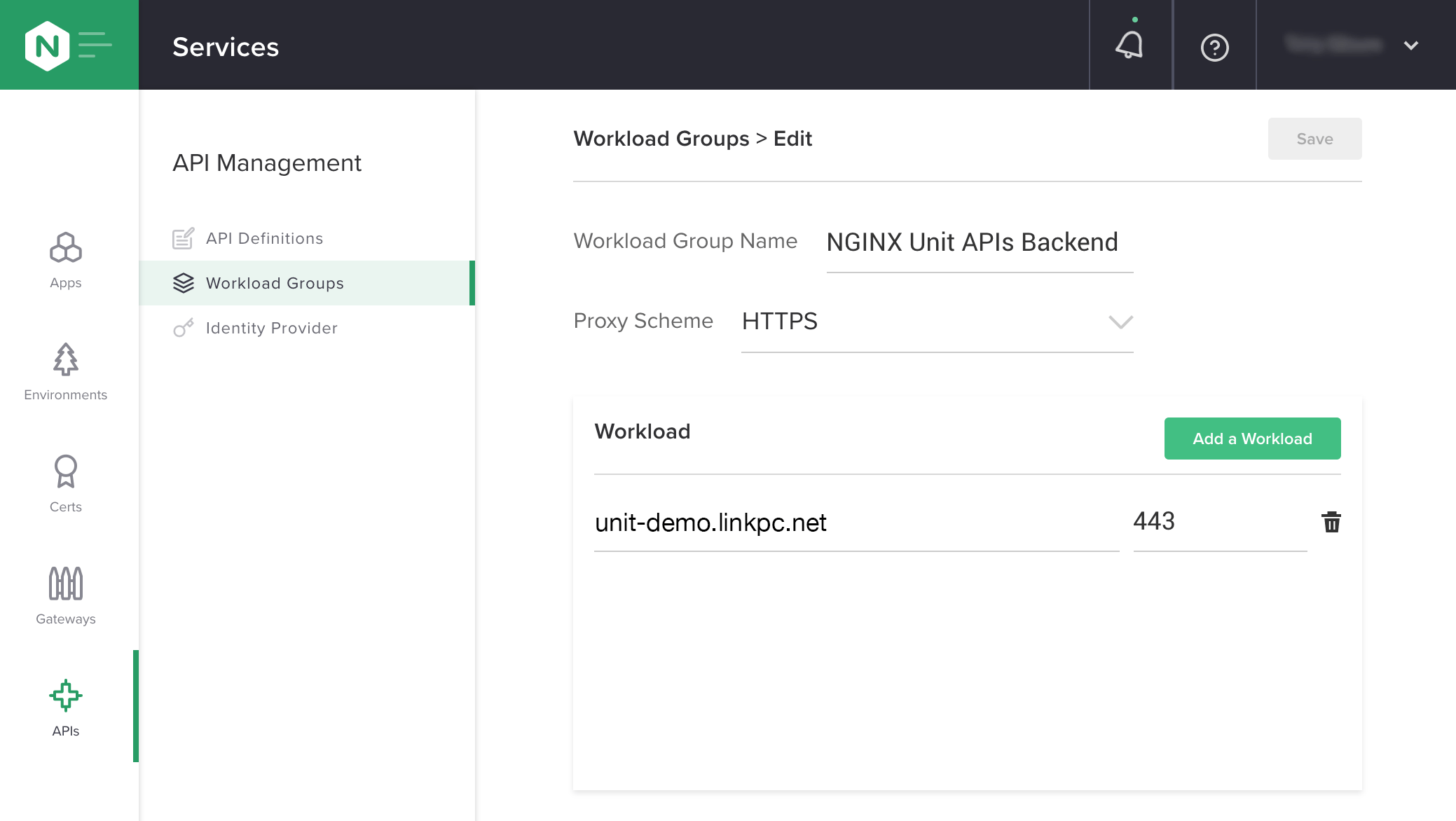

Create an NGINX Plus upstream group (which Controller calls a workload group) to define the backend server for our API, including the protocol used for communication from NGINX Plus through NGINX Unit to the backend server, and the server’s hostname and port. As mentioned in the introduction, our backend API is a Go FaaS executed by NGINX Unit on the backend server.

Click Workload Groups in the API Management column, then the Create a Workload Group button. Enter NGINX Unit APIs Backend in the Workload Group Name field, and select HTTPS from the Proxy Scheme drop‑down menu. Click the Save button.

The Workload section opens under the Proxy Scheme field. Click the Add a Workload button.

Enter the hostname and port of the backend server in the my-workload.com and Port fields (unit-demo.linkpc.net and 443 in our example), and click the Add button.

-

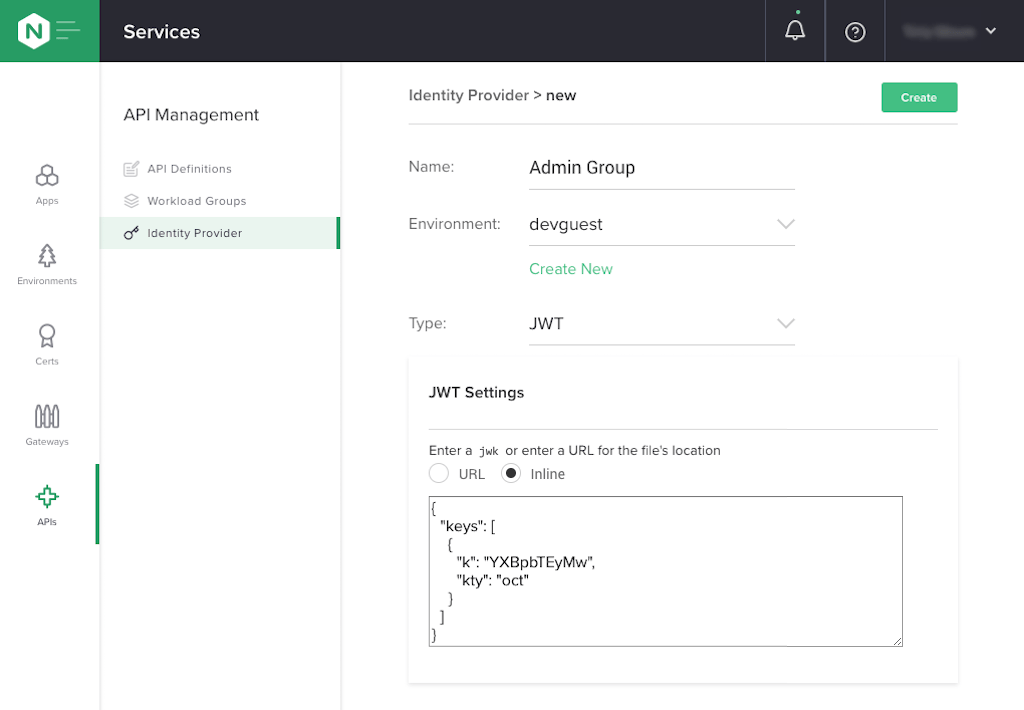

Set up authentication for the clients accessing our API. As mentioned in the introduction, we’re configuring NGINX Plus as the API gateway and using JSON Web Tokens (JWTs) to authenticate clients.

In the API Management column click Identity Provider, then click the Create an Identity Provider button in the Identity Provider column that opens.

In the fields that appear, enter or select the following values:

- Name – Admin Group

- Environment – devtest

- Type – JWT

When you select JWT, the JWT Settings section opens. Select the Inline radio button (the default) and enter the following text in the box. The value in the k field is the Base64URL‑encoded form of an arbitrary secret key (apim123 in our example):

    { "keys": [ { "k": "YXBpbTEyMw", "kty": "oct" } ] }

Click the Create button.
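To make the relationship between the secret, the k value, and the tokens signed with it more concrete, here is a small Go sketch using only the standard library. It is an illustration under our own assumptions, not something Controller runs: the function names buildJWKS and signHS256 and the claims in the payload are hypothetical, and we assume the HS256 algorithm, which is what a symmetric ("oct") key is typically used with.

```go
package main

import (
	"crypto/hmac"
	"crypto/sha256"
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// jwk mirrors one entry of the "keys" array: "k" carries the encoded
// secret and "kty":"oct" marks it as a symmetric (octet sequence) key.
type jwk struct {
	K   string `json:"k"`
	Kty string `json:"kty"`
}

type jwks struct {
	Keys []jwk `json:"keys"`
}

// b64 is the unpadded Base64URL encoding used by both JWK and JWT.
var b64 = base64.RawURLEncoding

// buildJWKS wraps a raw secret in a single-key JWK set.
func buildJWKS(secret string) ([]byte, error) {
	return json.Marshal(jwks{Keys: []jwk{{K: b64.EncodeToString([]byte(secret)), Kty: "oct"}}})
}

// signHS256 builds a compact JWT of the form
// base64url(header).base64url(payload).base64url(HMAC-SHA256 signature).
func signHS256(secret string, claims map[string]interface{}) (string, error) {
	payload, err := json.Marshal(claims)
	if err != nil {
		return "", err
	}
	header := `{"alg":"HS256","typ":"JWT"}`
	signingInput := b64.EncodeToString([]byte(header)) + "." + b64.EncodeToString(payload)
	mac := hmac.New(sha256.New, []byte(secret))
	mac.Write([]byte(signingInput))
	return signingInput + "." + b64.EncodeToString(mac.Sum(nil)), nil
}

func main() {
	set, err := buildJWKS("apim123")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(set)) // {"keys":[{"k":"YXBpbTEyMw","kty":"oct"}]}

	// Illustrative claims only; a real identity provider decides these.
	token, err := signHS256("apim123", map[string]interface{}{"sub": "1234567890", "name": "John Doe"})
	if err != nil {
		panic(err)
	}
	fmt.Println(token)
}
```

The first line of output matches the JSON entered in the JWT Settings box; the second is a token that a gateway holding the same secret could verify.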

-

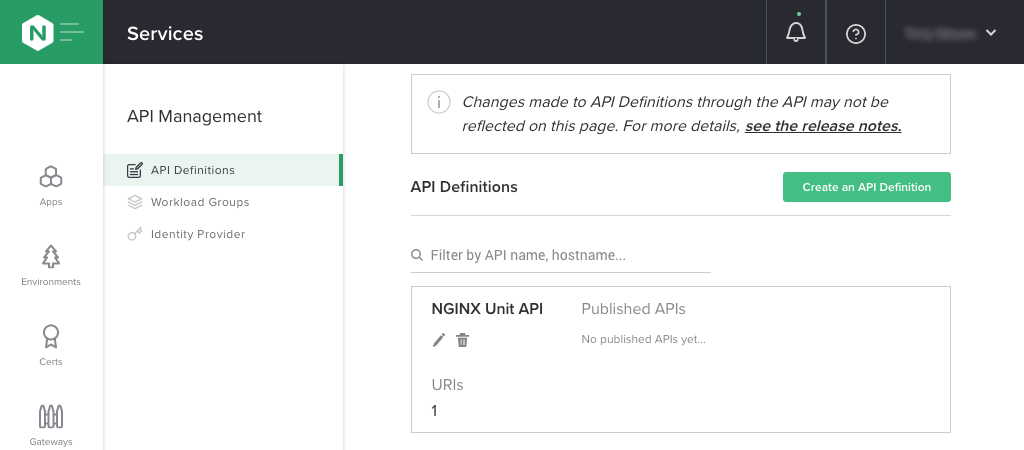

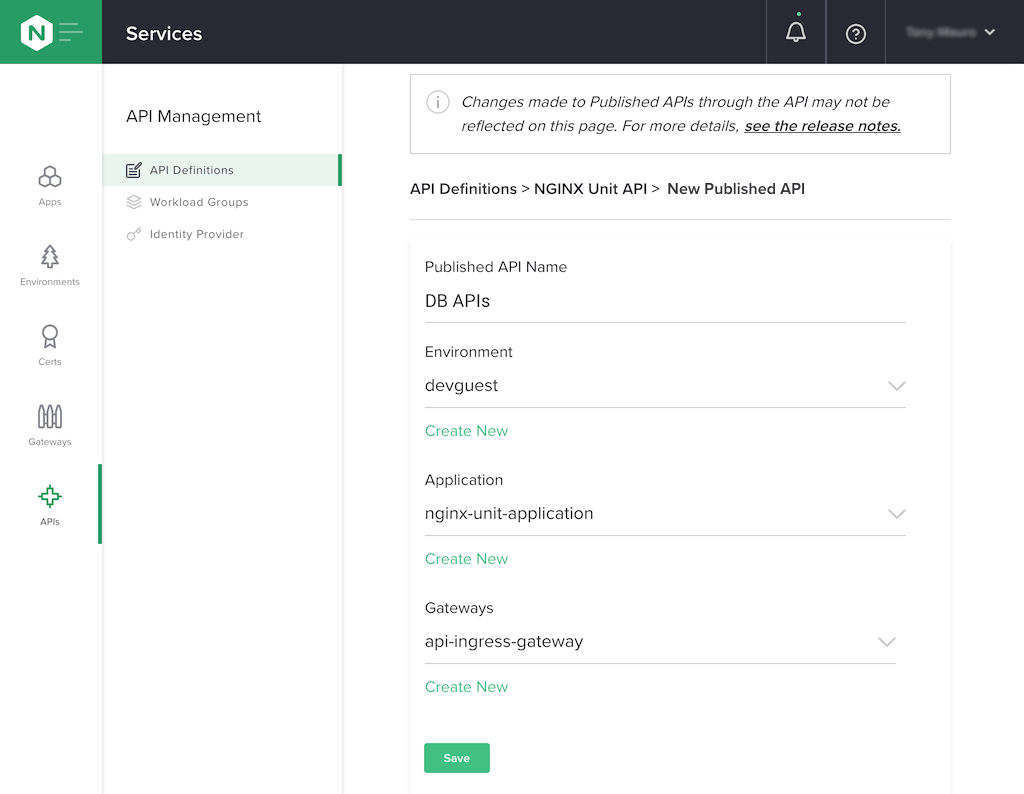

Publish the API.

Click API Definitions in the API Management column. In the box labeled NGINX Unit API in the API Definitions column, click the pencil icon to edit the API definition.

Click the Add a Published API button in the Published APIs column that opens. In the API Definitions > NGINX Unit API > New Published API column, enter or select the following values, then click the Save button:

- Published API Name – DB APIs

- Environment – devguest

- Application – nginx-unit-application

- Gateways – api-ingress-gateway

-

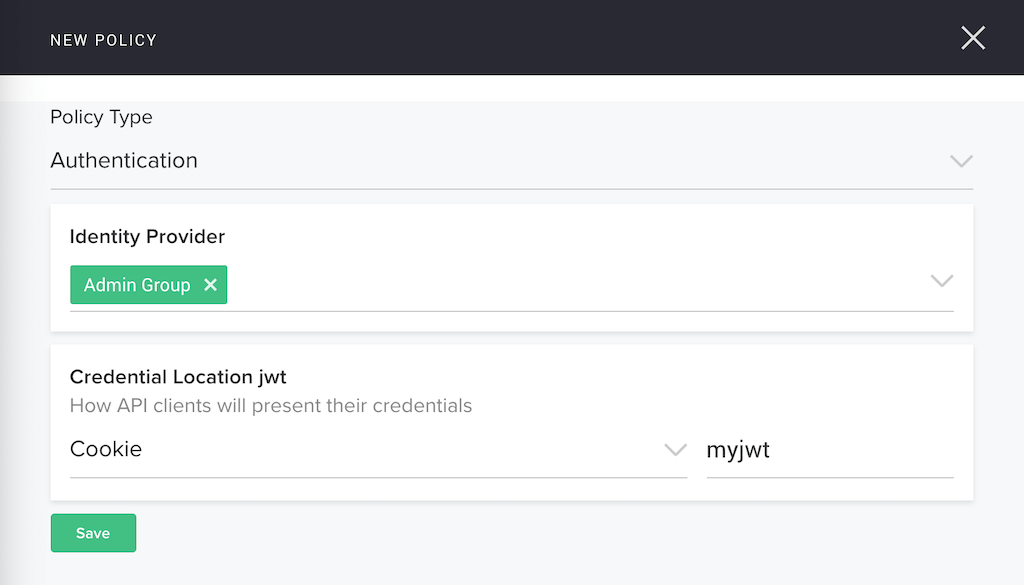

Create an authentication policy for our published API.

Scroll down on the same page and click the Add a policy button. In the NEW POLICY pop‑up window, select Authentication in the Policy Type field. (You can also create a rate limiting policy, by selecting Rate Limit instead; we are not imposing rate limits in our example.)

Next select Admin Group in the Identity Provider field, and Cookie in the Credential Location jwt field. Enter myjwt in the Cookie Name field and click the Save button.

-

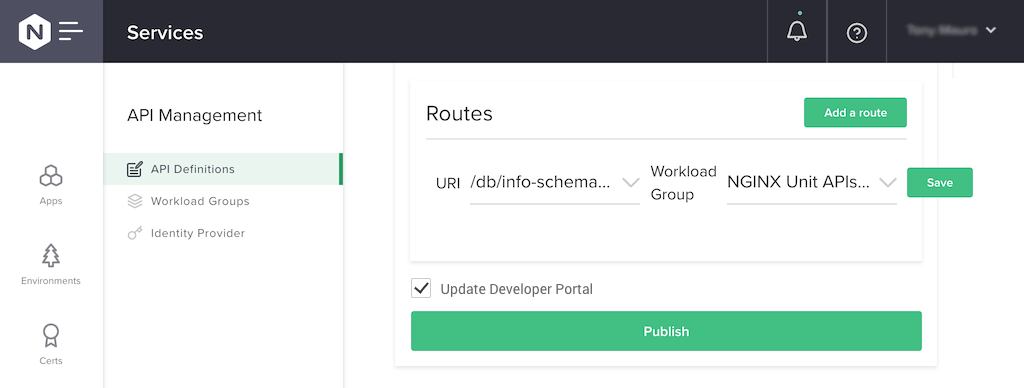

Create a route for our published API. This is what determines which workload group (API endpoint) receives requests made at a given URI.

Scroll down on the same page and click the Add a route button. Select /db/info-schema/numtables (GET) in the URI field, and NGINX Unit APIs Backend in the Workload Group field. Click the Save button. Click the Update Developer Portal checkbox and then the Publish button. Publishing the API definition updates the NGINX Plus configuration on the instance.
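To see how the pieces configured so far fit together, it can help to think of the published API as a lookup table: the base path (/api/v1) plus a URI and method select a workload group. The Go sketch below models that idea with hypothetical types (workloadGroup, routeKey, publishedAPI) of our own; it is a simplified mental model, not the configuration that Controller actually generates for NGINX Plus.

```go
package main

import (
	"fmt"
	"strings"
)

// workloadGroup describes where matching requests are proxied.
type workloadGroup struct {
	Name   string
	Scheme string
	Host   string
	Port   int
}

// routeKey identifies a route by HTTP method and URI relative to the base path.
type routeKey struct {
	Method string
	URI    string
}

// publishedAPI pairs a base path with its routes.
type publishedAPI struct {
	BasePath string
	Routes   map[routeKey]workloadGroup
}

// resolve returns the workload group for a request, if any route matches
// the method and the part of the path that follows the base path.
func (a publishedAPI) resolve(method, path string) (workloadGroup, bool) {
	if !strings.HasPrefix(path, a.BasePath) {
		return workloadGroup{}, false
	}
	wg, ok := a.Routes[routeKey{method, strings.TrimPrefix(path, a.BasePath)}]
	return wg, ok
}

func main() {
	backend := workloadGroup{"NGINX Unit APIs Backend", "https", "unit-demo.linkpc.net", 443}
	api := publishedAPI{
		BasePath: "/api/v1",
		Routes: map[routeKey]workloadGroup{
			{"GET", "/db/info-schema/numtables"}: backend,
		},
	}

	if wg, ok := api.resolve("GET", "/api/v1/db/info-schema/numtables"); ok {
		fmt.Printf("%s://%s:%d\n", wg.Scheme, wg.Host, wg.Port) // https://unit-demo.linkpc.net:443
	}

	// Only GET is defined for this URI, so other methods do not resolve.
	_, ok := api.resolve("POST", "/api/v1/db/info-schema/numtables")
	fmt.Println(ok) // false
}
```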

Provisioning NGINX Unit

NGINX Unit is the only fully dynamic, API‑driven application server that can simultaneously run applications written in different languages and frameworks, while also providing reverse‑proxying and web‑serving capabilities. It bridges the divide between developers and network operators by reducing isolation on both sides, while empowering them to build apps with fewer constraints.

Are you ready to go fast? Run a Go FaaS (Function as a Service) with NGINX Unit, and start building APIs just as fast as NGINX Plus ends up delivering them to your consumers.

Building the API

We installed the unit-go package when we installed NGINX Unit.

We start by defining our API, which in this example is a simple Go function that returns the total number of tables in the database called information_schema. Here’s the source code in info_count_tables.go. Note that we list the NGINX Unit Go package in the import section, and at the end of the file substitute unit.ListenAndServe() for the usual http.ListenAndServe() function:

    package main

    import (
        "database/sql"
        "io"
        "net/http"
        "strconv"

        _ "github.com/go-sql-driver/mysql" // registers the MySQL driver
        unit "unit.nginx.org/go"           // NGINX Unit Go package (older releases import it as "nginx/unit")
    )

    func main() {
        http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
            // replace ${user} and ${password} with your database credentials
            db, err := sql.Open("mysql", "${user}:${password}@tcp(127.0.0.1:3306)/information_schema")
            if err != nil {
                panic(err.Error())
            }
            defer db.Close()

            rows, err := db.Query("SELECT count(*) AS TOTALNUMBEROFTABLES FROM INFORMATION_SCHEMA.TABLES WHERE TABLE_SCHEMA = 'information_schema'")
            if err != nil {
                panic(err.Error()) // proper error handling instead of panic in your app
            }
            defer rows.Close()

            var res = 0
            for rows.Next() {
                // scan the single count column of each row into res
                err = rows.Scan(&res)
                if err != nil {
                    panic(err.Error()) // proper error handling instead of panic in your app
                }
                io.WriteString(w, "{\"TOTALNUMBEROFTABLES\":\"" + strconv.Itoa(res) + "\"}")
            }
        })

        // under NGINX Unit, this call replaces http.ListenAndServe()
        unit.ListenAndServe(":443", nil)
    }

To build the binary executable, we run the following command:

    $ go build info_count_tables.go

Configuring NGINX Unit

The following example.json file configures NGINX Unit to run the Go FaaS and return the output to the API gateway that initiated the request. Specifically, it instructs NGINX Unit to:

- Listen on the 192.168.27.159:443 socket (replace the address as appropriate for your server). We are enabling SSL/TLS on the application server so we have included a certificate bundle.

- Run the info_count_tables executable for incoming HTTPS requests whose Host header is apisdev.linkpc.net (the hostname of the API gateway) and whose request URI is /api/v1/db/info-schema/numtables.

    {
        "listeners": {
            "192.168.27.159:443": {
                "pass": "routes/main",
                "tls": {
                    "certificate": "bundle"
                }
            }
        },
        "routes": {
            "main": [
                {
                    "match": {
                        "host": [
                            "apisdev.linkpc.net"
                        ],
                        "scheme": "https",
                        "uri": [
                            "/api/v1/db/info-schema/numtables"
                        ]
                    },
                    "action": {
                        "pass": "applications/db_info_schema_numtables"
                    }
                }
            ]
        },
        "applications": {
            "db_info_schema_numtables": {
                "type": "external",
                "working_directory": "/opt/unit/src",
                "executable": "info_count_tables"
            }
        },
        "access_log": "/var/log/access.log"
    }

Run the following command on the application server to update the NGINX Unit configuration with the contents of example.json:

    $ curl -iX PUT --data-binary @example.json http://localhost:8443/config

The Results in Action

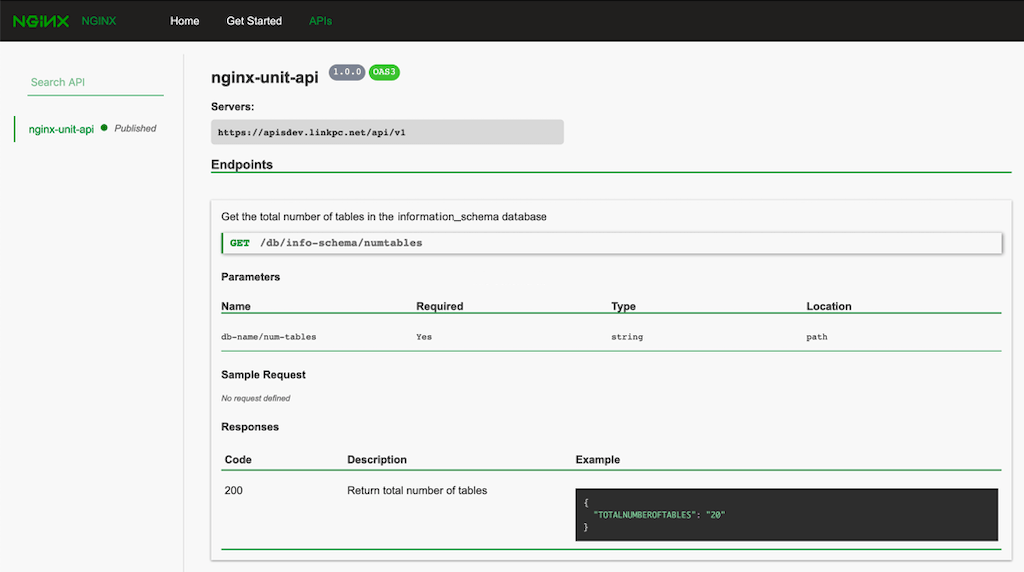

We now have everything provisioned and ready to go. Let’s visit our updated developer portal. Open a browser and enter http://dev-portal:8090 in the address bar, where dev-portal is the public IP address of the NGINX Plus instance hosting our developer portal (recall that in Step 2 of Configuring NGINX Controller and NGINX Plus we pointed out the instance called DevPortal). The documentation for our API definition appears.

Attempting to access the API without a cookie returns HTTP status code 401.

    $ curl -s --insecure https://apisdev.linkpc.net:443/api/v1/db/info-schema/numtables
    <html>
    <head><title>401 Authentication Required</title></head>
    <body>
    <center><h1>401 Authentication Required</h1></center>
    <hr><center>nginx</center>
    </body>
    </html>

In a production environment, your identity provider (such as Okta, OneLogin, or Ping Identity) generates JWTs that are digitally signed by a private key. In this demo we’re generating the token manually. Once the JWT is generated, include it on the curl command line like so:

    $ curl -i --insecure --cookie myjwt=eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJzdWIiOiIxMjM0NTY3ODkwIiwibmFtZSI6IkpvaG4gRG9lIiwiaWF0IjoxNTE2MjM5MDIyfQ.2p7Ov69UwxPquichQ6Fo0KR-ZWQouBz7h4gdjsXCxaQ https://apisdev.linkpc.net:443/api/v1/db/info-schema/numtables
    HTTP/1.1 200 OK
    Server: nginx
    Date: Day, DD Mon Year 23:35:24 GMT
    Content-Type: text/html; charset=utf-8
    Transfer-Encoding: chunked
    Connection: keep-alive

    {"TOTALNUMBEROFTABLES":"61"}

To make sure the API is running correctly, check the NGINX Unit logs by running the following command on the application server. The output looks something like this:

    $ tail -n 10 /var/log/access.log
    52.8.184.22 - - [DD/Mon/Year:00:43:47 +000] "GET /api/v1/db/info-schema/numtables HTTP/1.1" 200 41 "-" "curl/7.65.2"
    52.8.184.22 - - [DD/Mon/Year:00:43:48 +000] "GET /api/v1/db/info-schema/numtables HTTP/1.1" 200 41 "-" "curl/7.65.2"
    52.8.184.22 - - [DD/Mon/Year:00:43:49 +000] "GET /api/v1/db/info-schema/numtables HTTP/1.1" 200 41 "-" "curl/7.65.2"

Conclusion

The NGINX Application Platform combines NGINX Controller, NGINX Plus, and NGINX Unit to provide a rapid and effective end-to-end solution for running APIs and delivering them securely to authenticated users. We used NGINX Unit on the backend server to run the API function, NGINX Plus as the API gateway that authenticates users, and NGINX Controller to configure the gateway and publish the API to developers so they can access it.

Find out how easy it is to develop and deploy an API with NGINX Controller – start a free 30-day trial today or contact us to discuss your use cases.

The post Building and Securely Delivering APIs with the NGINX Application Platform appeared first on NGINX.
