Cognitive Services APIs: Vision

What exactly are Cognitive Services and what are they for? Cognitive Services are a set of machine learning algorithms that Microsoft has developed to solve problems in the field of Artificial Intelligence (AI). The goal of Cognitive Services is to democratize AI by packaging it into discrete components that are easy for developers to use in their own apps. Web and Universal Windows Platform developers can consume these algorithms through standard REST calls over the Internet to the Cognitive Services APIs.

The Cognitive Services APIs are grouped into five categories…

- Vision—analyze images and videos for content and other useful information.

- Speech—tools to improve speech recognition and identify the speaker.

- Language—understanding sentences and intent rather than just words.

- Knowledge—tracks down research from scientific journals for you.

- Search—applies machine learning to web searches.

So why is it worthwhile to provide easy access to AI? Anyone watching tech trends realizes we are in the middle of a period of huge AI breakthroughs right now with computers beating chess champions, go masters and Turing tests. All the major technology companies are in an arms race to hire the top AI researchers.

Along with high profile AI problems that researchers know about, like how to beat the Turing test and how to model computer neural-networks on human brains, are discrete problems that developers are concerned about, like tagging our family photos and finding an even lazier way to order our favorite pizza on a smartphone. The Cognitive Services APIs are a bridge allowing web and UWP developers to use the resources of major AI research to solve developer problems. Let’s get started by looking at the Vision APIs.

Cognitive Services Vision APIs

The Vision APIs are broken out into five groups of tasks…

- Computer Vision—Distill actionable information from images.

- Content Moderator—Automatically moderate text, images and videos for profanity and inappropriate content.

- Emotion—Analyze faces to detect a range of moods.

- Face—identify faces and similarities between faces.

- Video—Analyze, edit and process videos within your app.

Because the Computer Vision API on its own is a huge topic, this post will mainly deal with just its capabilities as an entry way to the others. The description of how to use it, however, will provide you good sense of how to work with the other Vision APIs.

Note: Many of the Cognitive Services APIs are currently in preview and are undergoing improvement and change based on user feedback.

One of the biggest things that the Computer Vision API does is tag and categorize an image based on what it can identify inside that image. This is closely related to a computer vision problem known as object recognition. In its current state, the API recognizes about 2000 distinct objects and groups them into 87 classifications.

Using the Computer Vision API is pretty easy. There are even samples available for using it on a variety of development platforms including NodeJS, the Android SDK and the Swift SDK. Let’s do a walkthrough of building a UWP app with C#, though, since that’s the focus of this blog.

The first thing you need to do is register at the Cognitive Services site and request a key for the Computer Vision Preview (by clicking on one of the “Get Started for Free” buttons.

Next, create a new UWP project in Visual Studio and add the ProjectOxford.Vision NuGet package by opening Tools | NuGet Package Manager | Manage Packages for Solution and selecting it. (Project Oxford was an earlier name for the Cognitive Services APIs.)

For a simple user interface, you just need an Image control to preview the image, a Button to send the image to the Computer Vision REST Services and a TextBlock to hold the results. The workflow for this app is to select an image -> display the image -> send the image to the cloud -> display the results of the Computer Vision analysis.

<Grid Background="{ThemeResource ApplicationPageBackgroundThemeBrush}">

<Grid.RowDefinitions>

<RowDefinition Height="9*"/>

<RowDefinition Height="*"/>

</Grid.RowDefinitions>

<Grid.ColumnDefinitions>

<ColumnDefinition/>

<ColumnDefinition/>

</Grid.ColumnDefinitions>

<Border BorderBrush="Black" BorderThickness="2">

<Image x:Name="ImageToAnalyze" />

</Border>

<Button x:Name="AnalyzeButton" Content="Analyze" Grid.Row="1" Click="AnalyzeButton_Click"/>

<TextBlock x:Name="ResultsTextBlock" TextWrapping="Wrap" Grid.Column="1" Margin="30,5"/>

</Grid>

When the Analyze Button gets clicked, the handler in the Page’s code-behind will open a FileOpenPicker so the user can select an image. In the ShowPreviewAndAnalyzeImage method, the returned image is used as the image source for the Image control.

readonly string _subscriptionKey;

public MainPage()

{

//set your key here

_subscriptionKey = "b1e514ef0f5b493xxxxx56a509xxxxxx";

this.InitializeComponent();

}

private async void AnalyzeButton_Click(object sender, RoutedEventArgs e)

{

var openPicker = new FileOpenPicker

{

ViewMode = PickerViewMode.Thumbnail,

SuggestedStartLocation = PickerLocationId.PicturesLibrary

};

openPicker.FileTypeFilter.Add(".jpg");

openPicker.FileTypeFilter.Add(".jpeg");

openPicker.FileTypeFilter.Add(".png");

openPicker.FileTypeFilter.Add(".gif");

openPicker.FileTypeFilter.Add(".bmp");

var file = await openPicker.PickSingleFileAsync();

if (file != null)

{

await ShowPreviewAndAnalyzeImage(file);

}

}

private async Task ShowPreviewAndAnalyzeImage(StorageFile file)

{

//preview image

var bitmap = await LoadImage(file);

ImageToAnalyze.Source = bitmap;

//analyze image

var results = await AnalyzeImage(file);

//"fr", "ru", "it", "hu", "ja", etc...

var ocrResults = await AnalyzeImageForText(file, "en");

//parse result

ResultsTextBlock.Text = ParseResult(results) + "nn " + ParseOCRResults(ocrResults);

}

The real action happens when the returned image then gets passed to the VisionServiceClient class included in the Project Oxford NuGet package you imported. The Computer Vision API will try to recognize objects in the image you pass to it and recommend tags for your image. It will also analyze the image properties, color scheme, look for human faces and attempt to create a caption, among other things.

private async Task<AnalysisResult> AnalyzeImage(StorageFile file)

{

VisionServiceClient VisionServiceClient = new VisionServiceClient(_subscriptionKey);

using (Stream imageFileStream = await file.OpenStreamForReadAsync())

{

// Analyze the image for all visual features

VisualFeature[] visualFeatures = new VisualFeature[] { VisualFeature.Adult, VisualFeature.Categories

, VisualFeature.Color, VisualFeature.Description, VisualFeature.Faces, VisualFeature.ImageType

, VisualFeature.Tags };

AnalysisResult analysisResult = await VisionServiceClient.AnalyzeImageAsync(imageFileStream, visualFeatures);

return analysisResult;

}

}

And it doesn’t stop there. With a few lines of code, you can also use the VisionServiceClient class to look for text in the image and then return anything that the Computer Vision API finds. This OCR functionality currently recognizes about 26 different languages.

private async Task<OcrResults> AnalyzeImageForText(StorageFile file, string language)

{

//language = "fr", "ru", "it", "hu", "ja", etc...

VisionServiceClient VisionServiceClient = new VisionServiceClient(_subscriptionKey);

using (Stream imageFileStream = await file.OpenStreamForReadAsync())

{

OcrResults ocrResult = await VisionServiceClient.RecognizeTextAsync(imageFileStream, language);

return ocrResult;

}

}

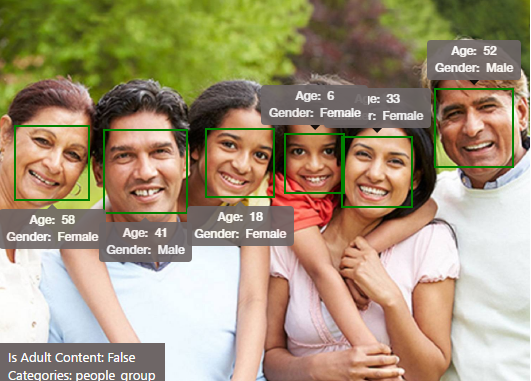

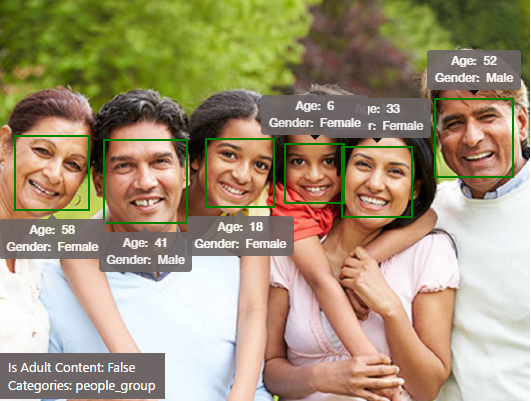

Combining the image analysis and text recognition features of the Computer Vision API will return results like that shown below.

The power of this particular Cognitive Services API is that it will allow you to scan your device folders for family photos and automatically start tagging them for you. If you add in the Face API, you can also tag your photos with the names of family members and friends. Throw in the Emotion API and you can even start tagging the moods of the people in your photos. With Cognitive Services, you can take a task that normally requires human judgement and combine it with the indefatigability of a machine (in this case a machine that learns) in order to perform this activity quickly and indefinitely on as many photos as you own.

Wrapping Up

In this first post in the Cognitive API series, you received an overview of Cognitive Services and what it offers you as a developer. You also got a closer look at the Vision APIs and a walkthrough of using one of them. In the next post, we’ll take a closer look at the Speech APIs. If you want to dig deeper on your own, here are some links to help you on your way…

- Artificial Intelligence based vision, speech and language API

- Cognitive Services: making AI easy

- Selection of resource to learn AI / Machine Learning

- Code samples

- The dawn of deep learning

Source: Cognitive Services APIs: Vision

Leave a Reply