Consolidating Your API Gateway and Load Balancer with NGINX

Now that software load balancers are the standard in modern, DevOps‑driven organizations, what new challenges have we created for ourselves? How can we deal with the complexities of “proxy‑sprawl”? Can we consolidate without compromising performance, stability, and functionality?

Most professionals in the web and app delivery space are familiar with the trajectory of the hardware application delivery controller (ADC) market. Hardware ADCs began as smart, load‑balancing routers and quickly rose to become the standard way of delivering high‑traffic or business‑critical applications.

Driven by the unceasing pressure to increase revenue, hardware ADC vendors bundled feature after feature into their devices – SSL VPN? virtual desktop broker? link load balancer? Sure, we can be that! Hardware devices became more and more complex and unwieldy, and users found this essential technology increasingly expensive to own and operate.

Software Is Displacing Hardware ADCs in Modern Enterprises

As hardware ADCs began to collapse under their own weight, DevOps teams turned to much lighter‑weight software alternatives to meet their application delivery needs. Software‑based solutions that used familiar open source technology – NGINX reverse proxy, ModSecurity web application firewall (WAF), Varnish cache, HAProxy load balancer – displaced the hardware alternatives. Software could be deployed easily and cost‑effectively on a per‑application basis, giving control directly to application owners and supporting the agile delivery processes that DevOps teams championed.

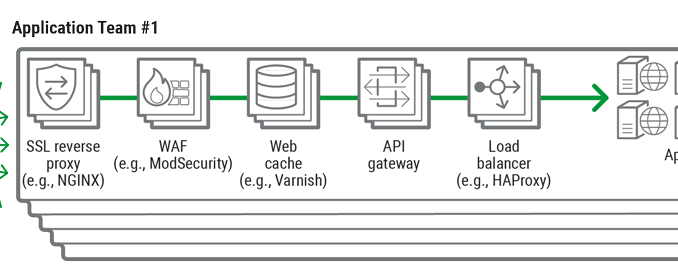

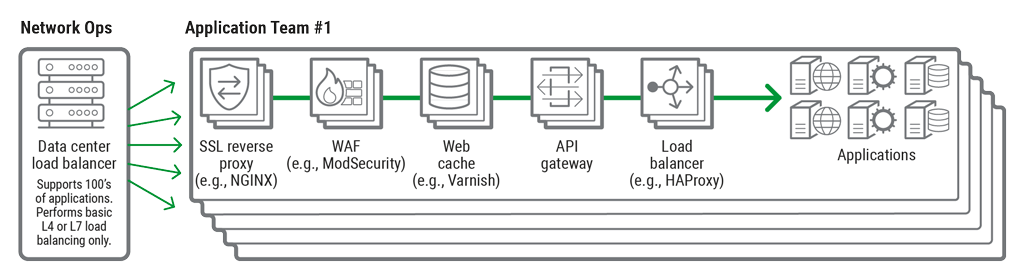

If you survey one of these deployments, it’s not unusual to see something resembling the following:

The software ecosystem is full of single‑purpose, point solutions for each problem faced by a DevOps engineer. Each new use case has been addressed with a new set of solutions built on the reverse proxy. For example, API gateway products arose because of the need to manage API traffic, applying routing, authentication, rate‑limiting, and access‑control policies to protect API‑based services.

Software Must Evolve Beyond Point Solutions

The explosion of point solutions clearly poses a problem for DevOps engineers. They must familiarize themselves with the processes of deploying, configuring, updating, and monitoring each solution. Troubleshooting becomes much more complex, especially when the problems arise from interactions among the different solutions.

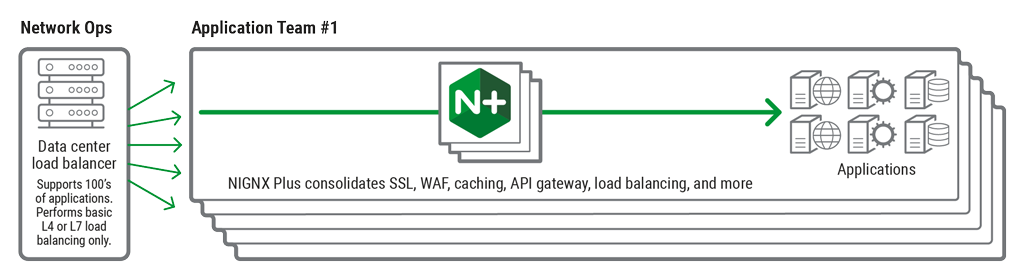

It seems history is repeating itself: just as the proliferation of purpose‑specific hardware devices led to consolidation of many functions on a single ADC, DevOps teams now need to reduce complexity and simplify their infrastructure by consolidating software functionality onto a single platform. But this time around it’s essential to avoid the mistakes made previously with hardware load balancers. A consolidated solution is of little use if it underperforms, is complex to operate, or is prohibitively expensive to deploy on a per‑application basis.

Consolidating the Application Delivery Stack with NGINX and NGINX Plus

Most DevOps engineers are already very familiar with NGINX, the open source web server and reverse proxy which delivers more of the world’s busiest websites than any other solution.

Organizations use open source NGINX to build a variety of specific solutions, ranging from distributed content delivery networks (CDNs, like CloudFlare, CloudFront, and MaxCDN) to local API gateways. NGINX is also at the heart of many open source and commercial point solutions, including commercial load balancers and API gateways from Kong and 3scale.

NGINX Plus is developed by the team behind open source NGINX. It removes the need for a complex chain of point solutions by consolidating multiple functions – authentication, reverse proxying, caching, API gateway, load balancing, and more – into a single software platform. It can be extended easily with certified modules that add, among other functions, WAF (with modules for ModSecurity, Stealth Security, and Wallarm), advanced authentication (Curity, ForgeRock, IDFConnect, Ping Identity), and programmability (NGINX JavaScript, Lua).

The benefits of NGINX Plus to the DevOps engineer go far beyond consolidation of functionality. A rich RESTful API provides deep insights into the health and performance of NGINX Plus and the backend servers it is load balancing. Dynamic reconfiguration and service discovery integration simplify the operation of NGINX Plus in fluid environments such as cloud or Kubernetes. The clustering capabilities in NGINX Plus enable you to distribute traffic and share run‑time state across a cluster in a reliable, highly available (HA) fashion.
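As a rough illustration of the API and service‑discovery features described above, the fragment below is a minimal sketch for the `http` context. It assumes NGINX Plus, because the `api` directive and the `resolve` server parameter shown here come from NGINX Plus. The upstream name, hostname, resolver address, and port are placeholders invented for the example.

```nginx
# Sketch only: assumes NGINX Plus; all names and addresses are placeholders.
resolver 10.0.0.2 valid=10s;                  # assumed internal DNS server

upstream backend_services {
    zone backend_services 64k;                # shared memory zone, needed by the API and by 'resolve'
    server backend.example.com:80 resolve;    # re-resolve the name as DNS records change
}

server {
    listen 8080;

    location /api {
        api write=on;       # expose the REST API; write=on permits changes to upstream servers
        allow 127.0.0.1;    # only the local host may call the API
        deny all;
    }

    location / {
        proxy_pass http://backend_services;
    }
}
```

With a configuration along these lines, metrics and upstream state would be read from (and, with `write=on`, changed through) versioned paths under `/api`. Restricting that location to trusted addresses matters, since it can alter the running configuration.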

Achieving Consolidation Without Compromising Performance

Third‑party solutions that are built on open source NGINX do not provide the advantages of the additional NGINX Plus functionality, APIs, and clustering. Furthermore, many solutions rely on third‑party languages (Lua is the most popular) to implement their additional functionality. Lua is a very capable language, but it imposes an unpredictable performance penalty on NGINX, one that depends heavily on the complexity of the third‑party extensions.

NGINX Plus does not compromise the high performance or lightweight nature of open source NGINX. The core NGINX Plus binary is just 1.6 MB in size, and capable of handling over 1 million requests per second, 70 Gbps throughput, and 65,000 new SSL transactions per second on industry‑standard hardware with a realistic, production‑ready configuration. New functionality is implemented by the core NGINX team as in‑process modules, exactly as in open source NGINX, so you can be assured of the performance, stability, and quality of each feature.

The API Gateway Use Case

DevOps teams can use NGINX Plus to meet a number of use cases, API gateway being a prominent example. The following table shows how NGINX Plus as an API gateway meets the many requirements for managing API requests from external sources and routing them to internal services.

| | API gateway requirement | NGINX Plus solution |
|---|---|---|
| Core protocols | REST (HTTPS), gRPC | HTTP, HTTPS, HTTP/2, gRPC |
| Additional protocols | TCP‑borne message queue | WebSocket, TCP, UDP |
| Routing requests | Requests are routed based on service (host header), API method (HTTP URL) and parameters | Very flexible request routing based on Host header, URL, and other request headers |
| Managing API life cycle | Rewriting legacy API requests, rejecting calls to deprecated APIs | Comprehensive request rewriting and rich decision engine to route or respond directly to requests |
| Protecting vulnerable applications | Rate limiting by APIs and methods | Rate limiting by multiple criteria, including source address, request parameters; connection limiting to backend services |
| Offloading authentication | Examining authentication tokens in incoming requests | Support for multiple authentication methods, including JWT, API keys, external auth services, and OpenID Connect |
| Managing changing application topology | Implementing various APIs to accept configuration changes and support blue‑green workflows | APIs and service‑discovery integrations to locate endpoints; APIs may be orchestrated for blue‑green and other use cases |
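To make a few rows of the table concrete, here is a minimal sketch (for the `http` context) of routing by Host header and URL, per‑client rate limiting, connection limiting to a backend, and rejecting calls to a deprecated API. It uses only directives available in open source NGINX. The hostname, paths, upstream names, addresses, certificate paths, and limits are hypothetical values chosen for illustration.

```nginx
# Sketch only: hostnames, paths, addresses, and limits are placeholders.
limit_req_zone $binary_remote_addr zone=per_client:10m rate=10r/s;   # track request rate per source address

upstream inventory_service {
    server 10.0.0.11:8080 max_conns=100;    # cap concurrent connections to this backend
}

upstream pricing_service {
    server 10.0.0.12:8080;
}

server {
    listen 443 ssl;
    server_name api.example.com;            # route by Host header
    ssl_certificate     /etc/nginx/ssl/api.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/api.example.com.key;

    limit_req_status 429;                   # answer rate-limited requests with 429 instead of the default 503

    location /api/inventory/ {              # route by URL prefix
        limit_req zone=per_client burst=20 nodelay;
        proxy_pass http://inventory_service;
    }

    location /api/pricing/ {
        limit_req zone=per_client;
        proxy_pass http://pricing_service;
    }

    location /api/v0/ {                     # deprecated API version: respond directly
        return 410;
    }
}
```

Keying the limit on `$binary_remote_addr` is only one option. The key in `limit_req_zone` can be any variable, so the same pattern can limit by request header or parameter instead.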

Being a consolidated solution, NGINX Plus can also manage web traffic with ease, translating between protocols (HTTP/2 and HTTP, FastCGI, uwsgi) and providing consistent configuration and monitoring interfaces. NGINX Plus is sufficiently lightweight to be deployed in container environments or as a sidecar with minimal resource footprint.
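The protocol translation mentioned above might look something like the following sketch, in which clients connect over HTTP/2 and each location hands requests to a backend speaking a different protocol. The addresses, socket path, and certificate paths are assumptions made for the example, and the `fastcgi_params` and `uwsgi_params` files are the ones normally shipped with NGINX packages.

```nginx
# Sketch only: addresses, socket paths, and certificate paths are placeholders.
server {
    listen 443 ssl http2;                 # accept HTTP/2 from clients (newer releases prefer a separate 'http2 on;')
    server_name www.example.com;
    ssl_certificate     /etc/nginx/ssl/www.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/www.example.com.key;

    location / {
        proxy_http_version 1.1;           # HTTP/1.1 toward the proxied server
        proxy_pass http://127.0.0.1:8080;
    }

    location ~ \.php$ {
        include fastcgi_params;           # standard FastCGI variables
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass unix:/run/php/php-fpm.sock;
    }

    location /app/ {
        include uwsgi_params;             # standard uwsgi variables
        uwsgi_pass 127.0.0.1:3031;
    }
}
```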

Enhancing API Gateway Solutions with NGINX Plus

Open source NGINX was originally developed as a gateway for HTTP (web) traffic, and the primitives by which it is configured are expressed in terms of HTTP requests. These are similar, but not identical, to the way you expect to configure an API gateway, so a DevOps engineer needs to understand how to map API definitions to HTTP requests.

For simple APIs, this is straightforward to do. For more complex situations, the blogs in our recent three‑part series describe how to map a complex API to a service in NGINX Plus, and then handle many common API gateway tasks:

- Rewriting API requests

- Correctly responding to errors

- Managing API keys for access control

- Examining JSON Web Tokens (JWTs) for user authentication

- Rate limiting

- Enforcing specific request methods

- Applying fine‑grained access control

- Controlling request sizes

- Validating request bodies

- Routing, authenticating, checking, and protecting gRPC traffic
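Several of the tasks in this list can be sketched in one short `http`‑context fragment. This is an illustrative simplification, not the configuration from the blog series. The API keys, client names, paths, upstream addresses, gRPC service name, and key file location are all hypothetical, and the `auth_jwt` directives assume NGINX Plus. Request body validation is left out because it needs scripting beyond plain configuration.

```nginx
# Sketch only: keys, names, paths, and addresses are placeholders.
map $http_apikey $api_client_name {         # look up the caller by its 'apikey' request header
    default              "";
    "REPLACE_WITH_KEY_1" "client_one";
    "REPLACE_WITH_KEY_2" "client_two";
}

upstream warehouse_api { server 10.0.0.21:8080; }
upstream account_api   { server 10.0.0.22:8080; }
upstream grpc_backend  { server 10.0.0.31:50051; }

server {
    listen 443 ssl http2;                   # HTTP/2 is needed for gRPC clients
    server_name api.example.com;
    ssl_certificate     /etc/nginx/ssl/api.example.com.crt;
    ssl_certificate_key /etc/nginx/ssl/api.example.com.key;

    default_type application/json;          # error bodies below are JSON

    # Respond to errors in the API's own format rather than with HTML pages
    error_page 401 = @401;
    location @401 { return 401 '{"status":401,"message":"Unauthorized"}'; }
    error_page 403 = @403;
    location @403 { return 403 '{"status":403,"message":"Forbidden"}'; }

    location /warehouse/ {                  # rewrite a legacy path to the current one
        rewrite ^/warehouse/(.*)$ /api/warehouse/$1 last;
    }

    location /api/warehouse/ {
        limit_except GET { deny all; }      # read-only API: other methods get 403
        client_max_body_size 16k;           # reject oversized request bodies
        if ($http_apikey = "")      { return 401; }   # no key supplied
        if ($api_client_name = "")  { return 403; }   # key not recognized
        proxy_pass http://warehouse_api;
    }

    location /api/account/ {
        auth_jwt "account API";                         # NGINX Plus: validate the JWT in the request
        auth_jwt_key_file /etc/nginx/api_secret.jwk;    # assumed location of the signing keys
        proxy_pass http://account_api;
    }

    location /routeguide.RouteGuide/ {      # gRPC calls are routed by /package.Service/ prefix
        grpc_pass grpc://grpc_backend;
    }
}
```

Keeping the key lookup in a `map` block separates the list of clients from the routing logic, so keys can be added or revoked without touching the `location` blocks.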

Conclusion

Hardware ADCs have been sidelined as DevOps teams rely heavily on software components to deliver and operate their applications. Open source NGINX is recognized as a key part of most application delivery stacks.

The single‑purpose or point‑solution functionality of many software components can result in multi‑tier application delivery stacks. Although built from ‘best‑of‑breed’ components, these stacks can be complex to operate and difficult to troubleshoot, and their performance and scalability can be inconsistent. NGINX Plus converges this functionality onto a single software platform.

NGINX Plus can be applied to a wide range of problems, such as API delivery, using proven and familiar technology that does not compromise performance or stability.

Try NGINX Plus as an API gateway for yourself – start a free 30-day trial today or contact us to discuss your use case.

The post Consolidating Your API Gateway and Load Balancer with NGINX appeared first on NGINX.
