Do I Need a Service Mesh?

“Service mesh” is a red‑hot topic. It seems that every major container‑related conference last year included a “service mesh” track, and industry influencers everywhere are talking about the revolutionary benefits of this technology.

However, as of early 2019, service mesh technology is still immature. Istio, the leading implementation, is not yet ready for general enterprise deployment, and only a handful of successful production deployments are running. Other service mesh implementations exist but are not getting the massive mindshare that industry pundits say service mesh deserves.

How do we reconcile this mismatch? On the one hand, we hear “you need a service mesh”, and on the other hand, organizations have been running applications successfully on container platforms for years without one.

Getting Started with Kubernetes

Service mesh is a milestone on your journey, but it’s not the starting point.

Kubernetes is a very capable platform that has been well proven in production deployments of container applications. It provides a rich networking layer that brings together service discovery, load balancing, health checks, and access control to support complex distributed applications.

These capabilities are more than enough for simple applications and for well‑understood, legacy applications that have been containerized. They allow you to deploy applications with confidence, scale them as needed, route around unexpected failures, and implement simple access control.
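As a rough illustration of "route around unexpected failures": Kubernetes takes Pods that fail their readiness probes out of a Service's endpoints, so traffic is spread only across healthy instances. The Python sketch below models that idea with health-aware round-robin selection. It is a simplified model, not how kube-proxy actually chooses backends, and the endpoint addresses are made up.

```python
import itertools
from dataclasses import dataclass


@dataclass
class Endpoint:
    address: str          # hypothetical Pod IP:port
    healthy: bool = True  # stands in for the result of a readiness probe


class HealthAwareRoundRobin:
    """Rotate through endpoints, skipping those whose health check fails."""

    def __init__(self, endpoints):
        self.endpoints = list(endpoints)
        self._ring = itertools.cycle(self.endpoints)

    def pick(self):
        # Visit each endpoint at most once per call, so an all-unhealthy
        # pool raises an error instead of looping forever.
        for _ in range(len(self.endpoints)):
            endpoint = next(self._ring)
            if endpoint.healthy:
                return endpoint.address
        raise RuntimeError("no healthy endpoints")
```

Marking the middle endpoint of a three-endpoint pool unhealthy makes successive `pick()` calls alternate between the other two, with no change to the clients.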

Kubernetes provides an Ingress resource object in its API. This object defines how selected services can be accessed from outside the Kubernetes cluster, and an Ingress controller implements those policies. NGINX is the load balancer of choice in most implementations, and we provide a high‑performance, supported, and production‑ready implementation for both NGINX Open Source and NGINX Plus.
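To make "defines how selected services can be accessed" concrete, here is a toy model of the routing decision an Ingress controller makes for host/path rules. It loosely follows the Ingress API's `Prefix` path type (matching is done path element by element, and the longest matching path wins). Real controllers, NGINX included, translate these rules into proxy configuration rather than running code like this, and the host and Service names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    host: str         # e.g. "shop.example.com" (hypothetical)
    path_prefix: str  # e.g. "/api"
    service: str      # hypothetical backend Service name


def _segments(path):
    # Split on "/" and drop empty elements, so "/api/" and "/api" are equivalent.
    return [s for s in path.split("/") if s]


def route(rules, host, path):
    """Return the Service for the longest element-wise prefix match on this host."""
    request = _segments(path)
    best, best_len = None, -1
    for rule in rules:
        if rule.host != host:
            continue
        prefix = _segments(rule.path_prefix)
        # Element-wise match: "/api" matches "/api/v1" but not "/apiv1".
        if request[: len(prefix)] == prefix and len(prefix) > best_len:
            best, best_len = rule.service, len(prefix)
    return best  # None means no rule matched (a default backend would apply)
```

With rules for `/` and `/api` on the same host, `/api/v1/items` goes to the API Service while `/apiv1` falls through to the `/` rule.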

For many production applications, vanilla Kubernetes and an Ingress controller provide all the needed functionality and there’s no need to progress to anything more sophisticated.

Next Steps for More Complex Applications

Add security, monitoring, and traffic management to improve control and visibility.

When operations teams manage applications in production, they sometimes need deeper control and visibility. Sophisticated applications might exhibit complex network behavior, and frequent changes in production can introduce more risk to the stability and consistency of the app. It might be necessary to encrypt traffic between the components when running on a shared Kubernetes cluster.

Each requirement can be met using well‑understood techniques:

- To secure traffic between services, you can add mutual TLS to each microservice at deployment or run time.

- To identify performance and reliability issues, each microservice can export Prometheus‑compliant metrics for analysis with tools such as Grafana.

- To debug those issues, you can embed OpenTracing Tracers into each microservice (multiple languages and frameworks are supported).

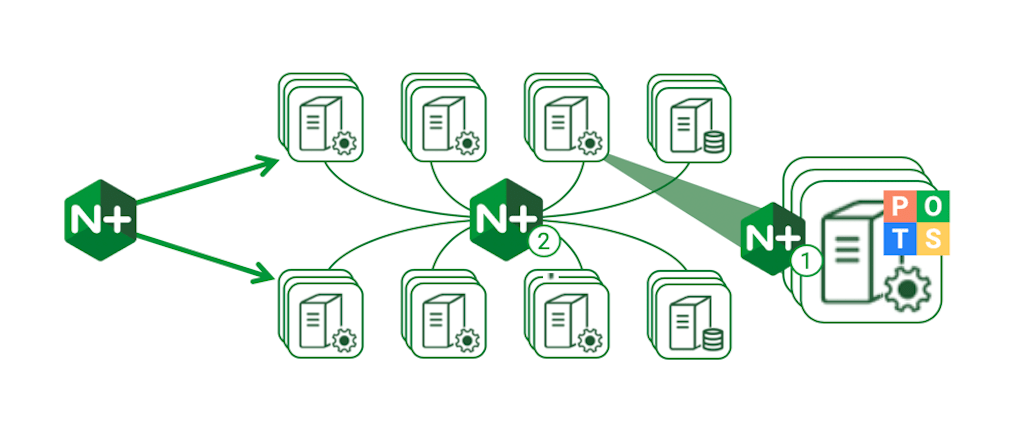

- To implement advanced load‑balancing policies, blue/green and canary deployments, and circuit breakers, you can tactically deploy proxies and load balancers.

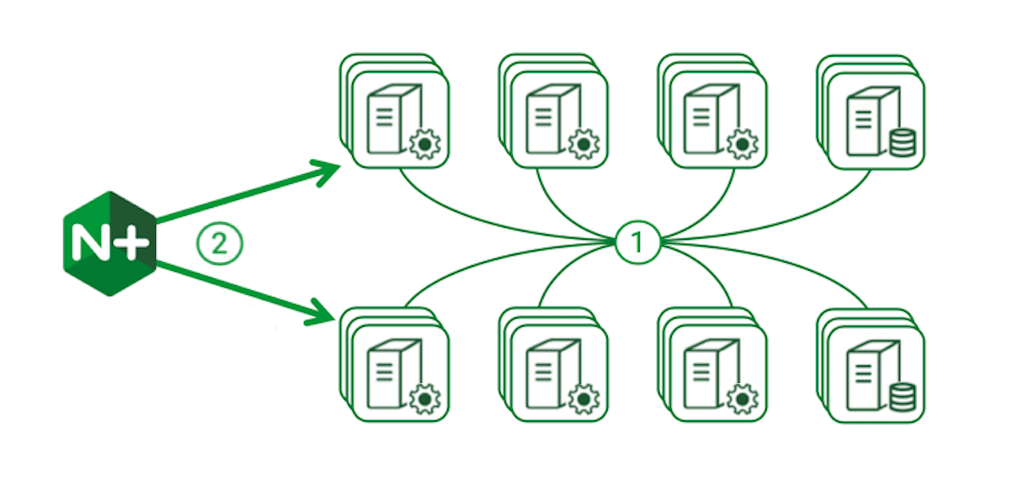
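Of the traffic-management techniques in the last item, the circuit breaker is the one most often misunderstood, so here is a minimal single-threaded Python sketch. It assumes one simple policy chosen for illustration: open the circuit after a fixed number of consecutive failures, fail fast while open, and let a single trial call through once a cooldown has passed. Proxies and libraries that offer circuit breaking implement their own variants with different settings.

```python
import time


class CircuitBreaker:
    """Open after N consecutive failures; allow a trial call after a cooldown."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout  # seconds to stay open before a trial call
        self.clock = clock                  # injectable so tests need not sleep
        self.failures = 0
        self.opened_at = None               # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Open: fail fast instead of piling load onto a struggling service.
                raise RuntimeError("circuit open: failing fast")
            # Cooldown elapsed ("half-open"): let this call through as a trial.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial re-opens the circuit; otherwise open at the threshold.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        # Any success closes the circuit and resets the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Wrapping each call to a critical dependency as `breaker.call(fetch_inventory)` (a hypothetical function) is the per-service change this approach requires.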

Some of these techniques require a small change to each service – for example, burning certificates into containers or adding modules for Prometheus and OpenTracing. NGINX Plus can provide dedicated load balancing for critical services, with service discovery and API‑driven configuration for orchestrating changes. The Router Mesh pattern in the NGINX Microservices Reference Architecture implements a cluster‑wide control point for traffic.

Almost every containerized application running in production today uses techniques like these to improve control and visibility.

Why Then Do I Need a Service Mesh?

If the techniques above are proven in production, what does a service mesh add?

Each step described in the previous section puts a burden on the application developer and operations team to accommodate it. Individually, the burdens are light because the solutions are well understood, but the weight accumulates. Eventually, organizations running large‑scale, complex applications might reach a tipping point where enhancing the application service by service becomes too difficult to scale.

This is the core problem service mesh promises to address. The goal of a service mesh is to deliver the required capabilities in a standardized and transparent fashion, completely invisible to the application.

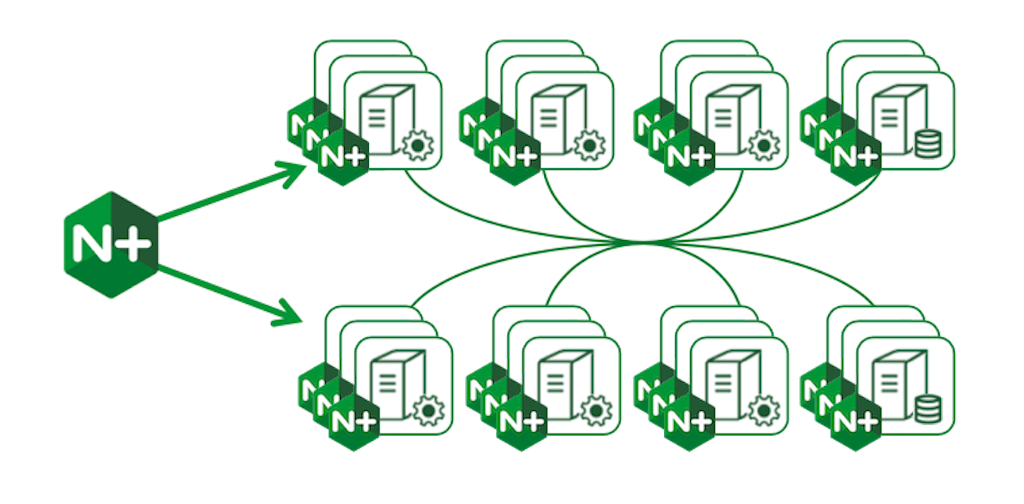

Service mesh technology is still new, with very few production deployments. Early deployments have been built on complex, home‑grown solutions, specific to each adopter’s needs. A more universal approach is emerging, described as the “sidecar proxy” pattern. This approach deploys Layer 7 proxies alongside every single service instance; these proxies capture all network traffic and provide the additional capabilities – mutual TLS, tracing, metrics, traffic control, and so on – in a consistent fashion.
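To make the sidecar idea concrete, here is a deliberately tiny sidecar built with Python's standard library. It listens on its own port, relays GET requests to the application it sits beside, and records per-status metrics without any change to the application code. The upstream address is a hypothetical assumption, and this is only a sketch of where the proxy sits: production sidecars also handle mutual TLS, tracing, retries, and every HTTP method.

```python
import http.server
import urllib.error
import urllib.request


def make_sidecar_handler(upstream_base, metrics):
    """Return a handler class that relays GET requests to the co-located app.

    upstream_base: the app's local address (hypothetical, e.g. "http://127.0.0.1:8080").
    metrics: dict updated for every relayed request, independent of the app's code.
    """

    class SidecarHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            try:
                with urllib.request.urlopen(upstream_base + self.path, timeout=5) as resp:
                    status, body = resp.status, resp.read()
            except urllib.error.HTTPError as err:
                # The app answered with an error status: relay it unchanged.
                status, body = err.code, err.read()
            except OSError:
                # Unreachable or timed out (URLError and socket timeouts are OSErrors).
                status, body = 502, b"upstream unavailable\n"
            # Uniform bookkeeping that the application never sees.
            metrics["requests_total"] = metrics.get("requests_total", 0) + 1
            key = f"responses_{status}"
            metrics[key] = metrics.get(key, 0) + 1
            self.send_response(status)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # silence per-request logging in this sketch

    return SidecarHandler
```

To run it, bind `http.server.HTTPServer(("127.0.0.1", 9000), make_sidecar_handler("http://127.0.0.1:8080", {}))` and call `serve_forever()`; both port numbers are arbitrary. Clients then talk to port 9000, and the application on port 8080 is unaware the proxy exists, which is the transparency the pattern relies on.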

Vendors and open source projects are rushing to make stable, functional, and easy‑to‑operate implementations. 2019 will almost certainly be the “year of the service mesh”, when some implementations of this promising technology become truly production‑ready for general‑purpose applications.

What Should I Do Now?

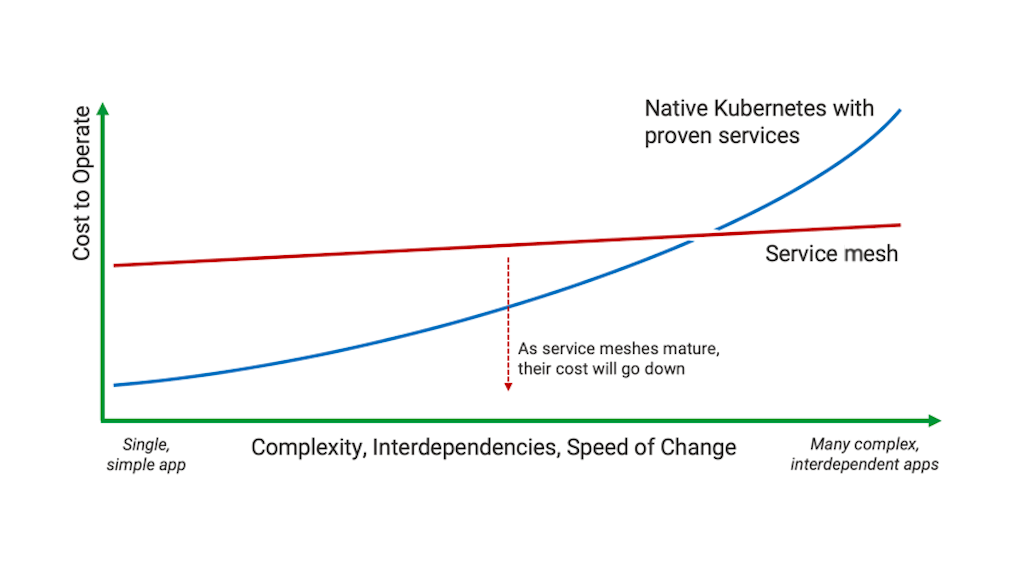

As of early 2019, it’s probably premature to jump forward to one of the early service mesh implementations, unless you have firmly hit the limitations of other solutions and need an immediate, short‑term solution. The immaturity and rapid pace of change in current service mesh implementations make the cost and risk of deploying them high. As the technology matures, the cost and risks will go down, and the tipping point for adopting service mesh will get closer.

Do not let the lack of a stable, mature service mesh delay any initiatives you are considering today, however. As we have seen, Kubernetes and other orchestration platforms provide rich functionality, and adding more sophisticated capabilities can follow well‑trodden, well‑understood paths. Proceed down these paths now, using proven solutions such as ingress routers and internal load balancers. You will know when you reach the tipping point where it’s time to consider bringing a service mesh implementation to bear.

The post Do I Need a Service Mesh? appeared first on NGINX.
