Do You Really Need Different Kinds of API Gateways? (Hint: No!)

API gateways are the bedrock of API infrastructure. API gateways secure and mediate traffic between backend applications that expose their APIs and the consumers of the APIs. For example, API gateways can manage the API traffic generated by calls from a mobile application like Uber to a backend application like Google Maps. API gateway functionality includes authenticating API calls, routing requests to appropriate backends, applying rate limits to prevent overloading your systems, mitigating DDoS attacks, offloading SSL/TLS traffic to improve performance, and handling errors and exceptions.

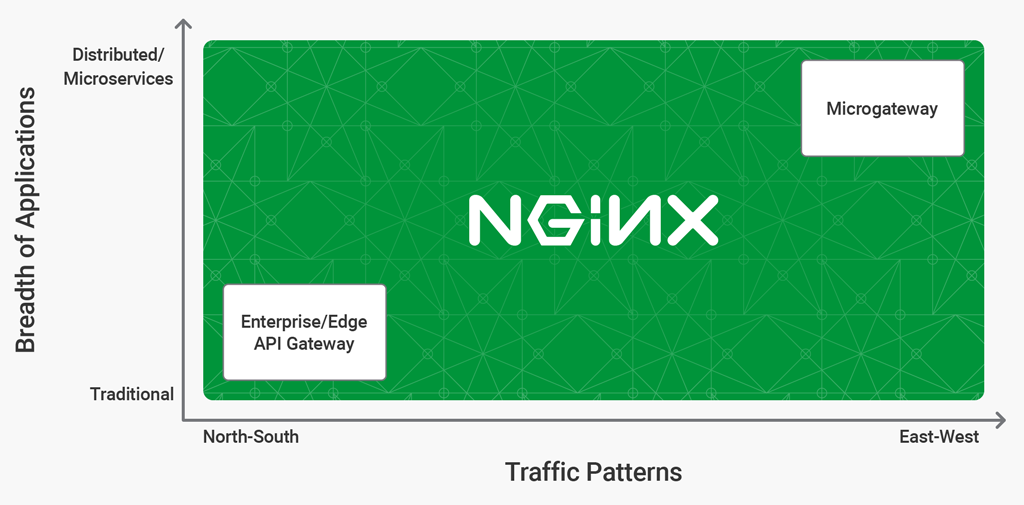

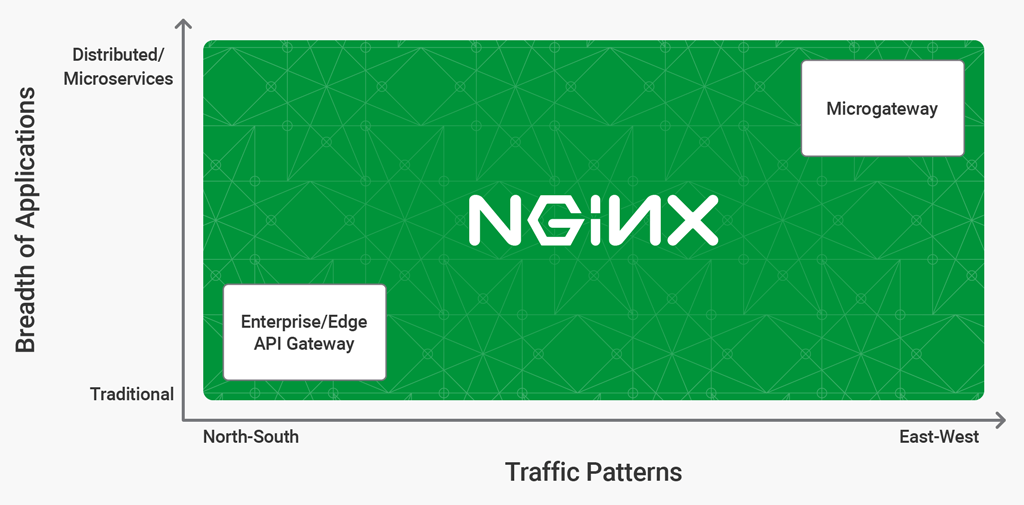
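The core gateway functions listed above (authenticating calls, applying rate limits, routing to a backend, and handling errors) can be pictured as a small request pipeline. The following is a minimal sketch in Python, not how NGINX or any particular product implements them. All names (`Gateway`, `TokenBucket`, the API key table, the route table) are hypothetical and exist only for illustration, and the rate limiter is a simple token bucket chosen for brevity.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` calls, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = capacity
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class Gateway:
    """Hypothetical gateway: authenticate, rate limit, then route."""

    def __init__(self, api_keys, routes, rate=5.0, burst=5, clock=time.monotonic):
        self.api_keys = api_keys  # API key -> consumer name
        self.routes = routes      # path prefix -> backend callable
        self.rate = rate
        self.burst = burst
        self.clock = clock
        self.buckets = {}         # consumer name -> TokenBucket

    def handle(self, path, api_key):
        # 1. Authenticate the caller.
        consumer = self.api_keys.get(api_key)
        if consumer is None:
            return 401, "unauthorized"

        # 2. Apply a per-consumer rate limit.
        bucket = self.buckets.get(consumer)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.burst, self.clock)
            self.buckets[consumer] = bucket
        if not bucket.allow():
            return 429, "rate limit exceeded"

        # 3. Route to the backend with the longest matching path prefix.
        for prefix in sorted(self.routes, key=len, reverse=True):
            if path.startswith(prefix):
                try:
                    return 200, self.routes[prefix](path)
                except Exception:
                    # 4. Turn backend failures into a gateway error.
                    return 502, "bad gateway"
        return 404, "no route"
```

A caller would construct the gateway with its own key and route tables, for example `Gateway({"k1": "mobile-app"}, {"/maps": maps_backend})`, where `maps_backend` is any callable that accepts the request path.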

To generate new sources of revenue, enterprises are developing external APIs that third‑party developers can use to build applications. They are also exposing APIs to simplify business processes. And to support these new digital business models, enterprises need a comprehensive API management solution that helps them define, publish, secure, and analyze APIs, as well as quickly onboard third‑party developers who consume the APIs. In response, many vendors have emerged to offer a one‑stop solution for full API lifecycle management, with an API gateway integrated into the feature‑heavy solution. Such gateways, often referred to as “enterprise” or “edge” API gateways, manage and protect the “north‑south” traffic between the applications that expose APIs and the consumers of those APIs.

But applications evolve. Apps that use a microservices architecture generate a significant amount of API traffic among the microservices, referred to as “east‑west” traffic. API management vendors have responded, but the resulting architectures are overly complex.

This post explores why.

The Rise of Microservices

Microservices are an approach to software architecture, adopted for distributed applications by companies such as Netflix and Uber, in which an application is made up of distinct, smaller “services” that are autonomous and each perform a single function or process. Compared to traditional monolithic apps, microservices apps are:

- More resilient – Failure of one service does not bring down the app as a whole

- Easier to scale – To alleviate bottlenecks, you spin up more instances of the relevant microservices

- More agile – Smaller apps lend themselves better to continuous integration/continuous delivery (CI/CD) software development processes

Since microservices by definition perform a single function, they don’t need the full infrastructure support provided by a server or virtual machine. Most microservices architectures use container‑based infrastructure, which is flexible, portable, and lightweight.

Microgateways Have Emerged to Handle Traffic Among Microservices

To work effectively together, the microservices that make up an application must of course communicate. The usual mechanism is for each microservice to call the APIs exposed by its peer microservices. The volume of this east‑west traffic among the component microservices is often substantially higher than the volume of north‑south traffic between the application and its external clients. Many API management vendors have introduced a new kind of gateway, the microgateway, dedicated to handling east‑west traffic.

As defined in a recent report from Gartner, a microgateway should:

- Be containerized or container‑ready

- Have no limit on number of instances

- Incur no (or very low) license fees for additional instances

- Provide low latency

- Have a small footprint

- Be amenable to centralized and automatic administration

Gartner, Selecting the Right API Gateway to Protect Your APIs and Microservices, 28 June 2018

The primary reason that legacy API management vendors have introduced microgateways as a new type of gateway is that their first‑generation solutions were designed before the emergence of microservices and containers. As products of their time, these solutions share characteristics of the monolithic applications they were designed to work with: all processing functionality is included in a single binary, and configuration information is typically stored in a database (either bundled into the binary or from a separate database vendor).

These solutions usually have a large footprint, because the vendors have included a large number of features to maximize the solution’s appeal to customers with a wide range of use cases. The API gateway (data plane) is tightly coupled with the API management software (control plane), with the result that a failure in the control plane also halts API traffic processing.

Using a database to store configuration that controls how traffic is processed (for example, rate limits) adds latency because the API gateway must access the database every time it receives an API call. As with the control plane, if the database is unavailable, the API gateway can’t function.

Optimized to handle north‑south traffic at the edge of the data center, the API gateway in a first‑generation solution is inefficient for the large volume of east‑west traffic in distributed microservices environments: it has a large footprint, can’t be containerized, and the constant communication with the database and control plane adds latency. For these vendors, bolting on a microgateway is the only way to manage significant east‑west network traffic, where low latency is critical.

The upside of using a first‑generation solution that includes a microgateway is that you can maintain your relationship with the API management vendor you previously chose for north‑south traffic, and use its solution to handle east‑west traffic as well. The downside is that the microgateway is actually a separate tool – yet another moving part that brings its own additional cost, complexity, scalability, and reliability issues.

What if you could eliminate the need for two different types of API gateways? Now you can.

One API Gateway for All of Your Applications

NGINX eliminates the need for separate types of API gateways. Our gateways, NGINX and NGINX Plus, are optimized for both north‑south and east‑west traffic. As such, our API management solution does not distinguish between the edge gateway and microgateway functions. There’s one gateway for all of your applications.

API gateways for traditional applications manage north‑south traffic between the API consumers outside your network and your applications which reside in your network. Microgateways manage east‑west traffic across all your services that reside within your network. NGINX Plus can be deployed as an API gateway to manage both kinds of traffic.

How do we achieve this? By isolating API runtime traffic processed by NGINX Plus as the API gateway (the data plane) from API management traffic processed by NGINX Controller (the control plane). NGINX Plus stores its traffic‑handling configuration locally, so it can process API calls whether it is connected to NGINX Controller or not. This maximizes performance by reducing the average response time to serve an API call and minimizes the footprint and complexity of the API gateway. There’s no need for scripting extensions or reliance on databases for configuration of runtime functionality, which can introduce needless complexity and additional points of failure. NGINX Controller configures NGINX Plus as an API gateway using best practices learned over years.
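The decoupling described here, where the data plane keeps a local copy of its configuration and serves traffic whether or not the control plane is reachable, can be sketched in a few lines of Python. This is an illustration of the general pattern only and makes no claim about how NGINX Plus or NGINX Controller are implemented. The class names, the `fetch_config` method, and the configuration layout are all hypothetical.

```python
import copy


class ControlPlane:
    """Hypothetical management service that may become unreachable."""

    def __init__(self):
        self.available = True
        self.config = {"routes": {"/orders": "orders-svc"}}

    def fetch_config(self):
        if not self.available:
            raise ConnectionError("control plane unreachable")
        return copy.deepcopy(self.config)


class DataPlane:
    """Hypothetical gateway that routes using only locally stored config."""

    def __init__(self, control_plane):
        self.control_plane = control_plane
        self.local_config = control_plane.fetch_config()  # initial configuration

    def sync(self):
        """Try to refresh the local copy; keep the last good one on failure."""
        try:
            self.local_config = self.control_plane.fetch_config()
            return True
        except ConnectionError:
            return False

    def route(self, path):
        # The request path reads local state only: no network or database call.
        for prefix, backend in self.local_config["routes"].items():
            if path.startswith(prefix):
                return backend
        return None
```

In this sketch, a failed `sync()` leaves `route()` unaffected, which is the property the paragraph above describes: a control plane outage delays configuration changes but does not stop API traffic.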

Both NGINX Controller and NGINX Plus are flexible, portable, and can be deployed on any environment – bare metal, VMs, containers, and public, private, and hybrid clouds. This versatility means you can deploy NGINX Plus in traditional environments to manage traffic for monolithic applications, as well as in containers to manage traffic among microservices in distributed applications.

The NGINX Controller API Management Module is slated for launch in January 2019.

Why NGINX Plus Is the Best API Gateway for Both Traditional Apps and Microservices

NGINX provides established solutions for both API and microservices use cases. As such, our solution is uniquely positioned to handle the convergence of these two environments:

- NGINX and NGINX Plus are already the industry’s most pervasive API gateway. According to our 2018 user survey, 40% of NGINX and NGINX Plus users deploy them as an API gateway. NGINX and NGINX Plus are also the underlying gateway used in popular API management solutions like Axway, IBM DataPower, Kong, MuleSoft, and Red Hat 3scale. NGINX Plus offers robust functionality such as caching to improve performance, request routing, rate limiting, and API authentication using JWTs. NGINX Controller enables Infrastructure & Operations teams to define and publish APIs to NGINX Plus API gateways, as well as to secure, monitor, troubleshoot, and analyze APIs.

- NGINX is also the pioneer in developing microservices reference architectures. We have developed three different reference architectures ranging from simple to sophisticated depending on where you are in your microservices journey. NGINX is the de facto standard in microservices, with more than 250 users running more than 3 million NGINX instances in production microservices environments.

- NGINX and NGINX Plus are designed for containers: roughly 2 MB in size, they run on supported Linux servers (bare metal, cloud, or virtual), or directly in Docker containers orchestrated by Kubernetes and other platforms. NGINX is the most widely pulled and starred application on Docker Hub, with more than 10 million downloads so far.

Get Started with NGINX to Simplify Your API Management

NGINX’s API management solution – with NGINX Plus as the data plane and NGINX Controller as the control plane – is well suited for the broadest range of application types, compute infrastructures, and traffic patterns. Using only one kind of API gateway reduces complexity, simplifies API infrastructure deployment and maintenance, and results in both cost and time savings. NGINX accelerates your journey to microservices with an approach that ensures high performance and reliability. As you modernize your applications using microservices, there’s no need to worry about deploying and maintaining yet another API infrastructure. At the same time, you can be confident NGINX offers powerful capabilities to manage API traffic for your existing applications.

Have you deployed different kinds of API gateways? Is your API infrastructure becoming unwieldy? Or are you just using one kind of API gateway? We’d love to hear from you in the comments below. In the meantime, get started with a free 30‑day trial of NGINX Plus, which can be used equally well as a traditional enterprise gateway and a microgateway.

The post Do You Really Need Different Kinds of API Gateways? (Hint: No!) appeared first on NGINX.
