High Availability for Microsoft Active Directory Federation Services with NGINX Plus

Microsoft Active Directory Federation Services (AD FS) enables organizations that host applications on Windows Server to extend single sign‑on (SSO) access to employees of trusted business partners across an extranet. The sharing of identity information between the business partners is called a federation.

In practice, using AD FS means that employees of companies in a federation only ever have to log in to their local environment. When they access a web application belonging to a trusted business partner, the local AD FS server passes their identity information to the partner’s AD FS server in the form of a security token. The token consists of multiple claims, which are individual attributes of the employee (username, business role, employee ID, and so on) stored in the local Active Directory. The partner’s AD FS server maps the claims in the token onto claims understood by the partner’s applications, and then determines whether the employee is authorized for the requested kind of access.

For AD FS 2.0 and later, you can enable high availability (HA), adding resiliency and scale to the authentication services for your applications. In an AD FS HA cluster, also known as an AD FS farm, multiple AD FS servers are deployed within a single data center or distributed across data centers. A cluster configured for all‑active HA needs a load balancer to distribute traffic evenly across the AD FS servers. In an active‑passive HA deployment, the load balancer can provide failover to the backup AD FS server when the master fails.

This post explains how to configure NGINX Plus to provide HA in environments running AD FS 3.0 and AD FS 4.0, which are natively supported in Windows Server 2012 R2 and Windows Server 2016 respectively.

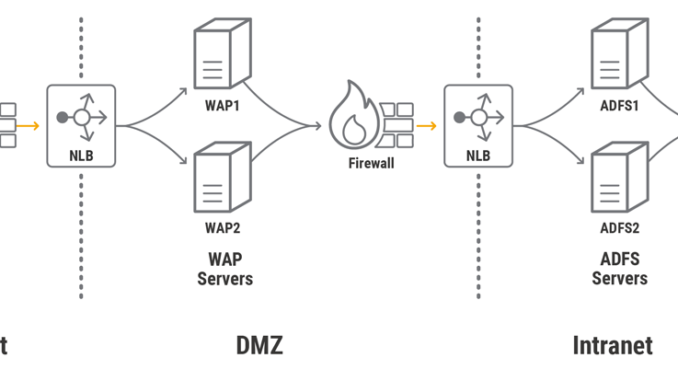

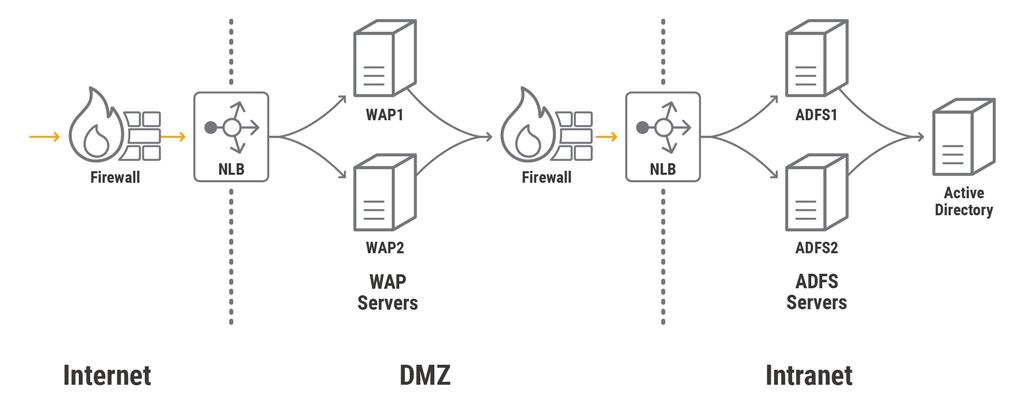

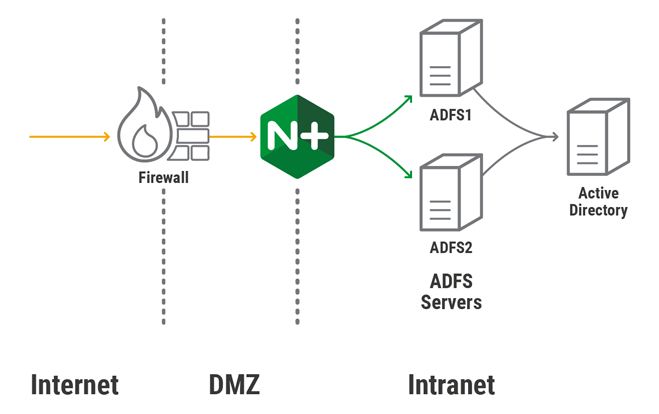

Standard HA Topology for AD FS

This post uses a standard AD FS farm topology for purposes of illustration. It is not intended as a recommendation or to cover all possible deployment scenarios. For on‑premises deployments, a standard farm consists of one or more AD FS servers on the corporate intranet fronted by one or more Web Application Proxy (WAP) servers on a DMZ network. The WAP servers act as reverse proxies which allow external users to access the web applications hosted on the corporate intranet.

However, WAP does not have a built‑in way to configure a cluster of servers or support HA, so a load balancer must be deployed in front of the WAP servers. A load balancer is also placed at the border between the DMZ and the intranet, to enable HA for AD FS. In a standard AD FS farm, the Network Load Balancing (NLB) feature of Windows Server 2012 and 2016 acts as the load balancer. Finally, firewalls are typically implemented in front of the external IP address of the load balancer and between network zones.

Using NGINX Plus in the Standard HA Topology

As noted, NLB can perform load balancing for an AD FS farm. However, its feature set is quite basic (just basic health checks and limited monitoring capabilities). NGINX Plus has many features critical for HA in production AD FS environments and yet is lightweight.

In the deployment of the standard topology described here, NGINX Plus replaces NLB to load balance traffic for all WAP and AD FS farms. Note, however, that we do not have NGINX Plus terminate SSL connections for the AD FS servers, because correct AD FS operation requires it to see the actual SSL certificate from the WAP server (for details, see this Microsoft TechNet article).

We recommend that in production environments you also implement HA for NGINX Plus itself, but don’t show that here; for instructions, see High Availability Support for NGINX Plus in On‑Premises Deployments.

Configuring NGINX Plus to Load Balance AD FS Servers

The NGINX Plus configuration for load balancing AD FS servers is straightforward. As mentioned just above, an AD FS server must see the actual SSL certificate from a WAP server, so we configure the NGINX Plus instance on the DMZ‑intranet border to pass SSL‑encrypted traffic to the AD FS servers without terminating or otherwise processing it.

In addition to the mandatory directives, we include these directives in the configuration:

- zone allocates an area in shared memory where all NGINX Plus worker processes can access configuration and run‑time state information about the servers in the upstream group.
- hash establishes session persistence between clients and AD FS servers, based on the source IP address (the client’s). This is necessary even though clients establish a single TCP connection with an AD FS server, because under certain conditions some applications can suffer multiple login redirections if session persistence is not enabled.
- status_zone means the NGINX Plus API collects metrics for this server, which can be displayed on the built‑in live activity monitoring dashboard (the API and dashboard are configured separately).

    stream {
        upstream adfs_ssl {
            zone adfs_ssl 64k;
            server 10.11.0.5:443; # AD FS server 1
            server 10.11.0.6:443; # AD FS server 2
            hash $remote_addr;
        }

        server {
            status_zone adfs_ssl;
            listen 10.0.5.15:443;
            proxy_pass adfs_ssl;
        }
    }

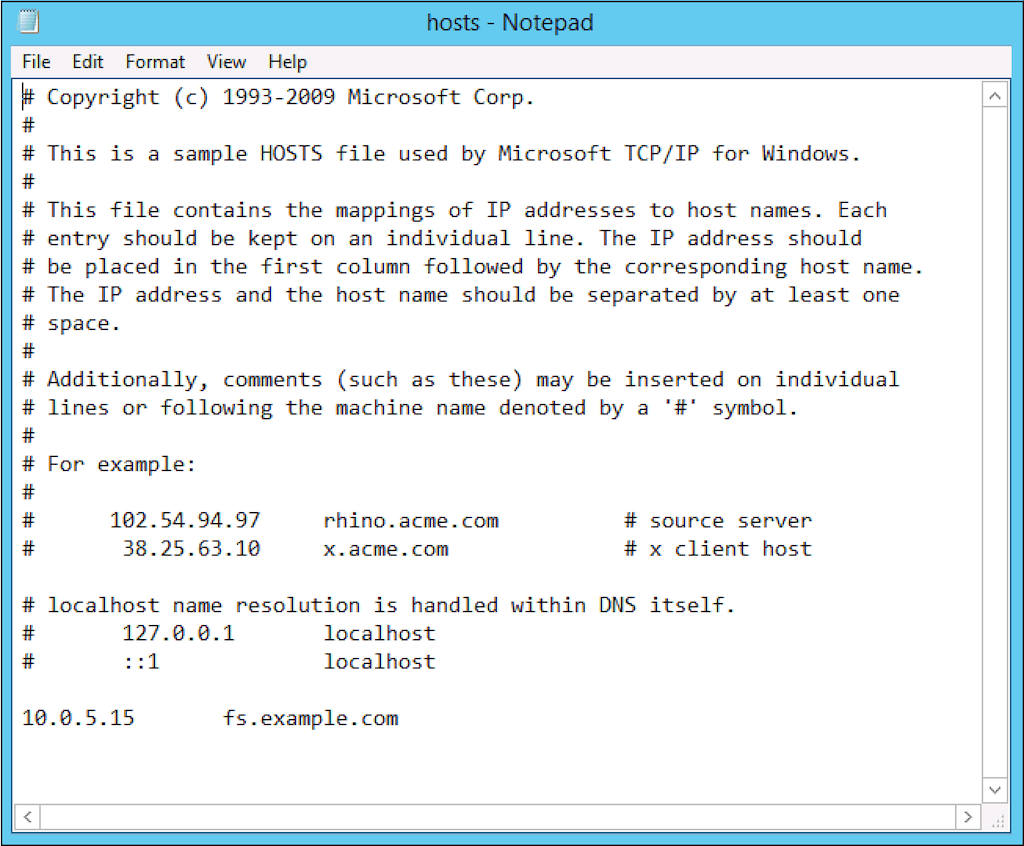
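The configuration for the AD FS servers does not include active health checks. As a hedged sketch of one option this post does not cover, the same virtual server could add the NGINX Plus health_check directive for the stream context. The interval, fails, and passes values are illustrative assumptions rather than tuned recommendations. Because the traffic is passed through still encrypted, the check here only verifies that a TCP connection to port 443 on each AD FS server can be established.

```nginx
stream {
    upstream adfs_ssl {
        zone adfs_ssl 64k;        # shared memory zone, needed for health checks
        server 10.11.0.5:443;     # AD FS server 1
        server 10.11.0.6:443;     # AD FS server 2
        hash $remote_addr;
    }

    server {
        status_zone adfs_ssl;
        listen 10.0.5.15:443;
        proxy_pass adfs_ssl;

        # Assumed values: probe every 5 seconds, consider a server unhealthy
        # after 2 failed probes and healthy again after 2 successful probes.
        health_check interval=5 fails=2 passes=2;
    }
}
```

A TCP connect check confirms only that the port is reachable, not that the AD FS service behind it is answering sign‑in requests correctly, so treat it as a baseline.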

For traffic from the WAP servers to flow through NGINX Plus to the AD FS servers, we also need to map the federation service name (fs.example.com in our example) to the IP address that NGINX Plus is listening on. For production deployments, add a DNS Host A record in the DMZ. For test deployments, it’s sufficient to create an entry in the hosts file on each WAP server; that’s what we’re doing here, binding fs.example.com to 10.0.5.15 in a hosts file:

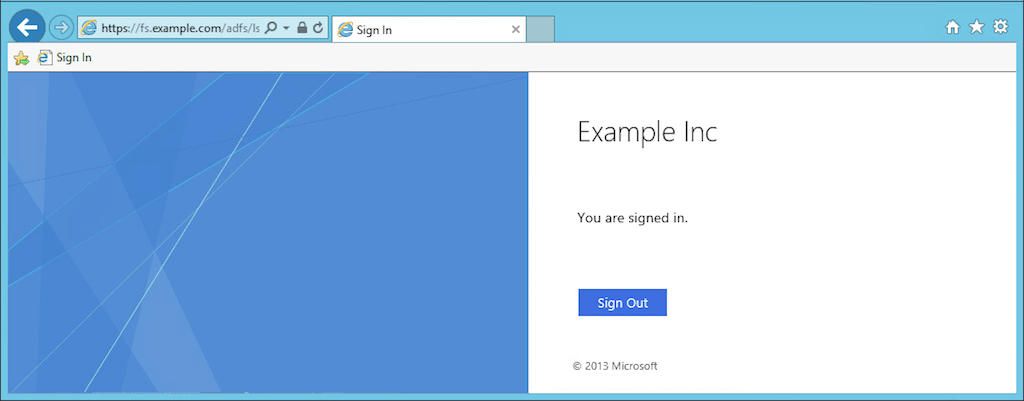

To test that traffic from the WAP servers reaches the AD FS servers, we run the ipconfig /flushdns command to flush the DNS cache, and then in our browser log in to AD FS on the SSO page, https://fs.example.com/adfs/ls/idpinitiatedsignon.htm.

Configuring NGINX Plus to Load Balance WAP Servers

We now configure NGINX Plus to load balance HTTPS connections from external clients to the WAP servers. Best practice dictates that traffic is still SSL‑encrypted when it reaches the AD FS servers, so we use one of two approaches to configure NGINX Plus to pass HTTPS traffic to the WAP servers: SSL pass‑through or SSL re‑encryption.

Configuring SSL Pass‑Through

The easier configuration is to have NGINX Plus forward SSL‑encrypted TCP traffic unchanged to the WAP servers. For this we configure a virtual server in the stream context similar to the previous one for load balancing the AD FS servers.

Here NGINX Plus listens for external traffic on a different unique IP address, 10.0.5.100. For production environments, the external FQDN of the published application must point to this address in the form of a DNS Host A record in the public zone. For testing, an entry in your client machine’s hosts file suffices.

Note: If you configure additional HTTPS services in the stream context that listen on the same IP address as this server, you must route traffic by Server Name Indication (SNI), using the ssl_preread directive with a map to select the correct upstream for each hostname. This is outside the scope of this post, but the NGINX reference documentation includes examples.
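For orientation only, the approach named in the note might look roughly like the following sketch. It assumes a second HTTPS service sharing the 10.0.5.100 address; the hostname app.example.com, the upstream name other_https, and the address 10.11.0.20 are hypothetical placeholders that do not appear elsewhere in this post. The map selects an upstream group from the $ssl_preread_server_name variable, which ssl_preread fills in from the Client Hello without terminating the SSL connection.

```nginx
stream {
    # Choose an upstream group based on the SNI hostname sent by the client.
    map $ssl_preread_server_name $sni_upstream {
        fs.example.com   wap;
        app.example.com  other_https;   # hypothetical second service
        default          wap;
    }

    upstream wap {
        zone wap 64k;
        server 10.11.0.5:443;    # WAP server 1
        server 10.11.0.6:443;    # WAP server 2
    }

    upstream other_https {
        zone other_https 64k;
        server 10.11.0.20:443;   # hypothetical backend for the second service
    }

    server {
        listen 10.0.5.100:443;
        ssl_preread on;             # inspect the Client Hello, do not decrypt
        proxy_pass $sni_upstream;   # forward the still-encrypted connection
    }
}
```

The configuration that follows assumes 10.0.5.100:443 serves only the WAP servers, so it does not need ssl_preread.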

    stream {
        upstream wap {
            zone wap 64k;
            server 10.11.0.5:443; # WAP server 1
            server 10.11.0.6:443; # WAP server 2
            least_time connect;
        }

        server {
            status_zone wap_adfs;
            listen 10.0.5.100:443;
            proxy_pass wap;
        }
    }

Configuring HTTPS Re‑Encryption

As an alternative to SSL pass‑through, we can take advantage of NGINX Plus’ full Layer 7 capabilities by configuring a virtual server in the http context to accept HTTPS traffic. NGINX Plus terminates the HTTPS connections from clients and creates new HTTPS connections to the WAP servers.

The certificate file, example.com.crt, contains the external FQDN of the published application in the Common Name (CN) or Subject Alternative Name (SAN) property; in this example the FQDN is fs.example.com. The file example.com.key holds the corresponding private key.

The proxy_ssl_server_name and proxy_ssl_name directives enable the required Server Name Indication (SNI) extension and specify the remote hostname sent in the SSL Client Hello. The hostname must match the external FQDN of the published application, in this example fs.example.com.

We use proxy_set_header directives to pass relevant information to the WAP servers, and also so we can capture it in the logs:

- The X-Real-IP header contains the source (client’s) IP address as captured in the $remote_addr variable.
- The X-Forwarded-For header conveys that header from the client request, with the client’s IP address appended to it (or just that address if the client request doesn’t have the header).
- The x-ms-proxy header indicates the user was routed through a proxy server, and identifies the NGINX Plus instance as that server.

In addition to the directives shown here, NGINX and NGINX Plus provide a number of features that can improve the performance and security of SSL/TLS. For more information, see our blog.
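As one hedged illustration of such features, the sketch below restricts the protocol versions offered to clients and enables a shared session cache so that returning clients can resume sessions instead of performing a full handshake. The protocol list, the cache name SSL, and the sizes and timeouts are assumptions chosen for the example, not values taken from this post.

```nginx
http {
    # Offer only newer protocol versions. TLSv1.3 needs an NGINX build
    # linked against OpenSSL 1.1.1 or later.
    ssl_protocols TLSv1.2 TLSv1.3;

    # Share cached session parameters across all worker processes.
    # The zone name and the 10m size are illustrative.
    ssl_session_cache shared:SSL:10m;

    # How long a client may reuse cached session parameters.
    ssl_session_timeout 10m;
}
```

These settings are optional and are not part of the re‑encryption configuration that follows.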

    http {
        upstream wap {
            zone wap 64k;
            server 10.0.5.11:443;
            server 10.0.5.10:443;
            least_time header;
        }

        server {
            listen 10.0.5.100:443 ssl;
            status_zone fs.example.com;
            server_name fs.example.com;
            ssl_certificate /etc/ssl/example.com/example.com.crt;
            ssl_certificate_key /etc/ssl/example.com/example.com.key;

            location / {
                proxy_pass https://wap; # 'https' enables reencrypt
                proxy_ssl_server_name on;
                proxy_ssl_name $host;
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header x-ms-proxy $server_name;
            }
        }
    }

Configuring NGINX Plus in a Topology Without WAP Servers

When enabling external clients to access your AD FS servers, it’s best practice to terminate the external traffic at the border between the DMZ and the corporate intranet and also to identify external authentication attempts by inserting the x-ms-proxy header. WAP servers perform both of these functions, and so does NGINX Plus as configured in the previous section.

WAP servers are not required for some use cases – for example, when you don’t use advanced claim rules such as IP network and trust levels. In such cases you can eliminate WAP servers and place NGINX Plus at the border between the DMZ and the intranet to authenticate requests to internal AD FS servers. This reduces your hardware, software, and operational costs.

This configuration replaces the ones for the standard HA topology. It is almost identical to the HTTPS re‑encryption configuration for load balancing WAP servers, but here NGINX Plus load balances external requests directly to the AD FS servers in the internal network.

    # As in the re-encryption example, these blocks belong in the http context.
    http {
        upstream adfs {
            zone adfs 64k;
            server 192.168.5.5:443; # AD FS Server 1
            server 192.168.5.6:443; # AD FS Server 2
            least_time header;
        }

        server {
            listen 10.0.5.110:443 ssl;
            status_zone adfs_proxy;
            server_name fs.example.com;
            ssl_certificate /etc/ssl/example.com/example.com.crt;
            ssl_certificate_key /etc/ssl/example.com/example.com.key;

            location / {
                proxy_pass https://adfs; # 'https' enables reencrypt
                proxy_ssl_server_name on;
                proxy_ssl_name $host;
                proxy_set_header Host $host;
                proxy_set_header X-Real-IP $remote_addr;
                proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
                proxy_set_header x-ms-proxy $server_name;
            }
        }
    }

Try out NGINX Plus in your AD FS deployment: start a free 30-day trial today or contact us to discuss your use case.

The post High Availability for Microsoft Active Directory Federation Services with NGINX Plus appeared first on NGINX.
