Improved JavaScript performance, WebAssembly, and Shared Memory in Microsoft Edge

JavaScript performance is an evergreen topic on the web. With each new release of Microsoft Edge, we look at user feedback and telemetry data to identify opportunities to improve the Chakra JavaScript engine and enable better performance on real sites.

In this post, we’ll walk you through some new features coming to Chakra with the Windows 10 Creators Update that improve the day-to-day browsing experience in Microsoft Edge, as well as some new experimental features for developers: WebAssembly, and Shared Memory and Atomics.

Under the hood: JavaScript performance improvements

Saving memory by re-deferring functions

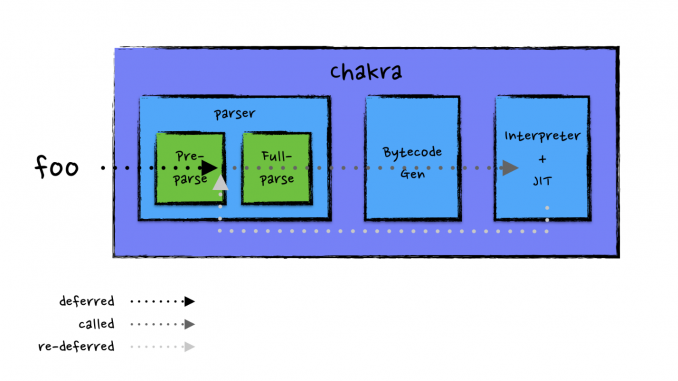

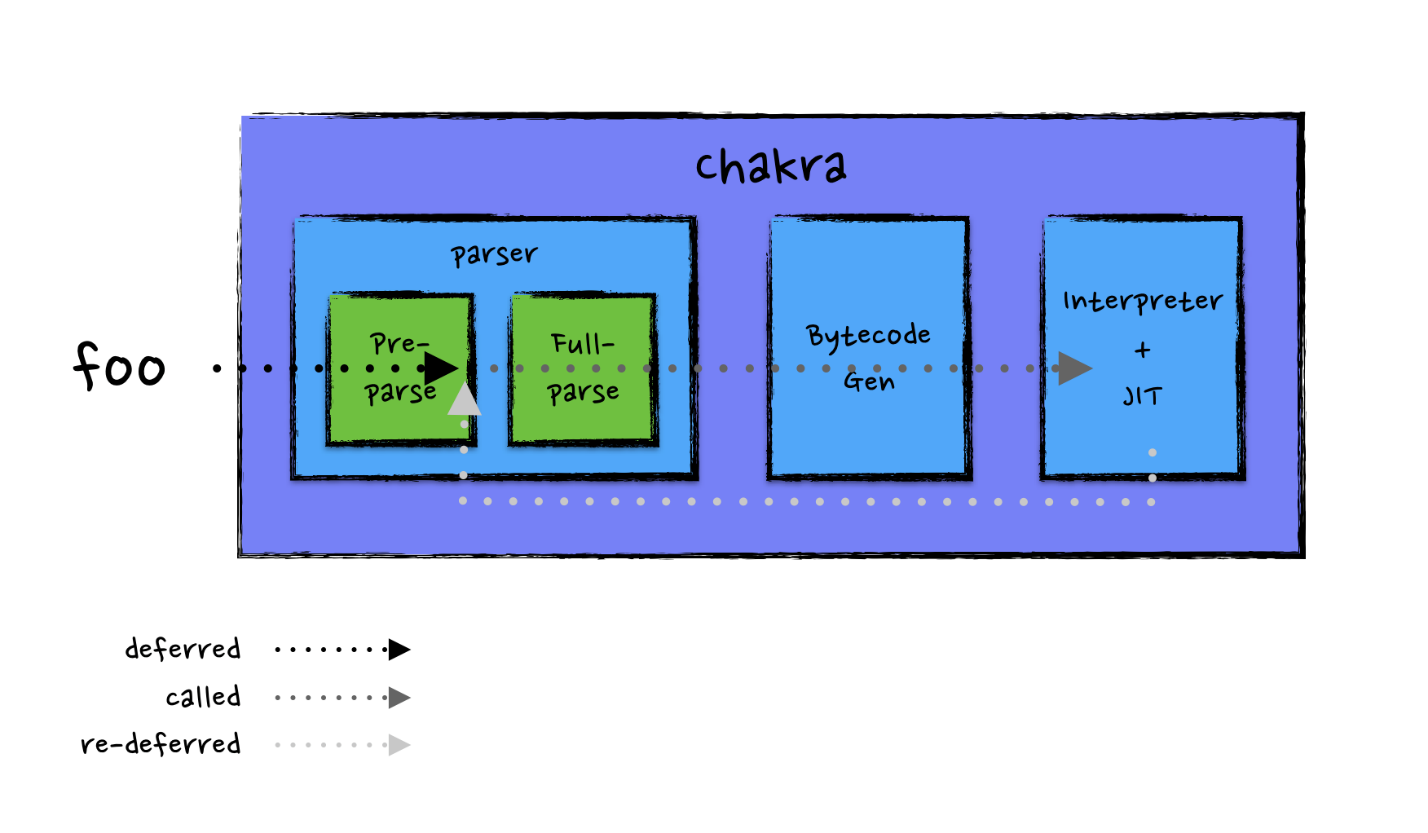

Back in the days of Internet Explorer, Chakra introduced the ability to defer-parse functions, and more recently extended the capability to defer-parse event handlers. For eligible functions, Chakra performs a lightweight pre-parsing phase where the engine checks for syntax errors at startup time, and delays the full parsing and bytecode generation until functions are called for the first time. While the obvious benefit is to improve page load time and avoid wasting time on functions that are never called, defer-parsing also prevents memory from being allocated to store metadata such as ASTs or bytecode for those functions. In the Creators Update, Microsoft Edge and Chakra make further use of the defer-parsing mechanism and improve memory usage by allowing functions to be re-deferred.

The idea of re-deferring is deceptively simple: for every function that Chakra deems unlikely to be executed again, the engine frees the bulk of the memory the function holds to store metadata generated after pre-parsing, and effectively leaves the function in a deferred state as if it had just been pre-parsed. Imagine a function foo which gets deferred upon startup, called at some point, and re-deferred later.

The tricky part about re-deferring is that Chakra cannot perform such actions too aggressively, or it risks frequently re-paying the cost of full parsing, bytecode generation, etc. Chakra checks its record of function call counts every N GC cycles, and re-defers functions that were not called over that period of time. The value of N is based on heuristics, and as a special case a smaller value is used at startup time, when memory usage is more likely to peak. It is hard to generalize the exact saving from re-deferring because it depends heavily on the content served, but in our experiment with a small sample of sites, re-deferring typically reduces the memory allocated by Chakra by 6-12%.
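The bookkeeping described above can be sketched as a toy model. This is only an illustration under stated assumptions, not Chakra's implementation: the `ToyEngine` and `FunctionRecord` classes, the `onGcCycle` hook, and the sweep interval of 2 are all hypothetical names and values chosen for the example, and "compiling" is simulated with the `Function` constructor.

```javascript
// Toy model of re-deferral (hypothetical; not how Chakra is implemented).
class FunctionRecord {
  constructor(name, source) {
    this.name = name;
    this.source = source;      // always retained, like a pre-parsed function
    this.compiled = null;      // stands in for the AST/bytecode metadata
    this.callsSinceSweep = 0;  // call count since the last sweep
  }

  call(...args) {
    if (this.compiled === null) {
      // First call, or first call after a re-defer: pay the full-parse cost.
      this.compiled = new Function(`return (${this.source});`)();
    }
    this.callsSinceSweep++;
    return this.compiled(...args);
  }
}

class ToyEngine {
  constructor(sweepInterval) {
    this.sweepInterval = sweepInterval; // the "N" from the text; value is arbitrary
    this.gcCycles = 0;
    this.records = [];
  }

  register(name, source) {
    const record = new FunctionRecord(name, source);
    this.records.push(record);
    return record;
  }

  // Called once per simulated GC cycle; returns names re-deferred in this cycle.
  onGcCycle() {
    this.gcCycles++;
    if (this.gcCycles % this.sweepInterval !== 0) return [];
    const redeferred = [];
    for (const record of this.records) {
      if (record.compiled !== null && record.callsSinceSweep === 0) {
        record.compiled = null; // drop the metadata, keep only the source
        redeferred.push(record.name);
      }
      record.callsSinceSweep = 0;
    }
    return redeferred;
  }
}

const engine = new ToyEngine(2);
const foo = engine.register('foo', 'function foo(x) { return x * 2; }');

const firstResult = foo.call(21);             // deferred -> compiled on first call
engine.onGcCycle();                           // cycle 1: no sweep yet
const afterFirstSweep = engine.onGcCycle();   // cycle 2: foo was called, so it stays compiled
engine.onGcCycle();                           // cycle 3: no sweep
const afterSecondSweep = engine.onGcCycle();  // cycle 4: foo was idle, so it is re-deferred
const secondResult = foo.call(4);             // re-compiled on demand
```

The trade-off from the paragraph shows up directly: a smaller sweep interval frees memory sooner, but makes it more likely that `call` has to pay the compile cost again.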

Post Creators Update, we are working on addressing an existing limitation of re-deferring to handle arrow functions, getters, setters, and functions that capture lexically-scoped variables. We expect to see further memory savings from the re-deferring feature.

Optimizing away heap arguments

Use of the arguments object is fairly common on the web. Whenever a function uses the arguments object, Chakra, if necessary, creates a “heap arguments” object so that both the formals and the arguments object refer to the same memory location. Allocating such an object can be expensive, so the Chakra JIT optimizes away the creation of heap arguments when functions have no formal parameters.

In the Creators Update, the JIT optimization is extended to avoid the creation of heap arguments in the presence of formals, as long as there are no writes to the formals. It is no longer necessary to allocate heap arguments objects for code such as the example below.

    // no writes to formals (a & b) therefore heap args can be optimized away
    function plus(a, b) {
        if (arguments.length == 2) {
            return a + b;
        }
    }

Data from our web crawler suggests that the optimization benefits about 95% of websites. It also speeds up the React sub-test in the Speedometer benchmark, which runs a simple todoMVC implemented with React, by about 30% in Microsoft Edge.
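To see why writes to formals matter, here is a small sketch of the language semantics involved (it says nothing about Chakra's internals). In sloppy-mode code, a write to a formal is visible through the arguments object, which is the link that has to be preserved; without such writes there is nothing to keep in sync. The function names are made up for the example, and the functions are built with the `Function` constructor only so that they are sloppy mode regardless of how the surrounding file is loaded.

```javascript
// Functions created with the Function constructor are sloppy mode unless
// their body opts in to strict mode.
const writesFormal = new Function('a', `
  a = 10;               // write to a formal...
  return arguments[0];  // ...is observable through the arguments object
`);

const strictWritesFormal = new Function('a', `
  'use strict';
  a = 10;
  return arguments[0];  // strict mode: arguments is not linked to the formal
`);

// Same shape as plus() above: reads the formals and arguments, writes neither.
const plusNoWrites = new Function('a', 'b', `
  if (arguments.length === 2) {
    return a + b;
  }
`);

const aliased = writesFormal(1);          // 10
const unaliased = strictWritesFormal(1);  // 1
const sum = plusNoWrites(2, 3);           // 5
```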

Better performance for minified code

Using a minifier before deploying scripts has been a common practice for web developers to reduce the download burden on the client side. However, minifiers can sometimes cause performance issues because they introduce code patterns that developers typically would not write by hand and that therefore might not be optimized.

Previously, we’ve made optimizations in Chakra for code patterns observed in UglifyJS, one of the most heavily-used minifiers, and improved performance for some code patterns by 20-50%. For the Creators Update, we investigated the emit pattern of the Closure compiler, another widely used minifier in the JavaScript ecosystem, and added a series of inlining heuristics, fast paths and other optimizations according to our findings.

The changes lead to a visible speedup for code minified by Closure or by other minifiers that follow the same patterns. As an experiment to measure the impact consistently in a well-defined and constrained environment, we minified some popular JavaScript benchmarks using Closure and noticed a 5-15% improvement on sub-tests with patterns we’ve optimized for.
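As a rough illustration of what "patterns developers would not write by hand" can look like, the sketch below pairs two hand-written functions with equivalents in the style minifiers commonly emit (nested ternaries, comma expressions, `!0` for true, `void 0` for undefined). These are generic examples written for this purpose; they are not actual Closure or UglifyJS output, and they are not necessarily the patterns Chakra was tuned for.

```javascript
// Hand-written version.
function clampReadable(value, min, max) {
  if (value < min) {
    return min;
  }
  if (value > max) {
    return max;
  }
  return value;
}

// Minifier-style equivalent: short names and nested ternaries.
function c(a, b, d) { return a < b ? b : a > d ? d : a; }

// Hand-written version.
function initReadable(config) {
  var enabled = true;
  var retries;
  if (config.retries !== undefined) {
    retries = config.retries;
  } else {
    retries = 3;
  }
  return { enabled: enabled, retries: retries };
}

// Minifier-style equivalent: !0 for true, void 0 for undefined,
// and a comma expression folded into the return statement.
function i(a) { var b = !0, e; return e = void 0 !== a.retries ? a.retries : 3, { enabled: b, retries: e }; }

const sameClamp = [-5, 2, 99].every(v => clampReadable(v, 0, 10) === c(v, 0, 10));
const minifiedDefault = i({});
const minifiedCustom = i({ retries: 5 });
```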

WebAssembly

WebAssembly is an emerging portable, size- and load-time-efficient binary format for the web. It aims to achieve near-native performance and provides a viable solution for performance-critical workloads such as games, multimedia encoding/decoding, etc. As part of the WebAssembly Community Group (CG), we have been collaborating closely with Mozilla, Google, Apple and others in the CG to push the design forward.

Following the recent conclusion of the WebAssembly browser preview and the consensus among browser vendors on the minimum viable product (MVP) format, we’re excited to share that Microsoft Edge now supports the WebAssembly MVP behind the experimental flag in the Creators Update. Users can navigate to about:flags and check the “Enable experimental JavaScript features” box to turn on WebAssembly and other experimental features such as SharedArrayBuffer.
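With the flag turned on, a quick way to check that WebAssembly is available is to instantiate a tiny module from script. The sketch below is a minimal example and assumes only the standard `WebAssembly.Module` and `WebAssembly.Instance` constructors; the bytes are a hand-assembled module exporting a single `add` function, and the `loadAdd` helper is a name invented for this example.

```javascript
// Hand-assembled MVP binary: exports add(i32, i32) -> i32.
const wasmBytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d,                               // magic "\0asm"
  0x01, 0x00, 0x00, 0x00,                               // binary format version 1
  0x01, 0x07, 0x01, 0x60, 0x02, 0x7f, 0x7f, 0x01, 0x7f, // type section: (i32, i32) -> i32
  0x03, 0x02, 0x01, 0x00,                               // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00, // export section: "add" -> function 0
  0x0a, 0x09, 0x01, 0x07, 0x00,                         // code section: one body, no locals
  0x20, 0x00, 0x20, 0x01, 0x6a, 0x0b,                   // local.get 0, local.get 1, i32.add, end
]);

// Returns the exported add function, or null when WebAssembly is unavailable
// (for example, when the experimental flag is off).
function loadAdd() {
  if (typeof WebAssembly !== 'object') return null;
  const wasmModule = new WebAssembly.Module(wasmBytes);
  const wasmInstance = new WebAssembly.Instance(wasmModule, {});
  return wasmInstance.exports.add;
}

const add = loadAdd();
const sum = add ? add(2, 3) : null; // 5 when WebAssembly is available
```

Synchronous compilation is fine for a module this small; for real applications the promise-based `WebAssembly.instantiate` is usually the better fit.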

Under the hood, Chakra defers parsing WebAssembly functions until called, unlike other engines that parse and JIT functions at startup time. We’ve observed startup time as a major headache for large web apps and have rarely seen runtime performance being the issue from our experiences with existing WebAssembly & asm.js workloads. As a result, a WebAssembly app often loads noticeably faster in Microsoft Edge. Try out the Tanks! demo in Microsoft Edge to see it for yourself – be sure to enable the “Experimental JavaScript Features” flag in about:flags!

Beyond the Creators Update, we are tuning WebAssembly performance and working on the last remaining MVP features, such as response APIs and structured cloning, before we turn WebAssembly on by default in Microsoft Edge. Critical post-MVP features such as threads are being considered as well.

Shared Memory & Atomics

JavaScript as we know it operates in a run-to-completion single-threaded model. But with the growing complexity of web apps, there is an increasing need to fully exploit the underlying hardware and utilize multi-core parallelism to achieve better performance.

The creation of Web Workers unlocked the possibility of parallelism on the web and of executing JavaScript without blocking the UI thread. Communication between the UI thread and workers was initially done by cloning data and sending it via postMessage. Transferable objects were later added as a welcome change: they allow data to be transferred to another thread without the runtime and memory overhead of cloning, and the original owner forfeits its right to access the data, which avoids synchronization problems.
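The ownership hand-off can be observed from the sending side. The following is a minimal sketch that uses a `MessageChannel` in place of a real worker, purely so that the example needs no separate worker file; `postMessage` on a `Worker` accepts the same transfer list.

```javascript
const { port1, port2 } = new MessageChannel();

const buffer = new ArrayBuffer(1024);
const lengthBeforeTransfer = buffer.byteLength; // 1024

// The second argument lists the objects to transfer rather than clone.
port1.postMessage(buffer, [buffer]);

// The sender's buffer is now detached and can no longer be used to reach the data.
const lengthAfterTransfer = buffer.byteLength;  // 0

// Close the ports so nothing keeps waiting on the channel.
port1.close();
port2.close();
```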

Soon to be ratified in ES2017, Shared Memory & Atomics is a new addition that further improves parallel programming on the web. With the release of the Creators Update, we are excited to preview the feature behind the experimental JavaScript features flag in Microsoft Edge.

In Shared Memory and Atomics, SharedArrayBuffer is essentially an ArrayBuffer shareable between threads and removes the chore of transferring data back and forth. It enables workers to virtually work on the same block of memory, guaranteeing that a change on one thread on a SharedArrayBuffer will eventually be observed (at some unknown point of time) on other threads holding the same buffer. As long as workers operate on different parts of the same SharedArrayBuffer, all operations are thread-safe.
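One way to follow the "different parts of the same buffer" rule is to hand each worker a view over its own disjoint range. The sketch below runs on a single thread just to show the partitioning; `partition` is a hypothetical helper written for this example, and in a real application each view would be posted to a different worker. Note that the separation is a convention: every view still refers to the same underlying SharedArrayBuffer.

```javascript
// Split a SharedArrayBuffer into non-overlapping Int32Array views, one per worker.
function partition(sab, workerCount) {
  const total = sab.byteLength / Int32Array.BYTES_PER_ELEMENT;
  const chunk = Math.ceil(total / workerCount);
  const views = [];
  for (let w = 0; w < workerCount; w++) {
    const start = Math.min(w * chunk, total);
    const length = Math.min(chunk, total - start);
    views.push(new Int32Array(sab, start * Int32Array.BYTES_PER_ELEMENT, length));
  }
  return views;
}

const sab = new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT * 10);
const slices = partition(sab, 3); // view lengths: 4, 4, 2

// Stand-in for the work each worker would do on its own slice.
slices.forEach((slice, w) => slice.fill(w + 1));

// A view over the whole buffer sees every write: the memory is shared, not copied.
const whole = Array.from(new Int32Array(sab)); // [1, 1, 1, 1, 2, 2, 2, 2, 3, 3]
```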

The addition of Atomics gives developers the necessary tools to safely and predictably operate on the same memory location by adding atomic operations and the ability to wait and wake in JavaScript. The new feature allows developers to build more powerful web applications. As a simple illustration of the feature, here’s the producer-consumer problem implemented with shared memory:

    // UI thread
    var producer = new Worker('producer.js');
    var consumer = new Worker('consumer.js');
    var sab = new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT * 1000);
    var i32 = new Int32Array(sab);
    producer.postMessage(i32);
    consumer.postMessage(i32);

    // producer.js: a worker that keeps producing non-zero data
    onmessage = ev => {
        let i32 = ev.data;
        let i = 0;
        while (true) {
            let curr = Atomics.load(i32, i);             // load i32[i]
            if (curr != 0) Atomics.wait(i32, i, curr);   // wait till i32[i] != curr
            Atomics.store(i32, i, produceNonZero());     // store in i32[i]
            Atomics.wake(i32, i, 1);                     // wake 1 thread waiting on i32[i]
            i = (i + 1) % i32.length;
        }
    }

    // consumer.js: a worker that keeps consuming and replacing data with 0
    onmessage = ev => {
        let i32 = ev.data;
        let i = 0;
        while (true) {
            Atomics.wait(i32, i, 0);                     // wait till i32[i] != 0
            consumeNonZero(Atomics.exchange(i32, i, 0)); // exchange value of i32[i] with 0
            Atomics.wake(i32, i, 1);                     // wake 1 thread waiting on i32[i]
            i = (i + 1) % i32.length;
        }
    }
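Beyond wait and wake, the Atomics object also provides read-modify-write operations. The sketch below shows a shared counter and a simple try-lock on one thread, so that the return values are easy to inspect. The slot layout and the `tryLock`, `unlock`, and `notify` helpers are hypothetical names for this example. One detail worth knowing: later versions of the specification renamed `Atomics.wake` to `Atomics.notify`, so the helper falls back to whichever one the engine provides.

```javascript
const shared = new Int32Array(new SharedArrayBuffer(Int32Array.BYTES_PER_ELEMENT * 2));
const COUNTER = 0; // slot used as a shared counter
const LOCK = 1;    // slot used as a lock flag: 0 = free, 1 = held

// Use Atomics.notify where available, otherwise the older Atomics.wake.
const notify = (i32, index, count) =>
  typeof Atomics.notify === 'function'
    ? Atomics.notify(i32, index, count)
    : Atomics.wake(i32, index, count);

// Atomically add to the counter; the previous value is returned.
const previous = Atomics.add(shared, COUNTER, 5); // 0
const current = Atomics.load(shared, COUNTER);    // 5

// Acquire the lock only if it is currently free.
function tryLock(i32, index) {
  return Atomics.compareExchange(i32, index, 0, 1) === 0;
}

// Release the lock and wake at most one waiter; returns how many were woken.
function unlock(i32, index) {
  Atomics.store(i32, index, 0);
  return notify(i32, index, 1);
}

const firstAttempt = tryLock(shared, LOCK);  // true: the lock was free
const secondAttempt = tryLock(shared, LOCK); // false: already held
const woken = unlock(shared, LOCK);          // 0: nobody was waiting
const thirdAttempt = tryLock(shared, LOCK);  // true again after unlock
```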

Shared memory will also play a key role in the upcoming WebAssembly threads.

Built with the community

We hope you enjoy the JavaScript performance update in Microsoft Edge and are as excited as we are to see the progress on WebAssembly and shared memory pushing the performance boundary of the web. We love to hear user feedback and are always on the lookout for opportunities to improve JavaScript performance on the real-world web. Help us improve and share your thoughts with us via @MSEdgeDev and @ChakraCore, or the ChakraCore repo on GitHub.

― Limin Zhu, Program Manager, Chakra Team

