Introducing NGINX 1.12 and 1.13

Today we’re pleased to announce the availability of open source NGINX 1.12 and 1.13. These version numbers define the stable and mainline branches, respectively, of the NGINX open source software. We will focus on developing and improving these two branches for the next 12 months.

NGINX version 1.12.0 was released today, and the next feature release of our mainline branch will be numbered 1.13.0.

NGINX Version Numbering Overview

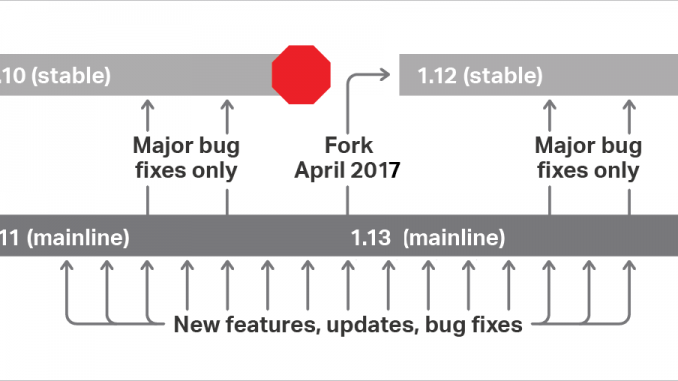

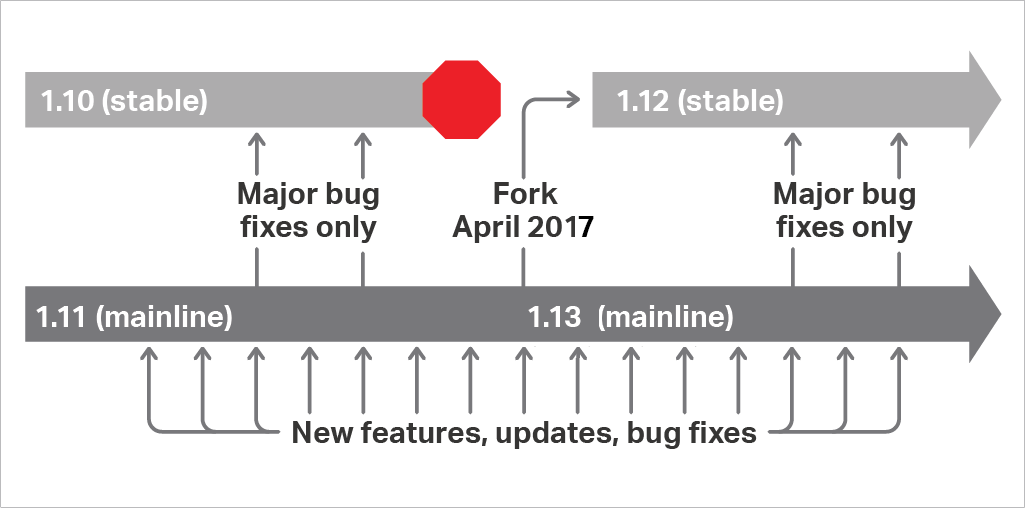

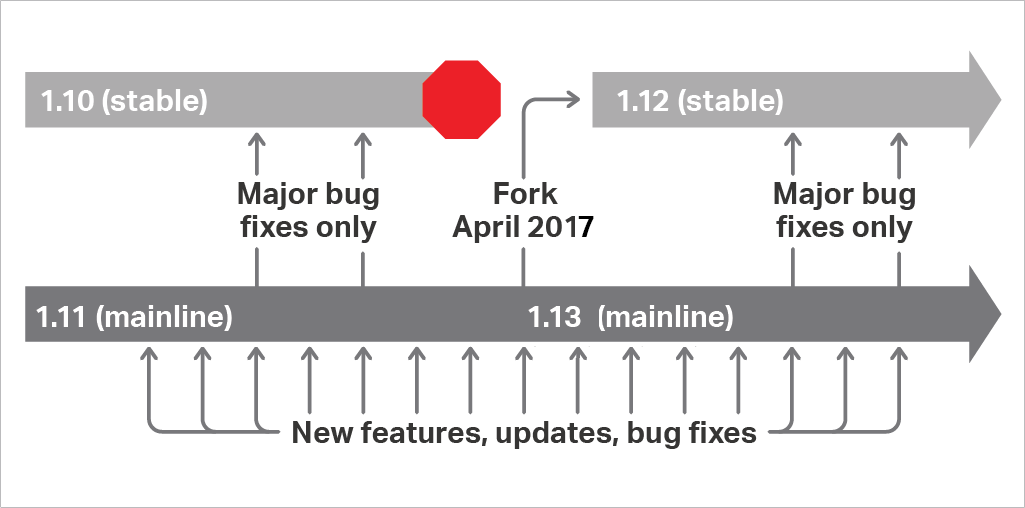

NGINX, Inc. manages two NGINX source code branches:

- The even‑numbered version (1.12) is our stable branch. This branch is updated only when critical issues or security vulnerabilities need to be fixed. For example, during the past year only three minor updates were made to the 1.10 stable branch.

- The odd‑numbered version (1.13) is the mainline branch. This branch is actively developed; new minor releases (1.13.1, 1.13.2, etc.) are made approximately every 4 to 6 weeks, regularly introducing new features and enhancements.

Each year, we create a new fork from our mainline branch which becomes the new stable branch. Version 1.12.0 is forked from 1.11.13, the last release of the prior mainline branch (1.11). The previous stable branch, 1.10, is now deprecated and we will no longer be updating or supporting it. Development will continue on the 1.13 mainline branch.

We generally recommend using the mainline branch. This is where we commit all new features, performance improvements, and enhancements. We actively test and QA the mainline branch, so it’s arguably more stable than the “stable” branch. The mainline branch is also the source of NGINX Plus builds for our commercial customers.

If you want to minimize the number of updates you need to deploy and don’t anticipate an urgent need for any of the features planned for the next 12 months, the stable branch is a good choice.

NGINX 1.11 in Review

The past year was another busy one for NGINX development. We added a number of new features and capabilities to the 1.11 mainline branch: dynamic modules, IP Transparency, improved TCP/UDP load balancing, better caching performance, and more.

-

Dynamic modules – NGINX 1.11.5 was a milestone moment in the development of NGINX. It introduced the new

‑‑with‑compatoption which allows any module to be compiled and dynamically loaded into a running NGINX instance of the same version (and an NGINX Plus release based on that version). There are over 120 modules for NGINX contributed by the open source community, and now you can load them into our NGINX builds, or those of an OS vendor, without having to compile NGINX from source. For more information on compiling dynamic modules, see Compiling Dynamic Modules for NGINX Plus. -

IP Transparency – When acting as a reverse proxy, NGINX by default uses its own IP address as the source IP address for connections to upstream servers. With IP Transparency you can instruct NGINX to instead preserve the original client IP address as the source IP address. This is useful for logging and rate limiting for TCP applications, for example, which can’t make use of the

X‑Forwarded‑ForHTTP header to get the original client IP address. IP Transparency was first introduced in NGINX 1.11.0 with the addition of thetransparentparameter to theproxy_bind,fastcgi_bind,memcached_bind,scgi_bind, anduwsgi_binddirectives. -

Direct Server Return (DSR) – In this extension of IP Transparency, the upstream server receives packets that appear to originate from the remote client, and responds directly to the remote client, bypassing NGINX completely.

Note: For more information, including additional configuration that may be required on servers and routers, see IP Transparency and Direct Server Return with NGINX and NGINX Plus as Transparent Proxy.

-

Improved TCP/UDP load balancing – In NGINX 1.11 we introduced a number of enhancements to the set of Stream modules, which provide TCP/UDP reverse proxy and load balancing functionality. The Map and Return modules were introduced in NGINX 1.11.2. The Geo, GeoIP, and Split Clients modules were introduced in NGINX 1.11.3. The Log and Real IP modules were introduced in NGINX 1.11.4. And finally the SSL/TLS Preread module was introduced in NGINX 1.11.5.

-

Better caching performance – NGINX is a high‑performance content cache that a number of large CDNs, such as CloudFlare and Level 3, build their solutions on. In NGINX 1.11.10 we introduced the optional ability to update the cache in the background, serving expired content to all users while the update is in progress. This ensures no user experiences a cache‑miss penalty or has to wait for a round trip to the origin server. This is enabled through the

proxy_cache_background_update,fastcgi_cache_background_update,scgi_cache_background_update, anduwsgi_cache_background_updatedirectives. For more details see Announcing NGINX Plus R12.NGINX has a special cache manager process that removes the least recently used data from the cache when the configured cache size is exceeded. In NGINX 1.11.5 we introduced the

manager_files,manager_threshold, andmanager_sleepparameters, which together determine how frequently the cache manager runs. If you find that it is running too often and causing excess I/O operations, you can use the parameters to dial it back and improve overall system performance. The new parameters apply to theproxy_cache_path,fastcgi_cache_path,scgi_cache_path, anduwsgi_cache_pathdirectives. -

Dual‑stack RSA/ECC certificates – In our testing, Elliptic Curve Cryptography (ECC) was 3x faster than the equivalent‑strength RSA certificates. In NGINX 1.11.0 we introduced the ability to use both RSA and ECC certificates on the same virtual server. NGINX and NGINX Plus use ECC certificates with clients that support them, and RSA certificates with older clients that don’t support ECC, such as Windows XP machines. For more details, see Announcing NGINX Plus R10.

-

nginScript general availability – nginScript is a unique JavaScript implementation for NGINX and NGINX Plus, designed specifically for server‑side use cases and per‑request processing. Much effort was put into maturing and stabilizing nginScript across several 1.11 releases, including tight integration with the HTTP and Stream modules. In 1.11.10 it transitioned from “experimental” status to general availability. For more details, see Introduction to nginScript.

-

Overcoming ephemeral port exhaustion – A common problem for high‑traffic applications is ephemeral port exhaustion, where new connections to upstream servers can’t be created because the OS has run out of available port numbers. To overcome this, NGINX 1.11.4 and later now use the

IP_BIND_ADDRESS_NO_PORTsocket option when available. This option allows source ports to be reused for outgoing connections to upstream servers, provided the standard “4‑tuple” (source IP address, destination IP address, source port, destination port) is unique. It is available on systems with Linux kernel version 4.2 and later, and glibc 2.22 and later. -

Transaction tracing – In version 1.11.0 and later, NGINX generates the

$request_idvariable automatically for each new HTTP request, effectively assigning a unique “transaction ID” to the request. This facilitates application tracing and brings application performance management (APM) capabilities to log‑analysis tools. The transaction ID is proxied to backend applications and microservices so that all parts of the system can log a consistent identifier for each transaction. For more information, see Application Tracing with NGINX and NGINX Plus. -

Connection limiting to upstreams – In NGINX 1.11.5 and later, you can limit the number of simultaneous connections between NGINX and upstream servers using the

max_connsparameter to theserverdirective in both the HTTP and Stream modules. This can help prevent upstream servers from being overwhelmed, intentionally or unintentionally, with more TCP connections than they can handle. Themax_connsparameter was previously available only in NGINX Plus. -

Worker shutdown timeout – When you reload NGINX, all old worker processes with active connections are kept alive until those connections are closed. For applications with long‑running connections, such as WebSocket, connections might stay open for hours. If you reload NGINX frequently, this can exhaust resources in the system. NGINX 1.11.11 introduced a new

worker_shutdown_timeoutdirective to automatically close connections and shut down worker processes after a specified time.

NGINX 1.11 also saw numerous other feature improvements, bug fixes, and other enhancements, making this series of releases the largest development of NGINX in its recent history (check out the release notes for a full list of changes in 1.11). Only a small number of changes needed to be backported to the 1.10 stable release, a clear testament to the high standards of quality and reliability our engineering team maintains.

In the past year we also started a project to better document NGINX. As part of that effort we released the NGINX development guide, which documents NGINX internals. Though still a work in progress, it thoroughly describes our internal data structures, how we manage memory, how event handling works, and other aspects of NGINX’s internal architecture.

We also hit a major milestone in NGINX adoption in the past year. Although we don’t set out to compete or take market share from other web servers, it’s gratifying to see continued steady growth in the use of NGINX. This January, we became the web server for the majority of the world’s 100,000 busiest websites, a distinction we also hold for the 1,000 and 10,000 busiest sites.

Looking Forward to NGINX 1.13

The NGINX 1.13 release series also promises to bring significant advances, including enhancements to HTTP/2 and caching capabilities. We will also be making continual improvements to nginScript in terms of JavaScript language support and to enable even more sophisticated programmatic configuration. The demands of modern, distributed, microservices applications are also driving part of our roadmap, and more will be shared at our annual user conference, nginx.conf 2017, this September 6–8 in Portland, OR.

The post Introducing NGINX 1.12 and 1.13 appeared first on NGINX.

Source: Introducing NGINX 1.12 and 1.13

Leave a Reply