Microservices Reference Architecture, Part 2 – The Proxy Model

The NGINX Microservices Reference Architecture is under development. It will be made publicly available later this year, and will be discussed in detail at nginx.conf 2016, September 7–9 in Austin, TX. Early bird discounts are available now.

Author’s note – This blog post is the second in a series; we will extend this list as new posts appear:

- Introducing the NGINX Microservices Reference Architecture

- Microservices Reference Architecture, Part 2 – The Proxy Model (this post)

Upcoming posts will cover the other two models included in the Microservices Reference Architecture (MRA) and related topics.

I’ve written a separate article about web frontends for microservices applications. We also have a very useful and popular series about microservices application design, plus other microservices blog posts and microservices webinars.

Introducing the Proxy Model

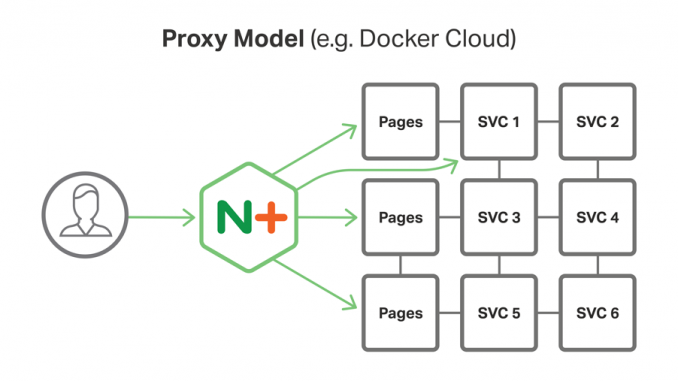

As the name implies, the Proxy Model places NGINX Plus as a reverse proxy server in front of servers running the services that make up a microservices-based application. NGINX Plus provides the central point of access to the services.

The Proxy Model is suitable for several use cases, including:

- Improving the performance of a monolithic application before converting it to microservices

- Proxying relatively simple applications

- Providing a starting point before moving to other, more complex networking models

Within the Proxy Model, the NGINX Plus reverse proxy server can also act as an API gateway.

Figure 1 shows how, in the Proxy Model, NGINX Plus runs on the reverse proxy server and interacts with several services, including multiple instances of the Pages service (the web microservice that we described in the post about web frontends).

The other two models in the MRA, the Router Mesh Model and the Fabric Model, build on the Proxy Model to deliver significantly greater functionality. (These models will be described in future blog posts in this series.) However, once you understand the Proxy Model, the other models are relatively easy to grasp.

The overall structure and features of the Proxy Model are only partly microservices-specific; many of them are simply best practices when deploying NGINX Plus as a reverse proxy server and load balancer. You can begin implementing the Proxy Model while your application is still a monolith.

If you are starting with an existing, monolithic application, simply position NGINX Plus as a reverse proxy in front of your application server and implement the Proxy Model features described below. You are then in a good position to convert your application to microservices.

The Proxy Model is agnostic as to the mechanism you implement for communication between microservices instances running on the application servers behind NGINX Plus. Communication between the microservices is handled through a mechanism of your choice, such as DNS round-robin requests from one service to another. A post in our seven-part series, Building Microservices, discusses the major approaches used for inter-process communication in a microservices architecture.

Proxy Model Capabilities

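The capabilities of the Proxy Model fall into three categories. These features optimize performance:

- Caching

- Load balancing

- Low-latency connectivity

- High availability

These features improve security and make application management easier:

- Rate limiting

- SSL/TLS termination

- HTTP/2 support

- Health checks

These features are specific to microservices:

- Central communications point for services

- Dynamic service discovery

- API gateway capability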

We discuss each group of features in more detail below. You can use the information in this blog post, as well as other information on this site, to start moving your applications to the Proxy Model in advance of the public release of the MRA later this year. Making these changes will provide your app with immediate benefits in performance, reliability, security, and scalability.

Performance Optimization Features

Implementing the features described here (caching, load balancing, low-latency connectivity, and high availability) optimizes the performance of your applications.

Caching Static and Dynamic Files

Caching is a highly useful feature of NGINX Plus and an important feature in the Proxy Model. Both static file caching and microcaching – that is, caching application-generated content for brief periods – speed content delivery to users and reduce load on the application.

By caching static files at the proxy server, NGINX Plus can prevent many requests from reaching application servers. This simplifies design and operation of the microservices application.

You can also microcache dynamic, application-generated files, whether from a monolithic app or from a service in a microservices app. For many read operations, the data a service returns is identical to what it returned for the same request a few minutes earlier. Calling back through the service graph to fetch fresh data for every request is often a waste of resources. Microcaching saves work at the services level while still delivering fresh content.

NGINX Plus has a robust caching system that can temporarily store almost any type of data or content. NGINX Plus also has a cache purge API that allows your application to dynamically clear the cache when data is refreshed.
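To make the microcaching idea concrete, here is a minimal Python sketch, assuming responses can be keyed by a string such as method plus URI. The class name, the injectable clock, and the purge method are illustrative assumptions; this is not how the NGINX Plus cache is implemented, and purge only mirrors the idea behind the cache purge API.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class MicroCache:
    """Caches responses for a short TTL, keyed by request (e.g. method + URI)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._entries: Dict[str, Tuple[float, bytes]] = {}

    def get(self, key: str) -> Optional[bytes]:
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, body = entry
        if self.clock() >= expires_at:
            # Stale entry: drop it so the next request goes to the service.
            del self._entries[key]
            return None
        return body

    def put(self, key: str, body: bytes) -> None:
        self._entries[key] = (self.clock() + self.ttl, body)

    def purge(self, key: str) -> bool:
        """Remove an entry explicitly, e.g. when the app knows the data changed."""
        return self._entries.pop(key, None) is not None


def fetch(cache: MicroCache, key: str, upstream: Callable[[str], bytes]) -> bytes:
    """Serve from cache while fresh; otherwise call the service and cache its reply."""
    body = cache.get(key)
    if body is None:
        body = upstream(key)
        cache.put(key, body)
    return body
```

Even a TTL of one second means that, under heavy load, a service sees at most about one request per second per cache key instead of every request.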

Robust Load Balancing to Services

Microservice applications require load balancing to an even greater degree than monolithic applications. The architecture of a microservice application relies on multiple, small services working in concert to provide application functionality. This inherently requires robust, intelligent load balancing, especially where external clients access the service APIs directly.

NGINX Plus, as the proxy gateway to the application, can use a variety of mechanisms for load balancing; load balancing is one of the most powerful features of NGINX Plus. With the dynamic service discovery features of NGINX Plus, new instances of services can be added to the mix and made available for load balancing as soon as they spin up.
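NGINX Plus implements round robin, least connections, and other balancing methods natively in its configuration. The toy Python sketch below only illustrates the selection logic of two of them, assuming instances are identified by hypothetical "host:port" strings, and shows how a pool can accept a new instance while it is in use.

```python
import itertools
from typing import Dict, List


class RoundRobinPool:
    """Round robin over a pool of instances that can grow or shrink at runtime."""

    def __init__(self) -> None:
        self._instances: List[str] = []
        self._counter = itertools.count()

    def add(self, address: str) -> None:
        # A newly started instance becomes eligible on the very next pick.
        if address not in self._instances:
            self._instances.append(address)

    def remove(self, address: str) -> None:
        if address in self._instances:
            self._instances.remove(address)

    def pick(self) -> str:
        if not self._instances:
            raise RuntimeError("no instances available")
        return self._instances[next(self._counter) % len(self._instances)]


def least_connections(active: Dict[str, int]) -> str:
    """Pick the instance with the fewest active connections (ties: first listed)."""
    return min(active, key=active.get)
```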

Low-Latency Connectivity

As you move to microservices, one of the major changes in application behavior concerns how application components communicate with each other. In a monolithic app, the objects or functions communicate in memory and share data through pointers or object references.

In a microservices app, functional components (the services) communicate over the network, typically using HTTP. The network therefore becomes a critical bottleneck in a microservices application, because network communication is inherently slower than in-memory communication. The external connection to the system, whether from a client app, a web browser, or an external server, has the highest latency of any part of the application and therefore also has the greatest need to reduce latency. NGINX Plus provides features like HTTP/2 support for minimizing connection start-up times and HTTP/HTTPS keepalive functionality for connecting to external clients as well as to the app’s other microservices.
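A rough calculation shows why connection reuse matters most on the high-latency external link. The Python function below is a simplified model, assuming a TCP handshake costs one round trip (RTT), a full TLS 1.2 handshake costs two more, each request costs one RTT, and server processing time is zero. Real numbers vary with TLS version, session resumption, and network conditions.

```python
def total_latency_ms(requests: int, rtt_ms: float, reuse_connection: bool,
                     tls: bool = True) -> float:
    """Back-of-the-envelope latency for sequential requests over one client link.

    Simplified assumptions: TCP handshake = 1 RTT, full TLS 1.2 handshake = 2 RTTs,
    each request/response = 1 RTT, server processing time ignored.
    """
    setup_rtts = 1 + (2 if tls else 0)
    if reuse_connection:
        # Keepalive: pay connection setup once, then one RTT per request.
        return (setup_rtts + requests) * rtt_ms
    # No keepalive: pay connection setup again before every request.
    return requests * (setup_rtts + 1) * rtt_ms
```

Under this model, 10 sequential requests over a 50 ms link take 650 ms on a reused connection versus 2,000 ms when every request opens a new TLS connection.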

High Availability

In the Proxy Model network configuration, there are various ways to set up NGINX Plus in a high availability (HA) configuration. In on-premises environments, you can use our keepalived-based solution to set up the NGINX Plus instances in an active-passive HA pair. This approach works well and provides fast failure recovery with low-level hardware integration.

NGINX has also been working on an adapted version of our keepalived configuration to provide HA functionality in a cloud environment. This system provides the same type of high availability as for on-premises servers by using API-transferable IP addresses, similar to those in the Elastic IP service in Amazon Web Services. In combination with the autoscaling features of a Platform as a Service (PaaS) like Red Hat’s OpenShift, the result is a resilient HA configuration with autorecovery features that provide defense in depth against failure.
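The failover rule behind an active-passive pair can be sketched in a few lines of Python. This is a simplified model loosely following the VRRP-style priority election that keepalived uses (the highest-priority healthy node is active). The node names and priority values are hypothetical, and the sketch leaves out advertisement timers, preemption settings, and the actual address move (a gratuitous ARP on premises, or a cloud API call to reassign an address).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    priority: int  # higher wins, as in a VRRP-style election
    healthy: bool = True


def elect_vip_owner(nodes: List[Node]) -> Optional[str]:
    """Return the node that should hold the shared (virtual or elastic) IP address.

    The highest-priority healthy node is active; all others stay passive.
    Returns None if no node is healthy.
    """
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda n: n.priority).name
```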

Note: With a robust HA configuration and the powerful load-balancing capabilities of NGINX Plus in a cloud environment, you may not need a cloud-specific load balancer such as Amazon Elastic Load Balancing (ELB).

Security and Management Features

Security and management features include rate limiting, SSL/TLS and HTTP/2 termination, and health checks.

Rate Limiting

Rate (or request) limiting is a useful feature for managing traffic into the microservices application in the Proxy Model. Microservices applications are subject to the same attacks and request problems as any Internet-accessible application. However, unlike a monolithic app, a microservices application has no inherent, single governor to detect request problems or attacks. In the Proxy Model, NGINX Plus acts as the single point of entry to the application and so can evaluate all requests to detect problems such as a DDoS attack. If an attack is under way, NGINX Plus has a variety of techniques for restricting or slowing request traffic.
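NGINX request limiting (ngx_http_limit_req_module) is documented as using the "leaky bucket" method. A minimal per-client Python sketch of that idea follows. The rate and burst parameters are named after the NGINX concepts of the same name, but the class, the injectable clock, and its reject-immediately behavior are illustrative assumptions: NGINX can also delay excess requests rather than reject them.

```python
import time
from typing import Callable, Dict, Tuple


class LeakyBucketLimiter:
    """Per-client leaky bucket: drains at 'rate' requests/second, holds 'burst' extra."""

    def __init__(self, rate: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.burst = burst
        self.clock = clock
        # client key -> (current water level, time of last update)
        self._buckets: Dict[str, Tuple[float, float]] = {}

    def allow(self, client: str) -> bool:
        now = self.clock()
        level, last = self._buckets.get(client, (0.0, now))
        # Drain the bucket in proportion to the time elapsed since the last update.
        level = max(0.0, level - (now - last) * self.rate)
        capacity = self.burst + 1
        if level + 1 > capacity:
            # Accepting this request would overflow the bucket: reject it.
            self._buckets[client] = (level, now)
            return False
        self._buckets[client] = (level + 1, now)
        return True
```

Keying the buckets by client (for example, by source IP address) means one abusive client exhausts only its own bucket, while other clients are unaffected.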

SSL/TLS Termination

Most applications need to support SSL/TLS for any sort of authenticated or secure interaction, and many major sites have switched to using HTTPS exclusively (for example, Google and Facebook). Having NGINX Plus as the proxy gateway to the microservices application can also provide SSL/TLS termination. NGINX Plus has many advanced SSL/TLS features including SNI, modern cipher support, and server-definable SSL/TLS connection policies.

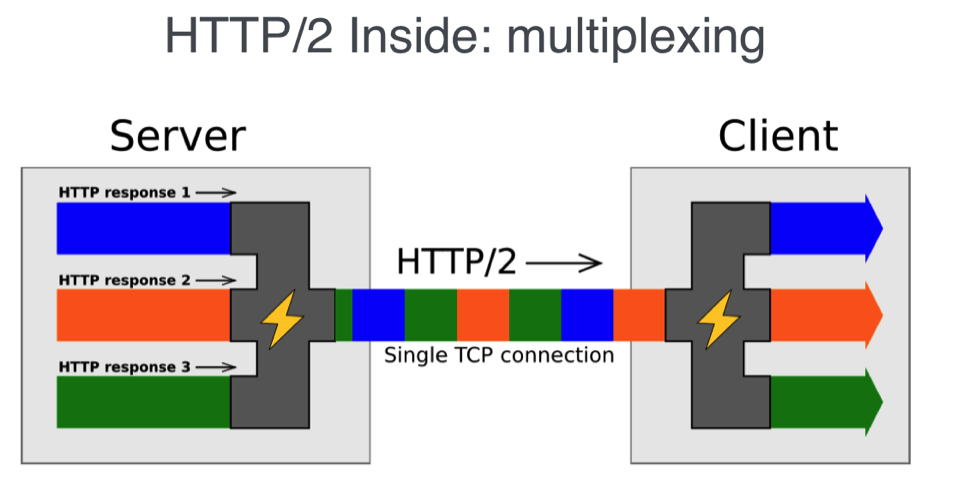

HTTP/2

One of the newest technologies sweeping across the web is HTTP/2. HTTP/2 is designed to reduce network latency and accelerate the transfer of data by multiplexing data requests across a single, established, persistent connection. NGINX Plus provides robust HTTP/2 support, so your microservice application can allow clients to take advantage of the biggest technology advance in HTTP in more than a decade. Figure 2 shows HTTP/2 multiplexing responses to client requests onto a single TCP connection.
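The core idea of multiplexing (split several responses into frames tagged with a stream ID, interleave them on one connection, and reassemble them by stream ID at the other end) can be illustrated with a toy Python model. It deliberately ignores the rest of the HTTP/2 framing layer, such as frame headers, HPACK header compression, flow control, and priorities. The odd stream IDs 1, 3, and 5 reflect the fact that client-initiated HTTP/2 streams use odd numbers; the 4-byte frame size is only there to make the interleaving visible.

```python
from collections import defaultdict
from itertools import zip_longest
from typing import Dict, List, Tuple

Frame = Tuple[int, bytes]  # (stream ID, payload chunk)


def split_into_frames(stream_id: int, body: bytes, max_frame: int) -> List[Frame]:
    return [(stream_id, body[i:i + max_frame]) for i in range(0, len(body), max_frame)]


def multiplex(responses: Dict[int, bytes], max_frame: int = 4) -> List[Frame]:
    """Interleave frames from several responses onto one ordered 'connection'."""
    per_stream = [split_into_frames(sid, body, max_frame) for sid, body in responses.items()]
    wire: List[Frame] = []
    for round_of_frames in zip_longest(*per_stream):
        wire.extend(f for f in round_of_frames if f is not None)
    return wire


def demultiplex(wire: List[Frame]) -> Dict[int, bytes]:
    """Reassemble each response by stream ID on the receiving side."""
    buffers: Dict[int, bytearray] = defaultdict(bytearray)
    for stream_id, chunk in wire:
        buffers[stream_id].extend(chunk)
    return {sid: bytes(buf) for sid, buf in buffers.items()}
```

Because frames from different streams alternate on the wire, a large response on one stream does not force smaller responses to wait until it finishes, as they would on a single HTTP/1.1 connection.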

Health Checks

Active application health checks are another useful feature that NGINX Plus provides in the Proxy Model. Microservices applications, like all applications, suffer errors and problems that cause them to slow down, fail, or just act strangely. It is therefore useful for each service to surface the status of its “health” through a URL with various messages, such as “memory usage has exceeded a given threshold” or “the system is unable to connect to the database”. NGINX Plus can evaluate a variety of messages and respond by stopping traffic to a troubled instance and rerouting traffic to another instance until the troubled one recovers.
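A small Python sketch shows how a proxy might turn health-check responses into routing decisions. It assumes a hypothetical health endpoint that returns status 200 with the body "OK" when the service is well, and a message such as "unable to connect to the database" otherwise. The fails and passes thresholds are modeled on the parameters of the same names on the NGINX Plus health_check directive, but the class itself is illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class InstanceHealth:
    """Tracks one instance; thresholds loosely mirror NGINX Plus 'fails' and 'passes'."""
    fails_threshold: int = 1
    passes_threshold: int = 1
    healthy: bool = True
    _consecutive_fails: int = 0
    _consecutive_passes: int = 0

    def record(self, status_code: int, body: str) -> None:
        # Hypothetical check: status 200 with body "OK" counts as a pass.
        passed = status_code == 200 and body.strip() == "OK"
        if passed:
            self._consecutive_fails = 0
            self._consecutive_passes += 1
            if not self.healthy and self._consecutive_passes >= self.passes_threshold:
                self.healthy = True
        else:
            self._consecutive_passes = 0
            self._consecutive_fails += 1
            if self.healthy and self._consecutive_fails >= self.fails_threshold:
                self.healthy = False


def healthy_instances(pool: Dict[str, InstanceHealth]) -> List[str]:
    """Only instances currently marked healthy receive traffic."""
    return [addr for addr, health in pool.items() if health.healthy]
```

Requiring several consecutive results before changing state keeps a single slow or failed probe from flapping an instance in and out of the pool.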

Microservices-Specific Features

Microservices-specific features of NGINX Plus in the Proxy Model derive from its position as a central communications point for services, NGINX Plus’ ability to do dynamic service discovery, and (optionally) its role as an API gateway.

Central Communications Point for Services

Clients wishing to use a microservices application need one central point for communicating with the application. Developers and operations teams also benefit from implementing as much shared functionality as possible outside the individual services, such as static file caching, microcaching, load balancing, rate limiting, and more. The Proxy Model uses the NGINX Plus proxy server as the obvious and most effective place to handle communications and cross-service functionality, potentially including service discovery (described below) and management of session-specific data.
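One job of a central entry point is deciding which service receives each request. The Python sketch below maps request paths to services by longest matching prefix, which is also how NGINX chooses among prefix location blocks (regular-expression locations add rules not modeled here). Apart from Pages, the service names and paths are hypothetical.

```python
from typing import Dict, Optional


def route(path: str, routes: Dict[str, str]) -> Optional[str]:
    """Return the service for the longest matching path prefix, or None."""
    matches = [prefix for prefix in routes if path.startswith(prefix)]
    if not matches:
        return None
    return routes[max(matches, key=len)]


# Hypothetical routing table: one entry point, many services behind it.
ROUTES = {
    "/": "pages",
    "/api/users/": "user-manager",
    "/api/photos/": "photo-uploader",
}
```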

Dynamic Service Discovery

One of the defining qualities of a microservices application is that it is made up of many independent components. Each service is designed to scale dynamically and to live ephemerally in the application. This means that NGINX Plus needs to track service instances and route traffic to them as they come up, and remove them from the load-balancing pool as they are taken out of service.

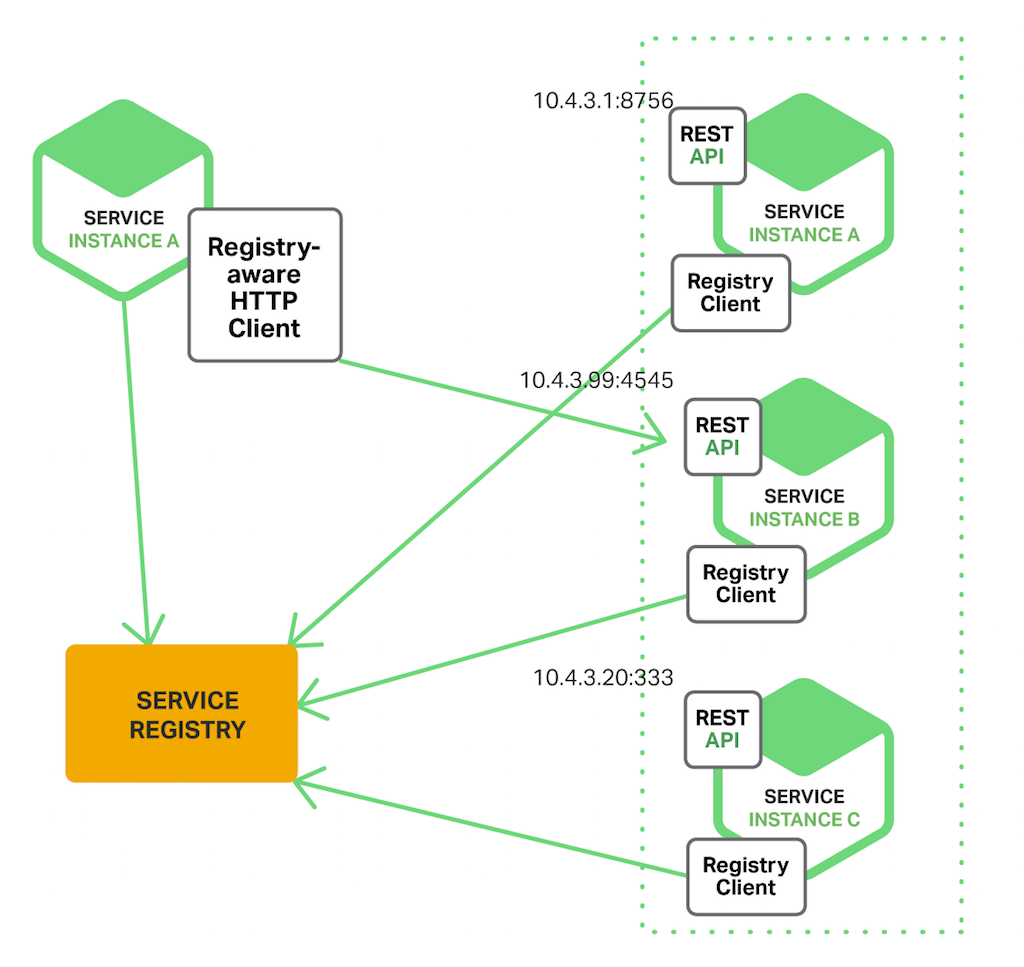

NGINX Plus has a number of features specifically designed to support service discovery. The most important is the DNS resolver, which queries the service registry (whether provided by Consul, etcd, Kubernetes, or ZooKeeper) to get service instance information and route requests to those instances. NGINX Plus R9 introduced SRV record support, so a service instance can live on any IP address/port number combination and NGINX Plus can still route to it dynamically.
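SRV records are what let an instance live on any IP address/port combination: each record carries a target host and a port, plus a priority and a weight. The Python sketch below applies a simplified form of the selection rule from RFC 2782 (lowest priority value wins, then a weighted random choice) to already-resolved records. The record values are hypothetical, and a real resolver such as the one in NGINX Plus performs the DNS lookup itself.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class SrvRecord:
    priority: int  # lower value = preferred
    weight: int    # relative share among records with equal priority
    port: int
    target: str


def pick_srv_target(records: List[SrvRecord],
                    rng: Optional[random.Random] = None) -> Tuple[str, int]:
    """Pick (host, port): lowest priority first, then weighted random (simplified RFC 2782)."""
    if not records:
        raise LookupError("no SRV records")
    rng = rng or random.Random()
    best = min(r.priority for r in records)
    group = [r for r in records if r.priority == best]
    weights = [r.weight for r in group]
    # If every weight is zero, fall back to a uniform choice.
    chosen = rng.choices(group, weights=weights)[0] if sum(weights) > 0 else rng.choice(group)
    return chosen.target, chosen.port
```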

Because the NGINX Plus DNS resolver is asynchronous, it can scan the service registry and add new service endpoints, or take them out of the pool, without blocking the request processing that is NGINX’s main job.

The DNS resolver is also configurable, so it does not need to rely on the time-to-live (TTL) values of DNS records to know when to refresh an IP address. In fact, relying on TTL in a microservices application can be disastrous, because instances can appear or disappear well before a cached record expires. Instead, the valid parameter to the resolver directive lets you set how often the resolver re-queries the service registry.
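Two properties described above, refreshing on a fixed interval rather than on record TTLs and refreshing without blocking request handling, can be sketched with Python's asyncio. The registry query is a hypothetical coroutine standing in for a DNS or registry lookup, and valid_seconds plays the role that the valid parameter plays for the resolver directive. This is an analogy, not how NGINX is implemented.

```python
import asyncio
from typing import Awaitable, Callable, List


class RefreshingPool:
    """Keeps service endpoints fresh by re-querying a registry on a fixed interval."""

    def __init__(self, query_registry: Callable[[], Awaitable[List[str]]],
                 valid_seconds: float) -> None:
        self.query_registry = query_registry
        self.valid_seconds = valid_seconds  # refresh period we choose, not the record TTL
        self.endpoints: List[str] = []

    async def refresh_forever(self) -> None:
        while True:
            try:
                self.endpoints = await self.query_registry()
            except (OSError, asyncio.TimeoutError):
                pass  # keep serving the last known endpoints if the registry is unreachable
            # Sleeping yields control, so request handling continues between refreshes.
            await asyncio.sleep(self.valid_seconds)
```

Request handlers simply read pool.endpoints; the background task replaces the list as the registry changes.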

Figure 3 shows service discovery using a shared service registry, as described in our post on service discovery.

API Gateway Capability

An API gateway is a favored approach for client communication with the microservices application; a web frontend is another. The API gateway receives requests from clients, performs any needed protocol translation (as with SSL/TLS), and routes them to the appropriate service – using the results of service discovery, as mentioned above.

You can extend the capabilities of an API gateway using a tool such as the Lua module for NGINX Plus. You can, for instance, have code at the API gateway aggregate the results from several microservices requests into a single response to the client.
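The text describes doing this with the Lua module; purely to illustrate the fan-out-and-merge pattern, here is a Python asyncio sketch instead. The service names, the user ID parameter, and the response shape are hypothetical. In a real gateway, each call would be an HTTP request to a service instance chosen through service discovery.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict

ServiceCall = Callable[[str], Awaitable[Dict[str, Any]]]


async def aggregate_profile(user_id: str, services: Dict[str, ServiceCall]) -> Dict[str, Any]:
    """Call several services concurrently and merge their replies into one response."""
    names = list(services)
    # gather() runs the calls concurrently and returns results in argument order.
    results = await asyncio.gather(*(services[name](user_id) for name in names))
    return {name: result for name, result in zip(names, results)}
```

Because the calls run concurrently, the client waits roughly as long as the slowest service rather than the sum of all of them, and it makes a single round trip to the gateway instead of one per service.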

The Proxy Model also takes advantage of the fact that the API gateway is a logical place to handle capabilities that are not specific to microservices, such as caching, load balancing, and the others described in this blog post.

Conclusion

The Proxy Model networking architecture for microservices provides many useful features and a high degree of functionality. NGINX Plus, acting as the reverse proxy server, can provide clear benefits to the microservices application by making the system more robust, resilient, and dynamic. NGINX Plus makes it easy to manage traffic, load balance requests, and dynamically respond to changes in the backend microservices application.

The post Microservices Reference Architecture, Part 2 – The Proxy Model appeared first on NGINX.

Source: Microservices Reference Architecture, Part 2 – The Proxy Model
