New DirectX 12 features in Windows 10 Fall Creators Update

We’ve come a long way since we launched DirectX 12 with Windows 10 on July 29, 2015. Since then, we’ve listened to every bit of feedback and improved the API to enhance stability and offer more versatility. Today, developers using DirectX 12 can build games that have better graphics, run faster and are more stable than ever before. Many games now run on the latest version of our groundbreaking API, and we’re confident that even more anticipated, high-end AAA titles will take advantage of DirectX 12.

DirectX 12 is ideal for powering games on PC and on Xbox. Simply put, our consoles work best with our software: DirectX 12 is perfectly suited for native 4K games on the Xbox One X, the most powerful console on the market.

In the Windows 10 Fall Creators Update, we’ve added features that make it easier for developers to debug their code. In this article, we’ll explore how these features work and recap what we added in the Windows 10 Creators Update.

But first, let’s cover how debugging a game or a program utilizing the GPU is different from debugging other programs.

As covered previously, DirectX 12 offers developers unprecedented low-level access to the GPU (check out Matt Sandy’s detailed post for more info). This lets developers write code that’s substantially faster and more efficient, but it comes at a cost: the API is more complicated, which means there are more opportunities for mistakes.

Many of these mistakes happen GPU-side, which makes them much more difficult to fix. When the GPU crashes, it can be hard to determine exactly what went wrong, and we’re often left with little more than a cryptic error message. These error messages tend to be vague because of the inherent differences between CPUs and GPUs. Readers familiar with how GPUs work should feel free to skip the next section.

The CPU-GPU Divide

Most of the processing in your machine happens on the CPU, a component designed to handle almost any computation it’s given. It does many things, and for some operations it trades efficiency for versatility. This is why GPUs exist: to outperform the CPU at the kinds of calculations that power today’s graphically intensive applications. Rendering calculations (i.e. the math behind generating images from 2D or 3D objects) are small and numerous, so performing them in parallel makes much more sense than doing them one after another, and parallel work of this kind is exactly what the GPU excels at. This is why game logic, which often involves long, varied and complicated computations, runs on the CPU, while rendering happens GPU-side.

Even though applications run on the CPU, many modern applications rely heavily on the GPU. These applications send instructions to the GPU and then receive processed work back. For example, an application that uses 3D graphics will tell the GPU the positions of every object that needs to be drawn. The GPU will then move each object to its correct position in the 3D world, take into account things like lighting conditions and the position of the camera, and do the math to work out what all of this should look like from the user’s perspective. Finally, the GPU produces the image to be displayed on the system’s monitor.

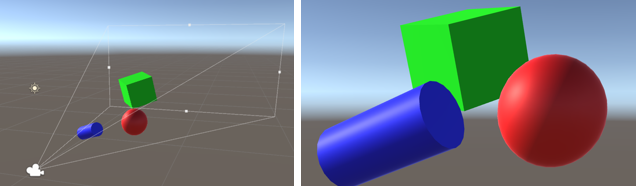

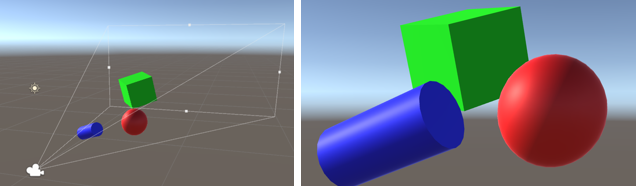

To the left, we see a camera, three objects and a light source in Unity, a game development engine. To the right, we see how the GPU renders these 3-dimensional objects onto a 2-dimensional screen, given the camera position and light source.

For high-end games with thousands of objects in every scene, this process of turning complicated 3-dimensional scenes into 2-dimensional images happens at least 60 times a second, which would be impractical using the CPU alone!
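A quick back-of-the-envelope calculation shows how demanding this is. Assuming a 60 frames-per-second target and a native 4K (3840 by 2160) output with at least one computed color per pixel (real games typically do considerably more work per pixel), the budget works out as:

$$
\begin{aligned}
t_{\text{frame}} &= \frac{1000\ \text{ms}}{60} \approx 16.7\ \text{ms} \\
N_{\text{pixels}} &= 3840 \times 2160 = 8{,}294{,}400\ \text{per frame} \\
N_{\text{pixels}} \times 60 &= 497{,}664{,}000\ \text{per second}
\end{aligned}
$$

That is roughly half a billion pixel results every second, each of which may involve lighting and texturing math, all delivered within a window of about 16.7 ms per frame.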

Because of hardware differences, the CPU can’t talk to the GPU directly: when GPU work needs to be done, CPU-side orders need to be translated into native machine instructions that the system’s GPU can understand. This work is done by hardware drivers, but because each GPU model is different, the instructions delivered by each driver are different too! Don’t worry, though: here at Microsoft, we devote a substantial amount of time to making sure that GPU manufacturers (AMD, Nvidia and Intel) provide drivers that DirectX can communicate with across devices. This is one of the things our API does; you can think of DirectX as the software layer between the CPU and the GPU hardware drivers.

Device Removed Errors

When games run error-free, DirectX simply sends orders (commands) from the CPU via hardware drivers to the GPU. The GPU then sends processed images back. After commands are translated and sent to the GPU, the CPU cannot track them anymore, which means that when the GPU crashes, it’s really difficult to find out what happened. Finding out which command caused it to crash used to be almost impossible, but we’re in the process of changing this, with two awesome new features that will help developers figure out what exactly happened when things go wrong in their programs.

One kind of error, known as a device removed or device lost error, occurs when the GPU becomes temporarily unavailable to the application. Most of these errors happen when a driver update occurs in the middle of a game, but sometimes they are caused by mistakes in the programming of the game itself. Once the device has been logically removed, communication between the GPU and the application is terminated and access to GPU data is lost.

Improved Debugging: Data

During the rendering process, the GPU writes to and reads from data structures called resources. Because moving data between the CPU and GPU takes time, if we already know that the GPU is going to use the same data repeatedly, we might as well put that data straight into GPU memory. In a racing game, a developer will likely want to do this for all the cars and the track they’re racing on, and all of this data is stored in resources. To draw just a single frame, the GPU may write to and read from many thousands of resources.

Before the Fall Creators Update, applications had no direct control over the underlying resource memory. However, there are rare but important cases where applications may need to access resource memory contents, such as right after device removed errors.

We’ve implemented a feature that does exactly this. With access to the contents of resource memory, developers have substantially more useful information to help them determine exactly where an error occurred. That means less time spent hunting for the causes of errors and more time to fix them across systems.

For technical details, see the OpenExistingHeapFromAddress documentation.

Improved Debugging: Commands

We’ve implemented another feature designed to be used alongside the previous one. It can be used to create markers that record which commands sent from the CPU have already finished executing and which ones are still executing. Right after a crash, even a device removed crash, this information remains behind, so we can quickly figure out which commands might have caused it. This can significantly reduce the time needed for game development and bug fixing.

For technical details, see the WriteBufferImmediate documentation.

What does this mean for gamers? These tools give developers direct ways to detect and report the root causes of what’s going on inside your machine. It’s like the difference between trying to figure out what’s wrong with your pickup truck from the smoke coming out of the front, versus having your Tesla’s internal computer tell you exactly which part failed and needs to be replaced.

Developers using these tools will have more time to build high-performance, reliable games instead of continuously searching for the root causes of a particular bug.

Recap of Creators Update

In the Creators Update, we introduced two new features: Depth Bounds Testing and Programmable MSAA. Whereas the features we rolled out in the Windows 10 Fall Creators Update were mainly aimed at making it easier for developers to fix crashes, Depth Bounds Testing and Programmable MSAA focus on making it easier to program games that run faster with better visuals. These features can be seen as additional tools in a DirectX developer’s already extensive tool belt.

Depth Bounds Testing

Assigning depth values to pixels is a technique with a variety of applications: once we know how far away pixels are from a camera, we can throw away the ones too close or too far away. The same can be done to figure out which pixels fall inside and outside a light’s influence (in a 3D environment), which means that we can darken and lighten parts of the scene accordingly. We can also assign depth values to pixels to help us figure out where shadows are. These are only some of the applications of assigning depth values to pixels; it’s a versatile technique!

We now enable developers to specify a minimum and a maximum depth value; pixels whose depth, as already stored in the depth buffer, falls outside this range are discarded. Because this test is now an integral part of the API, and the API is closer to the hardware than any software written on top of it, discarding pixels that don’t meet the depth requirements can now happen faster and more efficiently than before.

Simply put, developers will now be able to make better use of depth values in their code, freeing up GPU time for work on pixels, or parts of the image, that aren’t going to be thrown away.

For gamers, this extra tool in developers’ hands means that games will be able to do more in every scene.

For technical details, see the OMSetDepthBounds documentation.

Programmable MSAA

Before we explore this feature, let’s first discuss anti-aliasing.

Aliasing refers to the unwanted distortions that happen during the rendering of a scene in a game. There are two kinds of aliasing that happen in games: spatial and temporal.

Spatial aliasing refers to the visual distortions that happen when an image is represented digitally. Because the pixels on a monitor or television screen are not infinitely small, lines that aren’t perfectly vertical or horizontal can’t be represented exactly; instead, most lines on our screen are only approximations of straight lines. Sometimes this illusion breaks down and shows up as stair-like rough edges, or ‘jaggies’. Spatial anti-aliasing refers to the techniques that programmers use to make these kinds of edges smoother and less noticeable.

One solution to these distortions is baked into the API: hardware-accelerated MSAA (Multi-Sample Anti-Aliasing), an efficient anti-aliasing technique that combines quality with speed. MSAA tests whether geometry covers several sample points within each pixel and blends the results, so pixels along an edge get intermediate colors. Before the Creators Update, developers could already use DirectX to enable MSAA and specify its granularity (the number of samples taken per pixel).

Side-by-side comparison of the same scene with spatial aliasing (left) and without (right). Notice in particular the jagged outlines of the building and sides of the road in the aliased image. This still was taken from Forza Motorsport 6: Apex.

But what about temporal aliasing? Temporal aliasing happens over time and is caused by the sampling rate (the number of frames drawn per second) being too low for the movement happening in the scene. To the user, things in the scene jump around instead of moving smoothly. This YouTube video does an excellent job showing what temporal aliasing looks like in a game.

In the Creators Update, we give developers more control over MSAA by making it much more programmable: for each frame, developers can specify where MSAA takes its samples within each pixel. By alternating these sample positions from frame to frame, developers can make the effects of temporal aliasing significantly less noticeable.

Programmable MSAA means that developers have a useful tool in their belt. Our API not only has native spatial anti-aliasing but now also has a feature that makes temporal anti-aliasing a lot easier. With DirectX 12 on Windows 10, PC gamers can expect upcoming games to look better than before.

For technical details, see the SetSamplePositions documentation.

Other Changes

Besides several bug fixes, we’ve also been updating our graphics debugging software, PIX, every month to help developers optimize their games. Check out the PIX blog for more details.

Once again, we appreciate the feedback shared on DirectX 12 to date, and look forward to delivering even more tools, enhancements and support in the future.

Happy developing and gaming!

Source: New DirectX 12 features in Windows 10 Fall Creators Update
