NGINX and IoT: Adding Protocol Awareness for MQTT

This post is adapted from a presentation at nginx.conf 2017 by Liam Crilly, Director of Product Management at NGINX, Inc. You can view the complete presentation on YouTube.

Table of Contents

| Time | Section |
|---|---|
| 0:18 | stream, nginScript, and MQTT |
| 0:59 | NGINX User Survey |
| 2:19 | IoT Developer Survey |
| 3:03 | TCP/UDP Load Balancing |
| 3:57 | How to load balance MQTT – Let’s Get Started |
| 6:43 | CONNACK |
| 7:46 | Demo |
| 7:59 | VerneMQ and Contiki |
| 9:23 | MQTT Device |
| 10:56 | TCP/UDP Upstreams |
| 12:03 | Chipsets |
| 12:47 | HTTP interface |
| 15:53 | Session Persistence |
| 17:23 | NGINX Plus and JavaScript |
| 19:56 | proxy_timeout |
| 23:05 | TLS |
| 25:57 | What the Actual Config Looks Like |
| 26:06 | Blinkenlights |
| 28:12 | Adding Protocol Awareness for MQTT |
| 28:47 | Q&A |

I’m Liam Crilly, Director of Product Management at NGINX.

Welcome to the only talk that’s not about the Web – just to be sure you’re in the right place.

0:18 stream, nginScript, and MQTT

We won’t see much HTTP in this talk, but we’ll see stream, we’ll see nginScript, and we’ll see some MQTT.

But first, a hockey stick, because this is an IoT (Internet of Things) talk, and you can’t have an IoT presentation without a hockey stick. Depending on which analyst you ask, predictions range from 1 billion to 50 billion IoT devices by 2020. Whichever way you look at it, we’re talking about a whole lot of data from a whole lot of things.

0:59 NGINX User Survey

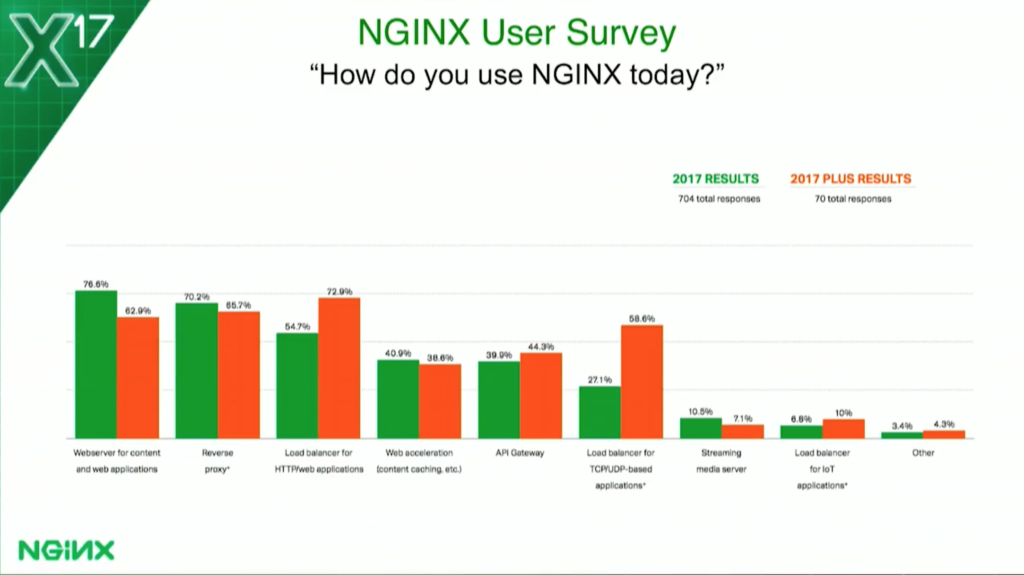

Every year, we ask our open source community and our NGINX Plus customers what they think of NGINX, and how they use it. As you’d expect, the vast majority of use cases are for HTTP functions, applications, and the good stuff you do with NGINX today.

But, as you can just about see, 58% of our NGINX Plus customers are also load balancing TCP and UDP applications, and 10% of our NGINX Plus customers are also using it for their IoT applications or IoT projects.

Now, can I get a show of hands – is anyone in the room using NGINX’s stream module for Layer 4 TCP/UDP load balancing? All right, I’ll call that 25%. What about IoT today? What about IoT in the future? OK, it’s not a hockey stick, but it’s on its way.

2:19 IoT Developer Survey

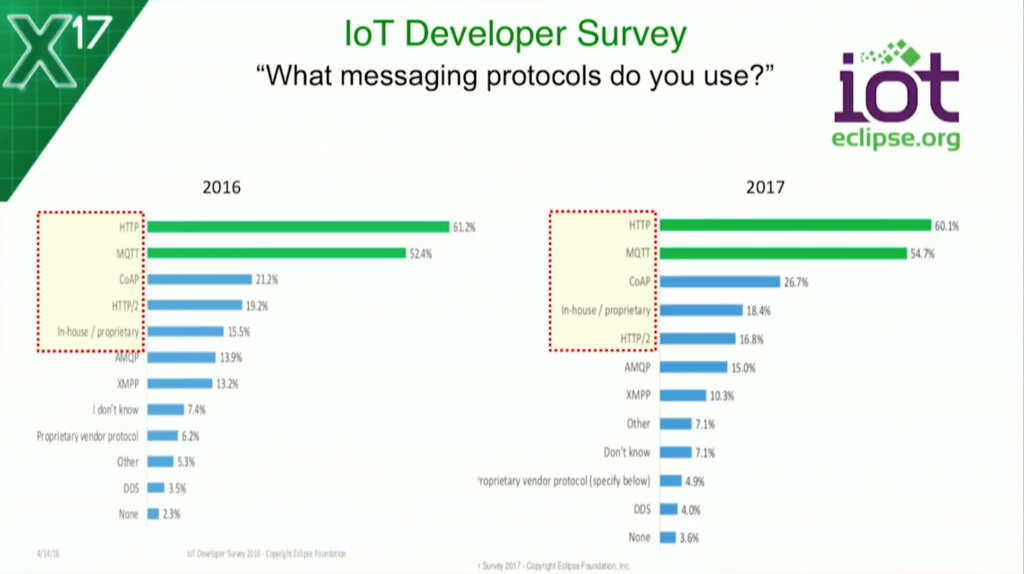

We’re not the only people doing surveys. Every year, the Eclipse Foundation asks their IoT community a whole bunch of stuff about their IoT projects. This question is particularly interesting to me: “What messaging protocols do you use in your IoT projects?”

It’s no surprise to see HTTP at the top of the list, but it’s also fair to say that MQTT is leading the way among IoT-specific messaging protocols. CoAP is gaining popularity too: it’s up five points in the last year, so we’ve seen some momentum there. Of course, both protocols can be proxied and load balanced with NGINX.

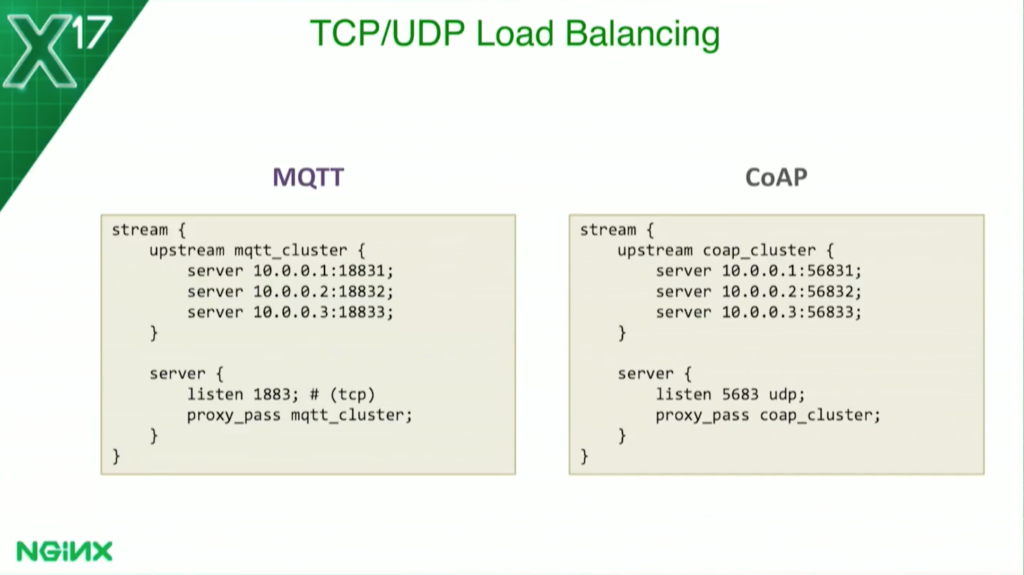

3:03 TCP/UDP Load Balancing

It’s as simple as this: we have a stream block for Layer 4 traffic. Inside it, we put an upstream group – just as you would for HTTP – that defines the IP address and port each server is running on. Then we add a server block for our frontend, specifying a listen port for the protocol.

MQTT defaults to port 1883 and CoAP to port 5683. You’ll notice that we add the udp parameter to the listen directive when we want to listen on UDP; TCP is the default.

Then we proxy_pass anything that comes our way to the backend upstream group. If you want to put NGINX in front of your MQTT or CoAP servers, this config will get you started straightaway. This is all you need.
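
To make the Layer 4 model concrete, here is a toy Python sketch of what a stream server block with proxy_pass does conceptually. It accepts a TCP connection and chooses a server from the upstream group using round-robin, which is NGINX’s default method. It then copies bytes in both directions without ever parsing them. This is an illustration only, not how NGINX is implemented, and it covers TCP only. The broker addresses and the ports 18831 to 18833 are assumptions for the example.

```python
import asyncio
import itertools

# Hypothetical upstream group standing in for the MQTT brokers an NGINX
# `upstream` block would list (addresses and ports are assumptions).
MQTT_UPSTREAMS = [("127.0.0.1", 18831), ("127.0.0.1", 18832), ("127.0.0.1", 18833)]


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes in one direction until EOF; the payload is never parsed."""
    try:
        while True:
            data = await reader.read(4096)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except ConnectionError:
        pass  # the other side went away; stop relaying
    finally:
        writer.close()


def make_handler(upstreams):
    """Build a per-connection handler that picks upstreams round-robin."""
    rr = itertools.cycle(upstreams)

    async def handle(client_r: asyncio.StreamReader, client_w: asyncio.StreamWriter) -> None:
        host, port = next(rr)
        try:
            up_r, up_w = await asyncio.open_connection(host, port)
        except OSError:
            client_w.close()  # NGINX can retry another server; this sketch just gives up
            return
        # Relay both directions concurrently, like proxy_pass in a stream server block.
        await asyncio.gather(pipe(client_r, up_w), pipe(up_r, client_w))

    return handle


async def main() -> None:
    # Listen on the default MQTT port, like `listen 1883;`.
    server = await asyncio.start_server(make_handler(MQTT_UPSTREAMS), "0.0.0.0", 1883)
    async with server:
        await server.serve_forever()
```

Running `asyncio.run(main())` would spread incoming connections across the three hypothetical brokers. Because the relay never looks inside the bytes, it can’t make any MQTT-aware decision.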

3:57 How to load balance MQTT – Let’s Get Started

But this talk’s about how to load balance MQTT, so let’s get into that. MQTT is a pub/sub messaging protocol. With MQTT, you have a broker (middleware) acting as a routing agent for messages.

And then you have clients. They can either publish messages, subscribe to messages, or do both. The reason I think MQTT has become so popular for IoT projects is that it’s simple. It’s simple, but it’s as complex as it needs to be. Messages are very compact, and therefore, it uses the network very efficiently. That reminds me of NGINX, and I think it’s a really nice fit.
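
As a rough illustration of the broker’s routing role, here is a minimal in-memory Python sketch. Clients subscribe with topic filters, and the broker delivers each published message to every matching subscriber. The `+` (one level) and `#` (remaining levels) wildcard rules follow the MQTT 3.1.1 spec. The `TinyBroker` class and the topic names are hypothetical. A real broker adds networking, QoS, sessions, and retained messages.

```python
from typing import Callable, List, Tuple

# A subscriber callback receives (topic, payload).
Callback = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Match a topic name against an MQTT topic filter.

    '+' matches exactly one level; a trailing '#' matches the parent level
    and any number of child levels. Simplification: '$' topics get no
    special treatment here.
    """
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True
        if i >= len(t_levels):
            return False
        if level != "+" and level != t_levels[i]:
            return False
    return len(f_levels) == len(t_levels)


class TinyBroker:
    """Routes each published message to every subscriber whose filter matches."""

    def __init__(self) -> None:
        self._subs: List[Tuple[str, Callback]] = []

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self._subs.append((topic_filter, callback))

    def publish(self, topic: str, payload: bytes) -> int:
        delivered = 0
        for topic_filter, callback in self._subs:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)
                delivered += 1
        return delivered


# Usage: a back-office app subscribes to every sensor's temperature topic.
broker = TinyBroker()
received: List[Tuple[str, bytes]] = []
broker.subscribe("sensors/+/temp", lambda t, p: received.append((t, p)))
broker.publish("sensors/3/temp", b"21.5")   # routed to the subscriber
broker.publish("sensors/3/light", b"312")   # no matching filter, dropped
```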

You’ll be pleased to hear that this talk has some live demo content. That’s exciting for you, and it’s exciting for me, especially because I wrote some code for this. It’s still kind of buggy, and we may need to do a restart. It’s going to be fun. We’ll see how we go. But first things first. We’re going to do some basic load balancing and we’re going to use health checks.

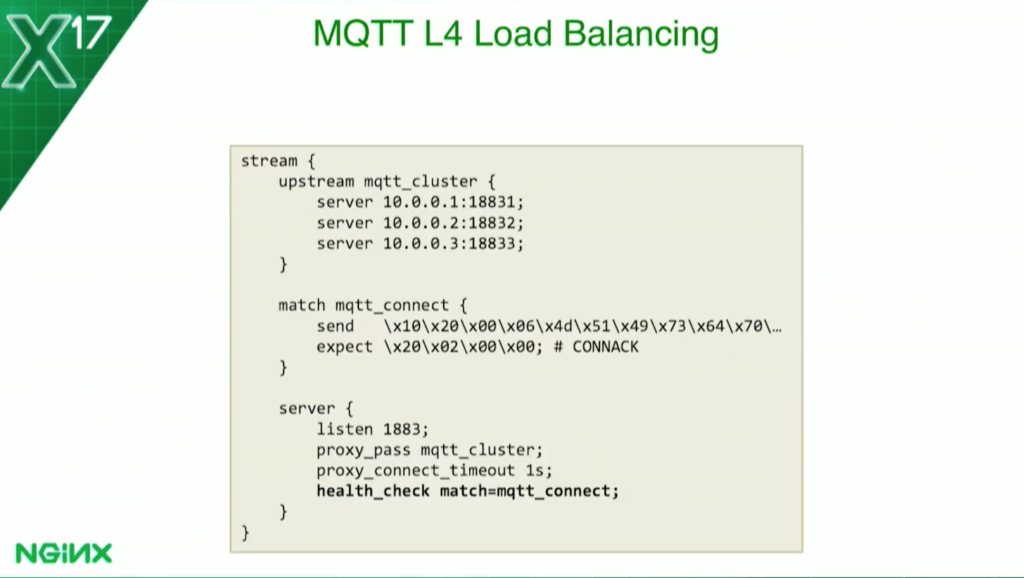

It’s simple. We start from the config we had before. In our server block down there, we’ve also specified a health check. By default, an NGINX Plus health check just checks that the port on each of the servers is available, by completing a TCP handshake.

But that’s not good enough. We want to make sure that when we do a health check, we’re checking that the upstream server is actually capable of handling MQTT traffic.

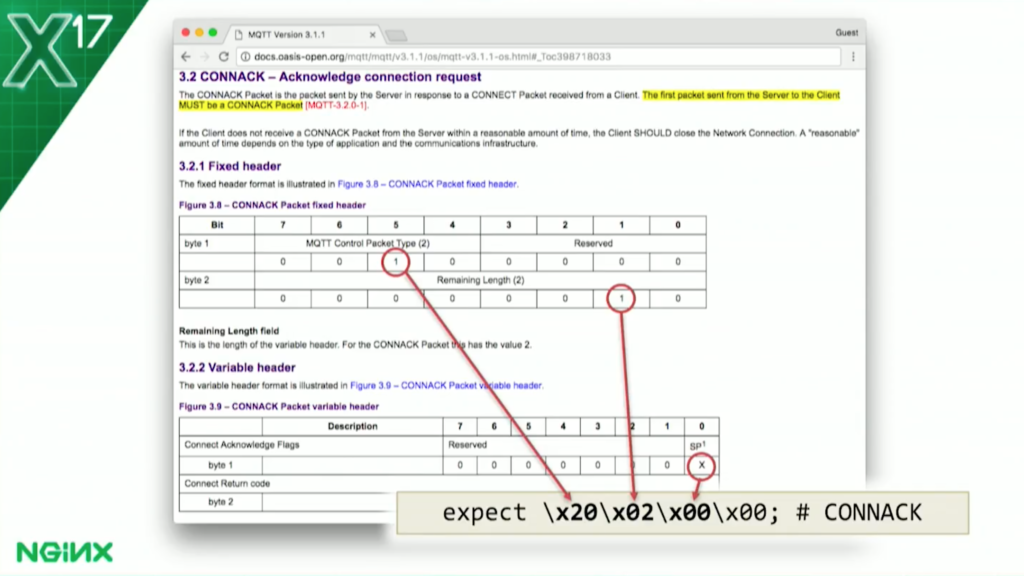

With this health check, we specify the match parameter, pointing to a block called mqtt_connect. That block has a send and an expect. The send string is put onto the network to each of the servers; because MQTT is a binary protocol, we’ve encoded it here as a hex string. It’s an MQTT CONNECT packet, just like any other MQTT software would send. The expect is the response to that: a connection acknowledgement, or CONNACK. The hex there looks a bit hairy (I actually captured it using tcpdump and Wireshark). But the MQTT protocol is simple enough that we can construct the expect string as those 4 bytes right there, just by looking at the spec.
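
The CONNECT bytes captured on the slide aren’t reproduced here. Still, a send string can be built from the spec rather than captured. Here is a Python sketch that assembles a minimal MQTT 3.1.1 CONNECT packet with clean session, no credentials, and a 60-second keep-alive. It prints the packet in the `\xNN` hex notation that a match block’s send and expect strings accept. The client ID `nginx` and the keep-alive value are assumptions for the example.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, high bit = more bytes follow."""
    if not 0 <= n <= 268_435_455:
        raise ValueError("remaining length out of range")
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        if n:
            byte |= 0x80
        out.append(byte)
        if not n:
            return bytes(out)


def mqtt_string(s: str) -> bytes:
    """UTF-8 string prefixed with its 2-byte big-endian length."""
    data = s.encode("utf-8")
    return len(data).to_bytes(2, "big") + data


def connect_packet(client_id: str, keep_alive: int = 60) -> bytes:
    """Minimal MQTT 3.1.1 CONNECT: clean session, no will, no username/password."""
    variable_header = (
        mqtt_string("MQTT")              # protocol name
        + bytes([0x04])                  # protocol level 4 = MQTT 3.1.1
        + bytes([0x02])                  # connect flags: Clean Session only
        + keep_alive.to_bytes(2, "big")  # keep-alive interval in seconds
    )
    body = variable_header + mqtt_string(client_id)  # payload: the client ID
    return bytes([0x10]) + encode_remaining_length(len(body)) + body  # type 1 = CONNECT


def nginx_hex(data: bytes) -> str:
    """Render bytes as \\xNN escapes for a match block's send/expect string."""
    return "".join(f"\\x{b:02x}" for b in data)


print(nginx_hex(connect_packet("nginx")))
# \x10\x11\x00\x04\x4d\x51\x54\x54\x04\x02\x00\x3c\x00\x05\x6e\x67\x69\x6e\x78
```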

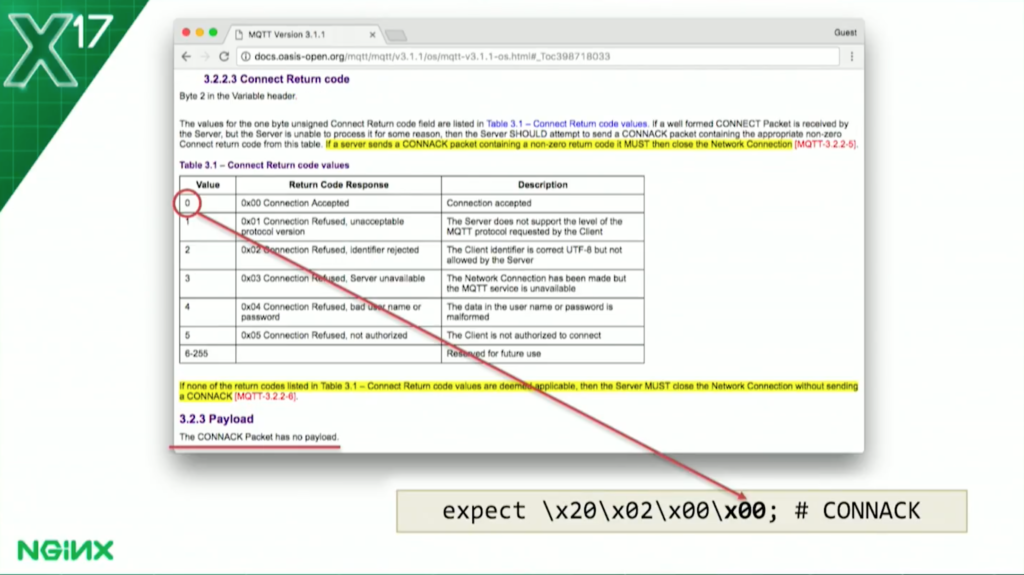

6:43 CONNACK

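This is how I did it. Byte 1 carries the packet type, 2 for CONNACK, in its upper four bits. That sets bit 5, so it’s x20. Byte 2 is the remaining length, 2, which sets bit 1, so it’s x02. Byte 3, in the variable header, holds the acknowledge flags and is going to be x00. The health check asks for a clean session, so the broker reports no session present. Each health check is going to look like a brand new connection to the MQTT broker.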

Finally, Byte 4 is the Connect Return Code, and x00 means connection accepted. If we get x00 in Byte 4, we know we’re good. Now, it’s possible for the MQTT broker to be up and running but still respond with a nonzero return code, such as x03 (Server unavailable). That code says it can’t accept new connections, for example because it’s too busy.

The important point here is that we’re defining a health check where a successful response says three things: I can accept a TCP connection, I can handle your CONNECT, and I’m able to accept more connections. So, that’s the setup.
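
The 4-byte expect string amounts to the following check, sketched here in Python. It confirms that the reply is a CONNACK with a remaining length of 2, no session present, and return code x00. The return-code table comes from the MQTT 3.1.1 spec. The function names are hypothetical.

```python
from typing import Tuple

# CONNACK return codes defined by MQTT 3.1.1.
RETURN_CODES = {
    0x00: "Connection Accepted",
    0x01: "Connection Refused, unacceptable protocol version",
    0x02: "Connection Refused, identifier rejected",
    0x03: "Connection Refused, Server unavailable",
    0x04: "Connection Refused, bad user name or password",
    0x05: "Connection Refused, not authorized",
}


def parse_connack(data: bytes) -> Tuple[bool, int]:
    """Return (session_present, return_code) from an MQTT 3.1.1 CONNACK."""
    if len(data) < 4 or data[0] != 0x20 or data[1] != 0x02:
        raise ValueError("not a CONNACK")  # byte 1: type 2; byte 2: remaining length 2
    session_present = bool(data[2] & 0x01)  # byte 3: acknowledge flags
    return session_present, data[3]         # byte 4: return code


def broker_is_healthy(data: bytes) -> bool:
    """Pass only on x20 x02 x00 x00: accepted, with no stale session."""
    try:
        session_present, code = parse_connack(data)
    except ValueError:
        return False
    return code == 0x00 and not session_present


for reply in (b"\x20\x02\x00\x00", b"\x20\x02\x00\x03"):
    _, code = parse_connack(reply)
    print(reply.hex(), broker_is_healthy(reply), RETURN_CODES.get(code, "reserved"))
```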

7:46 Demo

Let’s move on to the demo. As I said, I wrote some code for this, and some of it was in C, which makes it even more hairy. I’m just setting expectations: it might not go too smoothly.

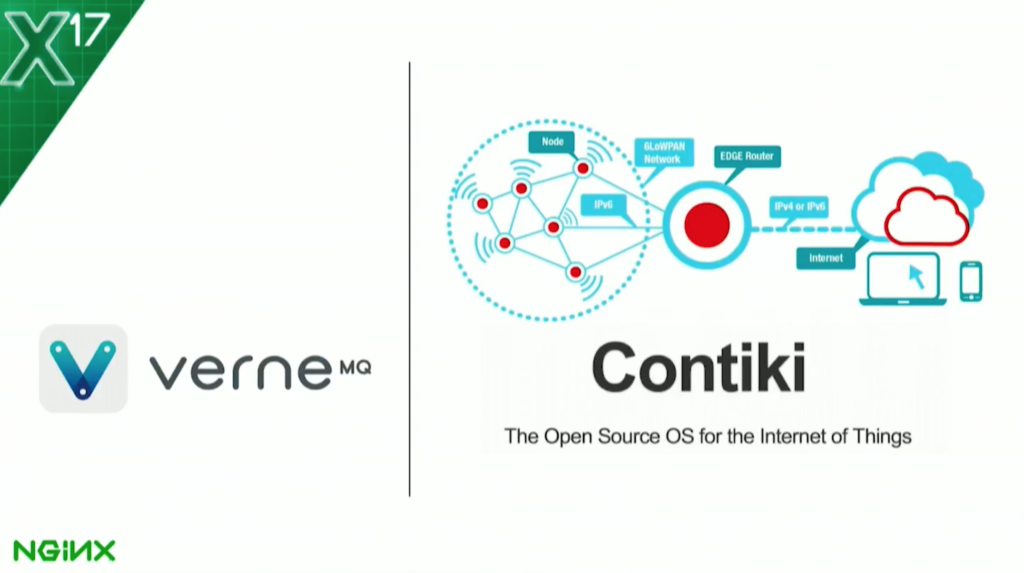

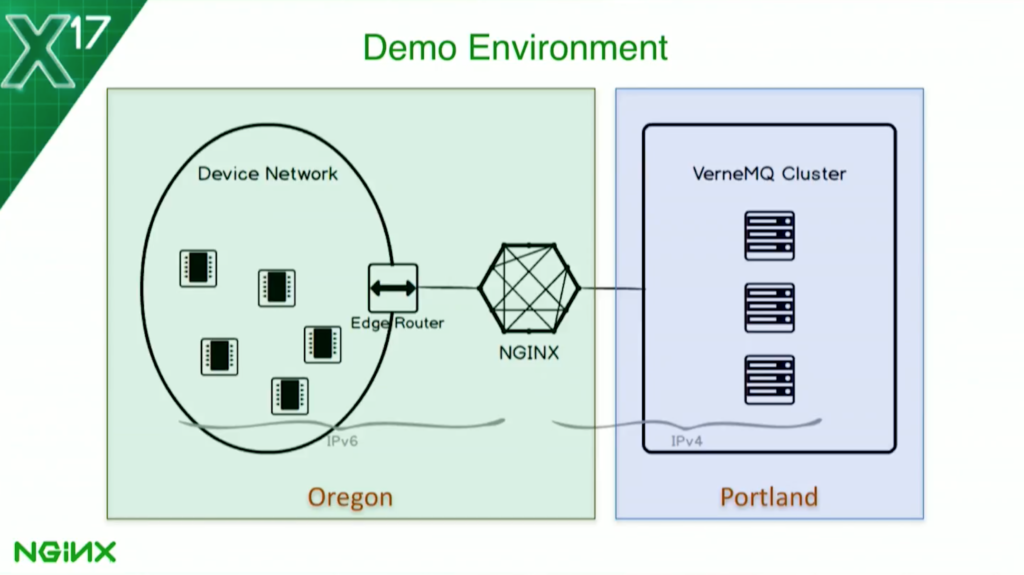

7:59 VerneMQ and Contiki

Before I can show you NGINX load balancing MQTT, I need a whole MQTT IoT environment. I don’t have a dozen Raspberry Pis in my bag that I’m going to pull out and stick on the desk, I don’t have a wireless network because that’s doomed to failure, and I don’t have an MQTT broker in the cloud. I’m going to build a bunch of stuff on my laptop. For the MQTT cluster, I’m going to be using VerneMQ. It’s just one of many open source MQTT brokers out there.

I chose VerneMQ in particular because you can set up a cluster with Docker images. It took about 90 seconds, and that makes it pretty awesome in my book. That’s what we’re using for the MQTT side.

I’m also going to need some IoT devices – some “things” – and for that, I’m using Contiki, a real-time operating system for writing applications on constrained devices – little motors, little sensors.

The cool thing about Contiki is that it also comes with a simulator tool where you can compile and run your code before you push it onto the real physical device. We’ll see what that looks like; it gives me a network, a radio, and all kinds of cool stuff.

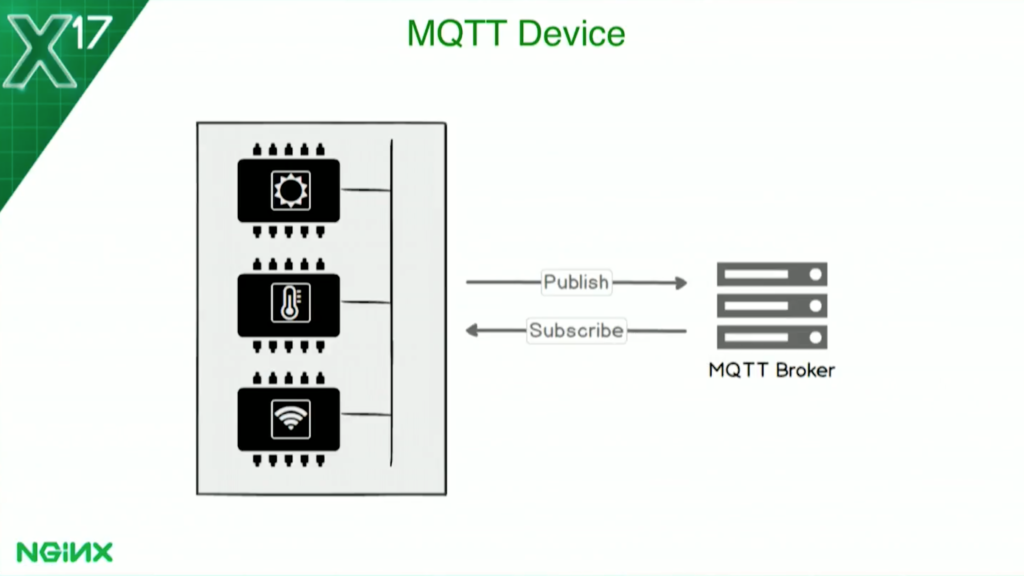

9:23 MQTT Device

This is my IoT device, my “thing”. It’s got a light sensor, a temperature sensor giving out some fake values, and a wireless network. It’s hardwired to make an MQTT connection to my NGINX instance, to publish its sensor data routinely, and to receive commands – so it also subscribes to topics. It’s got two-way communications to the broker.

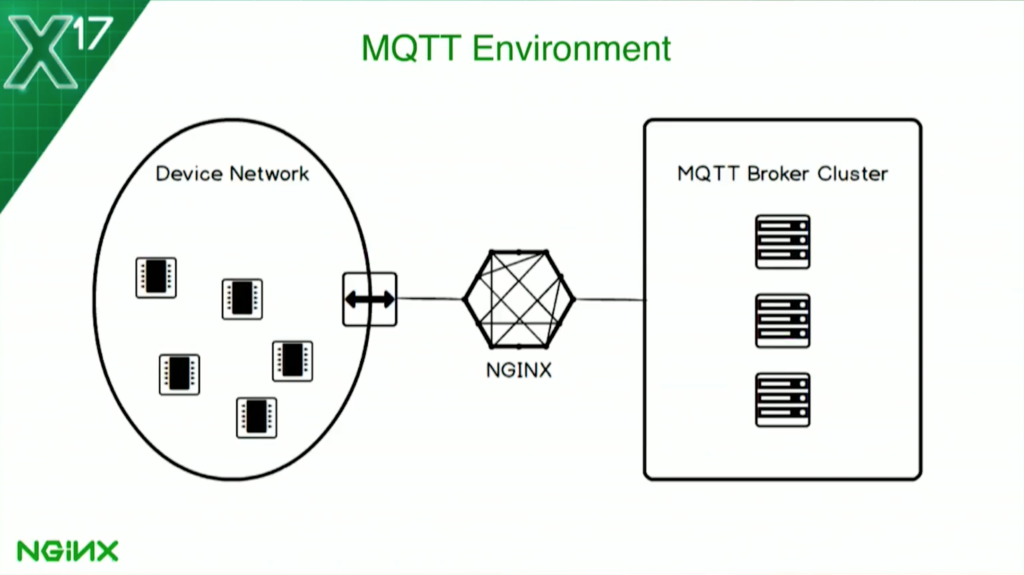

As we saw before, this is what it’s going to look like. I’ve got my devices, I’ve got an edge router which allows the network to flow through onto the regular network on my laptop, and I’ve got my NGINX instance in the middle. In this case, the NGINX instance could either sit as more of an edge server to help these devices get to the IT platform, or it could sit as a frontend load balancer closer to the IT platform itself. It doesn’t really matter. Your use cases vary and it works pretty well in both scenarios.

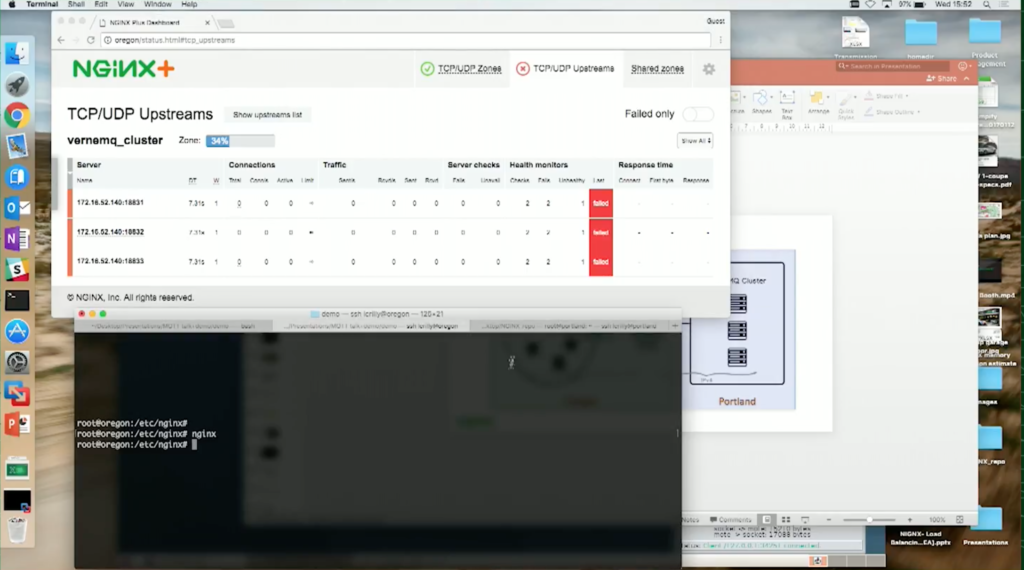

10:56 TCP/UDP Upstreams

With that said, this is where it gets exciting. First, I’m going to need to make sure NGINX is running. It wasn’t, now it is. Our health checks are all failing. Everything is red on the NGINX Plus dashboard. Let’s get a couple of nodes of the cluster up: Docker image 1, Docker image 2. Let’s see if they’re running. They’re running. Let’s see if the cluster is good. Not yet. There we go, two nodes in the cluster. That’s pretty cool.

We should see the dashboard start to be a little bit less red. A couple of our MQTT brokers are up and running and that should be enough to start moving some traffic around.

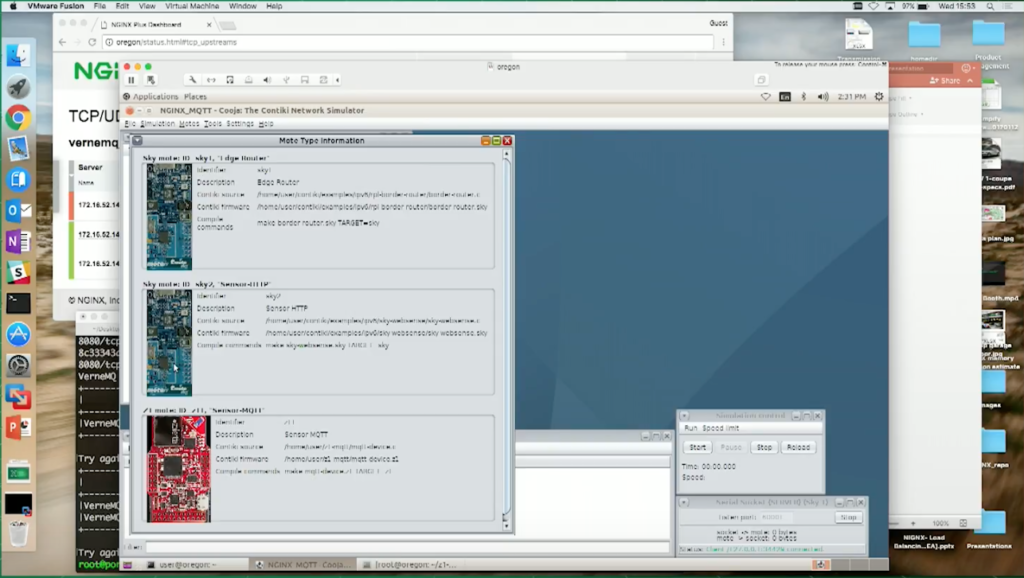

Now, let’s get the sensors up. They are up. I’m going to load a simulation, a Java app that simulates a network of devices running Contiki. We’re going to compile it.

12:03 Chipsets

It’s got three different types of nodes. The first is our edge router on this blue-ish chipset. The second is a small sensor with temperature and light, as I mentioned; the blue one has an HTTP interface, so we can use the web browser to go see it. The third is an MQTT sensor on this red chipset board here. We’re going to see all three in action.

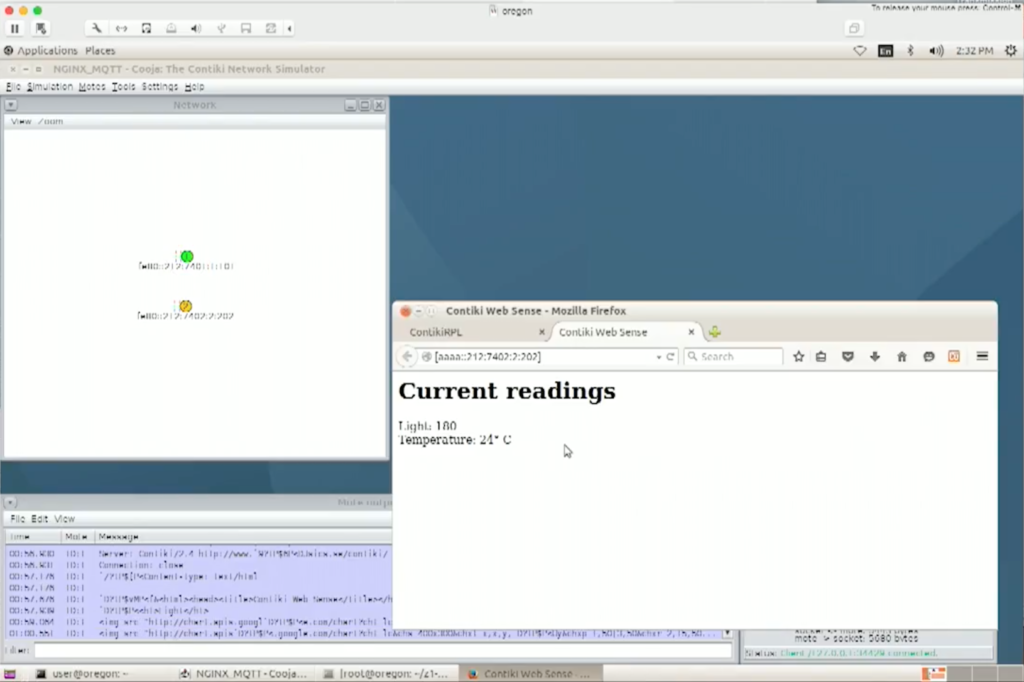

We’ve got these two – this is my edge router in the middle. Let’s start the network. It’ll boot up. They’ll find themselves an IPv6 address, and they’re ready to go. If I start a web browser at that IPv6 address – there’s the router ending in 101, and it can see it’s got a neighbor and a route: 202. Let’s go to that IP address.

12:47 HTTP interface

It’s a sensor. It has the HTTP interface and it’s telling us what its light and temperature readings are. That’s great. Let’s make the MQTT stuff happen. I’m going to add some MQTT sensors – four, for fun. I’m going to put them closer to the router because this is a lossy radio network and it tends to go a little bit ropey if they’re far away.

Let’s start that up again. They’ll boot up. They’ll get an IP address on the network, and because this is the first time I’m doing it live in front of people, we’re going to get a stack trace. Let’s just do that all over. We’ve seen these guys. Let’s add the MQTT guys again.

I’m still going to do four and they’re going to be closer together, real close. Get near to the router. They’re off, registering on the network. As soon as they’re registered, they’re going to try to connect to their hardwired address, which is the NGINX instance. That looks a bit better this time.

Let’s go back to the router web UI and see if it can see them. We’ve got IP addresses that now end in 3, 4, 5, and 6. They are our MQTT devices, and they’re on the network. I’m going to go to my laptop shell here, and this time I’m going to use the Mosquitto MQTT client. It’s really easy.

I’m talking to Oregon here, port 1883, and I’m subscribing to this topic. Sensors 3, 4, and 5 have come online and they’re pushing to the broker. I’m pulling from the broker. They’re all up: 3, 4, 5, and 6. They’re sending data in JSON: a sequence number, an uptime, a light reading, and a temperature reading. Things are looking pretty good.

Now, we still have this down server. Let’s scoot back over here and extend our cluster. I’m going to add a third Docker image, as the config is expecting it. There are now three images running, the cluster has three healthy instances, the health check has passed, and we already have an active connection to our new MQTT broker.

That still hasn’t crashed. This is still pulling data. I have sequence 2, now sequence 3. Everything’s ticking along. Beautiful. I’m going to stop that before it stops itself or chews up all my battery. OK, close that down. Fantastic.

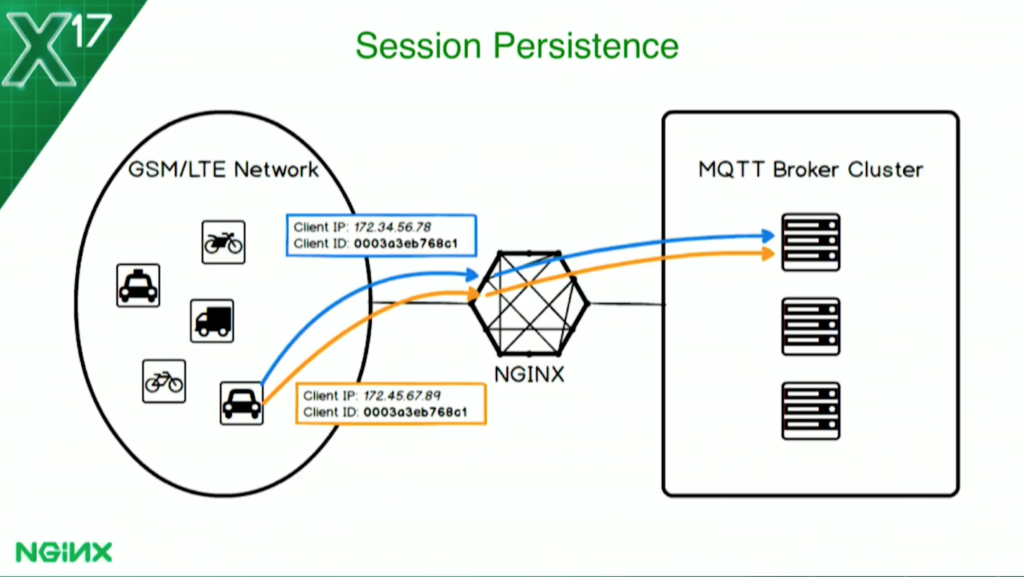

15:53 Session Persistence

We have a happy little IT environment and that’s all great, but real life is more complex. In the real world, IoT devices don’t just pop up on a fixed IPv6 address and stay connected forever. In the real world, things are different.

Take, for example, the device that moves around a lot. If you’re using someone else’s network, then you can’t rely on a static IP address. If you’re using GSM or LTE in particular, you’re going to pick up new IP addresses all the time as you move around. In this situation, session persistence is extremely useful: when the same device reconnects, it gets back to the same MQTT broker in the cluster that it was previously connected to. This means less work for the MQTT broker, or rather for the cluster. A reconnecting client’s session state doesn’t have to be synchronized across the cluster or shuffled between nodes unnecessarily.

This is the scenario. Our vehicle initially connects with the blue IP address. Then it moves around and reconnects, this time with the orange IP address. Both times, it identifies itself with a client ID, a field the MQTT spec requires in every CONNECT packet to identify the device. That stays the same. What we want to do is extend NGINX so that it uses the client ID to make the load-balancing decision, giving us session persistence to the same broker.
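
To see why keying on the client ID gives persistence while keying on the source IP does not, here is a small Python sketch. CRC32 modulo the number of brokers is an illustrative stand-in, not NGINX’s hash implementation. The broker addresses, IP addresses, and client ID are hypothetical.

```python
import zlib
from typing import Sequence

# Hypothetical broker addresses; the ports echo the cluster nodes in the demo.
BROKERS = ["127.0.0.1:18831", "127.0.0.1:18832", "127.0.0.1:18833"]


def pick_broker(key: str, brokers: Sequence[str]) -> str:
    """Deterministically map a key to one broker (simple CRC32 modulo hash)."""
    return brokers[zlib.crc32(key.encode("utf-8")) % len(brokers)]


# The same (hypothetical) vehicle connects twice, from two different IP addresses.
connections = [
    {"source_ip": "198.51.100.7", "client_id": "vehicle-42"},
    {"source_ip": "203.0.113.99", "client_id": "vehicle-42"},
]

for conn in connections:
    by_ip = pick_broker(conn["source_ip"], BROKERS)         # may change with the IP
    by_client_id = pick_broker(conn["client_id"], BROKERS)  # stable across reconnects
    print(conn["source_ip"], "->", by_ip, "| client ID ->", by_client_id)
```

Plain modulo hashing remaps most keys when a broker is added or removed. NGINX’s `hash` directive accepts a `consistent` parameter (ketama consistent hashing) that limits that remapping.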

17:23 NGINX Plus and JavaScript

With nginScript, we can add protocol awareness and Layer 7 functionality for MQTT. nginScript is a dynamic module for NGINX and NGINX Plus. It runs a separate JavaScript VM for each TCP connection, UDP connection, or HTTP request. It means that you can extend the functionality of NGINX by writing regular JavaScript code.

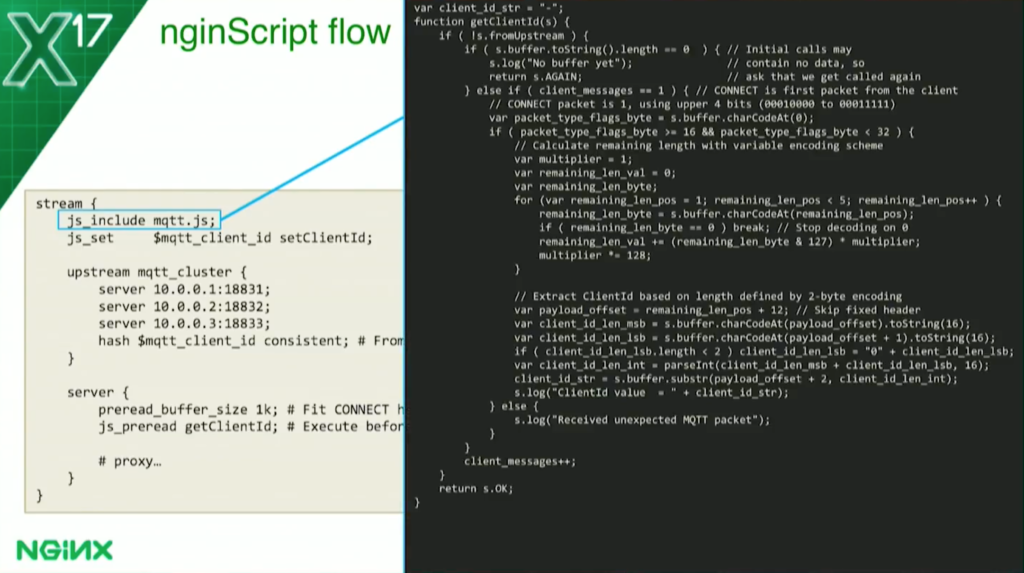

Let’s hook all that together and we’ll see it in action. This is pretty much the same config we had before, only this time, we have the js_include directive, which means that we’re going to pull in some JavaScript code from a file called mqtt.js. That’s it there, in the terminal on the right side of the slide. Brace yourselves. Here it comes.

It’s 20-odd lines of JavaScript. I don’t expect you to read it. Hopefully, it’s so small that you can’t read it. Right at the top, we’ve got a getclientID function. This is called at the preread phase with the js_preread directive in the NGINX Plus configuration. That means that we run this code before proxying and before we load balance, so we can get the information before it’s needed.

What happens here is that we set client_id_str as a global variable, defined at line 1 in the JavaScript code. Then, the hash directive in the NGINX configuration is looking to hash on $mqtt_client_ID, and $mqtt_client_ID is defined by the js_set directive, which calls this little function at the bottom of the JavaScript code, which just exposes the JavaScript variable as an NGINX variable.

This means that every time we receive a new MQTT connection, we look inside the packet, we parse the MQTT, we grab the client_ID variable, we make it available to NGINX, and then we hash that value against three possible MQTT brokers that we have available. For the same client_ID, we’ll always select the same upstream server. And we can see that working.
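
The talk’s mqtt.js isn’t reproduced here. As a rough stand-in, here is a Python sketch of the parsing a preread handler like getclientID has to do: walk the CONNECT fixed header and variable header, then read the client ID at the start of the payload. It assumes MQTT 3.1 or 3.1.1. MQTT 5 inserts properties before the payload, and this sketch doesn’t handle them. Returning `None` for an incomplete packet mirrors the idea that a preread handler waits for more data. The function names are hypothetical.

```python
from typing import Optional, Tuple


def decode_remaining_length(buf: bytes, pos: int) -> Optional[Tuple[int, int]]:
    """Decode MQTT's variable-length integer; return (value, next_pos) or None if incomplete."""
    value, multiplier = 0, 1
    for i in range(4):
        if pos + i >= len(buf):
            return None  # need more bytes
        byte = buf[pos + i]
        value += (byte & 0x7F) * multiplier
        if not byte & 0x80:
            return value, pos + i + 1
        multiplier *= 128
    raise ValueError("malformed remaining length")


def client_id_from_connect(buf: bytes) -> Optional[str]:
    """Extract the client ID from an MQTT 3.1/3.1.1 CONNECT, or None if incomplete."""
    if not buf:
        return None
    if buf[0] >> 4 != 1:  # packet type 1 = CONNECT
        raise ValueError("first packet is not CONNECT")
    decoded = decode_remaining_length(buf, 1)
    if decoded is None:
        return None
    length, pos = decoded
    if len(buf) < pos + length:
        return None  # wait for the rest of the packet
    # Variable header: length-prefixed protocol name, level, flags, keep alive.
    name_len = int.from_bytes(buf[pos:pos + 2], "big")
    pos += 2 + name_len + 1 + 1 + 2
    # The payload starts with the length-prefixed UTF-8 client ID.
    id_len = int.from_bytes(buf[pos:pos + 2], "big")
    return buf[pos + 2:pos + 2 + id_len].decode("utf-8")


packet = bytes.fromhex("101100044d5154540402003c00056e67696e78")
print(client_id_from_connect(packet))       # nginx
print(client_id_from_connect(packet[:10]))  # None (incomplete)
```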

19:56 proxy_timeout

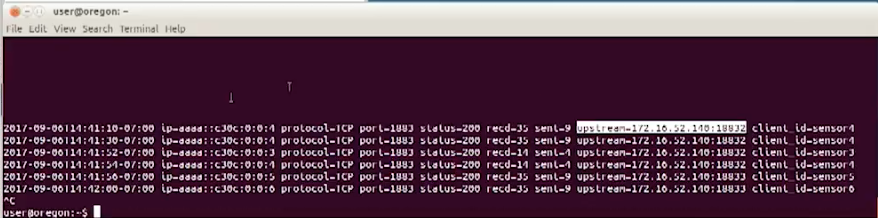

One thing I’m going to do first is edit this proxy_timeout so that NGINX closes idle connections sooner. NGINX writes a stream access log entry when a connection closes, so that way we’ll see some log values. I’ve got logging for MQTT. We’ll reload NGINX. Everything is up. And this time, I’m going to be looking in the access log.

We’ll just restart this simulation again. They’re going to fire up and do exactly the same thing as before. One more time. Get closer. And start. All right, they’re all connecting – not the most reliable – and with any luck, once they’ve finished establishing some sort of connection, they’ll start talking to NGINX, and NGINX will start logging the TCP connections. Yay. That’s the first one.

You can also use the variable that we exposed in the NGINX config as part of a log format, which means that every time we get a connection from a device, we’re logging it. And we can log, as you see here, the client_ID value. What we can see here is that sensor 4 likes to connect to node 2, the one that is listening on port 18832.

And should we see any other sensors come online, we’ll see where they get hashed to as well. The important point here is that when the same device connects, it will always go to the same backend.

Sensor 3 likes 32, sensor 4 still likes 32, and sensor 5 likes 33. Happy days. I’ll quit while I’m ahead on that one and put this back the way I found it.

23:05 TLS

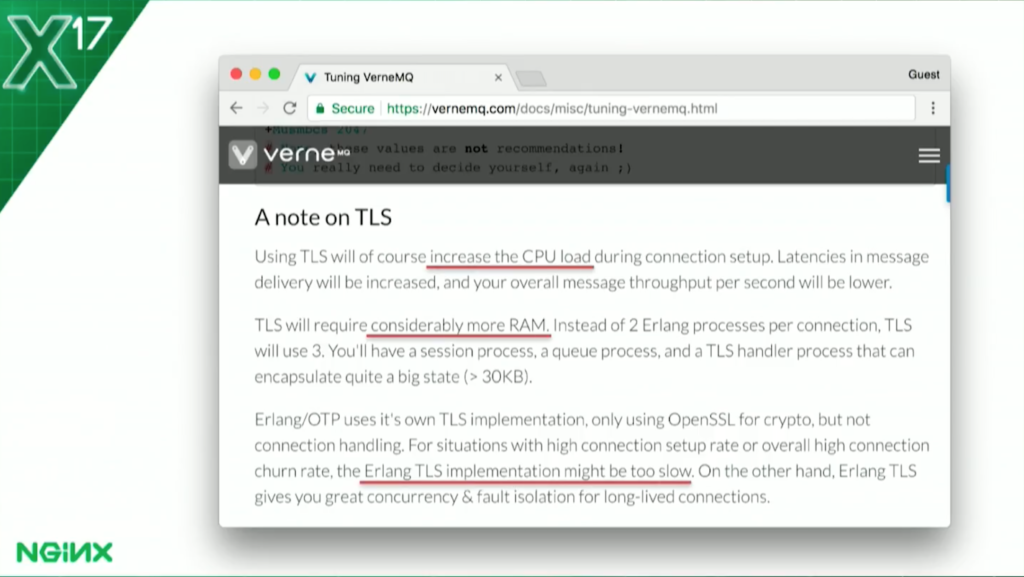

TLS: why do we want TLS? Securing data is one of the big problems facing IoT projects, so adding TLS seems like a no-brainer. But why use NGINX for that? All of the MQTT brokers – at least the ones I’ve come across – support TLS, so we could use that. But then, all the web application servers I’ve come across support TLS as well.

And generally speaking, it’s best practice to offload that to a frontend: a reverse proxy or a load balancer. The same holds true for IoT traffic.

I’m not just picking on VerneMQ here; it’s the same for everything. If you’re performing TLS on the broker, the broker will consume more resources. For best performance, let the broker do the brokering, offload as much as you can to the frontend, and that way, you can scale a load balancer for network and crypto throughput, and scale the broker for clustering and messaging throughput.

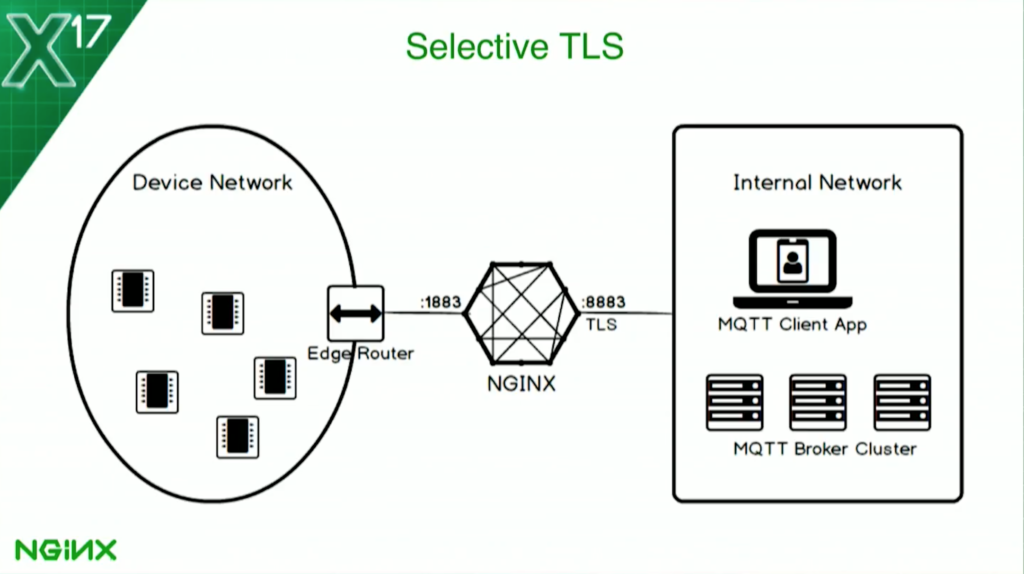

What we’re going to do is use TLS. But you can’t turn TLS on for all connections. Our vehicle has enough compute power to handle TLS, but my little battery-powered sensor on a little chipset doesn’t.

Being pragmatic, what I’m going to do is set up selective TLS. I’m going to have plaintext on my device network – all these guys are doing is publishing permission data. My internal network is different, because that’s where I’ve got a client application that sends commands. There, I’m going to have NGINX listen on the MQTT secure port, 8883, with TLS. Not only am I going to use TLS for data encryption, I’m going to use X.509 client certificates to authenticate the application to the broker. There’s an app for that.

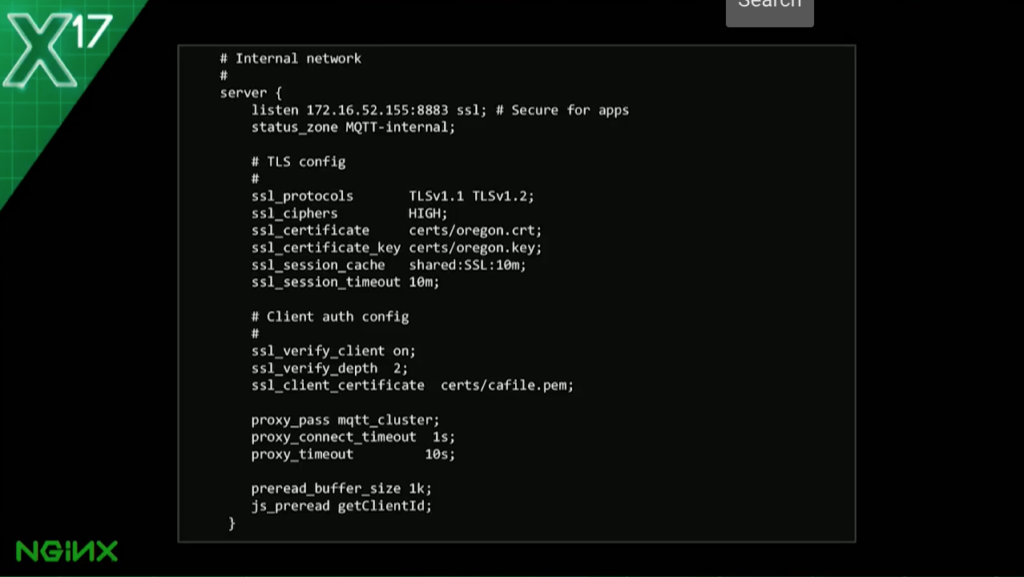

Let’s first look at the config. To do the selective TLS, it’s as simple as splitting our server block into two. This time, I’m being specific. I’m listening on an IPv6 address on port 1883, as before. I’m also listening on an IPv4 address on the internal network, on port 8883 with the ssl parameter, so that we do TLS there.

Then there are a bunch of ssl_* directives that we don’t need to go through here: we’re specifying certificates, cipher suites, protocols, and client certificate verification as well. Then both server blocks proxy_pass to the cluster.
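
The slide’s ssl_* directives aren’t listed here. As an analogy rather than a translation of that config, here is a Python `ssl` sketch of the same policy for the 8883 listener. It enforces a minimum of TLS 1.2 and presents a server certificate. It also requires client certificates issued by a specific CA. The function name and all file names are hypothetical.

```python
import ssl
from typing import Optional


def mqtts_server_context(
    certfile: Optional[str] = None,
    keyfile: Optional[str] = None,
    client_ca: Optional[str] = None,
) -> ssl.SSLContext:
    """TLS policy for a hypothetical port-8883 listener that authenticates clients.

    Loosely analogous to NGINX's ssl_certificate, ssl_certificate_key,
    ssl_client_certificate, and `ssl_verify_client on`.
    """
    # Start from a bare server context so no system CAs are trusted implicitly.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    ctx.verify_mode = ssl.CERT_REQUIRED            # no valid client certificate, no session
    if certfile:
        ctx.load_cert_chain(certfile, keyfile)     # the listener's own certificate and key
    if client_ca:
        ctx.load_verify_locations(cafile=client_ca)  # accept client certs from this CA only
    return ctx
```

With asyncio, `asyncio.start_server(handler, host, 8883, ssl=mqtts_server_context("server.pem", "server.key", "clients-ca.pem"))` would serve the internal network. A second `start_server` call on port 1883, with no `ssl` argument, would serve the plaintext device network. The file names are placeholders.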

25:57 What the Actual Config Looks Like

The actual config looks like this. There’s quite a lot of SSL stuff you need to do, but it’s all well documented and it should all work.

26:06 Blinkenlights

Let’s move to the final demo. I’m going to add some blinking lights to my little device and we’ll see if we can turn them on and off. If we can finish with some blinking lights, I’ll be happy.

NGINX is still running. I’ve got my four devices as before. Let’s get them closer, within range of the edge. I’m looking at the LEDs for each of them. There’s ID 3, 4, 5, and 6. Let’s start this up.

They’re pairing on and they’re trying to connect. They flash green when they’re trying to connect. That’s not too interesting. What I’m going to do is show you my back-office application. It’s called led_on. It’s just a mosquitto command, this time using port 8883 and specifying my PKI stuff and my certificate. The topic this time is command, and I’m sending a message (-m) with the value 1. That means turn on the red LED.

The demo gods are happy with me: we’ve published. We’ve got two LEDs on... three, four LEDs. If I’m really lucky, we can make them blink. That’s good enough. That’s the blinking lights.
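
The led_on command is a mosquitto client invocation, so that client builds the MQTT bytes itself. For illustration, here is a Python sketch of the QoS 0 PUBLISH packet such a command could produce for the topic `command` with payload `1`. The exact topic used in the demo and the QoS level are assumptions. On the wire, this packet travels inside the TLS session that NGINX terminates on port 8883. NGINX then forwards it to the broker, which delivers it to the subscribed devices.

```python
def encode_remaining_length(n: int) -> bytes:
    """MQTT variable-length integer: 7 bits per byte, high bit = more bytes follow."""
    out = bytearray()
    while True:
        byte, n = n % 128, n // 128
        if n:
            byte |= 0x80
        out.append(byte)
        if not n:
            return bytes(out)


def publish_packet(topic: str, payload: bytes, retain: bool = False) -> bytes:
    """Build a QoS 0 MQTT PUBLISH packet (QoS 0 carries no packet identifier)."""
    topic_bytes = topic.encode("utf-8")
    body = len(topic_bytes).to_bytes(2, "big") + topic_bytes + payload
    first_byte = 0x30 | (0x01 if retain else 0x00)  # type 3 = PUBLISH; bit 0 = RETAIN
    return bytes([first_byte]) + encode_remaining_length(len(body)) + body


# Hypothetical equivalent of the demo's "turn on the red LED" message.
packet = publish_packet("command", b"1")
print(packet.hex())  # 300a0007636f6d6d616e6431
```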

28:12 Adding Protocol Awareness for MQTT

This has been about adding protocol awareness for MQTT, load balancing, active health checks, session persistence using nginScript, selective TLS, and client certificate authentication.

28:47 Q&A

Question: Do you think that we’ll see more, or all, IoT devices be able to handle TLS?

Liam Crilly: Absolutely, I think more. I think many will – those which have the compute power. I think we’ll see a lot more. I think anything that’s capable of handling TLS will end up using it. In the same way, many devices that can’t run an IP stack today will move toward being able to run one.

Moore’s Law is almost starting over in the IoT space as the cost of chips comes down. But there are still going to be devices that are battery-powered, that wake up and go to sleep. I think physical constraints, or more to the point power constraints, will keep those from becoming fully secure.

Smaller certs, elliptic-curve cryptography: I think we’ll see a lot more coverage of TLS implementations, and I think that’s the right way to go. DIY crypto is always a disaster. So I think things have to move toward more protocols using TLS, instead of wrapping up some encrypted payload in some other way. And therefore, you’ll be able to do the crypto offload at the frontend. There will be many exceptions, I’m sure, but that’s how I see it going.

The post NGINX and IoT: Adding Protocol Awareness for MQTT appeared first on NGINX.
