nginx.conf 2016 – Keynote with Owen Garrett NGINX: Past, Present, and Future

This post is adapted from the keynote, NGINX: Past, Present, and Future, delivered at nginx.conf 2016 by Owen Garrett, Head of Products at NGINX, Inc. More blog posts and videos from other presentations at the conference will be published in the coming months.

Table of Contents

| 0:00 | Introduction |

| 0:44 | NGINX Conference Two Years Ago |

| 1:39 | 12 Years of NGINX |

| 4:18 | Pace of Innovation in NGINX |

| 5:44 | What Drives the NGINX Community? |

| 7:26 | What’s New in 2016 |

| 10:40 | What’s Next? |

| 10:53 | What Keeps You Awake at Night? |

| 12:16 | Architectural Complexity |

| 13:04 | Platform Complexity |

| 14:39 | Networking Complexity |

| 15:52 | Traditional Approaches Don’t Always Fit |

| 16:56 | What Is NGINX’s Role? |

| 17:48 | Three Future Initiatives |

| 17:57 | nginScript |

| 18:13 | nginScript (cont) |

| 19:58 | NGINX Amplify |

| 26:42 | Microservices Reference Architecture |

| 27:18 | Microservices Reference Architecture (cont) |

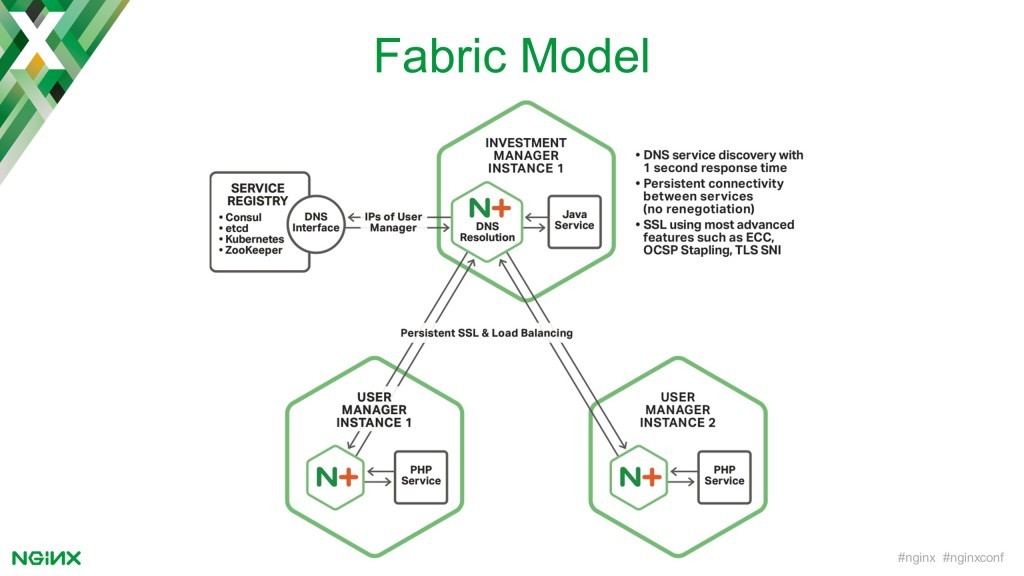

| 28:08 | Fabric Model |

| 28:18 | Fabric Model (cont) |

0:00 Introduction

Hello, and thank you, Igor. There’s a lot of stuff going on with the product and engineering teams at NGINX. What Igor shared with you is just one of the many projects that are currently underway, and I want to share a little bit more in this session. But before I do that, can I also extend my greetings?

Welcome to the conference. It is really encouraging to see the size of the crowd and the faces out here. This morning as I mingled at reception, there were a few faces I recognized, but there were also a lot of new faces. Things have really changed at NGINX over the last couple years.

0:44 NGINX Conference Two Years Ago

Two years ago we held our first conference in a small airport hotel in San Francisco. Back then, we had just a handful of employees. Well, I hope we didn’t put people off that conference. Rather, I think what we’re seeing is the huge growth trajectory of the NGINX community over the last couple of years.

Here, we have end users, we have module developers, we have partners – all critical members of the ecosystem that make NGINX the technology and the product that it is now. NGINX might be a small part of your entire application stack and infrastructure, but it’s absolutely critical to what matters so much to you – flawless delivery of those services.

1:39 12 Years of NGINX

NGINX goes back much further than the two years that we’ve been running conferences and user events like this. Right from the beginning, we were facing the challenge of solving modern application problems. Back in 2004, the problem that Igor was facing – managing the infrastructure for a Russian portal site – was commonly known as the C10K problem.

How can you scale a platform to handle a large volume of requests? Failing to do so was impacting service response time and led to unreliable applications. That challenge was the genesis of the NGINX project. The first open source release was in October 2004, carefully timed to coincide with the 47th anniversary of Sputnik.

NGINX grew slowly for a number of years, but then the trajectory went stratospheric. Three years ago, based on figures from W3Techs, more of the 1,000 busiest sites in the world used NGINX than any other web server or web delivery platform. Two years ago, the same became true of the 10,000 busiest sites in the world.

These are the expert system administrators, the people for whom performance really matters. Last year, more of the 100,000 busiest sites in the world used NGINX than anything else. From Netcraft figures, the chart shows that the number of domains running NGINX has climbed to over 180 million worldwide, and they all depend on this technology.

Igor never set out to dominate the world. He had a problem, he needed to solve it, and that was the beginning of NGINX. We’re all humbled by the growth in the community and by the adoption of our technology.

We never set out to take market share from other players, and we never set out to be the world leader. But a relentless focus on what matters – building the fastest, most efficient, most rock-solid open source web server – is what has driven us to where we are now, enabling you to publish websites and build applications that operate flawlessly.

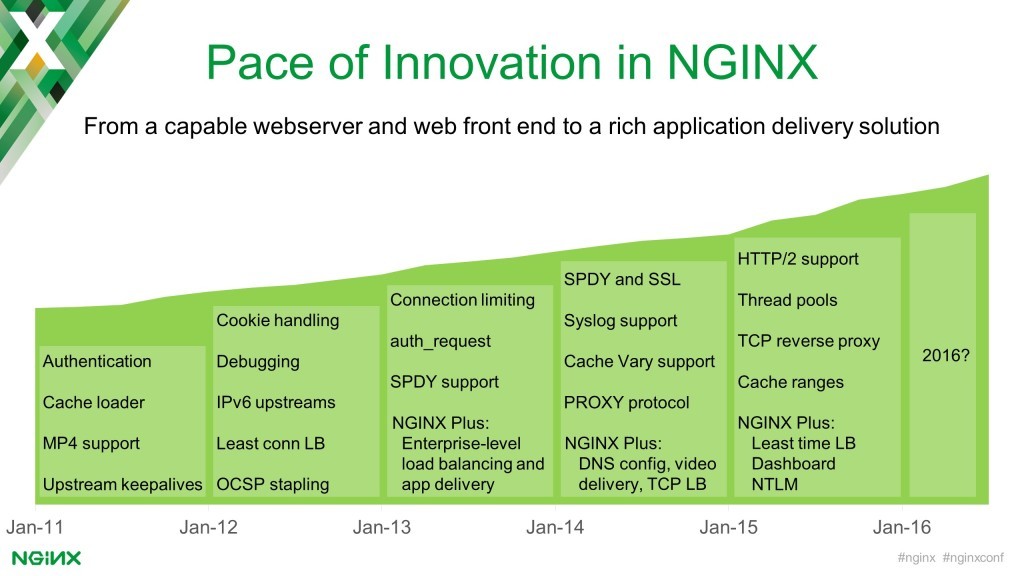

4:18 Pace of Innovation in NGINX

There’s been a steady pace of innovation over the last five years at NGINX, and it’s only growing as we extend the capacity of our engineering team and as we embrace more and more innovation from the community. Over the last few years, you’ll have seen a focus on security, SSL, SPDY, HTTP/2, caching, and authentication. We then released NGINX Plus, our commercial variant with additional capabilities as an application frontend and application delivery controller.

All of this sits underneath the huge contributions from our module developers and community contributors, including fantastic third-party modules like the Lua module that I know many of you depend upon.

In terms of investment in NGINX, it may not seem big. The open source NGINX codebase five years ago was 100,000 lines of code; now [it’s] 180,000 lines of code. It’s not huge, it’s probably smaller than many of the applications that you build, but behind that is a set of core values that I know our users and we share.

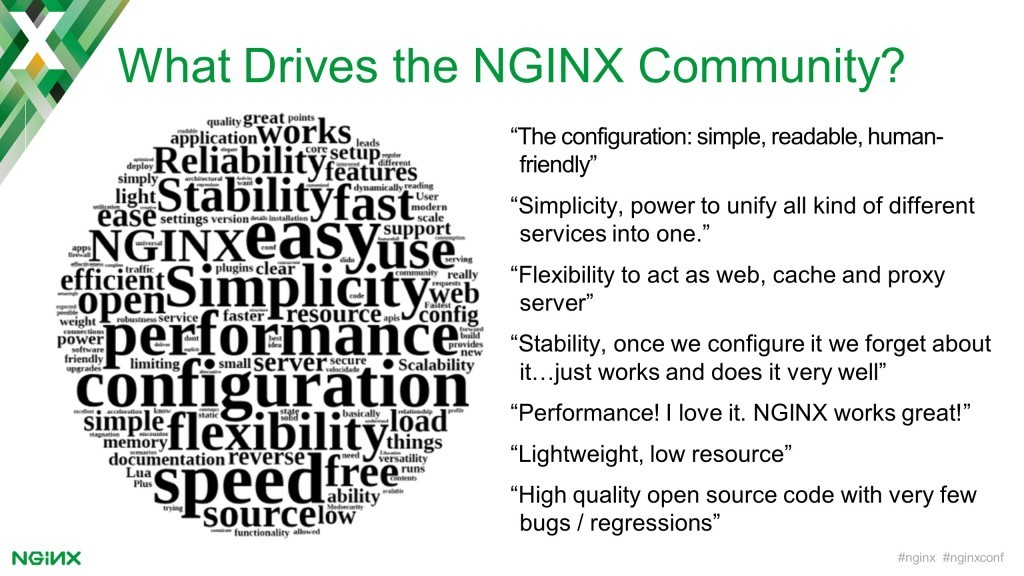

5:44 What Drives the NGINX Community?

Every year we run a survey with our community to find out how we are doing as a company and how the NGINX project is doing. The core values that our users share are the core values that are reflected in our own products: performance, lightweight, simple configuration, easy and rapid deployment, stability, and flexibility to meet a number of different needs such as web caching, reverse proxying, and load balancing.

As members of the NGINX community, you share these values. This is what you buy into, this is what we’re building. If you are a contributor to NGINX, if you’ve tracked the activity on the mailing list, you will have seen the phenomenal degree of scrutiny that goes into every single line of code in NGINX. Also, what you see publicly is just the tip of the iceberg compared to the discussions we have internally to make sure that we are building the best possible technology.

NGINX is a 1 MB binary. It’s 1/10 the size of Apache, it’s 1/30 the size of Node.js, it’s pretty much the same size in lines of code and memory footprint as something as quick and lightweight as the bash shell. This little, lightweight, better technology is driving 180 million websites worldwide. In fact, at a little bit over 1 MB in size, it’s considerably smaller than the average web page it’s asked to serve.

7:26 What’s New in 2016

So what’s new? What’s happened since our last conference and the last status update we gave you? The features that we’ve been developing continue to reflect these core values. We’ve added dynamic modules, which are all about ensuring the core of the product stays lightweight and simple.

Dynamic modules provide you and partners with an easier, reliable way to build new features and to screw them into the core. UDP load balancing speaks to the flexibility of the product, the simplicity of the product, the ability to unify services into one. This came about because we found that more and more of our users were using NGINX as a frontend not just for HTTP traffic, but for other services as well.

They came to us with opportunities and a desire to move out some of their legacy infrastructure and use NGINX more heavily – to front not just the public-facing part of their applications but internal parts as well. These were protocols beyond HTTP. Features like UDP load balancing were generated by that desire.
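As an illustration of the UDP load balancing described above, a minimal configuration might look like the following sketch. The upstream name, the addresses, and the timeout are assumptions chosen for illustration, not values from the talk, and the `load_module` line applies only to builds where the stream module was compiled as a dynamic module.

```nginx
# Only needed when the stream module was built as a dynamic module;
# remove this line for builds that compile it in statically.
load_module modules/ngx_stream_module.so;

events {}

stream {
    # Hypothetical pool of DNS servers behind NGINX.
    upstream dns_backends {
        server 192.0.2.10:53;
        server 192.0.2.11:53;
    }

    server {
        # The "udp" parameter makes this listener accept datagrams instead of TCP.
        listen 53 udp;
        proxy_pass dns_backends;

        # Expect one response datagram per request, and give up after 1 second.
        proxy_responses 1;
        proxy_timeout 1s;
    }
}
```

The same `stream` block can also hold TCP listeners, which is one way to front HTTP and non-HTTP services from a single NGINX instance.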

In our commercial product, NGINX Plus, we focused on flexibility. We added OAuth authentication, and essentially what OAuth authentication does is it teaches NGINX who the individual user behind every single request is. You can do great stuff with NGINX: rate limiting per IP address, shaping traffic based on user agents. With OAuth authentication, you can now do that per individual user.
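The difference between per-IP and per-user rate limiting can be sketched as follows. This assumes that the user identity arrives as a JSON Web Token validated by the NGINX Plus `auth_jwt` directives and that the token's `sub` claim identifies the user. The zone names, rates, key file path, and backend address are hypothetical.

```nginx
events {}

http {
    # Existing capability: one rate-limit bucket per client IP address.
    limit_req_zone $binary_remote_addr zone=per_ip:10m rate=20r/s;

    # Per-user bucket, keyed on the "sub" claim of the presented token
    # (assumption: "sub" identifies the individual user).
    limit_req_zone $jwt_claim_sub zone=per_user:10m rate=5r/s;

    upstream app_backend {
        server 192.0.2.20:8080;   # hypothetical application server
    }

    server {
        listen 80;

        location /api/ {
            # NGINX Plus only: validate the token against a hypothetical key file.
            auth_jwt "api";
            auth_jwt_key_file /etc/nginx/api_keys.jwk;

            # Both limits apply; a request must pass each of them.
            limit_req zone=per_ip burst=40;
            limit_req zone=per_user burst=10;

            proxy_pass http://app_backend;
        }
    }
}
```

Keying the second zone on a claim rather than on the address means that users behind a shared IP address are limited separately.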

When you drop NGINX into a large distributed microservices-like application running in a container environment, then suddenly the challenge of locating services and connecting those services together becomes paramount. Service discovery capabilities speak to that.
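One way such service discovery can be expressed is through DNS, as in the sketch below. It assumes a service registry that publishes SRV records and uses the `resolve` and `service` parameters of the upstream `server` directive, which have historically been NGINX Plus features. The resolver address and the service hostname are hypothetical.

```nginx
events {}

http {
    # Hypothetical DNS endpoint of the service registry; re-resolve every 10 seconds.
    resolver 10.0.0.2 valid=10s;

    upstream orders {
        # A shared memory zone is required so resolved peers can be updated at run time.
        zone orders 64k;

        # Look up SRV records for the "http" service of this name and
        # track instances as containers come and go.
        server orders.service.example.internal service=http resolve;
    }

    server {
        listen 80;

        location /orders/ {
            proxy_pass http://orders;
        }
    }
}
```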

Finally, we recently announced the first release of the ModSecurity [WAF] for NGINX. This is the first official release, built in partnership with Trustwave. The project has been running for a year or two, and Trustwave has been engaged in refactoring the ModSecurity product, which was originally written as an Apache module with shims for other web servers like NGINX.

Trustwave has been refactoring that into a dedicated security library with dedicated connectors for each platform, NGINX being the first on the list. We’ve been working with ModSecurity, supporting their open source efforts through engineering contributions.

Now, we’re at the point where you can take the leading open source web application firewall and combine that with the leading open source high-performance web server and have a fantastic solution bringing both of those together.

10:40 What’s Next?

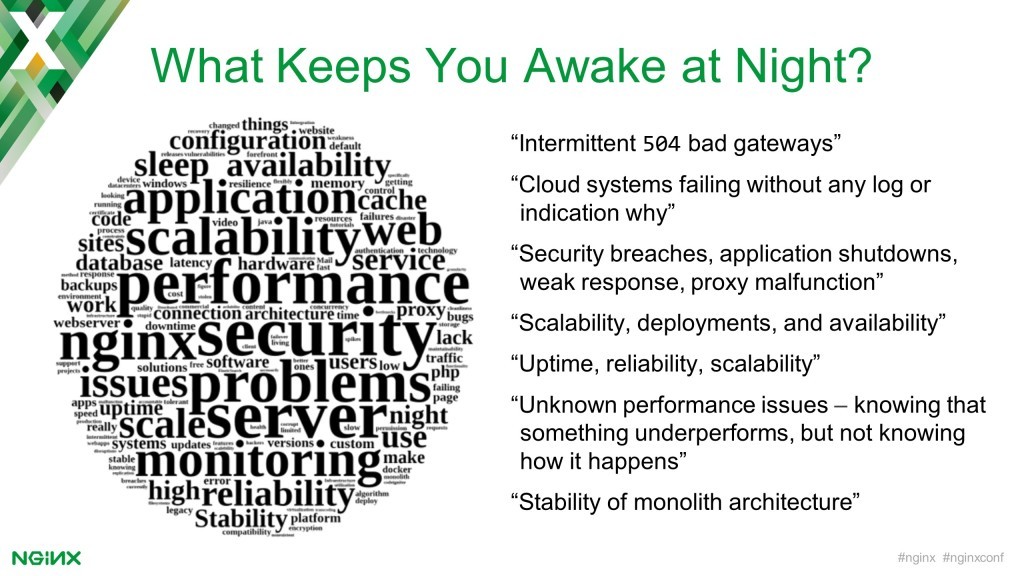

What’s next? What do we see in the future for NGINX? What do I see in the future for our industry? For the first indicators, let’s go back to our annual user survey.

10:53 What Keeps You Awake at Night?

We asked our users what keeps them awake at night. These are the top problems that, if we do our job well, we can help to address. They fell into a range of buckets:

- Reliability – People were concerned about the reliability of their backend applications. How would a failure there impact the public-facing part of their app?

- Scalability – When traffic comes, can NGINX scale to deal with demand?

- Underperformance – Will individual parts of the application underperform?

- Security – Are there data leaks with NGINX?

But what brought all of those together was the biggest concern: not knowing about these problems until it was too late. This is the fear of having what should have been the best day in the history of their business turning into their personal worst day.

These operational challenges are also increased by some of the demands that you as developers are facing, that businesses put on you. This is the desire to bring new features to market faster, the desire to iterate and develop more quickly, and the drive for faster time-to-market.

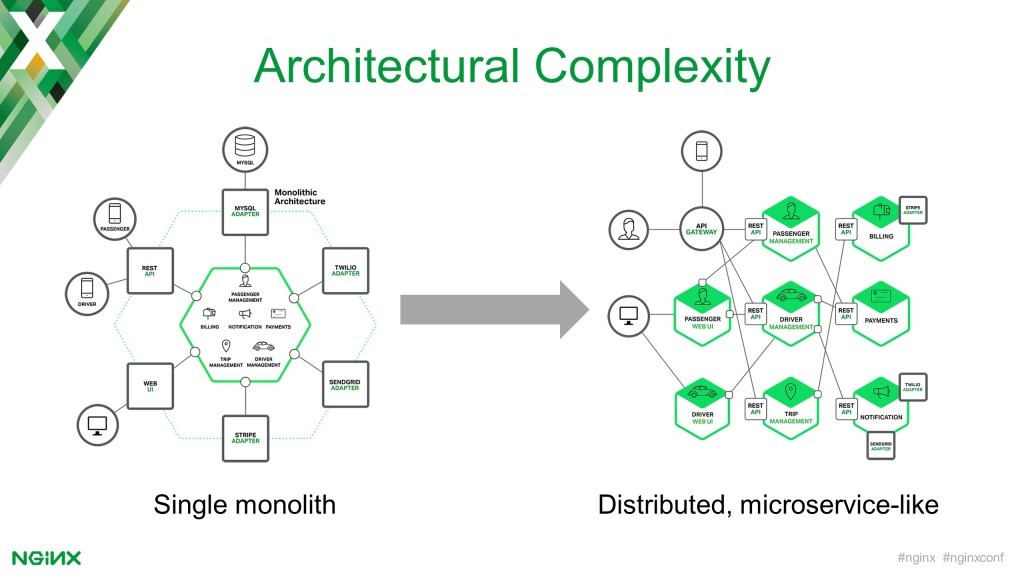

12:16 Architectural Complexity

There’s a massive shift in architectural approaches from a single, monolithic model – a tried, tested, and trusted approach, but one that is very heavyweight and slow to iterate – to a more decoupled microservices-like application architecture where individual components can be managed individually.

But as soon as you do that, you bring a number of extra elements into the picture, and they can cause challenges. Now your application depends critically on the performance, the reliability, and the availability of underlying services such as the network, making the performance and reliability of your app considerably harder to confidently deliver.

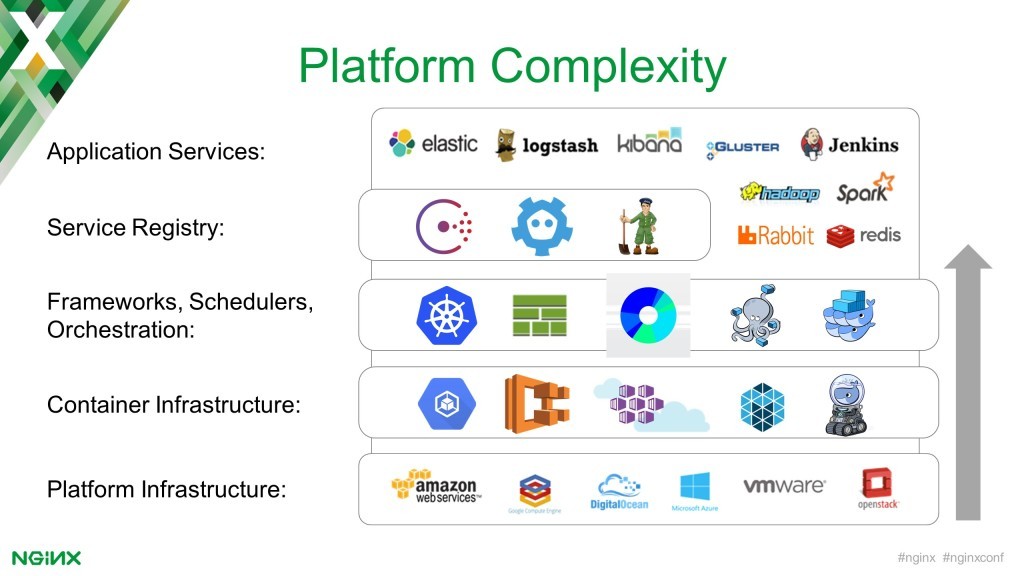

13:04 Platform Complexity

With this architectural complexity comes new platform complexity. There is an oncoming tsunami of choices that you face. It’s a range of imperfect, immature solutions shouting for your attention and trying to take you in a particular direction to build the core platform for your applications.

There’s a choice in platform infrastructure such as Amazon, Google, DigitalOcean, VMware, OpenStack, and countless others. If you’re going to adopt containers, and I know that many of you are, then there are choices around the container platform in the infrastructure: Google Container Engine, Amazon ECS, Azure, Mesosphere, and Docker technology.

On top of that, you then need to think about the frameworks, the schedulers, and the orchestration tools that are going to manage the services that you deploy as containers – Kubernetes, CloudFormation stack from Amazon, Marathon from the Mesos team, Compose and Swarm from Docker. Then [you need a] service registry tracking what you’ve deployed and where it is.

There’s potential for immense jeopardy. Every technology choice you make risks locking you into a particular deployment environment, a particular technology stack, or a particular road. We know what users are looking for: an acceptance of the risk that you take on, but also a way forward so that you can find a way of dealing with that risk.

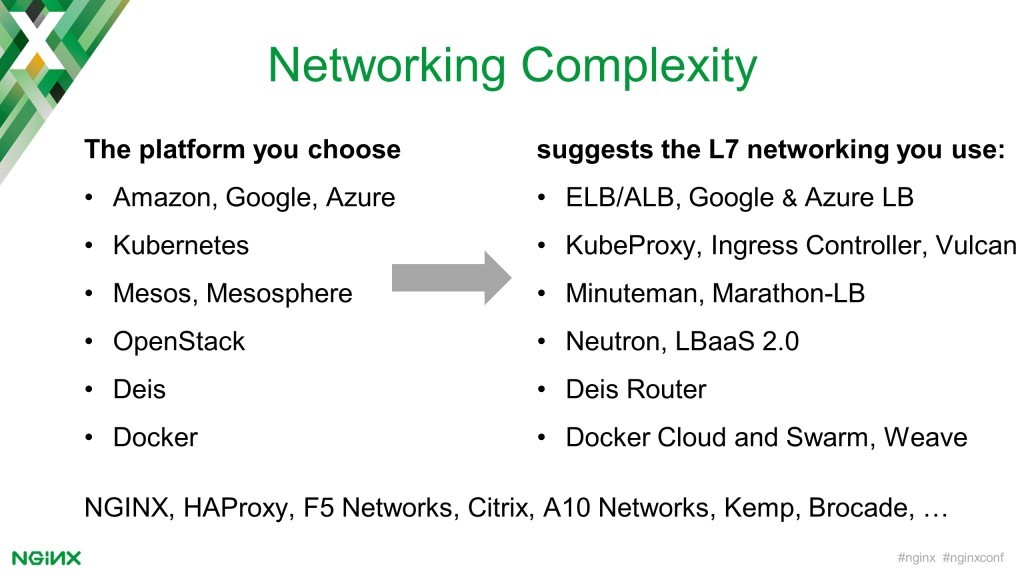

14:39 Networking Complexity

Let’s take a simple example of networking in all of these environments. The platform you choose – Amazon, Google, Azure and others – will often direct you in a particular route for the networking technology you’re going to deploy on top of that platform.

It’s great that lots of that networking technology is in fact built on top of open source components. Amazon’s recently released Application Load Balancer, ALB, is built on top of open source NGINX with a number of modifications the Amazon team have made.

That doesn’t make it a perfect solution, unfortunately. When you look at a service with a provider like that, you have to appreciate that there are often limitations. The limitation is often based on the keyhole that the API gives you into that technology. Amazon’s API keyhole opens up certain bits of functionality, but limits others. You might look at established load‑balancer providers as an alternative.

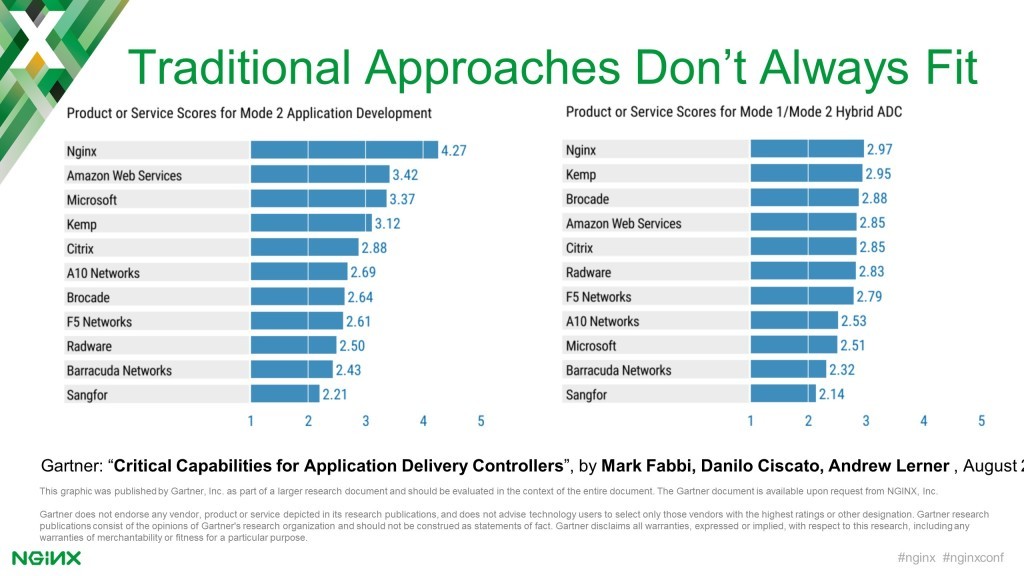

15:52 Traditional Approaches Don’t Always Fit

Many of these aren’t suited for the new world I’ve just described of turbulent, rapidly changing applications. We believe that traditional approaches don’t always fit, and as Gus mentioned, we’re delighted to have been included for evaluation in Gartner’s most recent Application Delivery Controller (ADC) Magic Quadrant.

NGINX is the first and only open source technology to be included among that group of applications. Gartner has done a very detailed study evaluating the suitability of traditional, existing, and some of the new emerging load balancer and ADC solutions for different use cases.

We look at this data and we interpret it as support for our belief that NGINX is the ideal solution for what the industry is calling Mode 2 applications – fast-changing, modern, developer-led applications.

16:56 What Is NGINX’s Role?

So with that in mind, what is the role for NGINX in the new world that I’ve described, where reliability, scalability, performance, and security are all competing for your attention and you have a plethora of imperfect choices in front of you? NGINX will always remain where it brings the most benefit: right next to the application and embedded, as Igor described, within the application.

HTTP is the lingua franca, the common language of modern applications. The volume of HTTP is increasing and as you know there is no better way to handle HTTP at scale than with NGINX. If flawless application delivery is what matters to you, then you start with NGINX.

17:48 Three Future Initiatives

There are a number of future initiatives we’re working on. Igor shared some, and I’d like to add a little bit of color to those in the last few minutes. The first is nginScript.

17:57 nginScript

We announced nginScript last year. Igor and his team have been working on this technology for the ensuing 12 months, and we’re making a great degree of progress.

18:13 nginScript (cont)

The challenge that nginScript seeks to address is that, as we know from our user surveys, users love the configuration language. They love the simplicity, the logical layout, and the declarative form of the config language. But that can sometimes be an Achilles heel.

When the configuration language is so elegant, if you need to do something a little bit non-standard, the configuration language may not be able to be bent sufficiently to meet your requirements.

nginScript is the solution that we propose for this. We’ve built a virtual machine for running bytecode, a virtual machine that is very tightly integrated with the way that NGINX operates: its event-driven model and its memory management. We’ve built a parser and a compiler for a large subset of the JavaScript language that then builds bytecode to run on that virtual machine.

We’ve hooked into the NGINX core through a set of internal APIs, and the net result is that you’ll be able to use nginScript to extend NGINX by creating new directives in JavaScript. Where the NGINX configuration would normally evaluate a variable as part of processing a request, you’ll be able to evaluate a JavaScript function at that point.
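To make the idea of evaluating a JavaScript function in place of a variable concrete, here is a minimal sketch. It uses the `js_import` and `js_set` directives from current releases of the JavaScript module; the directive names available in the 2016 preview may have differed. The file path, the function name `client_label`, and the header name are hypothetical.

```nginx
# Hypothetical file /etc/nginx/njs/labels.js, shown here as a comment:
#
#     function client_label(r) {
#         // r is the request object passed in by NGINX.
#         return r.method + " from " + r.remoteAddress;
#     }
#     export default { client_label };

# Only needed when the JavaScript module is packaged as a dynamic module.
load_module modules/ngx_http_js_module.so;

events {}

http {
    js_import labels from /etc/nginx/njs/labels.js;

    # $client_label is computed by calling the JavaScript function
    # whenever the variable is evaluated for a request.
    js_set $client_label labels.client_label;

    server {
        listen 8080;

        location / {
            add_header X-Client-Label $client_label;
            return 200 "ok\n";
        }
    }
}
```

The variable then behaves like any other NGINX variable, so it can be used in logging, headers, or routing decisions.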

We’re not seeking to be an application engine, we’re not trying to replace Node.js, we’re not trying to build something that is as rich and powerful as the Lua module. What we’re trying to do reflects our core values: create something that is quick, familiar, simple, and solves the problem that you have at hand.

19:58 NGINX Amplify

Next I’d like to introduce NGINX Amplify and I’d like to introduce you to my colleague Nick Shadrin, who is going to join me on stage and give you a quick demo. NGINX Amplify addresses the challenge that comes as you use NGINX more and more within your application infrastructure. More NGINX instances give you challenges around monitoring, coordinating the configuration, verifying that you’re running with the best practice configuration; but it also creates opportunities.

With NGINX everywhere, NGINX sees your application traffic. You’re not just monitoring and inspecting how NGINX operates, NGINX knows how your application operates as well. NGINX Amplify is our new management and monitoring platform that lets you track your NGINX’s state from a single pane of glass to find out what’s going on. So please welcome Nick onstage and he’ll give you a little bit more of the technical details. Thank you, Nick.

26:42 NGINX Microservices Reference Architecture

So if you’re interested in managing and monitoring your NGINX’s state, make sure you don’t miss those events. One last initiative I’d like to share with you before we close the morning is our work on microservices architecture.

We’ve been trying to understand and deduce the best practices for building scalable, reliable, high‑performance, distributed applications with a goal of creating a reference architecture that we can share with the community. This is an architecture that embodies the best practices that we believe in, and an architecture that also feeds into the future product roadmap for NGINX.

27:18 Microservices Reference Architecture (cont)

The challenge that users who adopt this sort of architecture face is that when you deploy applications in a containerized environment, the topology is hugely unpredictable. It’s very challenging to reliably connect, control, and scale these applications, and as part of our reference architecture we’re looking at best practice techniques for service discovery.

We’re also looking at security (SSL throughout the application), load balancing, and health checks that provide capabilities like circuit breakers for each of the application instances that you’re running. The goal is no delay, no single point of failure, and an architecture that perfectly mirrors the distributed architecture of your application.

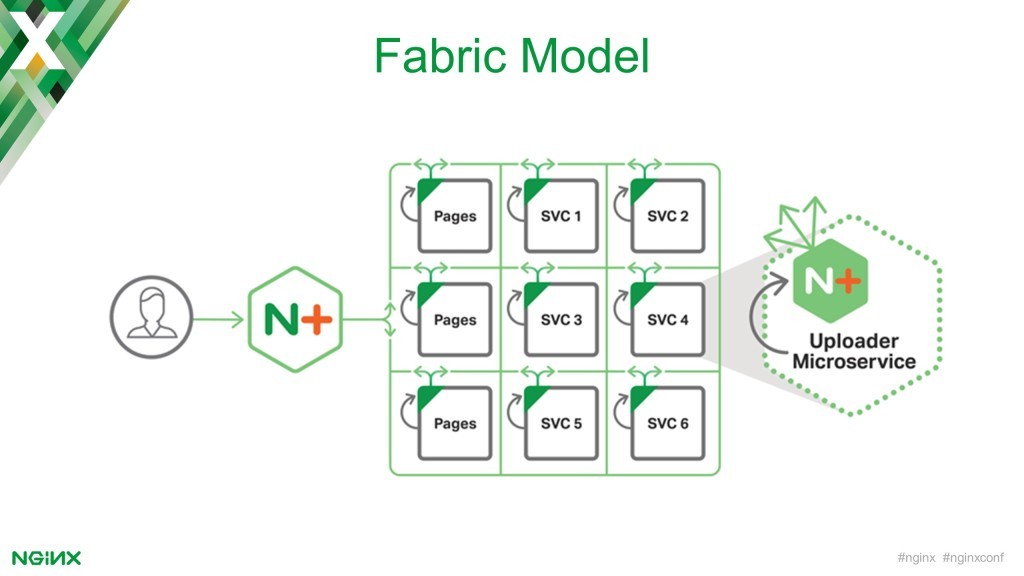

28:08 Fabric Model

In the fabric model, NGINX is embedded at the core: it acts as a forward and reverse proxy within each individual application instance.

28:18 Fabric Model (cont)

Services can discover other services in the application, run health checks against them, and maintain persistent SSL connections. We have measured that this reduces the SSL overhead in our test application by 99.7%. The net effect is that your distributed application can then operate at massive scale.
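The persistent SSL connections mentioned above can be sketched with upstream keepalive, as below. This is an assumed illustration of the technique, not the configuration from the reference architecture. The service name, addresses, ports, and connection count are hypothetical.

```nginx
events {}

http {
    upstream inventory {
        zone inventory 64k;
        server 192.0.2.30:8443;   # hypothetical peer service instance

        # Keep up to 32 idle connections open per worker so that later
        # requests skip the TCP and SSL handshakes.
        keepalive 32;
    }

    server {
        # Local listener that the application in this instance calls
        # (NGINX acting as the outbound proxy).
        listen 127.0.0.1:8001;

        location / {
            proxy_pass https://inventory;

            # Upstream keepalive requires HTTP/1.1 and a cleared Connection header.
            proxy_http_version 1.1;
            proxy_set_header Connection "";

            # Reuse SSL sessions when a new connection does have to be opened.
            proxy_ssl_session_reuse on;

            # With NGINX Plus, active health checks could be enabled here:
            # health_check;
        }
    }
}
```

Because the handshake cost is paid once per connection rather than once per request, most requests between services carry no SSL setup overhead at all.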

If you’re interested in finding out more about our progress in this area, please do catch Chris Stetson’s talk Thursday afternoon, where he will present the work that we have done so far on this reference architecture.

So as we close for this morning, I want to thank you again for coming and supporting us here. I hope you can see that not only has NGINX had an exciting past, but there’s an exciting future ahead of us as well. We don’t exist in a vacuum. Our partners and our user community are also building and innovating solutions on top of NGINX.

So in the short break we have coming up now, please catch the expo hall, meet some of our partners, meet other NGINX users, come and find us at the NGINX booth or anyone with an NGINX t-shirt. Ask us questions, find out what’s happening, but most of all make the most of and enjoy the conference this week. Thank you again.

To try NGINX Plus yourself, start your free 30‑day trial today or contact us for a live demo.

The post nginx.conf 2016 – Keynote with Owen Garrett NGINX: Past, Present, and Future appeared first on NGINX.
