NGINX Conf 2018: Migrating Load-Balanced Services from F5 to NGINX Plus at AppNexus

In his session at NGINX Conf 2018, Ernesto Chaves, a Senior Network Engineer at AppNexus, describes how and why the company replaced all of the F5 Networks BIG‑IP hardware load balancers in its global data centers with NGINX Plus. He details the motivations, pitfalls, and successes of the transition from proof of concept through to implementation.

In this post we highlight some key takeaways from the session. The complete video of the presentation is also available.

Key Takeaways

- Solutions that were the best choice at the time don’t always stay that way. Six years after their initial deployment at AppNexus in 2009, F5 BIG‑IP load balancers were struggling to keep up with increasing traffic. They also suffered from hardware failures, random rebooting, and memory leaks, among other issues.

- Hardware solutions usually come at a premium. The NGINX Plus solution to AppNexus' issues was 95% less expensive than the proposed F5 solution ($144,000 vs. $3,000,000), with the same or better performance.

- When it becomes obvious your hardware solution will not scale further, stop patching and spending time on workarounds. Guided by your current requirements, determine whether you can modify the solution you have now or need to find a new one.

F5 BIG‑IP Wasn’t Meeting AppNexus’ Needs Anymore

AppNexus is a digital advertising platform. Back in 2009, the company chose BIG‑IP hardware load balancers from F5 Networks as the best option available. However, as AppNexus grew and deployed more and more business‑critical applications and services behind the BIG‑IPs, problems with scalability, flexibility, and reliability grew as well, and the hardware struggled to keep up with the growth in traffic.

The problems took many forms: performance degradation under load, high CPU utilization, real‑world performance below published specs, limits on processing of SSL connections, port exhaustion, memory leaks and core dumps, hardware issues (with disk drives and power supplies, for example), random rebooting of daemons and the chassis, and frequent patching and upgrades to address critical vulnerabilities.

After opening 32 support cases in 4 years and spending countless hours troubleshooting, fixing bugs, and finding workarounds, Ernesto’s team decided enough was enough.

Finding a New Solution

In an attempt to correct the problems, the team met with F5 in November 2015 and presented its list of issues. F5 proposed what Ernesto describes as an “insane and impractical idea”, depicted in the topology diagram below, which basically amounted to throwing more BIG‑IP hardware at the problem.

Not only did the proposed solution increase F5’s physical and power footprint in AppNexus’ data centers, but it also added more points of failure in multiple data centers, didn’t guarantee a gain in performance, and came at a total cost of more than $3 million. To top it all off, F5 couldn’t say when the next‑generation hardware in the proposal would be available, beyond estimating that it would take at least 18 months.

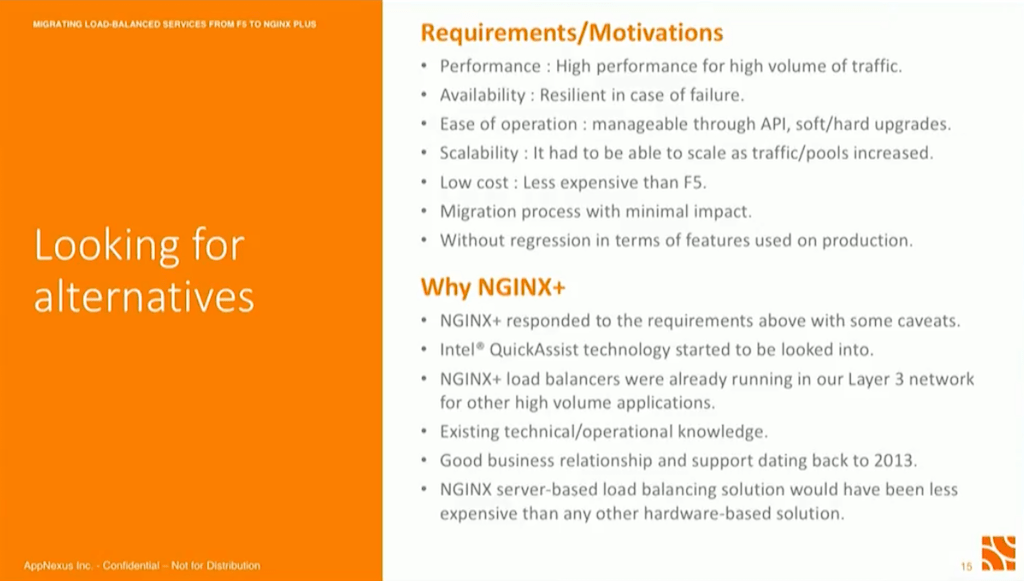

Unable to wait for improvement, the team started looking for a workable and less expensive solution. They formulated seven requirements:

- High performance for a high volume of encrypted and unencrypted traffic

- Resilience in case of failure

- Ease of operation: manageable through an API, and with software and hardware upgrades transparent to end users

- Scalability

- Less expensive than the F5 solution

- Minimal impact on production traffic during migration from the F5 solution

- No regression in features already used in production

Why Did AppNexus Choose NGINX Plus?

The proposed F5 solution came with a price tag of $3 million. The NGINX Plus solution cost $144,000 as reported by Ernesto – savings of 95%, even without counting how much AppNexus was already paying for the current, problematic F5 solution. Our own research shows NGINX Plus saves you 78% to 87% on a per‑instance basis.
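As a quick check of the 95% figure, the calculation below uses only the two prices quoted above. Like the text, it ignores what AppNexus was already spending on its existing F5 hardware:

```latex
\text{savings} = \frac{\$3{,}000{,}000 - \$144{,}000}{\$3{,}000{,}000}
               = \frac{\$2{,}856{,}000}{\$3{,}000{,}000}
               = 0.952 \approx 95\%
```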

AppNexus had already been enjoying stellar performance from NGINX Plus for the previous two years, in their Layer 3 network for other high‑volume applications. This meant that during the migration from F5 hardware to NGINX Plus, Ernesto’s team would benefit from in‑house expertise as well as NGINX’s award‑winning technical support.

After thorough testing, Ernesto’s team began its transition to software load balancing with NGINX Plus. Watch the complete presentation to hear Ernesto explain the testing process in detail.

Is your hardware load balancer failing to meet your needs? Try NGINX Plus – start your free 30-day trial today or contact us to discuss your use cases.

The post NGINX Conf 2018: Migrating Load-Balanced Services from F5 to NGINX Plus at AppNexus appeared first on NGINX.