NGINX Sprint 2.0: Clear Vision, Fresh Code, New Commitments to Open Source

Each year at NGINX Sprint, we share our latest and greatest technologies. It’s the best part of the year! We unveil the fresh code and product versions we’ve created as we strive to make the everyday lives of our community and customers better. This year is a bit different, though. Of course, we’ll unveil new projects and products. More on that below. But I’d be remiss if I didn’t take a moment to step back and be explicit about the vision that guides our efforts. And most importantly, how that vision results in a set of commitments we want to make to our community.

Adaptive Applications Are the Future

Here at F5, our vision for the future is applications that adapt. What do we mean by “adaptive applications”? Think apps that act like organisms. They grow, shrink, heal, and defend themselves autonomously, helping to reduce the need for constant manual intervention.

I emphasize the word “autonomously” for a reason. As we create the next generation of digital services, the underlying applications can no longer perform at human speed and be limited by the presence of humans in the loop. They must be intelligent enough to adapt to their environment, based on context and conditions. They must make changes on their own, like living organisms.

We are already exploring how machine learning can power adaptive intelligence. There are some intermediate steps required to get us there, though. Here’s how we are thinking about the road to adaptive apps, and how our technology releases and product roadmap contribute to achieving that vision.

The “Cluster Out” Pattern for Building Modern Apps

We believe there are three waves of modern application delivery. The focus of the first wave was enabling massive concurrency and scale. This was the wave that birthed NGINX, in response to the rise of web‑scale applications.

The second wave, which we’re surfing right now, is when applications decouple into microservices and connect via APIs to become more distributed and resilient. Kubernetes and containerization are driving this wave, which really started with the growth of cloud computing. The second wave has also enabled tremendous progress in automation, delivering the first generation of adaptive apps. Adaptive behavior can be as simple as auto‑scaling and as complex as policy engines that self-correct by observing how APIs and applications are performing.
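To make the "as simple as auto‑scaling" end of that spectrum concrete, here is a minimal sketch of the replica calculation documented for the Kubernetes Horizontal Pod Autoscaler: scale the current replica count by the ratio of the observed metric to its target, and do nothing while the ratio stays within a tolerance band. The function name, parameter names, and the min/max defaults are illustrative assumptions, not part of any NGINX or Kubernetes API.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Return the replica count an HPA-style autoscaler would aim for.

    Hypothetical helper: names and default bounds are assumptions made
    for illustration only.
    """
    # Ratio of observed load to the load we want each replica to carry.
    ratio = current_metric / target_metric

    # Inside the tolerance band, leave the deployment alone to avoid flapping.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas

    # Scale proportionally, rounding up so capacity is never undershot.
    wanted = math.ceil(current_replicas * ratio)

    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, four replicas averaging 90% CPU against a 60% target would scale to six, while the same four replicas at 62% would stay put. The policy engines mentioned above go further by acting on richer signals than a single metric.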

The second wave lays the groundwork for the third wave, which will be about endowing applications with more sophisticated intelligence and machine learning – making them environmentally aware and truly able to adapt without human intervention.

We’ve seen a common pattern emerging among NGINX users and customers who have successfully deployed modern apps in Kubernetes clusters as the second wave continues. We call it the “Cluster Out” pattern and it includes three stages, depicted in the diagram and described below.

Stage #1: Build a Solid Kubernetes Foundation

Lots of enterprises are still getting up to speed with Kubernetes and containers. And, frankly, Kubernetes requires considerable customization and tuning to make it production ready. Though it is a powerful general‑purpose platform for numerous use cases, Kubernetes out-of-the-box still lacks the application‑delivery and application‑security capabilities you really need to deploy, manage, and safeguard production apps.

Bottom line, if a production Kubernetes environment is not stable, developers become reluctant to deploy their code to it. To give developers the confidence they need, you must add Layer 7 networking, observability, and security to your Kubernetes production environment. You solve this challenge with Ingress controllers, WAFs, and service meshes, along with other cloud‑native projects such as Prometheus.

Stage #2: Securely Manage APIs In and Out of the Cluster

Once you have a production‑grade Kubernetes foundation, developers are willing to deploy more code to it. This is the “if you build it, they will come” phenomenon. You’ll find that the number of microservices and apps grows quickly, along with the number of APIs they use to communicate, both with other services in the cluster and with external clients and applications. Internal (service-to-service) API calls often surpass external ones (app-to-client) by a factor of 10 or more.

As your app environment grows, new challenges arise that your base Kubernetes foundation cannot solve, including requirements for more sophisticated API authentication, authorization, routing/shaping, and lifecycle management. It is critical to have tools that help you secure, manage, version, and retire APIs – which may number in the hundreds or thousands in complicated environments. In this stage of movement outward from the cluster, you need technologies like API gateways, API management, and API security tools – they allow continued scaling of services as developers make changes, and make applications more robust.

Stage #3: Make the Cluster Resilient

The third stage in implementing the Cluster Out pattern is thinking about how to connect a Kubernetes environment with other environments, be they other clusters or app deployments on VMs or bare metal. After all, we are designing cloud‑native applications that are distributed, loosely coupled, and resilient.

At a bare minimum, modern applications must be able to communicate across multiple Kubernetes clusters to create higher levels of resilience and to run smarter policies (for cost control, for example). More broadly, though, modern applications are rarely islands. They are far more likely to be woven into a web of external services, storage buckets, and partner APIs that are likely located in other environments. Even internally, complex applications may need to talk to other internal applications that are not colocated in a cluster, availability zone, or data center.

This is the next stage of moving from the cluster out, and here again out-of-the-box Kubernetes doesn’t solve its challenges. You need to connect your Kubernetes perimeter – the Ingress controller – automatically to external technologies like Layer 4 load balancers, application delivery controllers, and DNS services to route traffic and handle failovers.
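As a rough illustration of the failover decision that an external load balancer or DNS service makes on behalf of the cluster, the sketch below picks the preferred healthy cluster from a list and falls back to the next one when the preferred cluster is down. The `Cluster` type, its fields, and the cluster names are hypothetical; real products base this decision on active health checks and far richer policy.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cluster:
    """Hypothetical view of one Kubernetes cluster behind a global router."""
    name: str
    priority: int  # lower number means more preferred
    healthy: bool  # result of the most recent health check


def pick_cluster(clusters: List[Cluster]) -> Optional[Cluster]:
    """Return the most preferred healthy cluster, or None if all are down."""
    candidates = [c for c in clusters if c.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda c: c.priority)


if __name__ == "__main__":
    fleet = [
        Cluster("us-east", priority=1, healthy=False),  # primary is down
        Cluster("us-west", priority=2, healthy=True),
        Cluster("eu-central", priority=3, healthy=True),
    ]
    chosen = pick_cluster(fleet)
    print(chosen.name if chosen else "no healthy cluster")
```

The point of automating this at the Ingress boundary is that the routing decision follows cluster health without anyone editing DNS records or load balancer pools by hand.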

Building a Bigger Open Source Future for NGINX

In reality, the Cluster Out pattern is just a recipe for Kubernetes success that we’ve seen early adopters using. As a prioritization framework, it’s a logical and methodical path to operating containers at scale in an enterprise environment that helps you more efficiently adopt Kubernetes and modern apps.

The power of this approach is that it gives platform teams a systematic way to shore up Kubernetes in production. But that’s not where Kubernetes deployments start. They start with developers building application stacks made up of open source tools. We get that. For more than 15 years, open source has been at the very heart of NGINX, and it will continue to be what drives us forward into the future. Over the coming year, we will be taking significant steps to increase our support for open source by investing in community engagement, fostering open source innovation, and adhering to open source best practices.

Here are our open source commitments for the next year.

Commitment #1: More Open Source, More Community Interaction

We are living proof of what open source can achieve. NGINX powers 400+ million sites and more of the Internet than any other server – and we take our job seriously. We want to move faster and increase the number of projects we develop to create a new generation of open source solutions for Layer 7 networking, security, and observability.

As we proceed, we want to increase community participation. That’s why we commit to using GitHub as the repository for all of our new projects. So we’re promising not only to do more open source work, but to do it in the most transparent way possible, with high‑quality documentation, issue tracking, and release notes. We also want to encourage our community to contribute to our projects and help shape the future with us.

Commitment #2: Continue to Innovate at the Data Plane…and Beyond

I’ve heard many users say “the proxy is a commodity”, meaning you can swap out any proxy – NGINX, Envoy, HAProxy, et al. – for any other. We don’t believe that’s true. There is still plenty of innovation happening at the data plane. We’ve seen new service discovery, encryption, authentication, security, and tracing capabilities introduced in just the last year alone.

Here at NGINX, we believe an intelligent proxy is the keystone of delivering modern apps. We commit to more open source capabilities at the data plane layer. Our goal is to leapfrog the current state of the art. And it doesn’t stop at proxies and the data plane. We commit to innovating at all layers of the modern application technology stack, including the control plane and the management plane. To this end, we plan to open source more control plane technologies and deliver new management plane technologies to provide more complex, extracted workflow capabilities.

Commitment #3: Be Clear about What Is Open Source and What Is Commercial

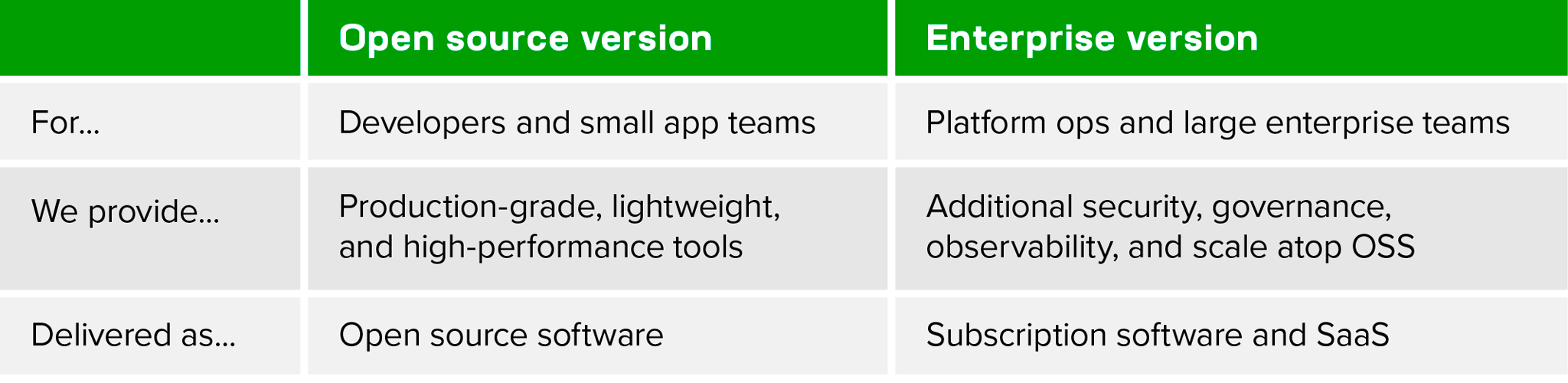

As we look to increase the number of open source solutions we provide, we need a clearly defined model for building commercial versions. That’s why we commit to a transparent, consistent model so that every team can easily understand the different types of NGINX products and get value from the products most appropriate to their needs.

In more detail:

- NGINX open source software will always be production‑grade, a reliable base for building your modern apps. It will remain lightweight, fast, and easy to use, designed specifically for developers and individual application teams. Rest assured that – like NGINX Open Source – every open source tool we create will be useful in and of itself. We won’t hold features back that prevent developer and application teams from building great apps.

- Our commercial NGINX products will build on top of our open source tools, providing the additional capabilities that platform and large enterprise teams need to operate and deliver modern apps at scale in production. Specifically, commercial tools will include additional security, governance, observability, and scalability features. This model will be consistent across all NGINX projects. Although the exact features will vary based on the purpose of the tool, Platform Ops teams can be confident that NGINX commercial offerings are designed with their specific needs in mind.

How We’ll Honor Our Commitments

We know commitments aren’t worth much until we make good on them. We need to show, not just tell – please hold us to that over the next year. And we’re already moving to make these commitments a reality. We have three announcements on that front.

Announcement #1: Additional Resources for the Kubernetes Community

For many years, the Kubernetes community has relied on NGINX to power its Ingress resource and controller. Alongside this community version, NGINX itself offers the NGINX Open Source Ingress Controller and the commercial NGINX Plus Ingress Controller. We’ve learned a lot from the community over the years and want to deepen our involvement in advancing the Kubernetes Ingress project. Today we’re announcing two things:

- We’re dedicating a full‑time employee to help manage the community Ingress project.

- We’re assigning multiple employees to the Kubernetes Gateway API SIG to help advance Kubernetes ingress and egress functionality.

We are excited to put a lot more resources into Ingress and other Kubernetes‑related projects. This great community has done amazing work over the years and we are proud to strengthen our partnership with them.

Announcement #2: New Open Source Projects at Every Layer of the Delivery Stack

We’ve always participated in open source data‑plane technologies, namely NGINX Open Source, NGINX JavaScript, NGINX Unit, and the NGINX ModSecurity WAF. But as I mentioned above, we’re extending our open source technologies to include control and management plane technologies.

To be specific, we will release two brand‑new open source projects in the coming months – one focused on microservices networking and one that enables Platform Ops teams to easily operate and manage NGINX instances in their organization. We will also be releasing a platform‑agnostic open source developer tool (unrelated to core NGINX technology) for developing modern applications. I’d love to get into the details now, but we don’t want to unveil the new projects until we can provide a great developer experience – including the GitHub repositories, documentation, and discussion groups needed to support you.

These new tools will help developers manage large fleets of NGINX instances and connect seamlessly into and across Kubernetes environments. All of them will be free and open source, with additional commercial versions designed specifically for Platform Ops teams.

Announcement #3: A New, Open Source Modern Apps Reference Architecture

Modern apps are built on an ecosystem of tools, but stitching together multiple tools or projects is usually painful and requires lots of manual configuration. Today we’re announcing the NGINX Modern Apps Reference Architecture (MARA), available now on GitHub.

The reference architecture addresses the key pillars of a modern application architecture – portability, scalability, resilience, and agility. NGINX has partnered with several other vendors and organizations to create a complete, fully operational microservices‑based application that you can get up and running in minutes, hosted in a single GitHub repo. MARA is easily deployable, production‑ready, “stealable” code that developers, DevOps, and Platform Ops teams can use to build and deploy modern apps in Kubernetes environments.

The architecture takes a modular approach, providing free and open source modular components that come pre‑wired and pre‑integrated to make the process of building modern apps simpler, more reliable, and less manual. We will continue to evolve this reference architecture, and we ask that the community join us. Do you have a particular code repository you use? Or a different CI or CD tool? Build a module that integrates that technology into the reference architecture, and contribute the code back. Our goal is to lower the barrier for any company looking to get Kubernetes up and running in production, quickly and reliably.

We’ll be providing all the details about the reference architecture in a separate blog tomorrow.

Join Us at NGINX Sprint 2.0

To learn more about our vision and the open source innovation we’re ramping up, join us for NGINX Sprint 2.0, which kicks off today. It’s a three‑day virtual event that includes keynotes, interactive demos, workshops, and in‑depth training. It wraps up by noon each day, because we know your time is valuable. And it’s completely free!

Make sure to reserve your virtual seat and tune in to hear the latest NGINX news, dive deep into NGINX technologies and solutions, and as always, connect with the rest of the incredible NGINX community. We hope to see you there! And don’t worry if you can’t make it on any of the three days. We will post all the content after the event for on‑demand viewing.

The post NGINX Sprint 2.0: Clear Vision, Fresh Code, New Commitments to Open Source appeared first on NGINX.
