Overcoming Ephemeral Port Exhaustion in NGINX and NGINX Plus

NGINX and NGINX Plus are extremely powerful HTTP, TCP, and UDP load balancers. They are very efficient at proxying large bursts of requests and maintaining a large number of concurrent connections. These same characteristics, however, make NGINX and NGINX Plus particularly vulnerable to ephemeral port exhaustion. (Both products have this issue, but for the sake of brevity we’ll refer just to NGINX Plus for the remainder of this blog.)

In this blog, we discuss the components of a TCP connection and how its contents are decided before a connection is established. We then show how to determine when NGINX Plus is being affected by ephemeral port exhaustion. Lastly, we discuss strategies for combatting those limitations using both Linux kernel tweaks and NGINX Plus directives.

A Brief Overview of Network Sockets

When a connection is established over TCP, a socket is created on both the local and the remote host. These sockets are then connected to create a socket pair, which is described by a unique 4-tuple consisting of the local IP address and port along with the remote IP address and port.

The remote IP address and port belong to the server side of the connection, and must be determined by the client before it can even initiate the connection. In most cases, the client automatically chooses which local IP address to use for the connection, but sometimes it is chosen by the software establishing the connection. Finally, the local port is randomly selected from a defined range made available by the operating system. The port is associated with the client only for the duration of the connection, and so is referred to as ephemeral. When the connection is terminated, the ephemeral port is available to be reused.
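To make the 4-tuple concrete, here is a minimal Python sketch that opens a TCP connection over the loopback interface and prints the socket pair as the client sees it. The function name `socket_pair` and the use of `127.0.0.1` are illustrative assumptions, not part of any NGINX Plus tooling.

```python
import socket


def socket_pair():
    """Open a loopback TCP connection and return the client's view of the 4-tuple."""
    # Server side: bind to port 0 so the OS picks a free listening port for the demo.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))
        listener.listen(1)
        remote_ip, remote_port = listener.getsockname()

        # Client side: the remote address is known before connecting, while the
        # local IP address and ephemeral port are assigned during connect().
        with socket.create_connection((remote_ip, remote_port)) as client:
            local_ip, local_port = client.getsockname()
            peer_ip, peer_port = client.getpeername()
            return (local_ip, local_port, peer_ip, peer_port)


if __name__ == "__main__":
    # Prints (local IP, ephemeral local port, remote IP, remote port).
    print(socket_pair())
```

Running it several times should show the remote side staying fixed within a run while the local port is drawn from the operating system's ephemeral range.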

Recognizing Ephemeral Port Exhaustion

As mentioned in the introduction, NGINX Plus by nature is vulnerable to ephemeral port exhaustion and the problems it causes. When a request is proxied through the load balancer, the default behavior is to bind the socket for the proxied request automatically to a local IP address and an ephemeral port available on the server that is running NGINX Plus. If the connection rate increases and new sockets move into a waiting state faster than existing sockets are closed, the available ports are eventually exhausted and no new sockets can be created. This results in errors from both the operating system and NGINX Plus. Here’s an example of the resulting error in the NGINX Plus error log.

2016/03/18 09:08:37 [crit] 1888#1888: *13 connect() to 10.2.2.77:8081 failed (99: Cannot assign requested address) while connecting to upstream, client: 10.2.2.42, server: , request: "GET / HTTP/1.1", upstream: "http://10.2.2.77:8081/", host: "10.2.2.77"

Port exhaustion also causes a spike in 500 errors originated by NGINX Plus rather than the upstream server. Here is a sample entry in the NGINX Plus access log.

10.2.2.42 - - [18/Mar/2016:09:14:20 -0700] "GET / HTTP/1.1" 500 192 "-" "curl/7.35.0"

To check the number of sockets in the TIME-WAIT state on your NGINX Plus server, run the following ss command in the Linux shell. In the example, we pipe the output through the wc command to count the matching lines, which shows that 143 sockets are in the TIME-WAIT state.

# ss -a | grep TIME-WAIT | wc -l

143

Tuning the Kernel to Increase the Number of Available Ephemeral Ports

One way to reduce ephemeral port exhaustion is with the Linux kernel net.ipv4.ip_local_port_range setting. The default range is most commonly 32768 through 61000 (32768 through 60999 on newer kernels).

If you notice that you are running out of ephemeral ports, changing the range from the default to 1024 through 65000 more than doubles the number of ephemeral ports available for use (from roughly 28,000 to roughly 64,000). For more information about changing kernel settings, see our Tuning NGINX for Performance blog post.
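The arithmetic behind that change can be checked with a short Python sketch. The helper names `parse_port_range` and `port_count` are hypothetical; the input strings mimic the two whitespace-separated numbers that Linux exposes in /proc/sys/net/ipv4/ip_local_port_range.

```python
def parse_port_range(text):
    """Parse the two integers of a port range setting, e.g. '32768 61000'."""
    low, high = (int(field) for field in text.split())
    return low, high


def port_count(low, high):
    """Number of ports in the inclusive range low..high."""
    return high - low + 1


if __name__ == "__main__":
    # The two ranges discussed in the text; on a live Linux host the string
    # could instead be read from /proc/sys/net/ipv4/ip_local_port_range.
    default = port_count(*parse_port_range("32768 61000"))
    widened = port_count(*parse_port_range("1024 65000"))
    print(default, widened, round(widened / default, 2))
```

Note that each count applies per combination of local IP address, remote IP address, and remote port, since the 4-tuple as a whole must be unique.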

Enabling Keepalive Connections in NGINX Plus

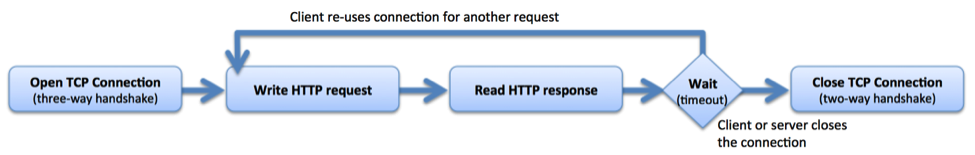

Another way to reduce ephemeral port exhaustion is to enable keepalive connections between NGINX Plus and upstream servers. In the simplest implementation of HTTP, a client opens a new connection, writes the request, reads the response, and then closes the connection to release the associated resources.

A keepalive connection is held open after the client reads the response, so it can be reused for subsequent requests.
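The effect on ephemeral ports can be observed with a small, self-contained Python sketch. It starts a throwaway HTTP/1.1 server on the loopback interface that echoes the client's source port, then issues several requests either over one reused connection or over a fresh connection per request. The names `EchoPortHandler` and `client_ports` are made up for this illustration, and the demo server merely stands in for an upstream server.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class EchoPortHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # HTTP/1.1 connections are persistent by default

    def do_GET(self):
        # Reply with the client's source (ephemeral) port as the response body.
        body = str(self.client_address[1]).encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo quiet


def client_ports(reuse, requests=3):
    """Return the client port seen by the server for each request."""
    server = HTTPServer(("127.0.0.1", 0), EchoPortHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    ports, conn = [], None
    try:
        for _ in range(requests):
            if conn is None:
                conn = http.client.HTTPConnection(host, port, timeout=5)
            conn.request("GET", "/")
            ports.append(int(conn.getresponse().read()))
            if not reuse:
                conn.close()  # a new connection (and port) for the next request
                conn = None
    finally:
        if conn is not None:
            conn.close()
        server.shutdown()
        server.server_close()
    return ports


if __name__ == "__main__":
    print("keepalive:   ", client_ports(reuse=True))
    print("no keepalive:", client_ports(reuse=False))
```

With reuse enabled, every request arrives from the same client port; without it, each request typically consumes a new ephemeral port and leaves the old one in TIME-WAIT.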

Use the keepalive directive to enable keepalive connections from NGINX Plus to upstream servers, defining the maximum number of idle keepalive connections to upstream servers that are preserved in the cache of each worker process. When this number is exceeded, the least recently used connections are closed. Without keepalive connections, every proxied request pays the overhead of opening a new connection and consumes another ephemeral port.

The following sample configuration tells NGINX Plus to keep up to 128 idle keepalive connections per worker process to the servers defined in the upstream block called backend.

upstream backend {
    server 10.0.0.100:1234;
    server 10.0.0.101:1234;
    keepalive 128;
}

When enabling keepalive connections to your upstream servers, you must also use the proxy_http_version directive to tell NGINX Plus to use HTTP version 1.1, and the proxy_set_header directive to remove any headers named Connection. Both directives can be placed in the http, server, or location configuration blocks.

proxy_http_version 1.1;
proxy_set_header Connection "";

Dynamically Binding Connections to a Defined List of Local IP Addresses

Optimizing the kernel and enabling keepalive connections provide much more control of ephemeral port availability and usage, but there are certain situations where those changes are not enough to combat the excessive use of ephemeral ports. In this case we can employ some NGINX Plus directives and parameters that allow us to bind a percentage of our connections to static local IP addresses. This effectively multiplies the number of available ephemeral ports by the number of IP addresses defined in the configuration. To accomplish this we use the proxy_bind and split_clients directives.

In the example configuration below, the proxy_bind directive in the location block sets the local IP address during each request according to the value of the $split_ip variable. We set this variable dynamically to the value generated by the split_clients block, which uses a hash function to determine that value.

The first parameter to the split_clients directive is a string ("$remote_addr$remote_port") which is hashed using a MurmurHash2 function during each request. The second parameter ($split_ip) is the variable we set dynamically based on the hash of the first parameter.

The statements inside the curly braces divide the hash table into “buckets”, each of which contains a percentage of the hashes. Here we divide the table into 10 buckets of the same size, but we can create any number of buckets and they don’t all have to be the same size. (The percentage for the last bucket is represented by the asterisk [*] rather than a specific number, because the number of hashes might not be evenly divisible into the specified percentages.)

The range of possible hash values is from 0 to 4294967295, so in our case each bucket contains about 429496700 values (10% of the total): the first bucket holds the values from 0 to roughly 429496700, the second bucket from roughly 429496701 to 858993400, and so on. The $split_ip variable is set to the IP address associated with the bucket containing the hash of the $remote_addr$remote_port string. As a specific example, the hash value 1500000000 falls in the fourth bucket (which spans roughly 1288490100 through 1717986800), so the variable $split_ip is dynamically set to 10.0.0.213 in that case.

http {
    upstream backend {
        server 10.0.0.100:1234;
        server 10.0.0.101:1234;
    }

    server {
        ...

        location / {
            ...
            proxy_pass http://backend;
            proxy_bind $split_ip;
            proxy_set_header X-Forwarded-For $remote_addr;
        }
    }

    split_clients "$remote_addr$remote_port" $split_ip {
        10% 10.0.0.210;
        10% 10.0.0.211;
        10% 10.0.0.212;
        10% 10.0.0.213;
        10% 10.0.0.214;
        10% 10.0.0.215;
        10% 10.0.0.216;
        10% 10.0.0.217;
        10% 10.0.0.218;
        *   10.0.0.219;
    }
}
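The bucket arithmetic described above can be sketched in Python. This is an approximation for illustration only: it assumes each bucket's upper bound is the cumulative percentage of the 32-bit hash range, and it takes the hash value as an input rather than reimplementing MurmurHash2. The names `BUCKETS` and `bucket_ip` are hypothetical.

```python
HASH_MAX = 0xFFFFFFFF  # largest 32-bit hash value (4294967295)

# (share in hundredths of a percent, local IP); None marks the "*" catch-all.
BUCKETS = [(1000, "10.0.0.%d" % (210 + i)) for i in range(9)]
BUCKETS.append((None, "10.0.0.219"))


def bucket_ip(hash_value, buckets=BUCKETS):
    """Return the IP whose bucket contains hash_value, or None if none matches."""
    cumulative = 0
    for share, ip in buckets:
        if share is None:
            return ip  # the catch-all bucket takes whatever is left
        cumulative += share
        upper = HASH_MAX * cumulative // 10000  # assumed upper bound (exclusive)
        if hash_value < upper:
            return ip
    return None


if __name__ == "__main__":
    for value in (0, 1500000000, 4294967295):
        print(value, bucket_ip(value))
```

Under these assumptions a hash of 1500000000 lands in the fourth bucket (10.0.0.213), and the largest possible hash lands in the catch-all bucket (10.0.0.219).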

Conclusion

Widening the Linux kernel's ephemeral port range increases the number of ports available for outgoing connections. Enabling keepalive connections from NGINX Plus to your upstream servers makes their consumption of ephemeral ports more efficient. Lastly, using the split_clients and proxy_bind directives in your NGINX Plus configuration enables you to dynamically bind outgoing connections to a defined list of local IP addresses, greatly increasing the number of ephemeral ports available to NGINX Plus.

The post Overcoming Ephemeral Port Exhaustion in NGINX and NGINX Plus appeared first on NGINX.
