Powering Microservices and Sockets Using NGINX and Kubernetes

This post is adapted from a presentation delivered at nginx.conf 2016 by Lee Calcote of SolarWinds. You can view a recording of the complete presentation on YouTube.

Table of Contents

| Time | Section |
| --- | --- |
| | Introduction |
| 1:56 | Our App |
| 2:39 | Case Study |
| 3:31 | Our Bloat-a-Lith |
| 5:30 | The Challenge |
| 7:21 | Shaping Up the App |
| 9:33 | Benefits of Microservices |
| 10:50 | Kubernetes and NGINX to the Rescue |
| 11:27 | Our Microbloat v1 |
| 14:27 | Comparing Services |
| 15:24 | Why NGINX? |
| 17:50 | Microbloat v2 |
| | Additional Resources |

Introduction

Lee Calcote: My name is Lee Calcote. Today we’ll be talking about microservices, sockets, NGINX, and a little bit of Kubernetes as well.

While I’ll be the one speaking today, Yogi Porla also contributed a lot to this presentation. He’s not here today, but without him, this presentation wouldn’t have been possible.

Here are various ways you can reach out to me. I’m involved in quite a few things and I also host a couple of meetups here in Austin, which lets me stay connected with the community. I also do a bit of writing, a little bit of analysis.

One of the places that I write for is The New Stack. They just released the fourth installment of their ebook. If you want to know about container security, storage, or networking, it’s great. The networking chapter is particularly juicy (that was mine).

I’m also authoring a book on CoreOS, Tectonic, and Kubernetes.

Most recently, I’ve joined up with SolarWinds here in Austin.

1:56 Our App

Let’s talk about the app – the case study.

You guys know this app. You guys have seen this app. Sometimes it’s out on the beach, and other times it takes the shape of a 1‑GB JAR file. It’s all just monolithically stuffed in there.

So the point is, be careful what you feed your app.

2:39 Case Study

In this case, not only was our app a 1‑GB JAR file, but it was a piece of software written by a small startup here in town, MaxPlay, a game engine/design and development studio.

They were creating a collaborative game‑development environment. Basically a Google Docs for game development. Let’s say you’re creating a new sprite, or you’re working within a scene and you change the lighting, or you change the color of an object, or build out, or render a new object. This lets you do so in a collaborative, almost real‑time way; it lets others work with you on the same game and the same design.

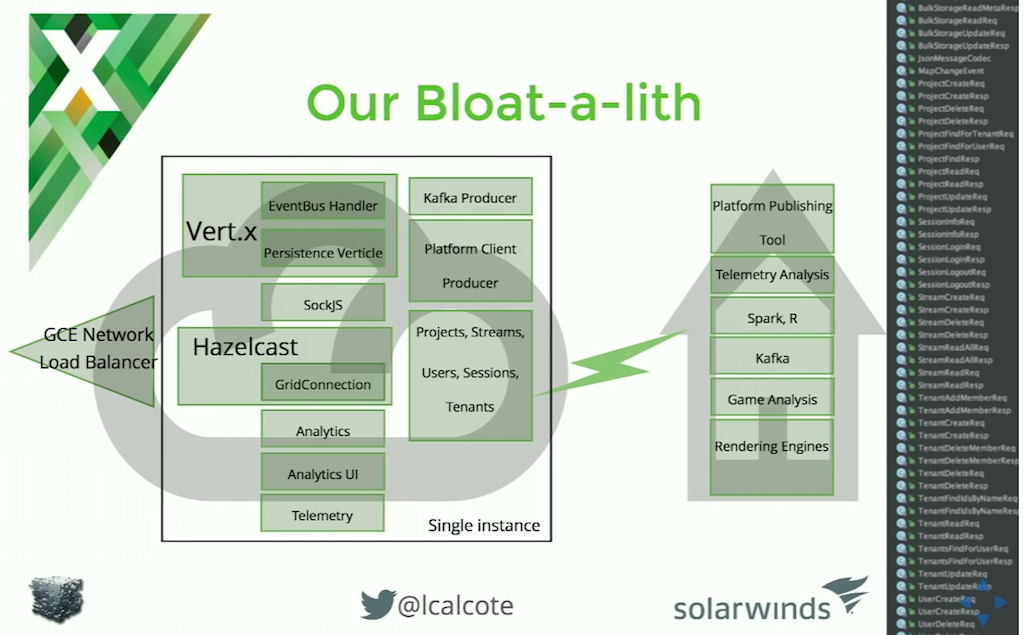

3:31 Our Bloat-a-Lith

This is what the bloat‑a‑lith looked like. The lightning bolt indicates connectivity.

You can see this is essentially a number of game‑design‑specific services hosted in the cloud. In this case, the cloud was Google Cloud Platform (GCP).

A few interesting frameworks were being used: Vert.x for the reactive services that were offered, Hazelcast, and SockJS. Initially, GCE network load balancing was being used.

These cloud‑based services were joined up with some services hosted on premises, which were a big‑data deployment focused on analytics. The analytics looked at telemetry – how people are using the design software, as well as, once games were published, the manner in which games were being played, and what features were being used. These two aspects worked in unison.

One thing to take note of is that 1‑GB JAR file I mentioned is contained within the single instance shown on the slide. That means that when you need to scale up, you don’t scale out – you literally kind of just scale up. You re‑create that instance, and you have multiples of it. That seemed to work well up to about 10 users, but then performance started to go downhill. Something needed to change.

5:30 The Challenge

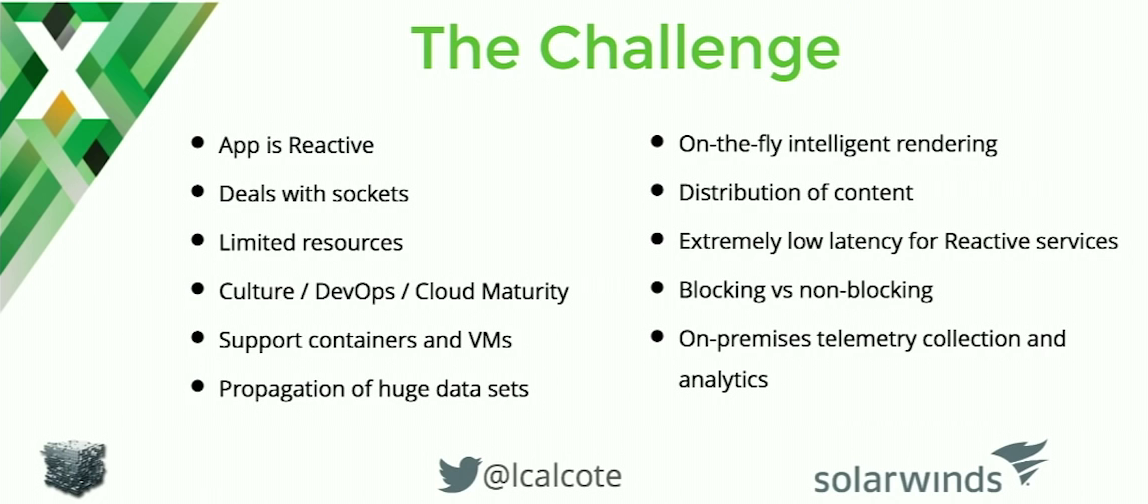

Here are some of the challenges we faced and needed to overcome.

Our app is reactive. To deliver the intended user experience, our app had to be very responsive; there was a lot of eventing happening. For example, one game designer might make a change to the lighting of a scene, so that would need to be re‑rendered, and that event sent over to another user in a remote location who is working on the same scene, so that they could collaboratively design that. There were some significant performance requirements there, to make sure that the user experience was extremely low‑latency.

We also had some challenges around distribution of content. Some of the content was the objects that I just talked about, like a Maya object that was rendered. Other content was audio or video that was part of the game that you’re designing. Some of the video ended up being 4K video, so content size could be substantial.

We’re not going to go over how to solve all of the challenges today, but this is just some background to help you understand how NGINX and the other technologies we decided to use came into play.

There was also a softer side of the challenge, with regard to the engineers at MaxPlay and their heritage, and how much DevOps and cloud experience they had. A lot of them were used to working on more enterprise‑architected software. That kind of background made it a scary proposition to move to microservices and into the cloud. Change is not always easy.

7:21 Shaping Up the App

We figured out what the challenges were, and based on that list, we started to shape this app up.

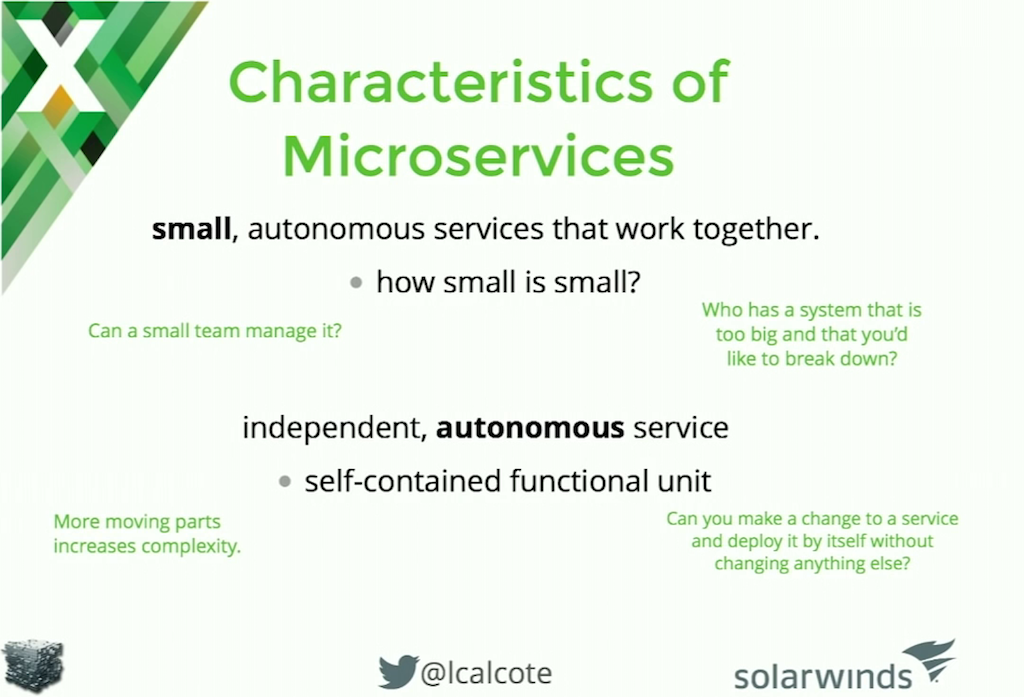

Many of you are into microservices by now. You may have your own ideas, your own definitions of what a microservice is. In fact, for some of you, microservices may feel like they have been around forever, and in some respects, they sort of have.

I’m not going to go deep into defining microservices. There are plenty of talks, presentations, and blog posts that cover that. But here are a couple of interesting questions to ask yourself if you’re trying to do microservices right.

Microservices are small. They’re autonomous units, autonomous pieces of functionality. But what is small? I think we have an easier time sensing when something is big. I said “1‑GB JAR file”, and I sensed a visceral “ew” from the audience. We have a good sense of when something is too big. But how small is small enough? I think one of the things we can ask is, “Can a small team manage that whole microservice?”

Another characteristic of a microservice is that it’s autonomous. It should be able to function on its own, and have its own versioning. One test is whether you can make a change to the microservice, upgrade it, and version it independently. Can you deploy a new version of a service without upgrading anything else?

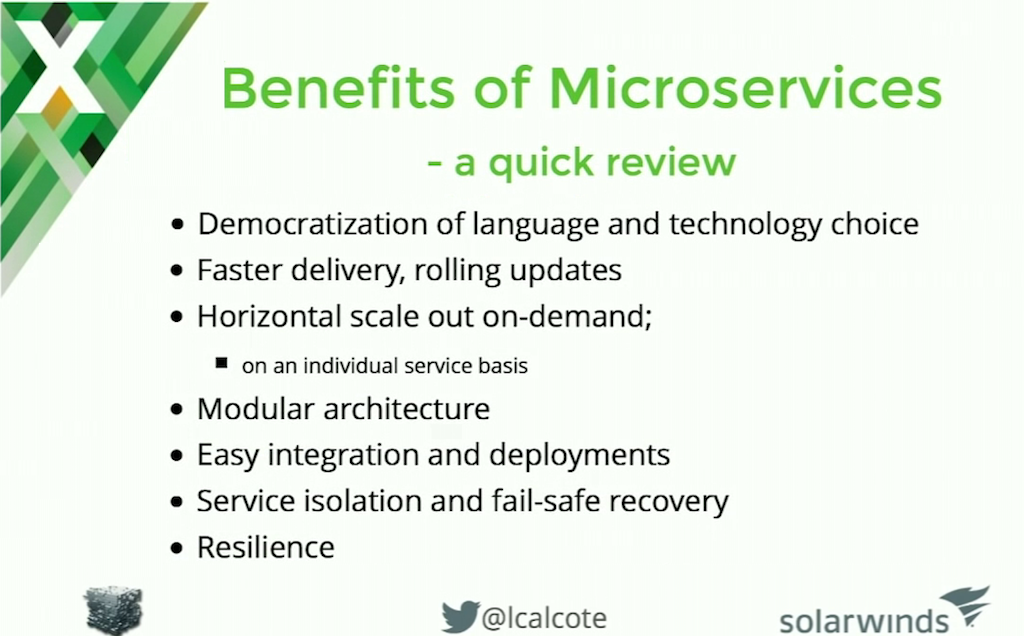

9:33 Benefits of Microservices

I won’t go through all of these, but I do want to highlight one major point. One of the beautiful things about microservices is that there’s a democratization of technologies, frameworks, and languages.

Microservices are encapsulated. Your contract is the API that the other services are speaking to. While you probably wouldn’t want to go hog wild and run Oracle here, MySQL there, PostgreSQL here – just a bunch of random technologies – there is some amount of freedom in that regard.

Service owners get to choose best‑fit technologies or best‑fit languages for the problem they’re trying to solve. As long as they maintain that contract (API) on the outside, they get to make that choice. For me that’s one of the huge benefits of microservices.
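As a rough illustration of the contract idea, here is a minimal Python sketch. The `TelemetryStore` interface and both implementations are hypothetical names invented for this example and are not taken from the MaxPlay system. The point is only that callers depend on the contract, so a service owner can change the technology behind it.

```python
from typing import Protocol


class TelemetryStore(Protocol):
    """Hypothetical contract: the only thing other services may rely on."""

    def record(self, event: str) -> None: ...

    def count(self, event: str) -> int: ...


class InMemoryStore:
    """One possible implementation, backed by a plain dict of counters."""

    def __init__(self) -> None:
        self._counts: dict[str, int] = {}

    def record(self, event: str) -> None:
        self._counts[event] = self._counts.get(event, 0) + 1

    def count(self, event: str) -> int:
        return self._counts.get(event, 0)


class ListBackedStore:
    """A different implementation choice behind the same contract."""

    def __init__(self) -> None:
        self._events: list[str] = []

    def record(self, event: str) -> None:
        self._events.append(event)

    def count(self, event: str) -> int:
        return self._events.count(event)


def report(store: TelemetryStore) -> int:
    """A caller that knows only the contract, not the implementation."""
    store.record("scene.lighting.changed")
    store.record("scene.lighting.changed")
    return store.count("scene.lighting.changed")
```

Either store can be passed to `report` without the caller changing, which is the freedom the contract is meant to protect.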

There are also a lot of other benefits listed here; you can read them, but I won’t go into detail. Some of them aren’t necessarily specific to microservices, but [also apply to] continuous delivery and SaaS in general.

10:50 Kubernetes and NGINX to the Rescue

Coming to the rescue are these two technologies: Kubernetes and NGINX.

Because MaxPlay had a relationship with Google, we chose GCP as the cloud environment. As we started to break down that bloat‑a‑lith into smaller microservice components, Kubernetes came up as the first choice in terms of container orchestration, and NGINX came up first for us for handling the two basic use cases discussed on the next slide.

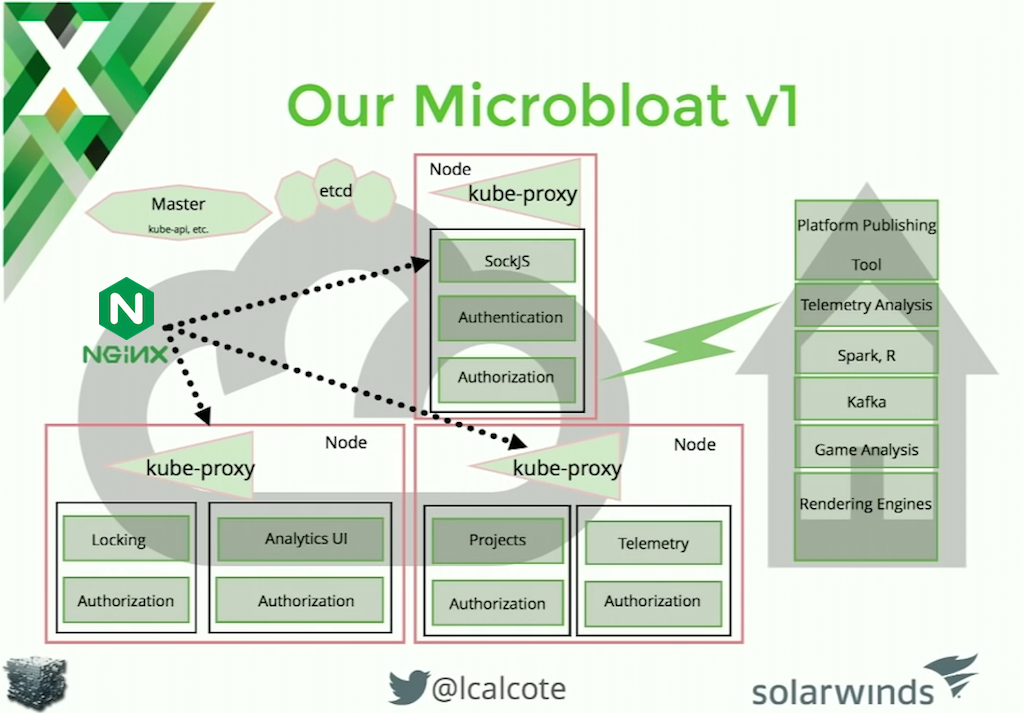

11:27 Our Microbloat v1

Much of what we’re going to talk about today [the two use cases] is just basic functionality. Number one is SSL termination, or SSL offloading. You’d think that by now that would be a pretty ubiquitous capability. And it is getting to be, but it’s not entirely there yet. Kubernetes, for example, as of its current release 1.3, doesn’t natively support [SSL termination] inside kube‑proxy. That’s where NGINX comes into play.

It’s perhaps not that easy to tell from the slide, but we’re getting SSL offloading from NGINX. NGINX is directing and still leveraging the native load balancer of Kubernetes, kube‑proxy, but it’s decrypting SSL traffic up front, and taking that load off of kube‑proxy.
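To make the idea of SSL offloading concrete, here is a conceptual sketch in Python. It is not how NGINX is implemented or configured; it only shows the pattern of decrypting traffic at the edge and relaying plaintext to a backend. The port numbers and certificate paths in the usage note are hypothetical.

```python
import asyncio
import ssl
from typing import Optional


def make_tls_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Build a server-side TLS context from (hypothetical) cert and key paths."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    context.load_cert_chain(certfile, keyfile)
    return context


async def pipe(reader, writer) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while True:
            chunk = await reader.read(65536)
            if not chunk:
                break
            writer.write(chunk)
            await writer.drain()
    finally:
        writer.close()


async def serve(listen_port: int, backend_host: str, backend_port: int,
                tls_context: Optional[ssl.SSLContext]) -> None:
    """Accept TLS connections and relay the decrypted bytes to a plaintext backend."""

    async def on_client(client_reader, client_writer) -> None:
        backend_reader, backend_writer = await asyncio.open_connection(
            backend_host, backend_port)
        await asyncio.gather(
            pipe(client_reader, backend_writer),  # request bytes, already decrypted
            pipe(backend_reader, client_writer),  # response bytes, encrypted on the way out
        )

    server = await asyncio.start_server(
        on_client, host="0.0.0.0", port=listen_port, ssl=tls_context)
    async with server:
        await server.serve_forever()
```

With a real certificate and key in place, something like `asyncio.run(serve(8443, "127.0.0.1", 8080, make_tls_context("server.crt", "server.key")))` would start the relay. The backend only ever sees plaintext, which is the load that offloading removes from it.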

The second initial use case was around load balancing for WebSocket. This is shown by the upper black arrow going directly to SockJS. This was the framework that this startup was using for socket connections and this let us achieve high‑performance eventing, such as when someone made a change in one scene and somebody else needed to receive that.
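A WebSocket connection starts as an ordinary HTTP request that asks to be upgraded, which is why a proxy in front of it needs explicit handling. The sketch below, in Python, shows the parts of that handshake a proxy cares about: detecting the upgrade request, passing the `Upgrade` and `Connection` headers upstream (they are hop-by-hop headers, so they are not forwarded automatically), and the accept value defined by RFC 6455. The function names are made up for this example, and this is not NGINX code.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"

# Headers that apply to a single connection and are dropped by proxies.
HOP_BY_HOP = {"connection", "upgrade", "keep-alive", "proxy-connection",
              "te", "trailer", "transfer-encoding"}


def is_websocket_upgrade(headers: dict[str, str]) -> bool:
    """True if the request asks to switch from HTTP to WebSocket."""
    lowered = {k.lower(): v for k, v in headers.items()}
    tokens = [t.strip().lower() for t in lowered.get("connection", "").split(",")]
    return lowered.get("upgrade", "").lower() == "websocket" and "upgrade" in tokens


def upstream_headers(headers: dict[str, str]) -> dict[str, str]:
    """Headers a proxy would send to the backend for this request."""
    forwarded = {k: v for k, v in headers.items() if k.lower() not in HOP_BY_HOP}
    if is_websocket_upgrade(headers):
        # Set the handshake headers again so the upgrade reaches the backend.
        forwarded["Upgrade"] = "websocket"
        forwarded["Connection"] = "Upgrade"
    return forwarded


def accept_key(sec_websocket_key: str) -> str:
    """Value the server returns in Sec-WebSocket-Accept (RFC 6455)."""
    digest = hashlib.sha1((sec_websocket_key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")
```

Once the handshake completes, the proxy simply relays bytes in both directions for the life of the connection, so load balancing happens per connection rather than per message.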

At this point we still had our on‑premises, big‑data deployment [the vertical stack on the right in the slide], but we were beginning to step into this new land of cloud architecture. We were leveraging very basic capabilities of NGINX and also very basic capabilities of Kubernetes. Just what you see here on the slide in terms of pods and services, and also a little bit in terms of DaemonSets.

In case you’re not familiar with a DaemonSet, let me walk you through an example. You can see authorization as a component in each of the pods, which on the slide are represented by the black‑outlined boxes. Authorization functionality, in the pod context, means every time someone wants to use a service, the HTTP request needs to be checked and verified to make sure the caller is authorized. To implement that in our architecture, we used Kubernetes DaemonSets to place this capability on every node.

[In answer to a question from the audience:] Also, in case it’s not clear, authorization and authentication are different capabilities. Authentication is confirming who you are and authorization controls what you can do.
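The difference between the two checks can be sketched in a few lines of Python. Everything here is hypothetical: the tokens, users, and permission names are invented for illustration, and the talk does not describe how MaxPlay's authorization component was actually built.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical data: bearer token -> user, and user -> allowed actions.
TOKENS = {"token-alice": "alice", "token-bob": "bob"}
PERMISSIONS = {"alice": {"scene:read", "scene:write"}, "bob": {"scene:read"}}


@dataclass
class Decision:
    status: int   # HTTP-style status code
    reason: str


def authenticate(headers: dict[str, str]) -> Optional[str]:
    """Authentication: work out who the caller is, or None if unknown."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme != "Bearer":
        return None
    return TOKENS.get(token)


def authorize(user: str, action: str) -> bool:
    """Authorization: decide whether a known caller may perform the action."""
    return action in PERMISSIONS.get(user, set())


def check_request(headers: dict[str, str], action: str) -> Decision:
    """Run both checks in order for one incoming HTTP request."""
    user = authenticate(headers)
    if user is None:
        return Decision(401, "unauthenticated")
    if not authorize(user, action):
        return Decision(403, "forbidden")
    return Decision(200, "ok")
```

A caller with no valid token fails the first check (401), while a known caller without the needed permission fails the second (403).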

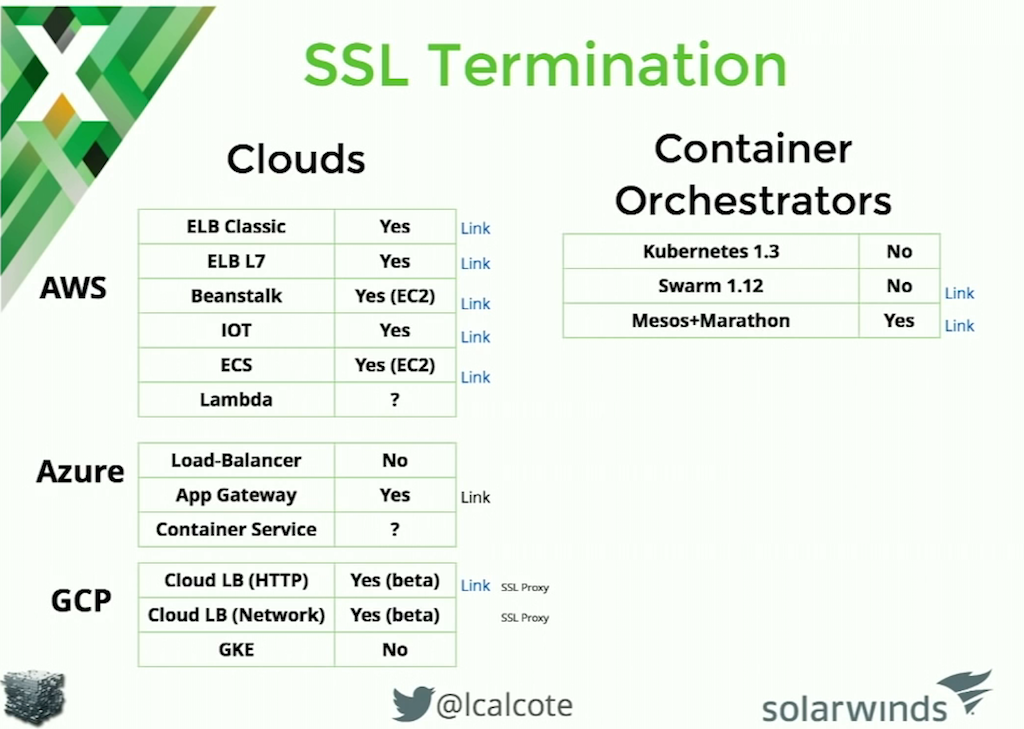

14:27 Comparing Services

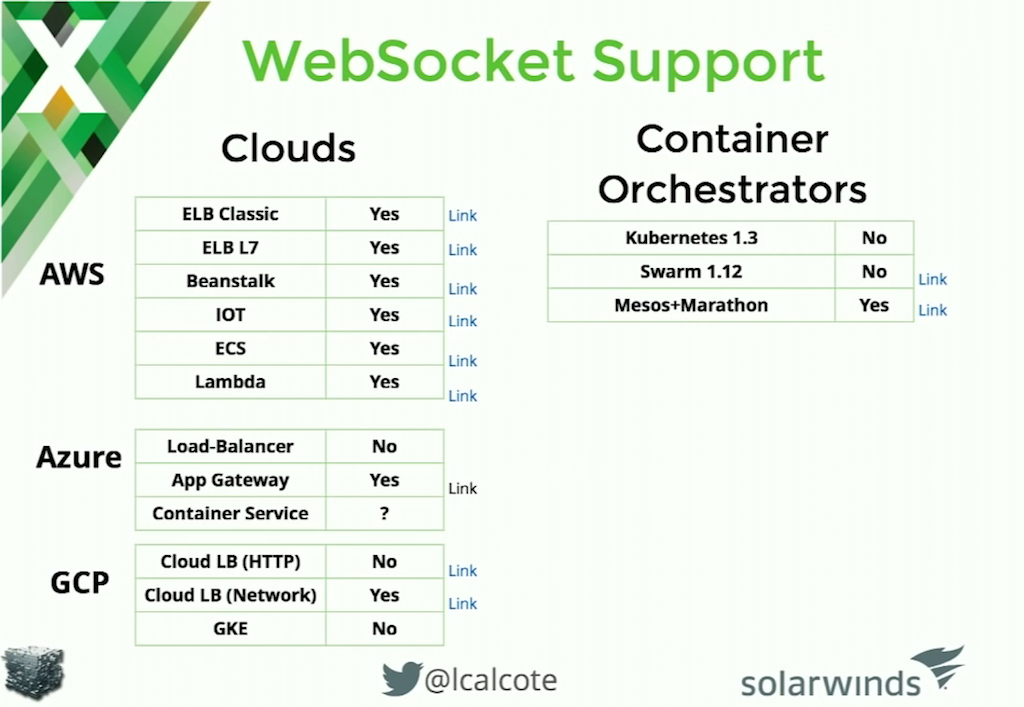

Let’s look at a comparison of some of the cloud services and container orchestration tools out there. I have only a few minutes left, but you can come back to the slides for this section later to look at the details.

[Editor – On the following slides, at the right end of most rows in the tables there’s a link to vendor documentation. The links are live on the slides that are accessible via the link in the previous sentence.]

Even when it comes to support for basic functionality like SSL termination or SSL offloading, you’ll find it’s a mixed bag out there. Things are changing quickly, though. For example, GCP is now starting to add SSL capability as a beta feature; GCP calls it SSL proxy.

Here’s a breakdown of WebSocket support among the different cloud platforms and tools. Support is a bit better than for SSL termination.

15:24 Why NGINX?

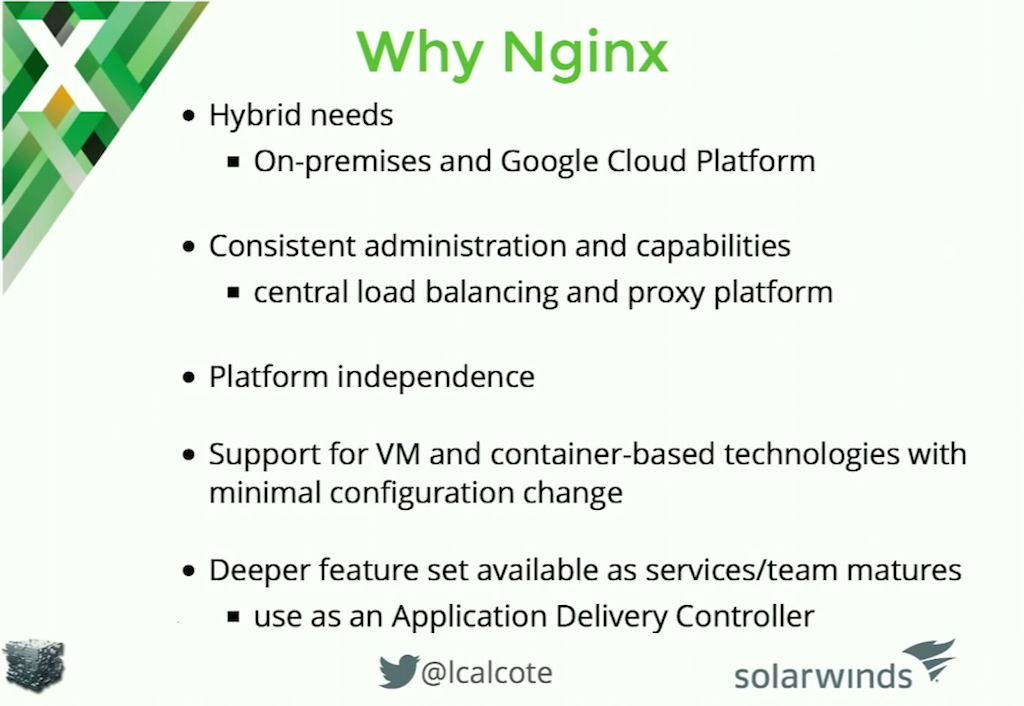

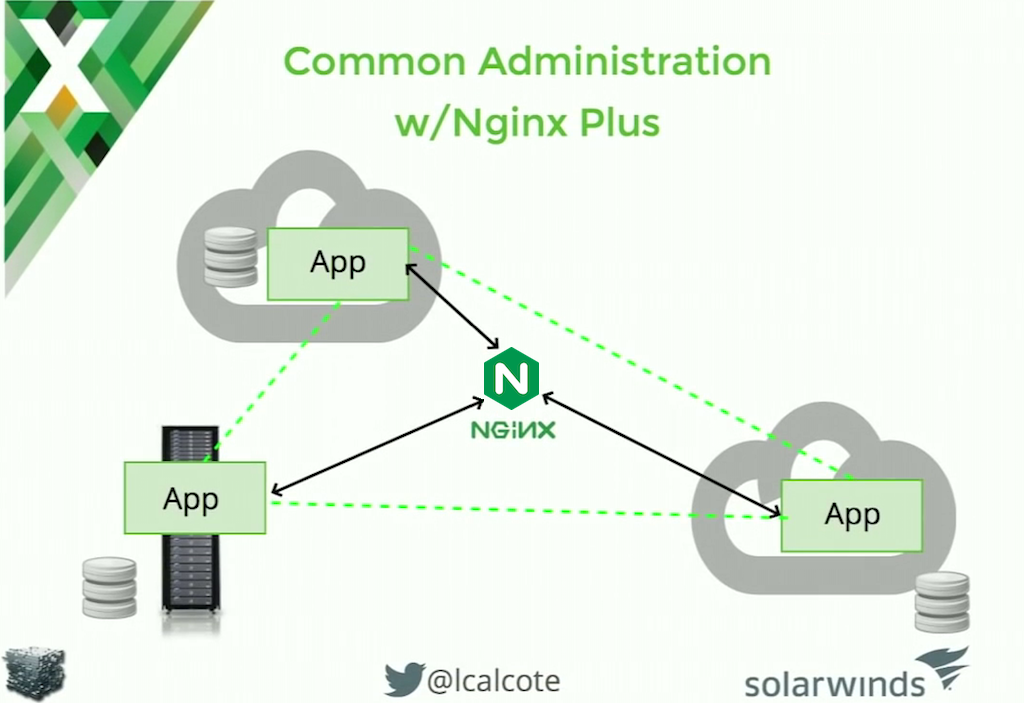

Why did we choose NGINX versus kube‑proxy? One of the reasons is that we were in a hybrid cloud scenario.

We had load balancing both on premises and in the cloud. It was nice to be able to get a consistent administrative interface [from NGINX], and to be able to use the tools you already know. That was definitely an attraction for our team.

There’s also the fact that whether we run these services on bare metal, in VMs, or in containers, we could have that same consistent administrative experience and the same consistent capabilities, almost irrespective of what technologies are being used.

I’ll note that in the container space, you might find different capabilities available depending on how you deploy NGINX. I’ve been talking about NGINX running as a stand‑alone entity running in its own VM. You can also deploy NGINX as a Kubernetes Ingress controller [for more about this, see 17:50 Microbloat v2 below].

Finally, as the team grew and matured in its skill set, we anticipated they would begin to look at additional capabilities like those on the next two slides. We knew that NGINX Plus would be there with the feature set to support us.

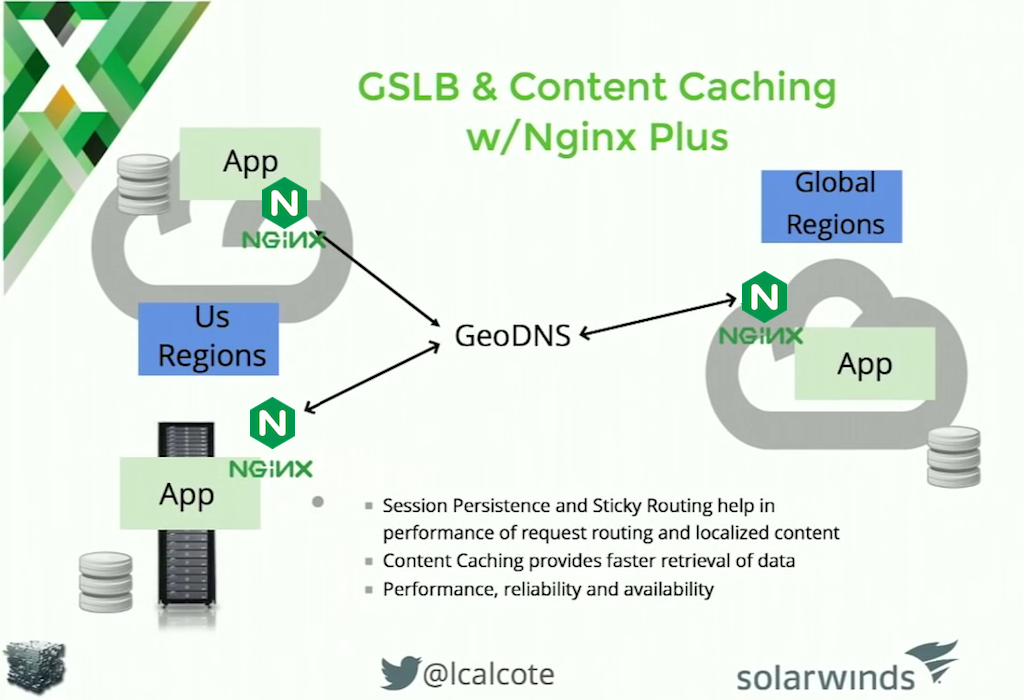

NGINX Plus offered us things like content caching, or GeoDNS [global server load balancing], letting us serve up audio and video files locally. As we matured into these types of use cases, we knew NGINX would be there for us.

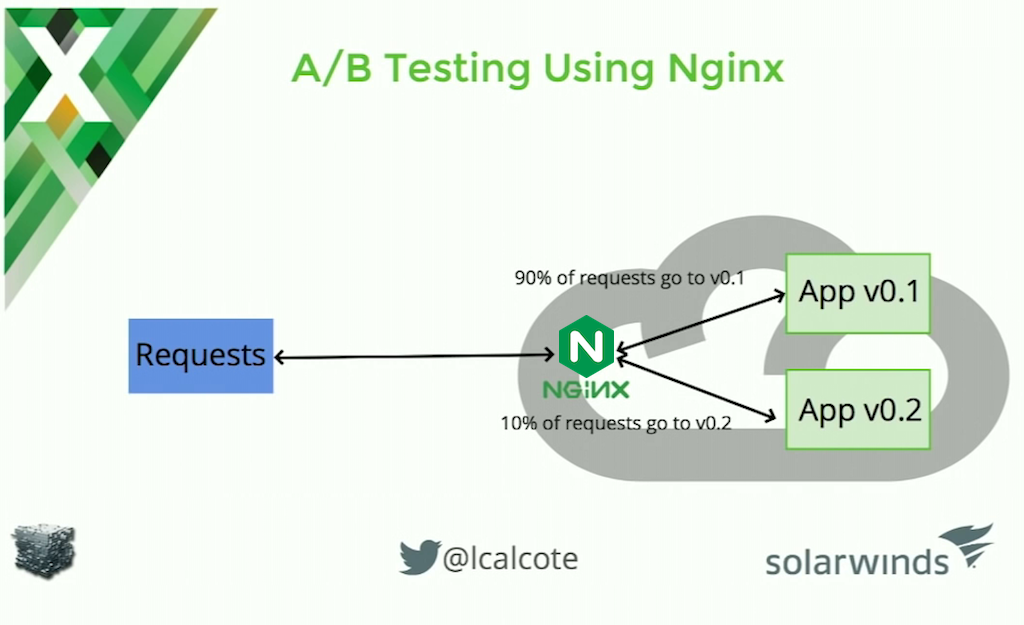

Another use case was A/B testing, helping us try out some of our new functionality with new users and do testing.
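One common way to split traffic for an A/B test is to hash a stable identifier so that each user always lands in the same variant. The Python sketch below shows that general technique; it is not NGINX configuration, and the `salt` value is a hypothetical experiment name.

```python
import hashlib


def assign_variant(user_id: str, percent_b: float, salt: str = "experiment-1") -> str:
    """Deterministically place a user in variant "A" or "B".

    percent_b is the share of users (0 to 100) that should see variant B.
    The salt is a hypothetical experiment name, so that separate
    experiments split the same users differently.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Map the hash onto 10,000 buckets, i.e. steps of 0.01 percent.
    bucket = int.from_bytes(digest[:8], "big") % 10_000
    return "B" if bucket < percent_b * 100 else "A"
```

Because the assignment depends only on the identifier and the salt, no per-user state has to be stored, and raising `percent_b` moves additional users to B without reshuffling the ones already there.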

The team didn’t get to these features [GSLB, content caching, and A/B testing] at first, but the scalability NGINX provided us – having this capability there and allowing the team to grow into it – was part of the reason we chose it.

17:50 Microbloat v2

In our Microbloat version 1, NGINX was deployed as a stand‑alone entity running in its own VM, but you can also deploy NGINX as a Kubernetes Ingress controller.

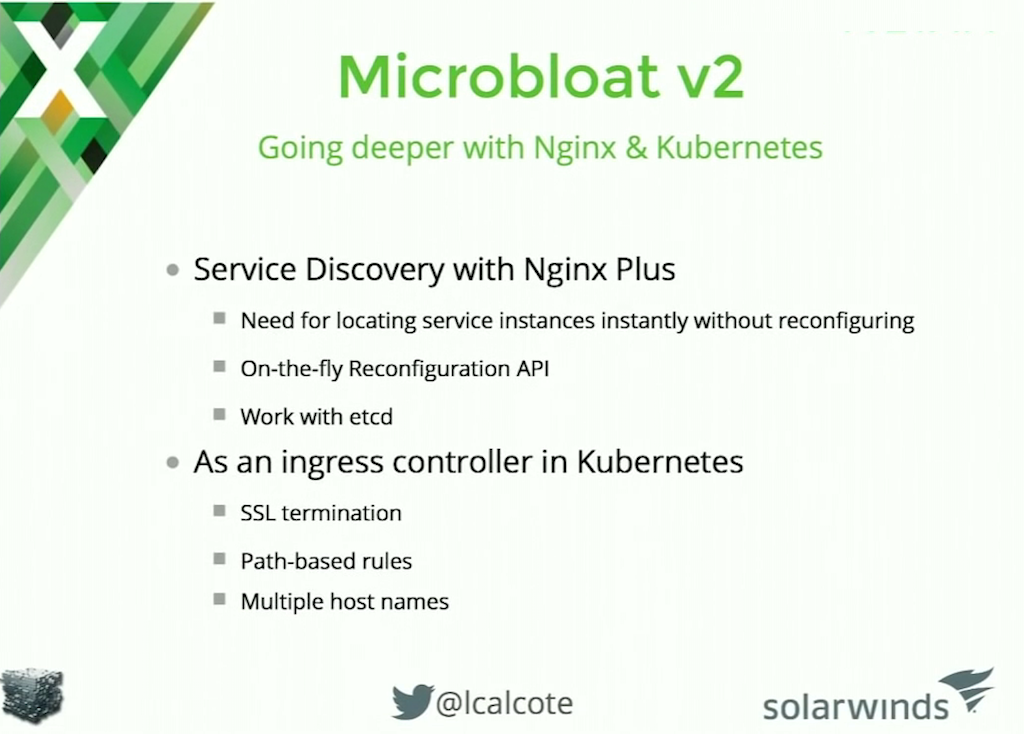

As we grew and matured our architecture into version 2, we wanted to get tighter integration between Kubernetes and NGINX. We did that by taking advantage of this Kubernetes construct called Ingress.

Ingress defines the way in which network traffic is handled as it comes into the system. You can choose which component, or which Ingress controller, services that traffic. In our case we had NGINX as an Ingress controller. The nice thing is, with NGINX Plus, there’s some tightly integrated service discovery that happens there.
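To give a feel for what Ingress rules express, here is a simplified Python sketch of host- and path-based routing to backend services. It is loosely modeled on the idea of Ingress rules, not on the actual controller logic, and the host and service names used with it are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    host: str          # hostname the rule applies to
    path_prefix: str   # URL path prefix, for example "/assets"
    service: str       # name of the backend service (hypothetical)


def pick_service(rules: list[Rule], host: str, path: str) -> Optional[str]:
    """Return the backend for a request, preferring the longest matching prefix."""

    def matches(rule: Rule) -> bool:
        if rule.host != host:
            return False
        prefix = rule.path_prefix.rstrip("/")
        # Match whole path segments: "/api" matches "/api/x" but not "/apiary".
        return prefix == "" or path == prefix or path.startswith(prefix + "/")

    candidates = [r for r in rules if matches(r)]
    if not candidates:
        return None
    return max(candidates, key=lambda r: len(r.path_prefix)).service
```

For example, with rules for `/`, `/events`, and `/assets` on one host, a request for `/events/123` would go to the service behind `/events`, and anything unmatched would fall through to the `/` rule.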

[Editor – NGINX, Inc. has developed Ingress controllers for both NGINX Plus and open source NGINX; see the Additional Resources.]

So again, the team didn’t need this immediately. But as we scaled, as we grew and expanded our use cases, we would.

Additional Resources

- Moving to Microservices: Highlights from NGINX

- NGINX and NGINX Plus Ingress Controllers for Kubernetes Load Balancing

- Using NGINX as a WebSocket Proxy

To try NGINX Plus, start your free 30‑day trial today or contact us for a demo.

The post Powering Microservices and Sockets Using NGINX and Kubernetes appeared first on NGINX.
