Running Microservices on OpenShift with the NGINX MRA’s Fabric Model

Kubernetes has become a popular container platform for running microservices applications. It provides many important features, such as fault tolerance, load balancing, service discovery, autoscaling, and rolling upgrades. These features allow you to develop, deploy, and manage containerized applications seamlessly across a cluster of machines.

Red Hat’s OpenShift 3 is a container platform built on top of Kubernetes. OpenShift extends Kubernetes with additional features and also includes commercial support, a requirement for many enterprise customers.

In this blog post we show how you can use NGINX Plus to improve microservices applications deployed on OpenShift. NGINX, Inc. has developed the Microservices Reference Architecture (MRA), which includes several models for deploying microservices that take advantage of the powerful capabilities of NGINX Plus. The most advanced of them, the Fabric Model, solves many of the challenges facing applications that use a microservices architecture. It is also highly portable, making it ideal for running on container platforms such as OpenShift.

Since OpenShift is built on top of Kubernetes, most of this blog post applies to Kubernetes as well. This article assumes familiarity with core OpenShift concepts, including Pods, Deployments, Services, and Routes. You can find an overview of the core concepts in the OpenShift documentation.

The Fabric Model in a Nutshell

When building microservices applications, the following challenges arise quite frequently:

- Making the application resilient to failures

- Supporting dynamic scaling

- Monitoring the application

- Securing the application

As we will detail in this post, the Fabric Model addresses these challenges more effectively than typical approaches to deploying applications on OpenShift. So, what is the Fabric Model?

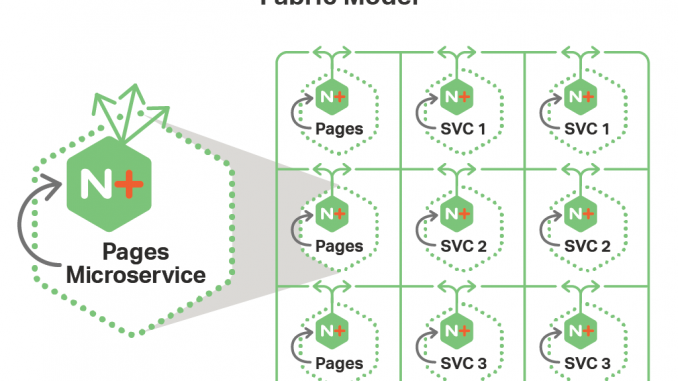

The Fabric Model provides a secure network, or a fabric, through which microservices communicate with each other. To create the fabric, every instance of a microservice is deployed with its own instance of NGINX Plus. And all communication of a microservice instance – incoming and outgoing requests – happens through its NGINX Plus instance. A little confused? Let’s look at the diagram below.

The diagram shows a microservices application deployed with the Fabric Model. The application consists of several microservices: Pages, SVC 1, SVC 2, and SVC 3. Each microservice instance is represented by a square. As we previously mentioned, every instance of each microservice has a corresponding instance of NGINX Plus. When an instance of Pages makes a request to an instance of SVC 1, the following happens:

- The Pages instance makes a local request to its NGINX Plus instance.

- The NGINX Plus instance passes the request to the NGINX Plus instance running alongside an SVC 1 instance.

- The NGINX Plus instance for the SVC 1 instance passes the request to that SVC 1 instance.
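To make the three hops concrete, the following toy Python model traces a request from Pages to SVC 1. It is a sketch, not how NGINX Plus works internally: the `Sidecar` class and `svc1_app` function are hypothetical, and each network hop is just a method call that appends to a trace.

```python
from typing import Callable, Dict, List, Optional

# A handler receives a request path plus a trace list and returns a response body.
Handler = Callable[[str, List[str]], str]


class Sidecar:
    """Hypothetical stand-in for the NGINX Plus instance paired with one service instance."""

    def __init__(self, name: str, local_app: Optional[Handler] = None):
        self.name = name
        self.local_app = local_app              # the service instance this proxy fronts
        self.routes: Dict[str, "Sidecar"] = {}  # sidecars of other services, by service name

    def outbound(self, service: str, path: str, trace: List[str]) -> str:
        """Hop 2: forward a request from the local app to the target service's sidecar."""
        remote = self.routes[service]
        trace.append(f"{self.name} -> {remote.name}")
        return remote.inbound(path, trace)

    def inbound(self, path: str, trace: List[str]) -> str:
        """Hop 3: hand an incoming request to the local service instance."""
        if self.local_app is None:
            raise RuntimeError(f"{self.name} has no local app")
        trace.append(f"{self.name} -> local app")
        return self.local_app(path, trace)


def svc1_app(path: str, trace: List[str]) -> str:
    return f"svc1 handled {path}"


pages_nginx = Sidecar("pages-nginx")
svc1_nginx = Sidecar("svc1-nginx", local_app=svc1_app)
pages_nginx.routes["svc1"] = svc1_nginx

trace = ["pages -> pages-nginx"]  # Hop 1: Pages makes a local request to its NGINX Plus
result = pages_nginx.outbound("svc1", "/items", trace)
print(result)  # svc1 handled /items
print(trace)
```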

As you can see, NGINX Plus is present on both sides of every interservice connection, which lets it address the challenges listed at the beginning of this section:

- NGINX Plus health checks make the application more resilient

- NGINX Plus load balancing and service discovery support dynamic scaling

- NGINX Plus live activity metrics provide monitoring of interservice communication

- NGINX Plus secures all connections with SSL to protect interservice communication

You can also configure additional NGINX Plus features to fine‑tune the performance of the fabric, such as connection timeouts, request and connection limits, and many others.

In this blog post we’re focusing on how to deploy the Fabric Model to enhance an OpenShift deployment. To learn more about the Fabric Model independent of a specific platform, see our blog.

Implementing the Fabric Model on OpenShift

For simplicity’s sake, we will describe how to implement the Fabric Model for a simple microservices application. The application has only two microservices: Frontend and Backend. A user makes a request to Frontend. To process the user request, Frontend makes an auxiliary request to Backend.

Implementing the Fabric Model for our application requires the following steps:

1. Deploy the Frontend and Backend microservices
2. Create the fabric:
   - Enable service discovery
   - Secure interservice communication
   - Configure health checks
3. Expose the application externally

In reality, Steps 1 and 2 happen together, because you deploy the preconfigured Fabric Model at the same time as your microservices. We present them as two steps to help you better understand the Fabric Model.

Step 1: Deploy Microservices

In the Fabric Model, communication between microservices goes through NGINX Plus, so an instance of NGINX Plus must be deployed along with each microservice instance. OpenShift gives us two options for packaging NGINX Plus and a microservice instance together:

- Deploy NGINX Plus within the same container as the microservice instance

- Deploy NGINX Plus in a separate container but within the same pod as the microservice instance

In the rest of this blog post we assume that each NGINX Plus instance and its microservice instance are deployed in the same pod, whether they share one container or run in separate containers. Note, however, that deploying NGINX Plus within the same container makes the Fabric Model implementation portable to platforms that lack the Pod concept.

As the Fabric Model requires, we configure Frontend to make requests to Backend through NGINX Plus. To accomplish that, we create a local endpoint for Backend (for example, http://localhost/backend).
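From the application's point of view, calling Backend through the fabric is just an HTTP request to localhost. Here is a minimal Python sketch of the Frontend side; the `BACKEND_URL` environment variable and the helper names are hypothetical, with the default taken from the example endpoint in the paragraph above.

```python
import os
import urllib.request
from urllib.parse import urljoin

# Hypothetical override; defaults to the local NGINX Plus endpoint for Backend.
BACKEND_BASE = os.environ.get("BACKEND_URL", "http://localhost/backend/")


def backend_url(path: str) -> str:
    """Build a URL that targets Backend through the local NGINX Plus instance."""
    return urljoin(BACKEND_BASE, path.lstrip("/"))


def fetch_from_backend(path: str, timeout: float = 2.0) -> bytes:
    # Frontend only ever talks to localhost; NGINX Plus forwards the request
    # across the fabric to one of the Backend instances.
    with urllib.request.urlopen(backend_url(path), timeout=timeout) as resp:
        return resp.read()
```

Keep the trailing slash on the base URL: `urljoin` replaces the last path segment of a base that lacks one, so `http://localhost/backend` joined with `items/42` would become `http://localhost/items/42`.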

To deploy our pods in the cluster, we can use OpenShift’s Deployment or ReplicationController resources.
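If NGINX Plus runs as a separate container in the same pod, the Deployment simply lists two containers. The following is a minimal sketch that builds such a manifest in Python and prints it as JSON, which `oc apply -f -` accepts. The image names are hypothetical, and the `apps/v1` API version assumes a cluster recent enough to serve it.

```python
import json


def fabric_deployment(name: str, app_image: str, nginx_image: str, replicas: int = 2) -> dict:
    """Build a Deployment whose pods run a microservice and its NGINX Plus instance side by side."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        # The microservice instance.
                        {"name": name, "image": app_image},
                        # The NGINX Plus instance that carries the pod's fabric traffic.
                        {
                            "name": "nginx-plus",
                            "image": nginx_image,
                            "ports": [{"containerPort": 443}],
                        },
                    ]
                },
            },
        },
    }


manifest = fabric_deployment("backend", "example/backend:1.0", "example/nginx-plus:latest")
print(json.dumps(manifest, indent=2))  # e.g. pipe into: oc apply -f -
```

Because containers in a pod share a network namespace, the microservice can bind to localhost and be reachable from outside the pod only through NGINX Plus.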

Step 2: Create the Fabric

Now our microservices are deployed and each microservice instance is connected to its local NGINX Plus instance.

For Frontend and Backend to communicate, we need to create the fabric – connect the NGINX Plus instances associated with our Frontend instances to the NGINX Plus instances associated with our Backend instances. (For the sake of brevity, we refer to these as the Frontend NGINX Plus instances and Backend NGINX Plus instances.)

Step 2a: Enable Service Discovery

First we need to enable the Frontend NGINX Plus instances to become aware of the Backend NGINX Plus instances, a process called service discovery. NGINX Plus supports service discovery using DNS; that is, it can query a DNS server to learn the IP addresses of microservice instances. OpenShift supports DNS service discovery with Kube DNS. We configure the Frontend NGINX Plus instances to get the IP addresses of the Backend NGINX Plus instances from Kube DNS, as shown in the figure.

To enable discovery of our Backend instances in OpenShift, we create a headless Kubernetes Service resource (one with clusterIP: None) to represent the set of Backend pods. A regular Service resolves to a single virtual IP address, whereas a headless Service lets NGINX Plus resolve the Backend service name into the IP addresses of the individual Backend instances via Kube DNS. NGINX Plus periodically re‑resolves the DNS name of Backend and so keeps its list of Backend IP addresses up to date.
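For the Service name to resolve to one address per Backend pod rather than a single virtual IP, the Service must be headless (`clusterIP: None`). The sketch below builds such a Service and uses Python's `socket.getaddrinfo` to show what a single DNS lookup returns. The `app` label and port 443 are assumptions.

```python
import socket


def headless_service(name: str, port: int = 443) -> dict:
    """A Service with clusterIP "None", so DNS returns one A record per ready pod."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "clusterIP": "None",
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": port}],
        },
    }


def resolve_instances(hostname: str, port: int = 443) -> list:
    """Return the sorted, de-duplicated IPv4 addresses DNS currently reports for hostname."""
    infos = socket.getaddrinfo(hostname, port, family=socket.AF_INET, type=socket.SOCK_STREAM)
    return sorted({info[4][0] for info in infos})
```

Inside the cluster you would call something like `resolve_instances("backend.myproject.svc.cluster.local")`, where `myproject` is a hypothetical project name. NGINX Plus does the equivalent itself, and repeats it periodically, when an upstream `server` uses the `resolve` parameter together with a `resolver` directive.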

Additionally, in this step we can configure NGINX Plus to use a load‑balancing algorithm that is better suited to the Fabric Model than the default, Round Robin.
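The post does not name the algorithm, so the sketch below uses least connections purely as an illustration (NGINX provides `least_conn`, and NGINX Plus also offers `least_time`). It simulates one slow Backend instance and counts how many requests each strategy leaves stuck on it; the peer names and request pattern are made up.

```python
import itertools
from collections import Counter


class RoundRobin:
    """The default strategy: hand out peers in a fixed rotation, ignoring their load."""

    def __init__(self, peers):
        self._cycle = itertools.cycle(peers)

    def pick(self, active):
        return next(self._cycle)


class LeastConnections:
    """Pick the peer with the fewest in-flight requests; ties go to the peer listed first."""

    def __init__(self, peers):
        self.peers = list(peers)

    def pick(self, active):
        return min(self.peers, key=lambda p: active[p])


def simulate(balancer, slow_peer, requests=6):
    """Send requests one at a time; requests to slow_peer stay open, all others finish at once."""
    active = Counter()
    for _ in range(requests):
        peer = balancer.pick(active)
        active[peer] += 1
        for p in list(active):
            if p != slow_peer:
                active[p] = 0  # fast peers complete their request immediately
    return active


peers = ["backend-1", "backend-2", "backend-3"]
print(simulate(RoundRobin(peers), "backend-1")["backend-1"])        # 2 requests stuck
print(simulate(LeastConnections(peers), "backend-1")["backend-1"])  # 1 request stuck
```

Round Robin keeps sending every third request to the slow instance, while least connections routes around it as soon as it has a request in flight.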

Step 2b: Secure Interservice Communication

In the previous step, we created a fabric that connects our microservices, but it is not yet secure. To secure our application, we encrypt all connections that go through the fabric by completing the following tasks:

- Configuring SSL termination on both the Backend NGINX Plus instances and (because Frontend is exposed to our users) the Frontend NGINX Plus instances

- Setting up SSL on the Frontend NGINX Plus instances for the connections to Backend

In addition, you need to install SSL certificates in the Docker containers. Certificates are secrets of the first order and need special care. Depending on your organization and its security controls, your options range from baking certificates into the container images to injecting them into the environment at deployment time. Stay tuned for an upcoming blog post on using an internal certificate authority with the Fabric Model.
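NGINX Plus handles both sides of this with directives such as `ssl_certificate` and `ssl_certificate_key` for termination, and `proxy_ssl_verify` and `proxy_ssl_trusted_certificate` for the connections to Backend. To show the same two roles in runnable form, here is a sketch using Python's `ssl` module. The file paths are whatever your certificate workflow provides, and the TLS 1.2 minimum is an assumed policy, not something the post specifies.

```python
import ssl
from typing import Optional


def upstream_tls_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client side (Frontend NGINX Plus -> Backend NGINX Plus): verify the peer's certificate.

    ca_file would typically point at an internal CA bundle; None falls back to system CAs.
    """
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed policy
    return ctx


def termination_tls_context(cert_file: str, key_file: str) -> ssl.SSLContext:
    """Server side: where SSL is terminated (Frontend and Backend NGINX Plus instances)."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed policy
    ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```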

To keep the fabric fast, we also configure the Frontend NGINX Plus instances to use persistent SSL connections to the Backend NGINX Plus instances.

After completing the instructions, we have the setup shown in the diagram:

By using NGINX Plus to secure interservice communication, we gain the following:

- Encryption/decryption is offloaded from the microservices software to NGINX Plus, which simplifies the software.

- Persistent SSL connections between NGINX Plus instances make the fabric fast: connections are reused, so there is no need to perform a resource‑intensive SSL handshake for every request.
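A back-of-the-envelope model shows why connection reuse matters. This sketch counts full SSL handshakes for 100 requests spread over two hypothetical Backend instances, with and without keeping connections open.

```python
def handshakes_without_reuse(requests):
    """Every request opens (and closes) its own SSL connection."""
    return len(requests)


def handshakes_with_reuse(requests):
    """Keep one open connection per peer; only the first request to a peer pays for a handshake."""
    open_connections = set()
    handshakes = 0
    for peer in requests:
        if peer not in open_connections:
            handshakes += 1  # full SSL handshake
            open_connections.add(peer)
        # otherwise the existing connection is reused
    return handshakes


traffic = ["backend-1", "backend-2"] * 50  # 100 requests spread over two instances
print(handshakes_without_reuse(traffic))  # 100
print(handshakes_with_reuse(traffic))     # 2
```

In NGINX terms, reuse toward an upstream is typically enabled with the `keepalive` directive in the `upstream` block plus `proxy_http_version 1.1` and an empty `Connection` header; `proxy_ssl_session_reuse` (on by default) further reduces the cost of the handshakes that do occur.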

Step 2c: Configure Health Checks

Now that the fabric is secure, we can improve it even further by configuring health checks.

We configure health checks on the Frontend NGINX Plus instances. If any instance of Backend becomes unavailable, the Frontend NGINX Plus instances quickly detect that and stop passing requests to it. Implementing health checks makes our application more resilient to failures. The diagram below shows health checks in action:

A health check is a synthetic request that NGINX Plus sends to service instances. It can be as simple as checking for a 200 response code, or as complex as searching the response body for specific character strings. Because NGINX Plus stops passing requests to instances that fail their health checks, health checks make it easy to implement the circuit breaker pattern in your microservices application.
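In NGINX Plus, active checks are configured with the `health_check` directive, whose `fails` and `passes` parameters and optional `match` block cover the simple and complex cases described above. The Python sketch below models that decision logic only; the thresholds and the `ok` body string are illustrative assumptions, and `probe` stands in for a real HTTP request to a hypothetical health endpoint.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Tuple

# A probe returns (status_code, body); in practice it would be an HTTP GET.
Probe = Callable[[], Tuple[int, str]]


@dataclass
class PeerHealth:
    fails: int = 2                       # consecutive failures before marking the peer unhealthy
    passes: int = 2                      # consecutive successes before marking it healthy again
    must_contain: Optional[str] = None   # optional string the response body must include
    healthy: bool = True
    _fail_streak: int = field(default=0, init=False, repr=False)
    _pass_streak: int = field(default=0, init=False, repr=False)

    def check(self, probe: Probe) -> bool:
        try:
            status, body = probe()
            ok = status == 200 and (self.must_contain is None or self.must_contain in body)
        except OSError:
            ok = False  # connection errors count as failures
        if ok:
            self._pass_streak += 1
            self._fail_streak = 0
            if not self.healthy and self._pass_streak >= self.passes:
                self.healthy = True
        else:
            self._fail_streak += 1
            self._pass_streak = 0
            if self.healthy and self._fail_streak >= self.fails:
                self.healthy = False
        return self.healthy
```

A load balancer then considers only peers whose `healthy` flag is set, which is what makes the check behave like a circuit breaker: a failing instance is taken out of rotation and readmitted only after it passes again.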

OpenShift provides health checks in the form of container Readiness and Liveness probes. For maximum resilience, we recommend using both NGINX Plus and OpenShift health checks.
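On the OpenShift side, probes are declared per container. Here is a sketch of the two probe definitions as Python data; the `/health` path, port, and timings are hypothetical, and the result would be merged into a container entry under `spec.template.spec.containers`.

```python
def container_probes(port: int = 443, path: str = "/health") -> dict:
    """Readiness and liveness probes for an NGINX Plus container (path and timings are hypothetical)."""
    http_get = {"path": path, "port": port, "scheme": "HTTPS"}
    return {
        # Readiness: while it fails, the pod is removed from the Service's endpoints.
        "readinessProbe": {"httpGet": http_get, "periodSeconds": 5, "failureThreshold": 3},
        # Liveness: if it keeps failing, OpenShift restarts the container.
        "livenessProbe": {"httpGet": dict(http_get), "initialDelaySeconds": 10, "periodSeconds": 10},
    }


nginx_container = {"name": "nginx-plus", "image": "example/nginx-plus:latest"}
nginx_container.update(container_probes())
```

The probes complement the NGINX Plus checks: a failing readiness probe removes the pod from the Service's endpoints (and so from the DNS answers of a headless Service), while a failing liveness probe makes OpenShift restart the container.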

Step 3: Expose the Application Externally

So far we have deployed our microservices and connected them via a fast, secure, and resilient fabric. The final step is to expose the application externally, making it available to our users.

As mentioned previously, Frontend is a client‑facing service. To expose it externally, we use the OpenShift router, the external load balancer for OpenShift, which is capable of simple HTTP and TCP load balancing. Connections to our application must be secure. Recall that in Step 2b we configured SSL termination for Frontend, which secures those connections. So to load balance Frontend externally, we configure the router as a TCP load balancer that passes the SSL traffic through to the Frontend instances without decrypting it (in OpenShift terms, a Route with passthrough TLS termination).
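A Route with passthrough termination tells the router to forward the encrypted TCP stream without decrypting it, leaving SSL termination to the Frontend NGINX Plus instances. Here is a minimal sketch of such a Route as Python data, printed as JSON. The hostname is hypothetical, and older OpenShift 3 releases may expect `apiVersion: v1` instead of `route.openshift.io/v1`.

```python
import json


def passthrough_route(service: str, host: str) -> dict:
    """An OpenShift Route that forwards SSL traffic untouched to the Service's pods."""
    return {
        "apiVersion": "route.openshift.io/v1",
        "kind": "Route",
        "metadata": {"name": service},
        "spec": {
            "host": host,
            "to": {"kind": "Service", "name": service},
            "port": {"targetPort": 443},
            # The router does not decrypt; the Frontend NGINX Plus instances terminate SSL.
            "tls": {"termination": "passthrough"},
        },
    }


route = passthrough_route("frontend", "app.example.com")
print(json.dumps(route, indent=2))
```

`oc create route passthrough` creates an equivalent object from the command line.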

The Benefits of Using the Fabric Model

The table below summarizes the benefits of using the Fabric Model to deploy a microservices application versus a typical deployment on OpenShift.

| Feature | OpenShift | OpenShift with Fabric Model |
|---|---|---|
| Interservice communication | An overlay network | Fast, secure network fabric with consistent properties on top of the overlay network |
| Internal load balancing | Basic TCP/UDP load balancing by the Kube proxy | Advanced HTTP load balancing by NGINX Plus |
| Resilience | Liveness/Readiness probes | Advanced HTTP probes, circuit breaker pattern |

Additionally, NGINX Plus provides detailed metrics about how your microservice instances are performing through the Status module. These metrics give you a high level of visibility into your microservices application.

An important benefit of the Fabric Model is portability: the model provides a consistent framework for interservice communication that works across different platforms. With minimal effort, we could move our application from OpenShift to another platform such as Docker Swarm or Mesosphere.

The Fabric Model is a powerful solution for medium‑ and large‑size applications. Although our sample application is small and simple, the principles we used to deploy it apply equally to much bigger microservices applications.

Summary

The Fabric Model extends the OpenShift platform with a powerful framework for connecting the microservices of your application. The model provides a fast, secure, consistent, and resilient fabric through which microservices are connected, addressing many of the concerns of developing applications with a microservices architecture.

If you are interested in learning more about the Fabric Model and seeing a live demo, contact the NGINX Professional Services team today. The team can help determine whether the Fabric Model suits your requirements and guide you through the process of implementing the model.

To try NGINX Plus, start your free 30‑day trial today or contact us for a demo.

The post Running Microservices on OpenShift with the NGINX MRA’s Fabric Model appeared first on NGINX.

Source: Running Microservices on OpenShift with the NGINX MRA’s Fabric Model
