Why DNS Is Critical for Modern Application Deployments

In a previous blog post, I explained how application outages can impact customer confidence and bring your business to a standstill. In today’s marketplace, failing to adapt and deliver new services to the market quickly can be just as harmful.

With the rise of DevOps and improvements to the tooling that supports continuous integration and continuous delivery (CI/CD), companies can deploy and ship code faster and more reliably. But when rolling out updates, they still want to mitigate the risk of a bad deployment and minimize the chance of downtime for their customers.

This blog post explores different deployment strategies DevOps and NetOps teams can use to seamlessly and safely deploy updates to production, and explains how DNS can work with specific deployment models.

Blue-Green Deployments

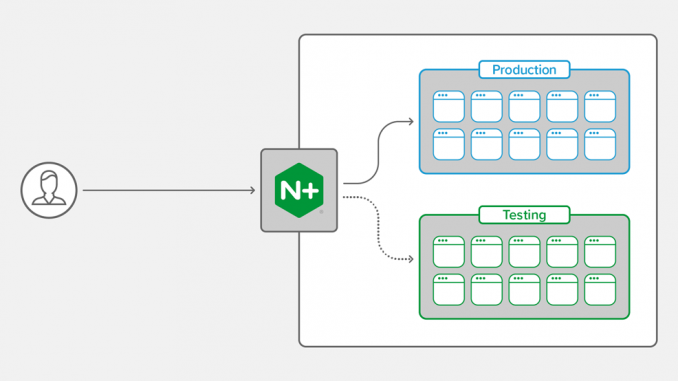

A blue‑green deployment is a strategy in which you maintain two identical environments, referred to as blue and green. As depicted in the graphic, NGINX Plus forwards user requests to the blue environment, where the production version of your app is running. The new version of the app runs in the green environment, where you can test it. Once all the tests check out, you update the NGINX Plus configuration to point traffic to the green environment.

The main benefit of this deployment strategy is that switching traffic between environments is simple with the right tooling in place – with NGINX Plus, for example, you can use the NGINX Plus API to change the set of backend servers, without the need for a restart. Risk is mitigated because if something goes wrong in the green environment, rolling back to the blue environment requires only a single call to the NGINX Plus API.
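
The cutover and rollback described above can be sketched as a small planning function. This is a minimal illustration, not the author's setup: the upstream name `app_backend`, the API version, and the blue/green labels are assumptions, and the request path follows the upstream-servers endpoint of the NGINX Plus API as I understand it, so check it against the API reference for your release. The sketch only builds the requests; it does not send them, and it issues one request per server.

```python
from dataclasses import dataclass

# Assumed values for illustration; adjust to your environment.
API_VERSION = 9           # assumed NGINX Plus API version
UPSTREAM = "app_backend"  # hypothetical upstream group name


@dataclass(frozen=True)
class Server:
    server_id: int  # ID of the server within the upstream group
    env: str        # "blue" or "green" (our own label, not an NGINX field)


def plan_switch(servers, target_env):
    """Return (method, path, body) tuples that send traffic only to target_env.

    Servers in the target environment are enabled first, so the upstream
    group never ends up with every server marked down.
    """
    if target_env not in {s.env for s in servers}:
        raise ValueError(f"no servers labeled {target_env!r}")
    # sorted() is stable: target-environment servers (key False) come first.
    ordered = sorted(servers, key=lambda s: s.env != target_env)
    return [
        (
            "PATCH",
            f"/api/{API_VERSION}/http/upstreams/{UPSTREAM}/servers/{s.server_id}",
            {"down": s.env != target_env},
        )
        for s in ordered
    ]


servers = [Server(0, "blue"), Server(1, "blue"), Server(2, "green"), Server(3, "green")]
cutover = plan_switch(servers, "green")  # promote the green environment
rollback = plan_switch(servers, "blue")  # return traffic to blue
```

Because the rollback plan is the same function called with the other label, reverting is as cheap as the original cutover.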

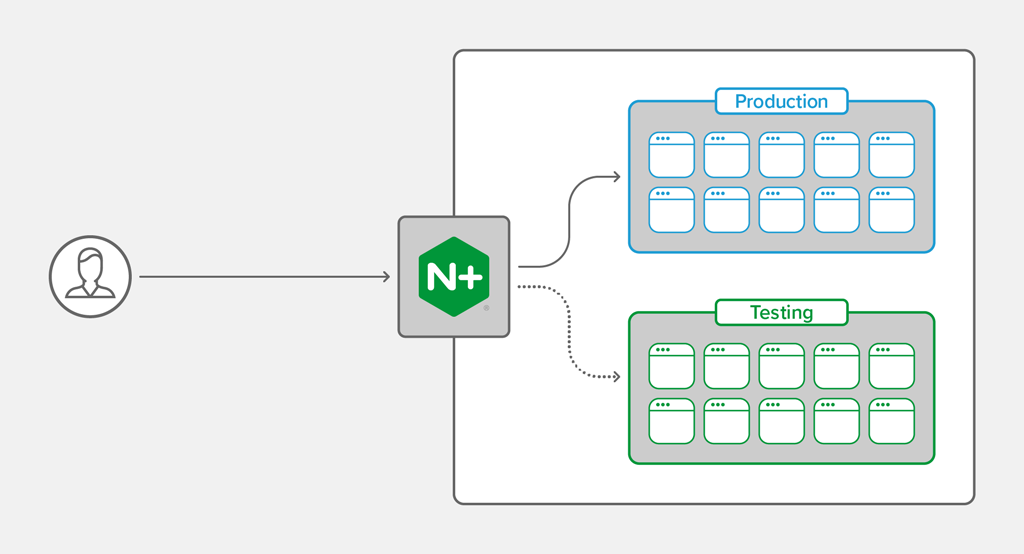

Rolling Blue-Green Deployments

A rolling blue‑green deployment builds on the same idea, but here you gradually divert traffic from the blue environment to the green environment until the latter receives 100% of it. A weighted load‑balancing algorithm lets you specify the balance between the two environments at each step.

The main benefit is that you can slowly ramp up traffic to the green environment as you gain confidence in the stability of the release. Any unexpected issues only impact a small number of users.
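
To make the gradual ramp concrete, here is a minimal sketch of a weight schedule and a weighted split. The function names and the five-stage schedule are hypothetical, and a real load balancer applies weights internally rather than by counting requests as this toy version does.

```python
def ramp_schedule(steps):
    """Return (blue_weight, green_weight) pairs going from 100/0 to 0/100."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    schedule = []
    for i in range(steps + 1):
        green = round(100 * i / steps)
        schedule.append((100 - green, green))
    return schedule


def pick_environment(weights, request_number):
    """Deterministically map a request counter onto blue or green by weight."""
    _blue, green = weights
    # Out of every 100 consecutive requests, `green` of them go to green.
    return "green" if request_number % 100 < green else "blue"


schedule = ramp_schedule(4)
# At the second stage (75/25), count how many of 1,000 requests reach green.
green_hits = sum(pick_environment(schedule[1], n) == "green" for n in range(1000))
```

At each stage you would hold the weights steady, watch the release, and move to the next pair only when you are satisfied with its stability.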

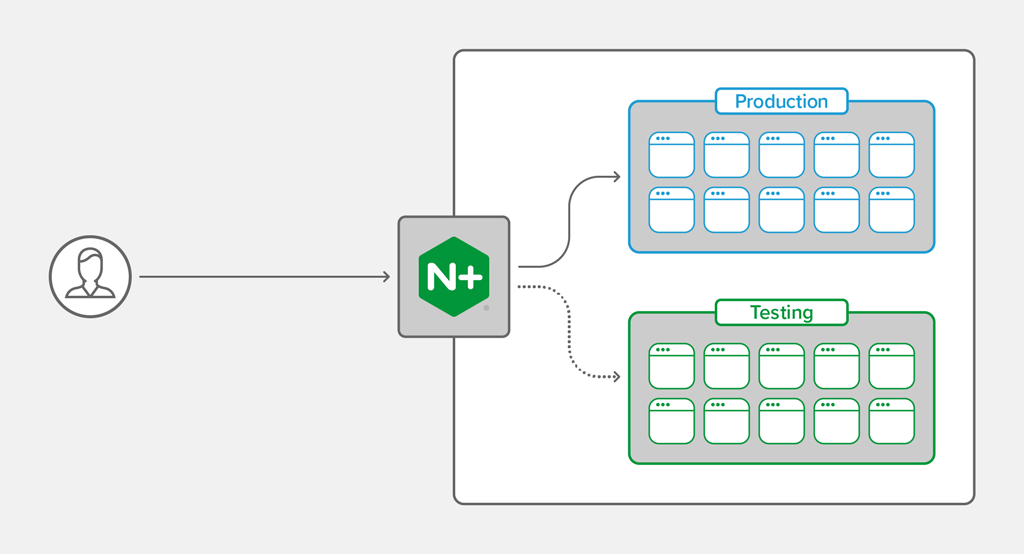

Canary Deployments

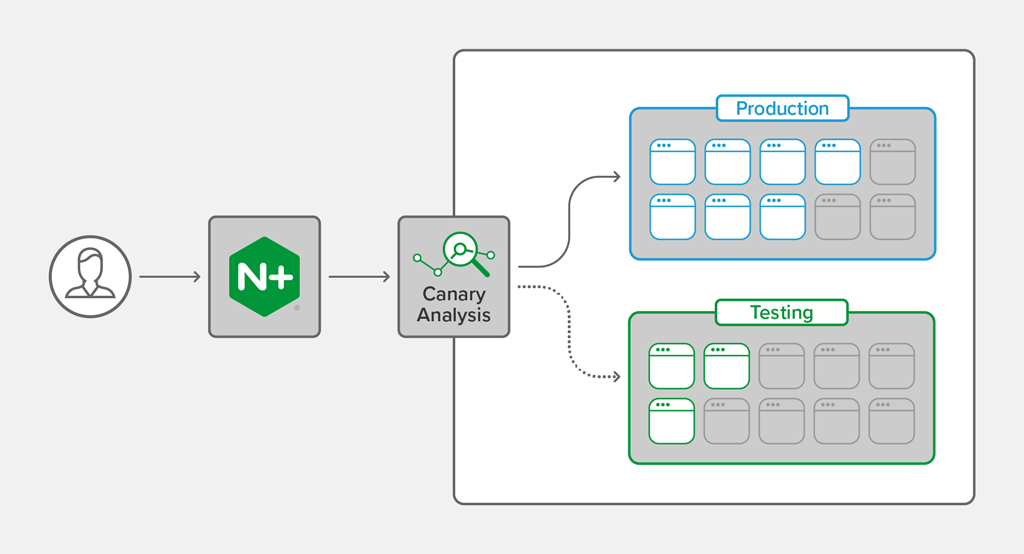

Canary releases are used to deliver a new deployment to a percentage of your application users or environments, similar to a rolling blue‑green deployment. The critical difference is that software monitors your app’s key performance metrics to ensure that the new deployment is operating as well as the current version in production.

As long as the canary software observes no significant change in your performance metrics, it gradually directs more traffic to the new environment until it reaches 100%.

To implement canary releases well, you need tooling capable of the precise traffic shifting required. Rollouts can also take longer, because an application with little traffic needs more time to produce enough data for a meaningful comparison.
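
The decision a canary controller makes at each step can be sketched as follows. The metrics, thresholds, and step size are all assumptions chosen for illustration; real canary tooling typically compares more signals and applies statistical tests rather than fixed cutoffs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metrics:
    error_rate: float      # fraction of failed requests, 0.0 to 1.0
    p95_latency_ms: float  # 95th-percentile response time in milliseconds


def is_healthy(baseline, canary, max_error_delta=0.01, max_latency_ratio=1.2):
    """Return True if the canary is not significantly worse than the baseline."""
    return (
        canary.error_rate <= baseline.error_rate + max_error_delta
        and canary.p95_latency_ms <= baseline.p95_latency_ms * max_latency_ratio
    )


def next_canary_percent(current, baseline, canary, step=10):
    """Advance the canary share by `step` when healthy, otherwise drop it to 0."""
    if not is_healthy(baseline, canary):
        return 0  # abort: send all traffic back to the current version
    return min(100, current + step)


baseline = Metrics(error_rate=0.010, p95_latency_ms=200.0)
good = Metrics(error_rate=0.012, p95_latency_ms=210.0)
bad = Metrics(error_rate=0.050, p95_latency_ms=210.0)

promoted = next_canary_percent(10, baseline, good)   # healthy: 10 -> 20
aborted = next_canary_percent(10, baseline, bad)     # unhealthy: 10 -> 0
```

Dropping straight to 0 on a failed check is one possible policy; stepping back by one increment and re-evaluating is another.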

Using DNS as a Part of Your Deployment Strategy

How can DNS play a role in implementing these deployment strategies?

DNS is the first touchpoint between your application and its users. Why not use it to direct clients to a new version of your application?

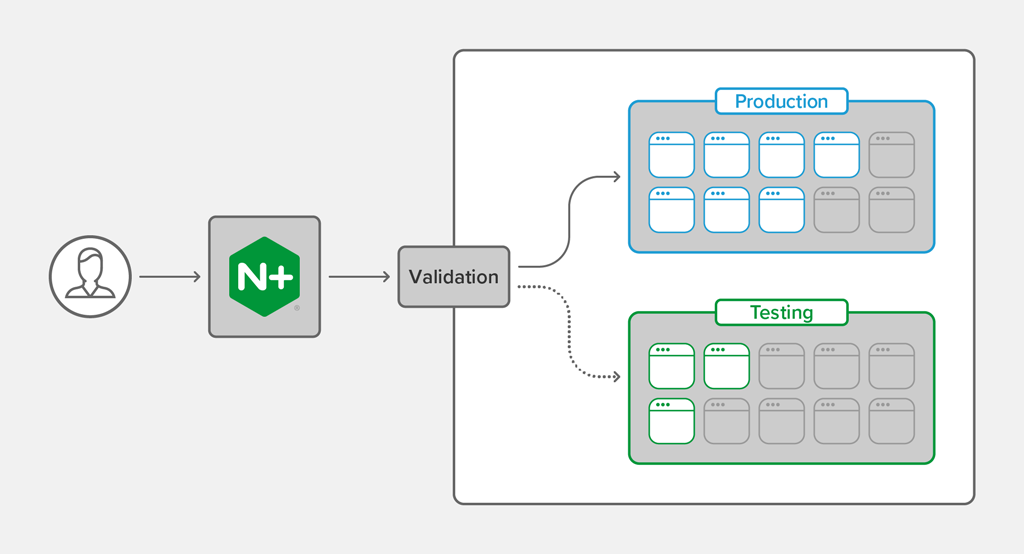

F5 DNS Load Balancer Cloud Service is a modern managed‑DNS solution that is easily integrated into your CI/CD pipelines. It also enables you to take into account a user’s location as a criterion for traffic steering while implementing these deployment strategies via DNS. Ratio‑based load‑balancing algorithms used with F5 DNS Load Balancer can help teams implement a blue‑green deployment across data centers, availability zones, or cloud providers.
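
As a rough illustration of ratio‑based steering combined with a location criterion, the sketch below picks a DNS answer from a per‑region pool in proportion to each endpoint's ratio. It does not use or represent the F5 DNS Load Balancer API: the pool layout, region names, and `resolve` function are hypothetical, and the addresses come from the ranges reserved for documentation.

```python
import random

# Hypothetical pools: for each region, endpoint address -> ratio.
# In "eu", blue (192.0.2.10) gets three answers for every one given to green.
POOLS = {
    "eu": {"192.0.2.10": 3, "198.51.100.10": 1},
    "us": {"203.0.113.10": 1, "203.0.113.20": 1},
}
DEFAULT_REGION = "us"  # assumed fallback for clients in unlisted regions


def resolve(region, rng=random):
    """Pick one endpoint for the client's region, in proportion to its ratio."""
    pool = POOLS.get(region, POOLS[DEFAULT_REGION])
    endpoints = list(pool)
    ratios = [pool[e] for e in endpoints]
    return rng.choices(endpoints, weights=ratios, k=1)[0]


# Simulate 10,000 lookups from the "eu" region with a seeded generator.
rng = random.Random(0)
answers = [resolve("eu", rng) for _ in range(10_000)]
blue_share = answers.count("192.0.2.10") / len(answers)  # roughly 0.75
```

Shifting a region from blue to green then amounts to changing the ratios in its pool, which is the kind of change a CI/CD pipeline can make through an API.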

A capable API‑driven DNS solution can also help you implement a rolling blue‑green strategy when migrating to a new cluster, as our partner Red Hat did in helping OpenShift customers migrate to OCP v4. By leveraging F5 DNS Load Balancer’s declarative API, OpenShift operators can safely test a new cluster and reduce risk by exposing it to only a small portion of their users at first. An Ansible playbook adjusts the ratio of traffic directed to the new cluster.

Summary

We’ve discussed several strategies for successfully rolling out updates to your live applications and how F5 DNS Load Balancer, as a modern global traffic management solution, can assist in those rollouts.

Please take advantage of our DNS Load Balancer free‑tier offering to see how NGINX software and F5 Cloud Service solutions can boost your applications’ performance and manageability.

You can also request access to our upcoming NGINX Plus Integration Early Access Preview for DNS Load Balancer. This integration enables you to configure your NGINX Plus instances to share their configurations and performance information with DNS Load Balancer to optimize global application traffic management.

The post Why DNS Is Critical for Modern Application Deployments appeared first on NGINX.
