Announcing NGINX Ingress Controller for Kubernetes Release 1.6.0

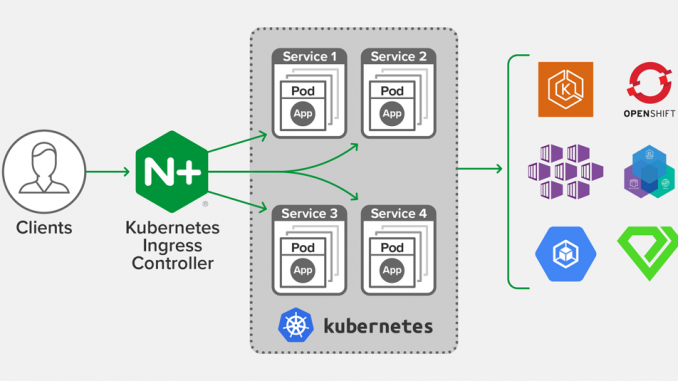

We are happy to announce release 1.6.0 of the NGINX Ingress Controller for Kubernetes. This release builds upon the development of our supported solution for Ingress load balancing on Kubernetes platforms, including Amazon Elastic Container Service for Kubernetes (EKS), the Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), Red Hat OpenShift, IBM Cloud Private, Diamanti, and others.

In the 1.6.0 release, NGINX Ingress Resources – a new configuration approach introduced earlier this year – are enabled by default and ready for production use.

NGINX Ingress Resources are an alternative to the standard Kubernetes Ingress Resource. They provide a richer and safer way to define complex ingress rules, and they support additional use cases. You can use NGINX Ingress Resources at the same time as standard Ingress Resources, which gives you the freedom to define each service by using the most appropriate approach.

Release 1.6.0 also includes:

- Improvements to NGINX Ingress Resources, adding support for richer load balancing behavior, more sophisticated request routing, redirects, direct responses, and blue-green and circuit breaker patterns;

- Support for OpenTracing, helping you to monitor and debug complex transactions;

- An improved security posture, with support to run the Ingress Controller as a non-root user.

With this release, we are also pleased to share a new documentation site, available at docs.nginx.com/nginx-ingress-controller.

There, you will find the complete changelog, including bug fixes, improvements, and changes.

What Is the NGINX Ingress Controller for Kubernetes?

The NGINX Ingress Controller for Kubernetes is a daemon that runs alongside either NGINX Open Source or NGINX Plus instances within a Kubernetes environment. This daemon monitors both Ingress resources and NGINX Ingress Resources to discover requests for services that require ingress load balancing. It then automatically configures NGINX or NGINX Plus to route and load balance traffic to these services.

Multiple NGINX Ingress controller implementations are available. The official NGINX implementation is high‑performance, production‑ready, and suitable for long‑term deployment. We focus on providing stability across releases, with features that can be deployed at enterprise scale. We provide full technical support to NGINX Plus subscribers at no additional cost, and NGINX Open Source users benefit from our focus on stability and supportability.

Introducing NGINX Ingress Resources

In Release 1.5.0, we previewed a new approach to configure request routing and load-balancing. We took advantage of how the Kubernetes API can be extended using custom resources and created a set of alternative resources – NGINX Ingress Resources – with the following goals:

- To support a wider range of real use cases. NGINX provides rich functionality, including load-balancing, advanced request routing, split-clients routing, and direct responses. With NGINX Ingress Resources, we make it easy for application teams to access these features when defining how to route traffic to their services.

- To reduce complexity by eliminating the dependency on annotations for advanced features and fine-tuning. Annotations are a general-purpose extension mechanism for all Kubernetes resources, but they are not suited for expressing complex configuration rules and they lack type safety. With NGINX Ingress Resources, all features and tuning options are part of the formal specification.

- To provide a familiar, Kubernetes-native user experience. NGINX Ingress Resources follow the Kubernetes API style, making them easy to work with and to automate.

- To support RBAC and self‑service use cases in a scalable and predictable manner. An administrator or superuser can delegate part of the NGINX Ingress Resource configuration to other teams (in their namespaces), enabling those teams to take responsibility for their own applications and services, safely and securely.

We’re happy to announce that in Release 1.6.0, NGINX Ingress Resources are enabled by default and suitable for production use.

Improvements to NGINX Ingress Resources

At the core of the NGINX Ingress Resources is the VirtualServer resource. This is used to define:

- the host-level parameters used to publish one or more Kubernetes services for external access, such as the host header and TLS parameters;

- the upstreams (the Kubernetes services) that implement the externally-accessible services;

- the routes that match requests and assign them to the upstreams.

NGINX Ingress Controller can manage multiple VirtualServer resources at the same time. When it receives a request, it uses the host-level parameters to determine which VirtualServer resource handles that request.

Ingress Controller then processes the routes in the resource in order to determine the action for the request. Commonly, the action will select an upstream, and Ingress Controller will load-balance the request across the Kubernetes pods in that upstream.
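As a rough illustration of how these three parts fit together, here is a minimal VirtualServer manifest. The host name, TLS secret, and service names (cafe.example.com, cafe-secret, tea-svc, coffee-svc) are hypothetical placeholders. The field names reflect our reading of the VirtualServer specification for this release, so please verify them against the reference documentation for the version you run.

```yaml
# Minimal VirtualServer sketch; every name below is a placeholder.
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  # Host-level parameters: the Host header to match and the TLS secret to use.
  host: cafe.example.com
  tls:
    secret: cafe-secret
  # Upstreams: each one names a Kubernetes Service and a port.
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  - name: coffee
    service: coffee-svc
    port: 80
  # Routes: each path is matched against the request URI and selects an action.
  routes:
  - path: /tea
    action:
      pass: tea
  - path: /coffee
    action:
      pass: coffee
```

With a manifest like this, a request for cafe.example.com/tea would be load-balanced across the pods behind tea-svc.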

For RBAC purposes, a route and upstream may be delegated to a separate VirtualServerRoute resource, located in a different Kubernetes namespace. This grants fine-grained control of upstream definitions and routing rules to other application teams in a safe and secure manner.
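A delegation of this kind might look like the following sketch. The namespaces (default, tea-team) and resource names are assumptions made for illustration; the route and subroutes fields are taken from the VirtualServer and VirtualServerRoute specifications as we understand them.

```yaml
# VirtualServer owned by the administrator (the "default" namespace is assumed).
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
  namespace: default
spec:
  host: cafe.example.com
  routes:
  # Delegate everything under /tea to a VirtualServerRoute, referenced as namespace/name.
  - path: /tea
    route: tea-team/tea
---
# VirtualServerRoute owned by the application team in its own namespace.
apiVersion: k8s.nginx.org/v1
kind: VirtualServerRoute
metadata:
  name: tea
  namespace: tea-team
spec:
  # Must match the host of the VirtualServer that references this resource.
  host: cafe.example.com
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  subroutes:
  # Subroute paths stay under the path delegated by the VirtualServer.
  - path: /tea
    action:
      pass: tea
```

Kubernetes RBAC rules can then restrict the application team to VirtualServerRoute resources in the tea-team namespace, while the host and TLS settings remain under the administrator's control.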

Routes Configuration

Routing rules are contained in a routes configuration. This configuration contains a list of path matches, and the corresponding rules to handle requests that match the path.

Path matches can use either prefix matches or regular expressions (new in the 1.6.0 release).
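The two kinds of path match could be written as in the fragment below. This is a sketch only: the upstream names are hypothetical, and the leading "~" that marks a case-sensitive regular expression follows NGINX location syntax as we understand the VirtualServer specification to adopt it.

```yaml
routes:
# Prefix match: any URI that starts with /coffee.
- path: /coffee
  action:
    pass: coffee
# Regular expression match, marked by the leading "~" (quoted so YAML treats it as a string).
- path: '~^/tea/.*\.jpg'
  action:
    pass: tea-images
```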

The routing rules that select how a request is handled are particularly rich. Prior to the 1.6.0 release, the rule simply defined an upstream to handle the request.

New in the 1.6.0 release:

- Action: The rule may directly specify a single action (see below), which determines how the request is handled.

- Enhanced conditional matches: The Match configuration defines a set of complex matches, based on headers, cookies, query string parameters and NGINX variables, to select the appropriate action. With Release 1.6.0, it is now possible to define several independent sets of matches, where the first set that matches successfully “wins” and selects an action.

- Improved Traffic Splitting: The Splits configuration selects one of several different actions at random, with user-defined weights. This makes it possible to configure canary releases, or to automate blue-green deployments.

In 1.6.0, a conditional match can select a Splits configuration, making it possible to create complex rules such as:

- For test traffic identified with a ‘debug’ cookie, always forward traffic to upstream-v3.

- For all other production traffic, perform a canary test, routing 1% of traffic to upstream-v2 and the remainder to upstream-v1.
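The debug-cookie and canary rule described above could be expressed roughly as follows. The fragment assumes that upstreams named upstream-v1, upstream-v2, and upstream-v3 are defined elsewhere in the same resource, and the cookie value "1" is an assumption, since only the cookie name is given here. Field names follow our reading of the VirtualServer specification.

```yaml
routes:
- path: /
  # Conditional matches are evaluated first; the first set that matches wins.
  matches:
  - conditions:
    - cookie: debug
      value: "1"          # assumed value; only the cookie name is specified
    action:
      pass: upstream-v3
  # Default for all other traffic: a weighted random split (weights sum to 100).
  splits:
  - weight: 99
    action:
      pass: upstream-v1
  - weight: 1
    action:
      pass: upstream-v2
```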

Actions Configuration

Each routing rule ultimately selects an Action configuration. This is a terminal operation that defines how the request should be handled.

Prior to the 1.6.0 release, the only supported action was to select an Upstream Group and load-balance the request to the service instances in that group:

- Pass: Handle the request using the named Upstream Group. NGINX Ingress Controller uses the parameters in the Upstream configuration (see below) to determine how to load-balance the request and handle any errors from the selected service instance.

New in the 1.6.0 release:

- Redirect: Issue a user-defined redirect, telling the client to request a different URL. The URL may be fixed, or it may be constructed dynamically using parameters from the request.

- Respond: Send a response directly to the client. The response may be a fixed, static response, or it may be constructed dynamically using parameters from the request.
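The three action types might be configured as in the sketch below. The paths, target URL, and upstream name are hypothetical. Note that, to our understanding, the direct-response action is spelled return in the resource specification, and that variables in the redirect URL are written in curly braces; both details are worth checking against the reference documentation.

```yaml
routes:
# Pass: load-balance across the upstream named "coffee".
- path: /coffee
  action:
    pass: coffee
# Redirect: send the client to a URL built from the request scheme.
- path: /old-coffee
  action:
    redirect:
      url: "${scheme}://cafe.example.com/coffee"
      code: 301
# Direct response: answer without contacting any upstream.
- path: /status
  action:
    return:
      code: 200
      type: text/plain
      body: "OK\n"
```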

Upstreams Configuration

The Upstreams configuration defines one or more Upstream Groups, each identified by a name. Each upstream group references a Kubernetes Service. When an upstream group is selected by the routing rules and actions, NGINX Ingress Controller will load-balance the request across the pods that are members of that service.

In the 1.6.0 release, you can select a subset of the pods of a service using labels. Additionally, for NGINX Plus, you can use a service of the type ExternalName.

Also new in the 1.6.0 release, you will find much richer control over how NGINX forwards and load-balances requests. You’ll gain more control over load balancing, keepalive and timeout settings, request retries, buffering and performance tuning, as well as support for WebSocket and NGINX Plus’ slow-start capability. When using the Ingress Controller with NGINX Plus, you can also configure request queuing, upstream health checks and session persistence.
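To give a sense of these options, here is a sketch of a single upstream that uses several of them. The service name, label, and all numeric values are illustrative assumptions rather than recommendations, and the field names follow our reading of the Upstream specification.

```yaml
upstreams:
- name: tea
  service: tea-svc
  port: 80
  # Only pods of tea-svc that also carry this label are used.
  subselector:
    version: canary
  lb-method: least_conn
  keepalive: 32
  connect-timeout: 5s
  read-timeout: 30s
  send-timeout: 30s
  # Retry the request on another pod for these failure conditions.
  next-upstream: "error timeout"
  next-upstream-tries: 3
  buffering: true
  # The fields below are only available with NGINX Plus.
  slow-start: 20s
  queue:
    size: 10
    timeout: 60s
  healthCheck:
    enable: true
    path: /healthz
    interval: 20s
  sessionCookie:
    enable: true
    name: srv_id
    path: /
    expires: 1h
```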

OpenTracing

In Release 1.6.0, we’ve added support for OpenTracing in the Ingress Controller, using the open source module (nginx-opentracing) created by the OpenTracing community. You can find out more in the accompanying blog post.

Other Improvements

Release 1.6.0 brings a number of additional stability, performance and security improvements. These are described in detail in the 1.6.0 Release Notes.

Of particular note is the change to run the Ingress Controller container as a non-root user.

Running containers as non-root users is a best practice. Prior to the 1.6.0 release, the Ingress Controller daemon and the NGINX master processes ran as root, while the NGINX worker processes used the unprivileged ‘nginx’ user. In Release 1.6.0, all processes inside the Ingress Controller container run as the ‘nginx’ user. The securityContext field in the pod template in the installation manifests has been updated to add the NET_BIND_SERVICE capability, so that the NGINX master process can continue to bind to privileged ports (80 and 443).
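The relevant part of the pod template might look like the fragment below. Only the NET_BIND_SERVICE capability is confirmed by the description above; the UID of the ‘nginx’ user, the dropped capabilities, and the allowPrivilegeEscalation setting are assumptions based on our recollection of the installation manifests, so compare them with the manifests shipped in the release.

```yaml
# Fragment of the Ingress Controller container spec in the pod template.
securityContext:
  runAsUser: 101                  # assumed UID of the 'nginx' user in the image
  allowPrivilegeEscalation: true  # assumed; lets the added capability take effect
  capabilities:
    drop:
    - ALL
    add:
    - NET_BIND_SERVICE            # allows binding to ports 80 and 443 as non-root
```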

Updated Documentation

Finally, the NGINX Ingress Controller documentation has been moved from GitHub to a new location on docs.nginx.com. Improving the documentation is a work in progress, and we’d welcome any feedback through the GitHub project page.

To test the NGINX Ingress Controller for Kubernetes with NGINX Plus, start your free 30-day trial of NGINX Plus today or contact us to discuss your use cases.

To try NGINX Ingress Controller with NGINX Open Source, you can obtain the release source code, or take a pre-built container from Docker Hub.

The post Announcing NGINX Ingress Controller for Kubernetes Release 1.6.0 appeared first on NGINX.
