Announcing NGINX Ingress Controller Release 1.9.0

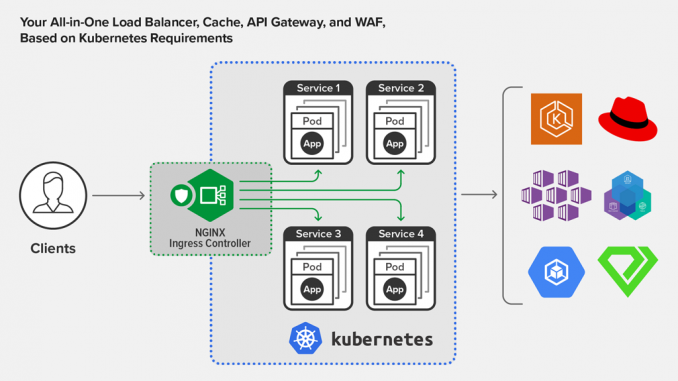

We are happy to announce release 1.9.0 of the NGINX Ingress Controller. This release builds upon the development of our supported solution for Ingress load balancing on Kubernetes platforms, including Red Hat OpenShift, Amazon Elastic Kubernetes Service (EKS), the Azure Kubernetes Service (AKS), Google Kubernetes Engine (GKE), IBM Cloud Private, Diamanti, and others.

With release 1.9.0, we continue our commitment to providing a flexible, powerful, and easy-to-use Ingress Controller, which can be configured with both Kubernetes Ingress resources and NGINX Ingress resources:

- Kubernetes Ingress resources provide maximum compatibility across Ingress Controller implementations, and can be extended using annotations and custom templates to generate sophisticated configuration.

- NGINX Ingress resources provide an NGINX‑specific configuration schema, which is richer and safer than customizing the generic Kubernetes Ingress resources. In release 1.8.0 and later, NGINX Ingress resources can be extended with snippets and custom templates, so you can continue using NGINX features that are not yet supported natively with NGINX Ingress resources, such as caching and OIDC authentication.

Release 1.9.0 brings the following major enhancements and improvements:

- New WAF capabilities – NGINX App Protect now supports additional WAF policy definitions, including JSON schema validations, user‑defined URLs, and user‑defined parameters. For information about integrating NGINX App Protect with the NGINX Ingress Controller, see our blog.

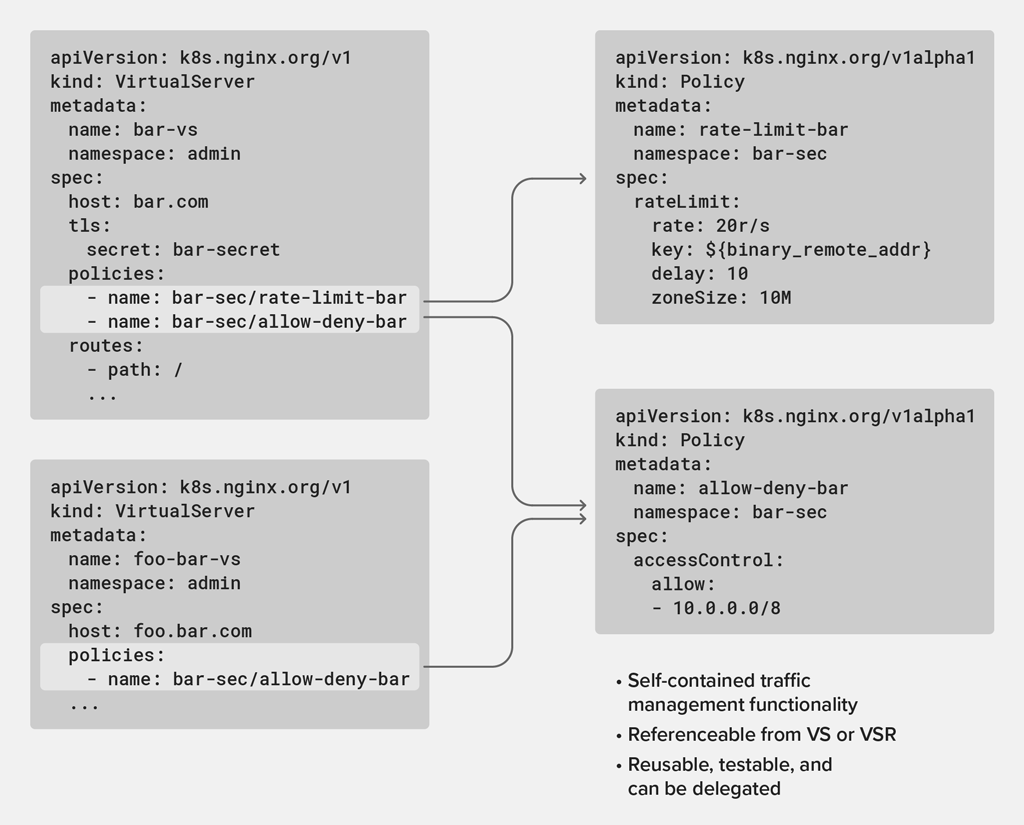

- More policies – Policies are defined as a collection of rules in a separate Kubernetes object that can be applied to the Ingress load balancer. Responsibility for policies can be delegated to different teams, and the policies are assembled when they are applied. Release 1.9.0 introduces three additional policies: JWT validation, rate limiting, and mTLS authentication.

- Improved visibility – This release enriches existing Ingress Controller metrics and adds new ones, including upstream‑specific metrics, reload reason, request latency and distribution, and more. The Grafana dashboard is extended to leverage the metrics, providing additional visualization capabilities.

- Service mesh integration – The NGINX Ingress Controller can seamlessly integrate with the newly announced NGINX Service Mesh to securely deliver applications running in the mesh to external services.

- Other new features:

  - Graceful handling of host collisions

  - Kubernetes Ingress v1 API updates

  - Support for the NGINX Ingress Operator for OpenShift in standard Kubernetes environments

What Is the NGINX Ingress Controller?

The NGINX Ingress Controller for Kubernetes is a daemon that runs alongside NGINX Open Source or NGINX Plus instances in a Kubernetes environment. The daemon monitors Kubernetes Ingress resources and NGINX Ingress resources to discover requests for services that require Ingress load balancing. The daemon then automatically configures NGINX or NGINX Plus to route and load balance traffic to these services.

Multiple NGINX Ingress controller implementations are available. The official NGINX implementation is high‑performance, production‑ready, and suitable for long‑term deployment. We focus on providing stability across releases, with features that can be deployed at enterprise scale. We provide full support to NGINX Plus subscribers at no additional cost, and NGINX Open Source users benefit from our focus on stability and supportability.

What’s New in NGINX Ingress Controller 1.9.0?

Three New Policies

In release 1.8.0 of the NGINX Ingress Controller, we introduced policies as a separate Kubernetes object for abstracting traffic management functionality. Policies can be defined and applied in multiple places by different teams, which has many advantages: encapsulation and simpler configs, multi‑tenancy, reusability, and reliability. You can attach policies to an application simply by referencing the name of the policy in the corresponding VirtualServer (VS) or VirtualServerRoute (VSR) object. This gives you the flexibility to apply policies, even to granular areas of your application.

(As a refresher, the VS object defines your Ingress load balancing configuration for a domain name. You can spread a VS configuration across multiple VSRs, each of which defines subroutes.)

We are adding three new policies in this release, all of which complement the IP address‑based access control list (ACL) policy introduced in release 1.8.0. The new JSON Web Token (JWT) validation and rate‑limiting policies focus on protecting both infrastructure and application resources within the Kubernetes perimeter, so that they are neither abused by illegitimate users nor overloaded by legitimate users. mTLS policies enable end-to-end encryption between services while preserving payload visibility so that the Ingress Controller can provide routing and security for application requests and responses.

JSON Web Token Validation Policy

Security teams may want to ensure that only users authorized by a central identity provider can access an application. They can accomplish this by attaching a JWT policy to VS and VSR resources.

The first step is to create a Kubernetes Policy object defining the JWT policy. The following sample policy specifies that JWTs must be presented in the token HTTP header and validated against the JSON Web Key (JWK) stored in a Kubernetes Secret (jwk-secret), to determine whether the token presented by the client was signed by the central identity provider.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: jwt-policy
spec:
  jwt:
    realm: MyProductAPI
    secret: jwk-secret
    token: $http_token
```

Once the Policy definition is created, you can attach it to a VS or VSR resource by referencing the policy name under the policies field.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: webapp
spec:
  host: webapp.example.com
  policies:
  - name: jwt-policy
  upstreams:
  - name: webapp
    service: webapp-svc
    port: 80
  routes:
  - path: /
    action:
      pass: webapp
```

Applying this VS object provisions JWT authentication: the Ingress Controller validates the token presented by the client and determines whether the client is granted access to the webapp upstream. This capability is useful not only for authentication but also for enabling single sign‑on (SSO), which allows users to log in just once with a single set of credentials that are then accepted by multiple applications. It can also be particularly useful in Kubernetes environments, where applications are written in languages and frameworks that make it difficult to comply with authentication standards dictated by the security organization. By provisioning authentication in the Ingress Controller, you also relieve developers from the burden of implementing authentication or parsing JWTs in their applications.
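The jwt-policy above refers to a Secret named jwk-secret. As we understand the Policy documentation, that Secret must live in the same namespace as the Policy, must be of type nginx.org/jwk, and must store the JSON Web Key set under the key jwk; JWT validation is also an NGINX Plus feature. The following is a minimal sketch under those assumptions, with a placeholder instead of real key material:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: jwk-secret          # must match spec.jwt.secret in the Policy
type: nginx.org/jwk         # Secret type expected for JWKs (per our reading of the docs)
data:
  # Base64-encoded JWK set from the identity provider; placeholder value shown.
  jwk: <base64-encoded JWK set>
```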

It is worth noting that the default behavior of the JWT policy is to pass the JWT token to the upstream, which is useful in zero‑trust environments where every service is required to authenticate the requester.

You can enable applications to access specific claims in a JWT without requiring them to parse the JWT, by leveraging the header modification capability – introduced in release 1.8.0 for VSs and VSRs – to forward the claim to the upstream as a request header. This ensures that applications don’t spend more computational resources than necessary on unauthenticated users, something that’s particularly important in shared compute platforms such as Kubernetes.
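As a sketch of the header approach just described, the VirtualServer below forwards the token's sub claim to the webapp upstream in a request header. It assumes that the NGINX Plus $jwt_claim_sub variable is accepted in VS header values (check the list of supported variables in the VirtualServer documentation for your release), and the X-User-Id header name is hypothetical. The route uses action.proxy rather than action.pass because header modification is configured under proxy.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: webapp
spec:
  host: webapp.example.com
  policies:
  - name: jwt-policy            # JWT is validated before the request is proxied
  upstreams:
  - name: webapp
    service: webapp-svc
    port: 80
  routes:
  - path: /
    action:
      proxy:
        upstream: webapp
        requestHeaders:
          set:
          # Hypothetical header name; assumes $jwt_claim_sub (NGINX Plus)
          # is a supported variable in VS header values.
          - name: X-User-Id
            value: ${jwt_claim_sub}
```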

Rate-Limiting Policy

Security and reliability teams often want to protect upstream services from DDoS and password guessing attacks by limiting the incoming request rate to a value typical for genuine users, with an option to queue requests before they are sent to the application upstream. Additionally, they may want to set a status code to return in response to rejected and overloaded requests.
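The option to queue requests maps, as far as we know, to the burst field of the rateLimit policy, which corresponds to the burst parameter of the NGINX limit_req directive: excess requests up to the burst size are delayed so that they conform to the configured rate, and requests beyond it are rejected with rejectCode. A minimal sketch, with a hypothetical policy name and an illustrative burst size:

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: rate-limit-policy-burst   # hypothetical name
spec:
  rateLimit:
    rate: 10r/s
    key: ${binary_remote_addr}    # one limit per client IP address
    zoneSize: 10M
    # Queue up to 20 excess requests per client and release them at the
    # configured rate; requests beyond the queue receive rejectCode.
    burst: 20
    rejectCode: 503
```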

There are many ways of identifying the users subject to rate limits, one of which is by means of the source IP address (using the $binary_remote_addr embedded variable). In the following policy definition, we are limiting each unique IP address to 10 requests per second and rejecting excessive requests with the 503 (Service Unavailable) status code. To learn about all the available rate‑limiting options, please refer to our rate‑limiting documentation.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: rate-limit-policy
spec:
  rateLimit:
    rate: 10r/s
    zoneSize: 10M
    key: ${binary_remote_addr}
    rejectCode: 503
```

When you apply a rate‑limiting policy to a VS resource, you can combine it with the errorPages field of a route to respond with a custom error page or redirect users to another cluster, effectively adapting your applications when resources are overloaded.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: webapp
spec:
  host: webapp.example.com
  policies:
  - name: rate-limit-policy
  upstreams:
  - name: webapp
    service: webapp-svc
    port: 80
  routes:
  - path: /
    errorPages:
    - codes: [503]
      redirect:
        code: 301
        url: https://cluster-domain-backup.com
    action:
      pass: webapp
```

mTLS Policies

Security teams with strict requirements need to ensure that the Ingress Controller can validate certificates presented by the client and the upstream service against a configurable Certificate Authority (CA). Once both parties (client and server) authenticate each other, an encrypted connection is established between them. Because Kubernetes is a multi‑tenant platform, administrators can provision this extra layer of security to ensure that only authenticated clients are proxied to identifiable upstream services before any business logic runs.

We are introducing two policies which together implement mutual TLS (mTLS): an ingress mTLS policy and an egress mTLS policy. Ingress mTLS validates certificates presented by the client, and egress mTLS validates certificates presented by the upstream service. The policies can be configured with different CAs, and attached independently to a VS or VSR resource for situations where only client‑ or server‑side authentication is required.
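Both policies rely on Kubernetes Secrets. As we understand the Policy documentation, the Secret referenced by clientCertSecret (and by trustedCertSecret in the egress policy) must be of type nginx.org/ca with the CA certificate stored under the key ca.crt, while tlsSecret refers to a standard kubernetes.io/tls Secret. Here is a sketch of the CA Secret for the ingress mTLS policy in the following example, using that example's placeholder name secret-name and placeholder certificate data:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: secret-name        # placeholder name referenced by clientCertSecret
type: nginx.org/ca         # CA Secret type (per our reading of the docs)
data:
  # Base64-encoded PEM CA certificate used to verify client certificates.
  ca.crt: <base64-encoded CA certificate>
```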

Below is an example showing how to apply both policies (ingress and egress mTLS) to a VS resource. We define an ingress mTLS policy that validates client certificates against the CA stored in the Kubernetes Secret referenced in the clientCertSecret field, and an egress mTLS policy that specifies the certificate the Ingress Controller presents to the upstream (tlsSecret), the trusted CA used to validate server certificates (trustedCertSecret), and the TLS protocol version to use.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: ingress-mtls-policy-cafe
spec:
  ingressMTLS:
    clientCertSecret: secret-name
---
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: egress-mtls-policy-cafe
spec:
  egressMTLS:
    tlsSecret: tls-secret-name
    verifyServer: true
    trustedCertSecret: egress-trusted-ca-secret
    protocols: TLSv1.3
```

We can then attach both policies to the VS resource to configure mTLS on the Ingress Controller.

```yaml
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  host: cafe.example.com
  ...
  policies:
  - name: ingress-mtls-policy-cafe
  - name: egress-mtls-policy-cafe
```

In some circumstances, you might want to provision ingress mTLS at the application level instead of at the Ingress Controller level. To do this, you can set the verifyClient field of the ingress mTLS policy to optional_no_ca and pass the certificate to the application as a header, by setting a header under the requestHeaders field of the VS or VSR route to the $ssl_client_escaped_cert variable, which contains the URL‑encoded client certificate in PEM format. For more details, see the documentation.
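A sketch of that application-level approach follows. The policy name, the TLS Secret cafe-secret, and the cafe-svc Service are hypothetical. The ingress mTLS policy requests a client certificate without verifying it against a CA, and the VS route forwards the certificate in an ssl-client-cert request header. Because ingress mTLS requires the VS to terminate TLS, the sketch includes a tls section.

```yaml
apiVersion: k8s.nginx.org/v1alpha1
kind: Policy
metadata:
  name: ingress-mtls-policy-app     # hypothetical name
spec:
  ingressMTLS:
    clientCertSecret: secret-name
    # Request a client certificate, but leave CA verification to the application.
    verifyClient: "optional_no_ca"
---
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  host: cafe.example.com
  tls:
    secret: cafe-secret             # hypothetical TLS Secret for cafe.example.com
  policies:
  - name: ingress-mtls-policy-app
  upstreams:
  - name: cafe
    service: cafe-svc
    port: 80
  routes:
  - path: /
    action:
      proxy:
        upstream: cafe
        requestHeaders:
          set:
          # Forward the URL-encoded PEM client certificate to the application.
          - name: ssl-client-cert
            value: ${ssl_client_escaped_cert}
```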

Improved Visibility

Release 1.9.0 introduces several new Ingress Controller metrics and enriches existing ones, all of which help you understand the behavior of your applications and users. To better convey how metrics correlate with each other, we are also adding new Grafana dashboards.

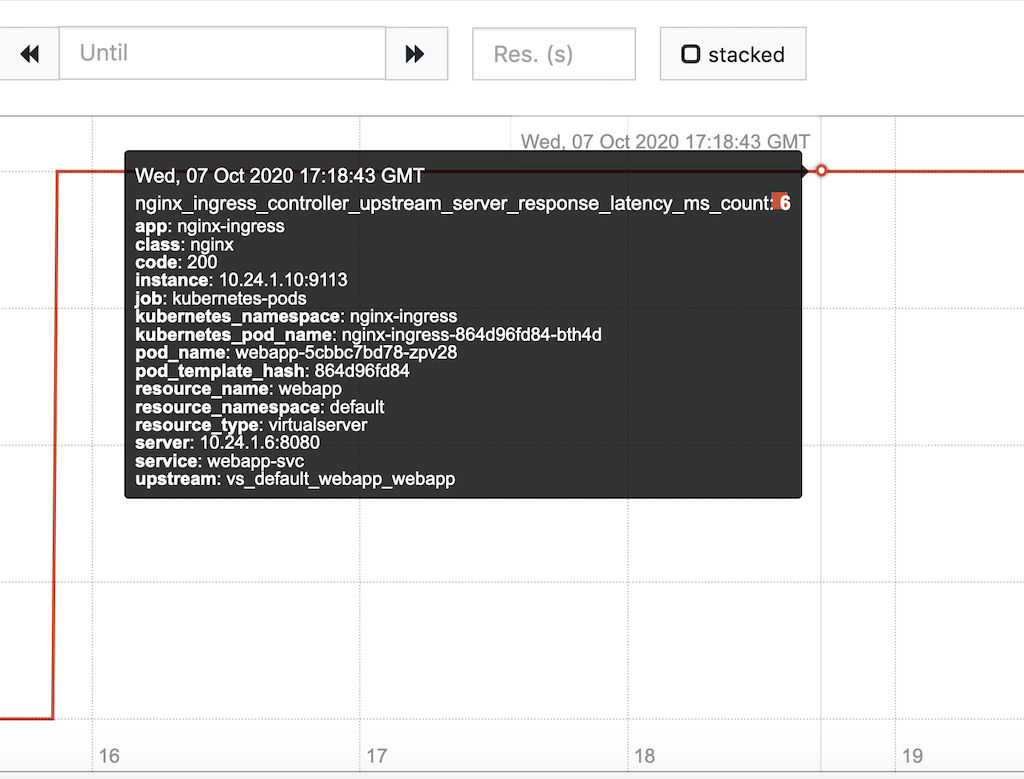

Kubernetes Context on Prometheus Metrics

Upstream‑specific Ingress Controller metrics exported to Prometheus are now labeled with the corresponding Kubernetes service name, Pod, and Ingress resource name. This can simplify troubleshooting applications by correlating performance with Kubernetes objects. For instance, discovering the Pod corresponding to an underperforming upstream group (in terms of requests per second) can help you trace the root cause or take corrective action and remove the Pod.

Here we show an example of these labels on the nginx_ingress_controller_upstream_server_response_latency_ms_count metric in Prometheus.

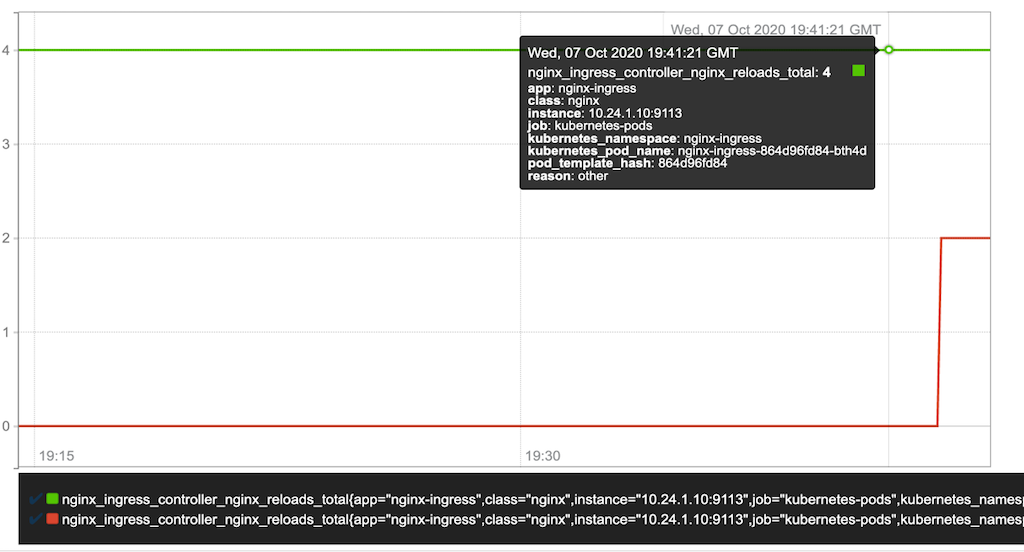

Reload Reason

With the NGINX Plus Ingress Controller, backend service endpoints can be changed without requiring a reload of the NGINX Plus configuration. With the NGINX Open Source Ingress Controller, endpoint changes require a configuration reload, so we are now reporting the reason behind reloads and keeping track of reloads over time. The nginx_ingress_controller_nginx_reloads_total metric reports the number of reloads due to endpoint changes and the number due to other reasons. You can use this information to compute the percentage of reloads due to endpoint changes, which helps you determine whether performance issues might be caused by the configuration reloads triggered by frequent endpoint changes in a highly dynamic Kubernetes environment.

The following chart shows reloads broken down by reason. The red line represents the number of configuration reloads triggered by endpoint changes and the green line represents the number of reloads for other reasons.

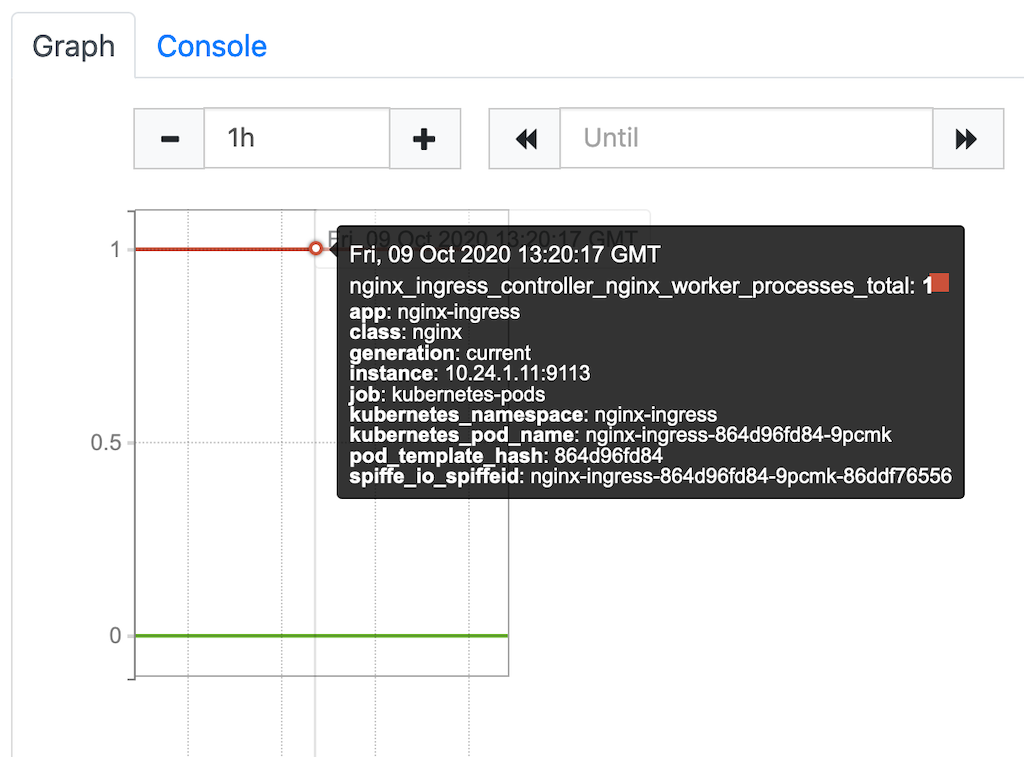
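To compute the percentage of reloads caused by endpoint changes, a Prometheus recording rule along the following lines could be used. It assumes that nginx_ingress_controller_nginx_reloads_total carries a reason label whose values are endpoints and other; the group and rule names and the one-hour window are illustrative choices.

```yaml
groups:
- name: nginx-ingress-reloads                     # hypothetical group name
  rules:
  # Fraction (0 to 1) of NGINX reloads in the last hour caused by endpoint changes.
  - record: nginx_ingress:reloads_endpoints:ratio_1h   # hypothetical rule name
    expr: |
      sum(increase(nginx_ingress_controller_nginx_reloads_total{reason="endpoints"}[1h]))
      /
      sum(increase(nginx_ingress_controller_nginx_reloads_total[1h]))
```

Using increase() over a window rather than the raw counters keeps the ratio focused on recent behavior; note that the expression yields NaN or no value when no reloads occurred in the window.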

Worker Processes Details

Whenever a configuration reload occurs on the Ingress Controller, a new set of NGINX workers is spawned, and the previous workers continue running until all the connections bound to them are terminated. While this kind of graceful reload doesn’t disrupt application traffic, it also means the previous worker processes continue consuming resources until they exit. Frequent configuration reloads can therefore cause resource starvation and downtime.

To help you manage this limitation efficiently, we are including a built‑in metric indicating how many worker processes are running at any given time. With this knowledge you can take appropriate measures like stopping configuration changes or rebuilding the Ingress Controller from scratch.
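The worker-process count is exposed as nginx_ingress_controller_nginx_worker_processes_total. Assuming it carries a generation label with the values current and old, a Prometheus alerting rule such as the following sketch could flag old workers that linger after reloads. The alert name, the 30-minute duration, and the severity label are arbitrary illustrative choices.

```yaml
groups:
- name: nginx-ingress-workers                 # hypothetical group name
  rules:
  - alert: NginxIngressOldWorkersLingering    # hypothetical alert name
    # Fires when old-generation workers have been present for 30 consecutive minutes.
    expr: sum(nginx_ingress_controller_nginx_worker_processes_total{generation="old"}) > 0
    for: 30m
    labels:
      severity: warning
    annotations:
      summary: "Old NGINX worker processes are still running after a reload"
```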

Below is a Prometheus plot of the nginx_ingress_controller_nginx_worker_processes_total metric. The red line represents the number of current worker processes, and the green line represents the number of old workers still running after a reload.

Request Latency and Distribution

The Ingress Controller now reports the latency of requests for every upstream application. The metric is a histogram that groups latencies into buckets, giving you a better sense of the performance and user experience of applications.

The metric is referenced as nginx_ingress_controller_upstream_server_response_latency_ms_bucket in Prometheus.
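Because the metric is a Prometheus histogram, percentiles can be derived with histogram_quantile(). The recording rule below is a sketch that computes the 95th-percentile upstream latency, in milliseconds, over five-minute windows. Grouping by a service label assumes the Kubernetes service name is exposed under that label name, and the group and rule names are hypothetical.

```yaml
groups:
- name: nginx-ingress-latency                          # hypothetical group name
  rules:
  # p95 upstream response latency (ms) per Kubernetes service, over 5-minute windows.
  - record: nginx_ingress:upstream_latency_ms:p95_5m   # hypothetical rule name
    expr: |
      histogram_quantile(
        0.95,
        sum by (le, service) (
          rate(nginx_ingress_controller_upstream_server_response_latency_ms_bucket[5m])
        )
      )
```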

Note that for the Ingress Controller to expose this metric to Prometheus, the -enable-latency-metrics flag must be included on the command line. For details, see the documentation.
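As a sketch, the flags might be added to the args of the Ingress Controller container in its Deployment or DaemonSet manifest. This assumes the flag is spelled -enable-latency-metrics and that, as we understand the command-line reference, it requires Prometheus metrics to be enabled with -enable-prometheus-metrics. The container name is hypothetical and all other fields are omitted.

```yaml
# Fragment of a Deployment or DaemonSet pod template; other fields omitted.
spec:
  containers:
  - name: nginx-ingress            # hypothetical container name
    args:
    - -enable-prometheus-metrics   # expose metrics to Prometheus
    - -enable-latency-metrics      # add upstream latency histograms
```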

Grafana Dashboards

Release 1.9.0 enhances visualization capabilities with several Grafana dashboards leveraging all the available metrics. The display also identifies the Kubernetes objects that correlate with the metrics.

The dashboards not only provide insight into your applications, but also let you quickly pinpoint undesirable behavior, which simplifies troubleshooting and allows you to rapidly take adaptive measures.

Service Mesh Integration

DevOps teams need an Ingress Controller to connect applications in a service mesh to external services outside the cluster. The Ingress Controller can seamlessly integrate with the newly announced NGINX Service Mesh (NSM), specifically for ingress and egress mTLS capabilities with external services.

For ingress mTLS, the Ingress Controller participates in the mesh's mTLS by receiving certificates and keys from the mesh's CA. For egress mTLS, you can configure the Ingress Controller to act as the egress endpoint of the mesh so that services that are controlled by NSM can communicate securely with external services that are not part of the mesh.

Other New Features

Graceful Handling of Host Collisions

It is possible to create standard Kubernetes Ingress resources and NGINX VirtualServer resources for the same host. Previously, behavior was not deterministic in this scenario: for the same input manifests referencing the same host, you could end up with different NGINX data‑plane configurations depending on circumstances outside the administrator’s control. Worse, no error or warning was reported when a conflict occurred.

Release 1.9.0 gracefully handles host collisions by choosing the “oldest” resource if there are multiple resources contending for the same host. In other words, if a manifest is submitted for a host that is already claimed by another resource, the newer resource is rejected, and the conflict is reported in the Events and Status fields of the rejected resource (shown, for example, in the output of the kubectl describe vs command).
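To illustrate, suppose the two VirtualServers below (names and Services hypothetical) are created one after the other for the same host. Under the behavior described above, the first one keeps cafe.example.com, and the second one is rejected, with the conflict visible in kubectl describe vs cafe-duplicate.

```yaml
# Created first: this VirtualServer keeps cafe.example.com.
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe
spec:
  host: cafe.example.com
  upstreams:
  - name: tea
    service: tea-svc
    port: 80
  routes:
  - path: /
    action:
      pass: tea
---
# Created later for the same host: rejected, with the conflict reported
# in its Events and Status fields.
apiVersion: k8s.nginx.org/v1
kind: VirtualServer
metadata:
  name: cafe-duplicate
spec:
  host: cafe.example.com
  upstreams:
  - name: coffee
    service: coffee-svc
    port: 80
  routes:
  - path: /
    action:
      pass: coffee
```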

Kubernetes Ingress v1 API Updates

Earlier this year, Kubernetes 1.18 introduced changes to the Ingress API, and we have made the corresponding changes. We now support wildcard hosts in IngressRule as well as Exact and Prefix path matching under the new pathType field. We have also adopted the IngressClass resource and the ingressClassName field on Ingress objects, and made corresponding changes to CLI arguments to accommodate this. For more details, see the documentation. As always, we are committed to backward compatibility, so we still support the previous configuration semantics.
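The following sketch shows the new fields together: an IngressClass for the NGINX Ingress Controller, and an Ingress that selects it through ingressClassName, uses a wildcard host, and declares Prefix path matching. It is written against networking.k8s.io/v1beta1, the API version in which Kubernetes 1.18 introduced these fields (it was removed in Kubernetes 1.22). The controller value nginx.org/ingress-controller reflects our understanding of the documentation, and the resource and Service names are hypothetical.

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: IngressClass
metadata:
  name: nginx
spec:
  controller: nginx.org/ingress-controller   # assumed controller identifier
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: cafe-ingress            # hypothetical name
spec:
  ingressClassName: nginx       # replaces the kubernetes.io/ingress.class annotation
  rules:
  - host: "*.example.com"       # wildcard host
    http:
      paths:
      - path: /tea
        pathType: Prefix        # matches /tea and /tea/...
        backend:
          serviceName: tea-svc
          servicePort: 80
```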

Operator Support in Standard Kubernetes Environments

Earlier this year we announced the NGINX Ingress Operator for OpenShift environments. The Operator is now supported in standard Kubernetes environments. For deployment instructions, see our GitHub repository.

Resources

For the complete changelog for release 1.9.0, see the Release Notes.

To try out the NGINX Ingress Controller for Kubernetes with NGINX Plus, start your free 30-day trial today or contact us to discuss your use cases.

To try the NGINX Ingress Controller with NGINX Open Source, you can obtain the release source code, or download a prebuilt container from DockerHub.

The post Announcing NGINX Ingress Controller Release 1.9.0 appeared first on NGINX.
