Choosing the Right API Gateway Pattern for Effective API Delivery

The ProgrammableWeb directory of APIs has been tracking externally available APIs since 2005, and the count passed the 22,000 mark in June 2019. The number of published APIs increased by nearly 60% in the four years leading up to that date, a sign that the API economy is not only growing strongly but is here to stay.

APIs are core to many businesses, delivering enormous value and revenue. Now, more than ever, IT organizations need a way to manage and control access to their APIs if they want to stay ahead of the curve.

The Importance of API Management

As organizations have started to transition away from monolithic applications towards microservices‑based apps, they have also realized that APIs not only facilitate efficient digital communication, but can also be a source of new revenue. For example, Salesforce.com generates more than 50% of its annual revenue through its APIs, while Expedia.com depends on them for almost 90%.

With such high stakes, companies need to ensure their APIs are high‑performance, controlled, and secure – they simply can’t afford issues with their API structure.

API Gateway ≠ API Management

Although the terms API gateway and API management are sometimes used interchangeably, it is important to note that there is a difference.

An API gateway is the gatekeeper for access to APIs, securing and managing traffic between API consumers and the applications that expose those APIs. The API gateway typically handles authentication and authorization, request routing to backends, rate limiting to avoid overloading systems and protect against DDoS attacks, offloading SSL/TLS traffic to improve performance, and handling errors or exceptions.

In contrast, API management refers to the process of managing APIs across their full lifecycle, including defining and publishing them, monitoring their performance, and analyzing usage patterns to maximize business value.
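To make the gateway side of that distinction concrete, here is a minimal Python sketch of three of the responsibilities listed above: authentication, rate limiting, and request routing. It is an illustration only, not how NGINX implements them. The route table, API keys, and the names `TokenBucket` and `handle` are hypothetical, and the token-bucket algorithm is one common way to rate limit, chosen here for brevity.

```python
class TokenBucket:
    """Allows `rate` requests per second, with bursts of up to `capacity`."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity   # start with a full bucket
        self.last = 0.0          # timestamp of the previous check

    def allow(self, now):
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# Hypothetical configuration, for illustration only.
ROUTES = {"/orders": "orders-backend", "/users": "users-backend"}
API_KEYS = {"key-123": "client-a"}


def handle(path, api_key, bucket, now):
    """Return (status, detail) for one request passing through the gateway."""
    if api_key not in API_KEYS:            # authentication
        return 401, "unauthorized"
    if not bucket.allow(now):              # rate limiting
        return 429, "rate limit exceeded"
    for prefix, backend in ROUTES.items(): # request routing by path prefix
        if path.startswith(prefix):
            return 200, backend
    return 404, "no route"
```

The clock is passed in as `now` rather than read from the system so that the behavior is easy to reason about: with `TokenBucket(rate=1.0, capacity=2.0)`, two requests at the same instant pass and a third is rejected until time advances.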

Common API Gateway Deployment Patterns

So, how do you effectively deliver APIs?

As the world’s number one API gateway, NGINX delivers over half of the API traffic on today’s web. While there is not technically a “correct” pattern, here are the most common API gateway patterns we typically encounter.

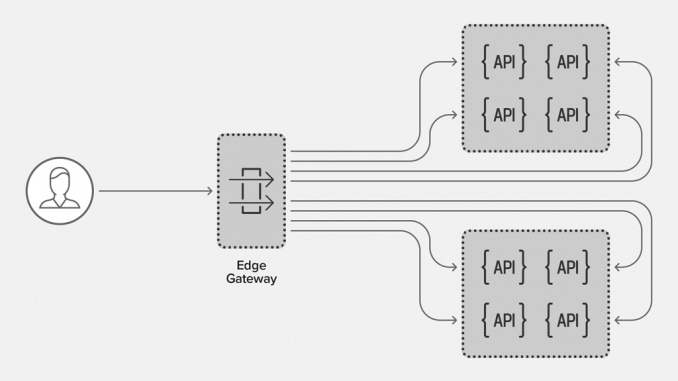

Centralized, Edge Gateway

This is the most common API gateway pattern and follows a traditional application delivery controller (ADC) architecture. In this pattern, the gateway handles almost everything, including:

- SSL/TLS termination

- Authentication

- Authorization

- Request routing

- Rate limiting

- Request/response manipulation

- Facade routing

This approach is a good fit when publicly exposing application services from monolithic applications with centralized governance. However, it is not well‑suited for microservices architectures or situations that require frequent changes – traditional edge gateways are optimized for north‑south traffic and are not able to efficiently handle the higher volume of east‑west traffic generated in distributed microservices environments.
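Facade routing and request manipulation are the two items in the list above that are easiest to show in a few lines. The sketch below assumes a hypothetical monolith with legacy endpoint paths. The `FACADE` table, the `X-Client-Id` header, and the function name `facade_rewrite` are all invented for illustration.

```python
# Hypothetical mapping from the public API to legacy monolith endpoints.
FACADE = {
    ("GET", "/v1/customers"): ("GET", "/legacy/cust_list.do"),
    ("POST", "/v1/customers"): ("POST", "/legacy/cust_create.do"),
}


def facade_rewrite(method, path, headers, client_id):
    """Translate a public request into the request sent to the backend.

    Returns (method, path, headers), or None if the public API has no such
    endpoint. Assumes the gateway has already authenticated the caller.
    """
    target = FACADE.get((method, path))
    if target is None:
        return None
    # Request manipulation: drop the client's credential and pass the
    # authenticated identity to the backend in a header instead.
    out = {k: v for k, v in headers.items() if k.lower() != "authorization"}
    out["X-Client-Id"] = client_id
    return target[0], target[1], out
```

Because every translation rule lives in one table at the edge, governance is centralized, which is also why frequent per-service changes become a bottleneck in this pattern.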

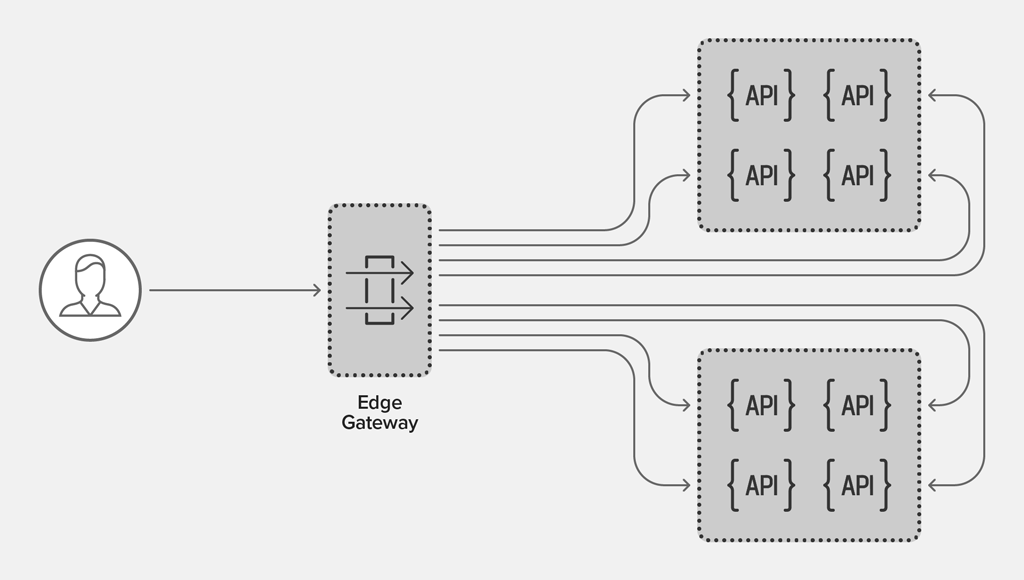

Two-Tier Gateway

As services are slowly becoming smaller and more distributed, many organizations are moving towards a two‑tier (multi‑layer) gateway pattern that introduces a separation of roles for multiple gateways.

This approach utilizes a security gateway as the first layer to manage:

- SSL/TLS termination

- Authentication

- Centralized logging of connections and requests

- Tracing injection

A routing gateway acts as the second layer, handling:

- Authorization

- Service discovery

- Load balancing

A two‑tier gateway pattern works best in situations requiring flexibility for dispersed services and independent scaling of functions. However, this approach can introduce issues when there are multiple teams managing different environments and applications, as it does not support distributed control.
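The split of responsibilities between the two tiers can be sketched as two small classes chained together. This is a simplified model under stated assumptions: requests are plain dictionaries, the token table, service registry, and permission map are hypothetical in-memory stand-ins, and round-robin is just one possible load-balancing policy.

```python
import itertools
import uuid


class RoutingGateway:
    """Second tier: authorization, service discovery, load balancing."""

    def __init__(self, registry, permissions):
        self.registry = registry        # service name -> non-empty list of instances
        self.permissions = permissions  # user -> set of services the user may call
        self._cursors = {name: itertools.cycle(inst) for name, inst in registry.items()}

    def handle(self, request):
        service = request["path"].strip("/").split("/")[0]
        if service not in self.registry:                       # service discovery
            return {"status": 404}
        if service not in self.permissions.get(request["user"], set()):
            return {"status": 403}                             # authorization
        upstream = next(self._cursors[service])                # round-robin
        return {"status": 200, "upstream": upstream, "trace_id": request["trace_id"]}


class SecurityGateway:
    """First tier: authentication, central logging, tracing injection."""

    def __init__(self, tokens, next_tier):
        self.tokens = tokens            # bearer token -> user (hypothetical store)
        self.next_tier = next_tier
        self.access_log = []

    def handle(self, request):
        trace_id = request.get("trace_id") or uuid.uuid4().hex  # tracing injection
        self.access_log.append((trace_id, request["path"]))     # central logging
        user = self.tokens.get(request.get("token"))
        if user is None:                                         # authentication
            return {"status": 401}
        return self.next_tier.handle(dict(request, user=user, trace_id=trace_id))
```

Each tier can be scaled or replaced independently, but note that both are still single, shared control points, which is the limitation for multi-team environments described above.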

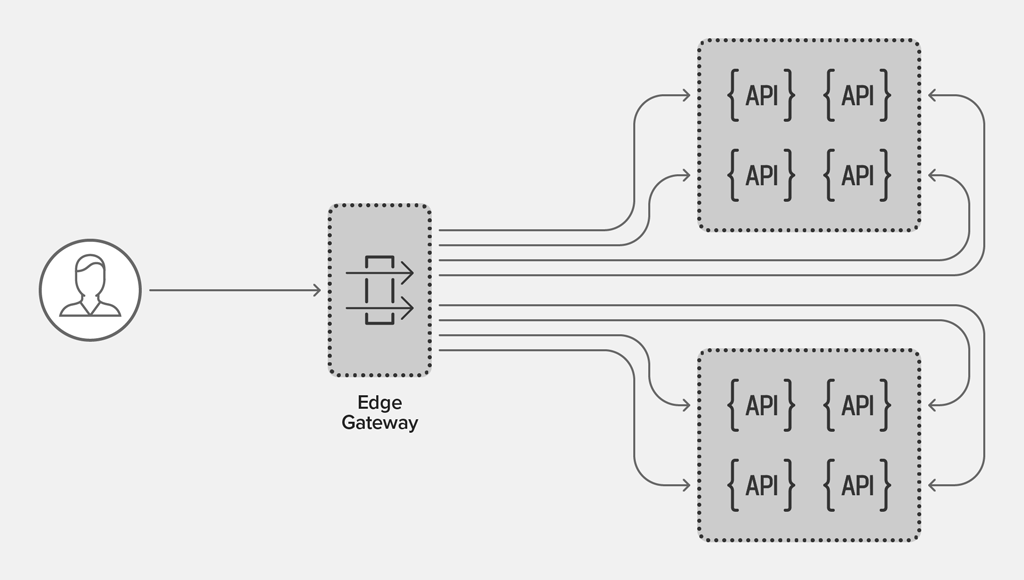

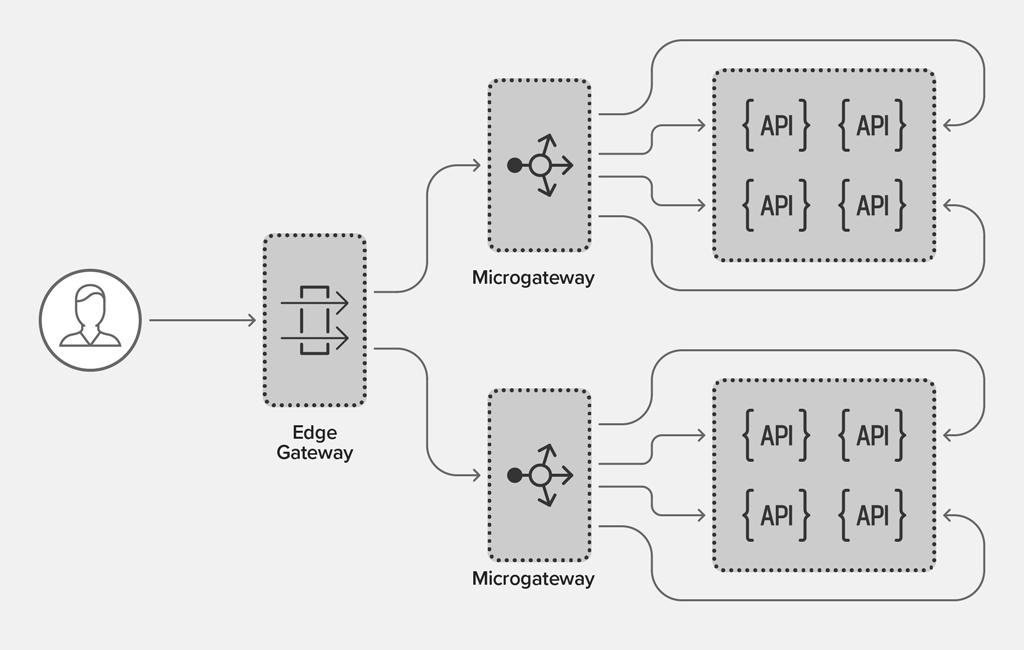

Microgateway

The microgateway pattern builds on the two‑tier approach by providing a dedicated gateway to individual DevOps teams, which not only helps them manage traffic between services (east‑west traffic) but also lets them make changes without impacting other applications.

This pattern enables the following capabilities at the edge:

- SSL/TLS termination

- Routing

- Rate limiting

Organizations then add individual microgateways for each service that manage:

- Load balancing

- Service discovery

- Authentication per API

Although microgateways are designed to work alongside microservices, they can also make it difficult to achieve consistency and control. Each individual microgateway may have a different set of policies and security rules, and monitoring data and metrics must be aggregated from multiple services. A microgateway can easily become the opposite of “micro”, requiring a full configuration based on the business purposes of an API or set of APIs.
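One way to keep an eye on that consistency problem is to compare the policies of all microgateways and report where they diverge. The sketch below is a hypothetical audit helper, not a feature of any particular product. It assumes each team's gateway configuration can be exported as a flat dictionary of hashable values, and the policy names are invented.

```python
def policy_drift(gateways):
    """Report policies whose values differ between microgateways.

    `gateways` maps a gateway name to its policy dict. The result maps each
    inconsistent policy name to {value: [gateways using that value]}; a
    gateway that does not set the policy is listed under None.
    """
    policy_names = set().union(*(cfg.keys() for cfg in gateways.values()))
    drift = {}
    for policy in sorted(policy_names):
        by_value = {}
        for name in sorted(gateways):
            by_value.setdefault(gateways[name].get(policy), []).append(name)
        if len(by_value) > 1:   # more than one distinct value means drift
            drift[policy] = by_value
    return drift


# Example with three hypothetical team gateways.
gateways = {
    "team-a": {"tls_min_version": "1.2", "rate_limit_rps": 100},
    "team-b": {"tls_min_version": "1.3", "rate_limit_rps": 100},
    "team-c": {"tls_min_version": "1.2"},
}
report = policy_drift(gateways)
```

Here `report` flags both policies: `team-b` uses a different TLS minimum, and `team-c` sets no rate limit at all.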

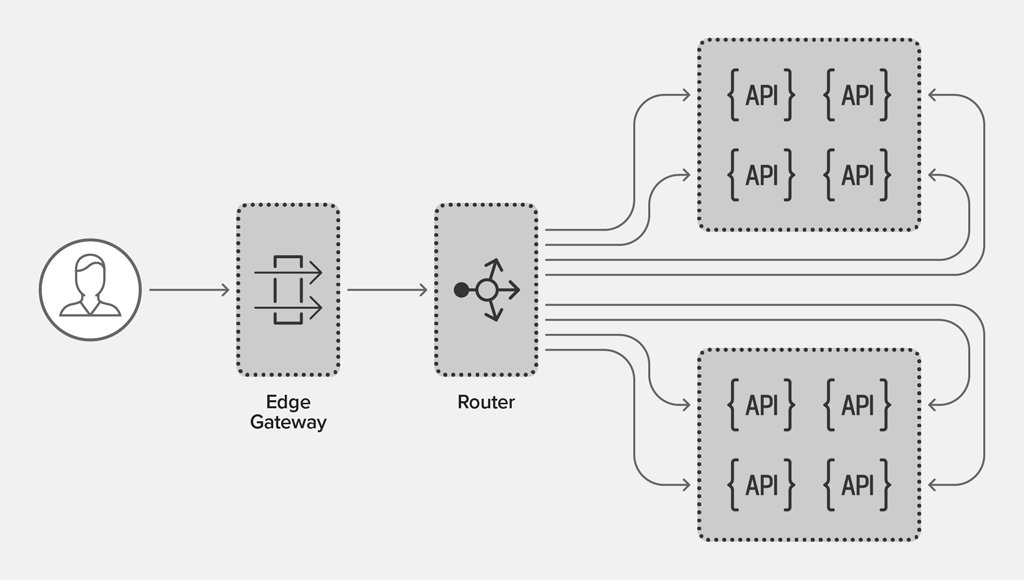

Per-Pod Gateways

The per‑pod gateway pattern modifies the microgateway pattern by embedding proxy gateways into individual pods or containers. The gateway manages ingress traffic to the pod, applies policies such as authentication and rate limiting, then passes the request to the local microservice.

The per‑pod gateway pattern does not perform any routing or load balancing, so it is often deployed in combination with one of the patterns above. Specifically, you might use the per‑pod gateway to provide some or all of the following capabilities:

- SSL/TLS termination for the application in the pod

- Tracing and metrics generation

- Authentication

- Rate limiting and queuing

- Error handling, including circuit breaker‑style error messages

The per‑pod gateway is typically lightweight and its configuration is static. It only forwards traffic to the local microservice instance, so it does not need to be reconfigured if the topology of the application changes. If one of the policies needs to be changed, the microservice pod can be redeployed with a new proxy configuration.
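As a rough illustration of how little a per‑pod gateway needs to know, the following sketch wraps a single local service with authentication and circuit breaker‑style error handling. The class name, status messages, and failure threshold are assumptions, and the breaker is deliberately simplified: it opens after consecutive failures and stays open, whereas a production breaker would also retry after a timeout.

```python
class PerPodGateway:
    """Static-policy proxy in front of one local microservice instance."""

    def __init__(self, local_service, valid_keys, failure_threshold=3):
        # Everything is fixed at construction time; changing a policy means
        # redeploying the pod with a new configuration.
        self.local_service = local_service   # callable standing in for the app
        self.valid_keys = frozenset(valid_keys)
        self.failure_threshold = failure_threshold
        self.failures = 0                    # consecutive upstream failures

    def handle(self, api_key, payload):
        if api_key not in self.valid_keys:               # authentication
            return 401, "unauthorized"
        if self.failures >= self.failure_threshold:      # breaker is open
            return 503, "circuit open"
        try:
            result = self.local_service(payload)         # only ever the local app
        except Exception:
            self.failures += 1
            return 502, "upstream error"
        self.failures = 0                                # success resets the count
        return 200, result
```

Note that there is no route table or list of peers anywhere in the class, which is why a change in application topology does not touch it.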

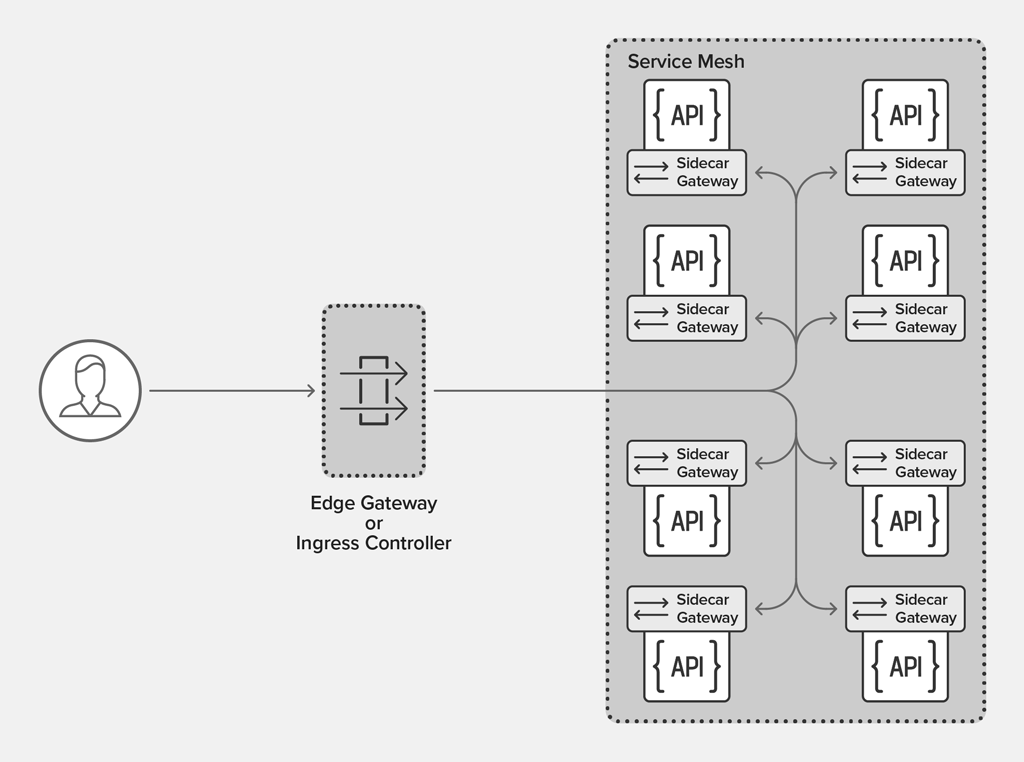

Sidecar Gateways and Service Mesh

The sidecar gateway pattern deploys the gateway as an ingress and egress proxy alongside a microservice. This enables services to speak directly to each other, with the sidecar proxy handling and routing both inbound and outbound communication.

This pattern uses an edge gateway that manages:

- SSL/TLS termination

- Authentication

- Centralized logging

- Tracing injection

Sidecar proxies are then used as the entry point to each service, providing:

- Outbound load balancing

- Service discovery integration

- Inter‑service authentication

- Authorization

The sidecar pattern introduces significant control‑plane complexity because the sidecar proxy can perform outbound routing and load balancing as required only if it’s aware of the entire application topology, which changes frequently. The pattern also introduces data‑plane complexity, as the sidecar proxy must transparently intercept all outbound requests from the local application. The pattern is most easily implemented using a sidecar‑based service mesh, which provides the sidecar proxy, injection, traffic capture, and integrated control plane required by the pattern. The sidecar proxy pattern is slowly emerging as the most popular (albeit still maturing) approach to service meshes, enabling role‑based access control for multiple teams that configure individual proxies from the service‑mesh control plane.
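The control‑plane dependency described above can be seen in a toy model: each sidecar can only load balance outbound calls if something keeps pushing it the current topology. The `ControlPlane` and `SidecarProxy` classes below are hypothetical stand-ins for what a service mesh provides, with round-robin as an assumed balancing policy, and they leave out traffic interception entirely.

```python
class SidecarProxy:
    """Outbound proxy for one service; needs a view of the whole topology."""

    def __init__(self, service_name):
        self.service_name = service_name
        self.topology = {}   # target service -> list of endpoints
        self._next = {}      # target service -> round-robin position

    def update_topology(self, topology):
        # Called by the control plane on every topology change.
        self.topology = {name: list(eps) for name, eps in topology.items()}
        self._next = {name: 0 for name in self.topology}

    def route_outbound(self, target):
        endpoints = self.topology.get(target)
        if not endpoints:
            raise LookupError(f"no known endpoints for {target!r}")
        index = self._next[target] % len(endpoints)
        self._next[target] = index + 1
        return endpoints[index]


class ControlPlane:
    """Tracks service endpoints and pushes them to every registered sidecar."""

    def __init__(self):
        self.topology = {}
        self.sidecars = []

    def register(self, sidecar):
        self.sidecars.append(sidecar)
        sidecar.update_topology(self.topology)

    def set_endpoints(self, service, endpoints):
        self.topology[service] = list(endpoints)
        for sidecar in self.sidecars:   # fan the change out to all proxies
            sidecar.update_topology(self.topology)
```

Every call to `set_endpoints` touches every sidecar, so the cost of a topology change grows with the number of proxies. That fan-out is the control‑plane complexity a service mesh is built to manage.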

NGINX Supports Effective API Delivery

Today, technology is the primary differentiator for innovation, growth, and profitability. APIs are the connective tissue that drives modern digital businesses, enabling access to services and data that allow organizations to deliver improved experiences to customers, internal users, and partners. The more APIs you have, the more critical it is to have the right modern API management solution in place to streamline, scale, and secure APIs.

NGINX provides the fastest API management solution available, combining the raw power and efficiency of NGINX Plus as an API gateway with NGINX Controller, which empowers teams to define, publish, secure, monitor, and analyze APIs at scale across a multi‑cloud environment.

Learn more about why organizations rely on NGINX to manage, secure, and scale their business‑critical APIs.

The post Choosing the Right API Gateway Pattern for Effective API Delivery appeared first on NGINX.
