HTTP/2 Theory and Practice in NGINX Stable, Part 2

This transcription blog post is adapted from a live presentation at nginx.conf 2016 by Nathan Moore. This is the second of three parts of the adaptation. In Part 1, Nathan described SPDY and HTTP/2, discussed proxying under HTTP/2, and summarized HTTP/2’s key features and requirements. In this Part, Nathan talks about NPN/ALPN, NGINX particulars, benchmarks, and more. Part 3 includes the conclusions and a Q&A.


Table of Contents

| Time | Topic |
|---|---|
| 11:58 | HTTP/2 De Facto Requirements |
| 13:56 | NPN/ALPN – Why You Need to Know |
| 14:55 | NPN/ALPN – Support Matrix |
| 15:05 | NGINX Particulars – Practice |
| 16:36 | Mild Digression – HTTP/2 in Practice |
| 17:14 | More HTTP/2 in Practice |
| 17:35 | NGINX Stable: Build from Scratch |
| 17:56 | Benchmark Warnings! |
| 18:35 | Sample Configuration |
| 18:47 | HTTP/2 Benchmarking Tools |
| 19:11 | HTTP/2 Benchmark |
| 19:58 | HTTP/1.1 with TLS 1.2 Benchmark |
| 20:51 | HTTP/1.1 with No Encryption Benchmark |
| 21:42 | Thoughts on Benchmark Results |

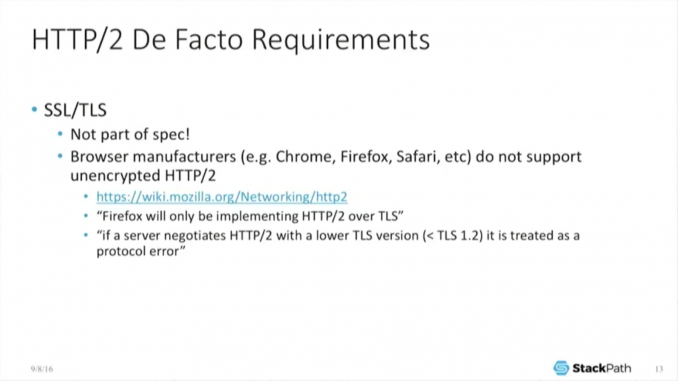

11:58 HTTP/2 De Facto Requirements

Okay, the real downside is what wound up happening with the formal spec: nobody could agree on whether to force SSL or not, whether to force encryption or not. And so all the browser manufacturers got together and decided, well, you know, we’re just not going to support unencrypted H2. So it’s a de facto requirement; it’s not part of the spec.

But if you intend to support it, you have to do it anyway. And again, this makes the configuration a little bit difficult, a little bit different, because now you’re stuck dealing with SSL even for pictures of cats or other things which may not necessarily require SSL encryption. And it gets a little bit worse than that. I quote from Mozilla here, and you can read that yourself, of course.

It’s not only that Firefox will only be implementing H2 over TLS, along with the other major browsers, but if the server negotiates H2 with a TLS version lower than 1.2, it is treated as a protocol error. So now you can’t support TLS 1.1 and expect your Firefox users to be able to negotiate an H2 connection to you.

Now that’s a protocol error, and of course it’s going to be different per browser. Some browsers will let you do it, some won’t.

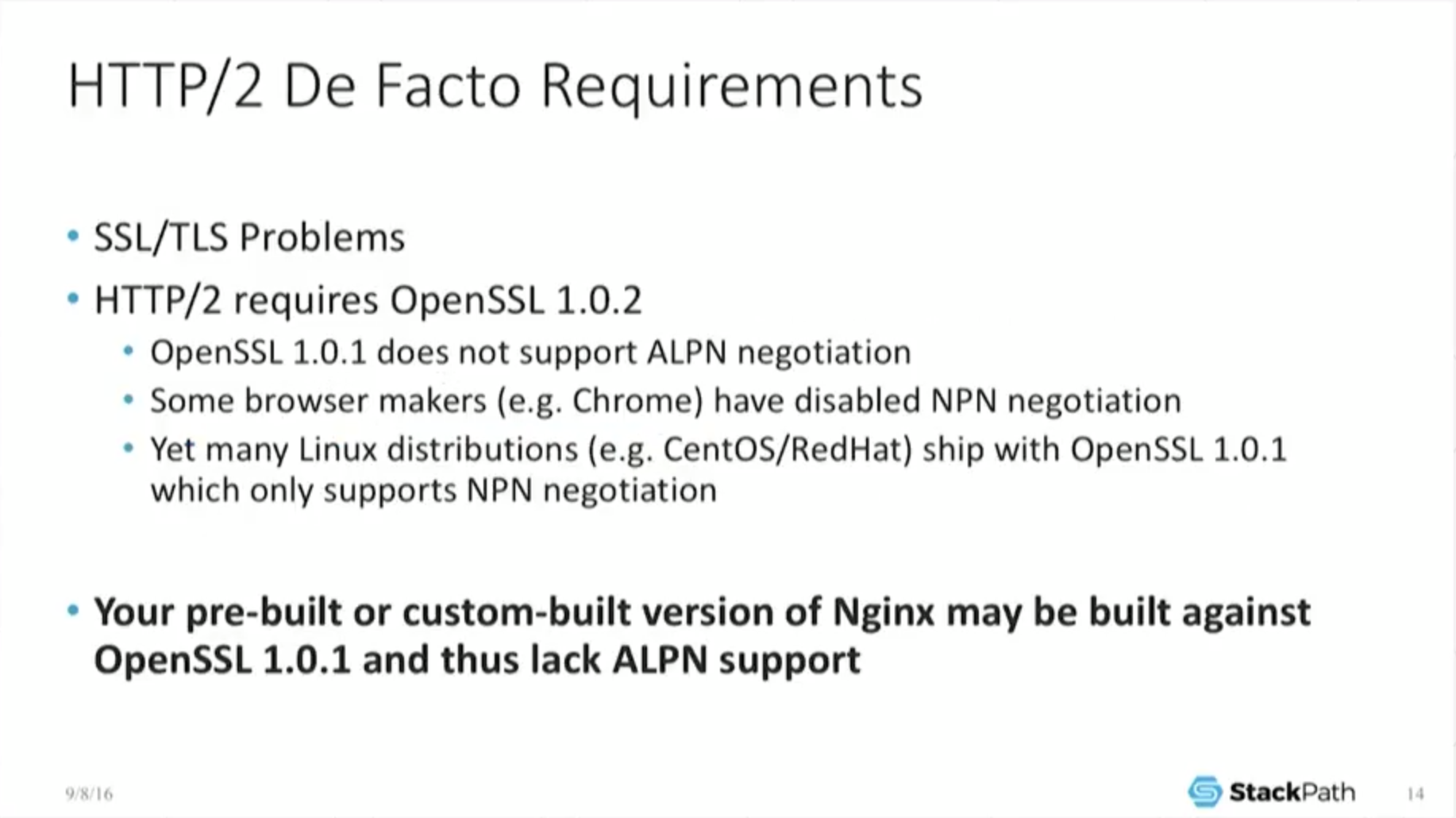

Now it gets a little bit stranger than that. Back in the SPDY days, SPDY implemented something called NPN, Next Protocol Negotiation (that’ll be the next slide), and unfortunately, that is the support that is bundled in OpenSSL 1.0.1. But H2 is unhappy with just NPN and, again, that’s not part of the spec.

One of the browser manufacturers has decided that they want a different protocol. Why is this a big deal? Well, most Linux distributions, like Red Hat and CentOS, even CentOS 7 if you run with that, ship with OpenSSL 1.0.1.

So, you know, your prebuilt or custom-built version of NGINX may be built against an old version of OpenSSL and thus lack the needed ALPN support, which HTTP/2 de facto requires for some browsers.

13:56 NPN/ALPN – Why You Need to Know

So NPN, Next Protocol Negotiation, had a very noble beginning. It was absolutely recognized that SSL has an overhead. It takes a while to negotiate it, and the goal was to remove a step in the protocol negotiation by moving the next protocol negotiation into the SSL handshake itself, thus saving at least one, possibly more round trips.

So this was done for performance reasons. It’s not just that we felt like throwing in additional complexity for the sake of it. So SPDY, the earlier protocol, relied on NPN to do it, but when H2 came along, it was recognized that the scope of NPN was way too narrow.

It only applied basically to H2 (well, SPDY, in this case). And what they wanted was something that was much more general, that you could apply to any possible application that may choose to use this in the future, hence Application Layer Protocol Negotiation, which is what ALPN actually stands for.

And up there, I just have the abbreviated handshake, real quick, just to show how it is that it’s working.
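The negotiation step described above can be sketched without any networking. This is a minimal illustration, not the OpenSSL implementation: the client lists the protocols it can speak inside the TLS handshake, and the server picks one. The function name `select_alpn` is hypothetical, and picking in server preference order is an assumption; `h2` and `http/1.1` are the registered ALPN identifiers.

```python
from typing import Optional, Sequence


def select_alpn(client_offered: Sequence[str],
                server_preferred: Sequence[str]) -> Optional[str]:
    """Return the protocol a server would pick, or None if nothing overlaps.

    Assumption: the server walks its own preference list and takes the
    first entry the client also offered.
    """
    offered = set(client_offered)
    for proto in server_preferred:
        if proto in offered:
            return proto
    return None


# A browser-like client offers HTTP/2 first, then HTTP/1.1.
client_hello = ["h2", "http/1.1"]

print(select_alpn(client_hello, ["h2", "http/1.1"]))  # h2
print(select_alpn(client_hello, ["http/1.1"]))        # http/1.1
```

Because the choice rides along with the handshake, no extra round trip is spent asking which protocol to speak.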

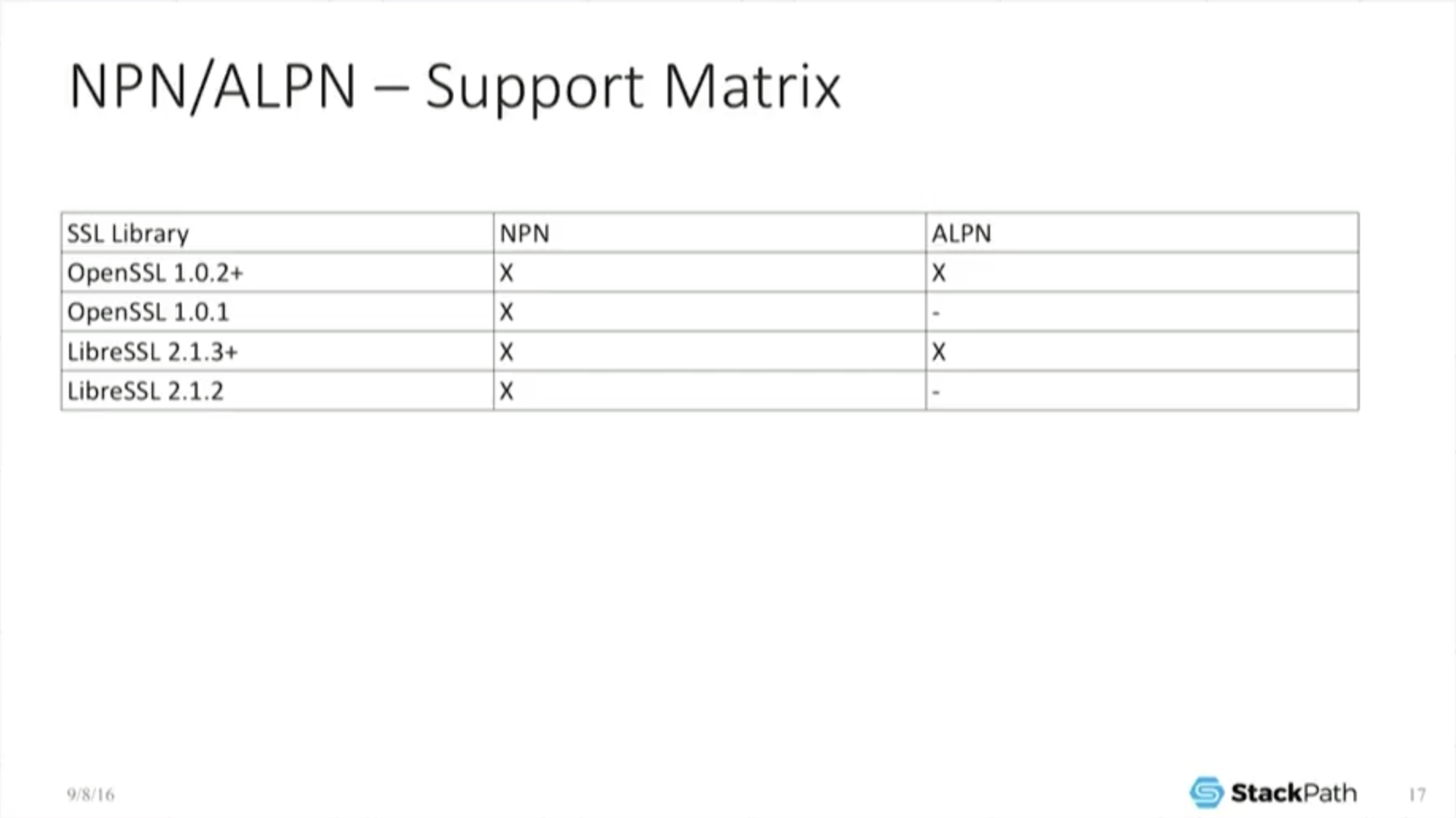

14:55 NPN/ALPN – Support Matrix

So again, the RFC associated with that is in there. And since I know that a lot of people will be just looking at the slides later, I included the support matrix just to keep it clear: you need the newer version of OpenSSL.
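As a rough way to check the "newer OpenSSL" requirement, the sketch below compares a version tuple against 1.0.2, the release line that added ALPN (1.0.1 only has NPN). The helper name `openssl_supports_alpn` is hypothetical. Note that this inspects the OpenSSL that Python itself is linked against, which is not necessarily the one your NGINX binary was built with; for NGINX, the `nginx -V` output reports the library it was built with.

```python
import ssl


def openssl_supports_alpn(version_info):
    """True if the (major, minor, fix, ...) tuple is OpenSSL 1.0.2 or newer."""
    return tuple(version_info[:3]) >= (1, 0, 2)


# Report on the OpenSSL library this Python interpreter uses.
print(ssl.OPENSSL_VERSION)
print("ALPN by version check:", openssl_supports_alpn(ssl.OPENSSL_VERSION_INFO))
print("ALPN per ssl.HAS_ALPN:", ssl.HAS_ALPN)
```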

15:05 NGINX Particulars – Practice

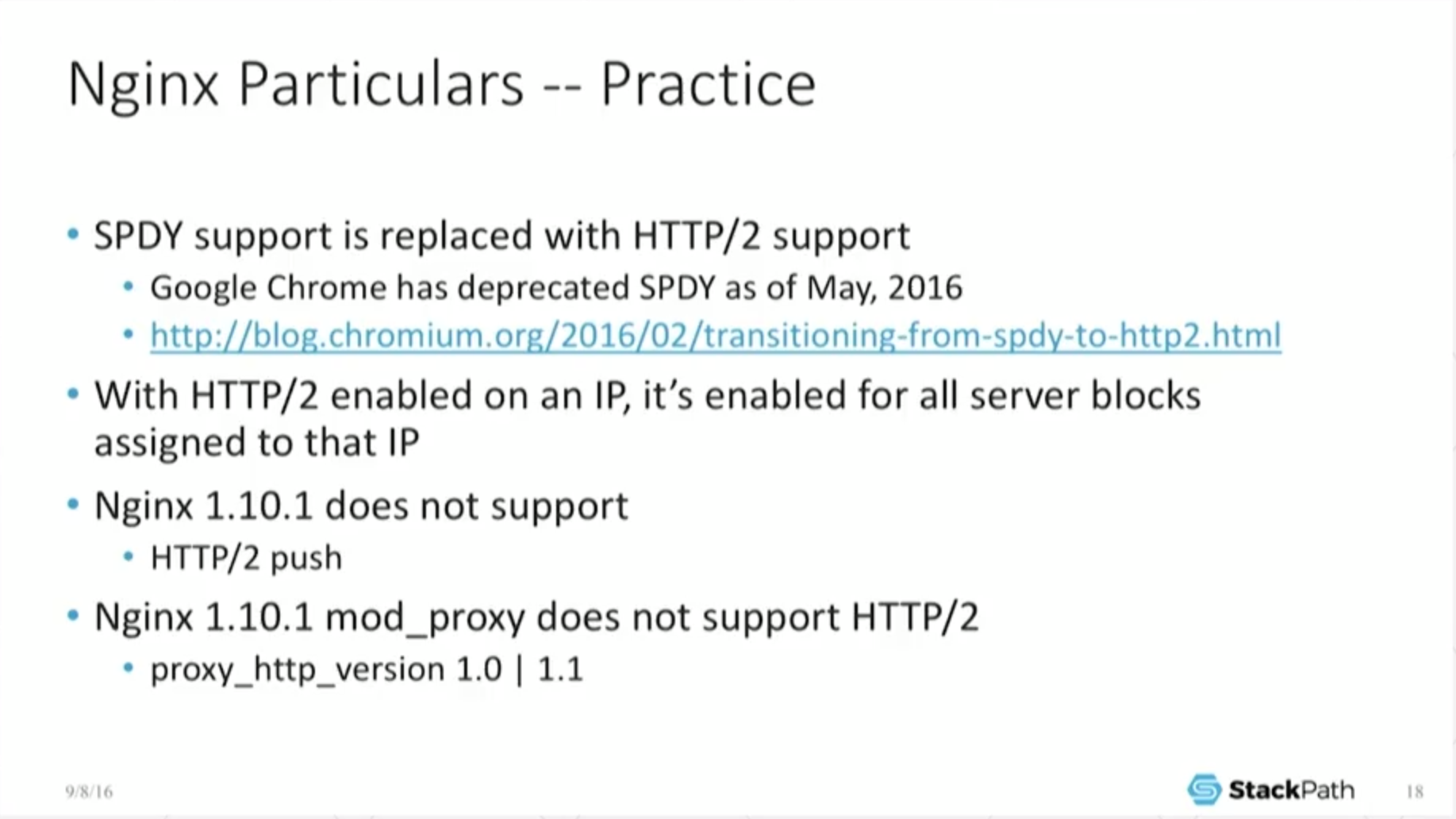

Alright, so now some of the practice. That was enough of the theory – that’s kind of what it does for us – what about the practice? When we go through to actually do this, especially inside of NGINX, what are the implementation particulars? What are the gotchas? What’s actually going on?

Part of it is: SPDY support is now gone. This is not intrinsically a bad thing, because Google itself deprecated SPDY support as of May. So all the newer builds of Chrome don’t do SPDY anyway. It’s not that big of a deal.

There are other H2 implementations in which SPDY can coexist. Again, it comes down to the NPN and ALPN thing: you can negotiate to the other protocols. But NGINX doesn’t. That’s fine.

If H2 is enabled on an IP, it’s enabled for all server blocks assigned to that IP, which is why, from our perspective with our dedicated IP service, that was a big deal to us. That’s how we can control whether we allow or disallow H2 access, assuming you have a dedicated IP.

If you use a shared IP with us and use a shared cert, too bad, you’re getting H2 whether you like it or not. So NGINX 1.10, the main line, does not currently support H2 push. It might in the future (bug the NGINX guys), but currently it doesn’t.

And what is most interesting here is the fact that mod_proxy does not support H2, and this is one of Valentin’s points in his talk yesterday which is an excellent, excellent point, in that it may not necessarily ever support H2 because it may not necessarily make sense. So just bear that in mind.

16:36 Mild Digression – HTTP/2 in Practice

Real quick digression: when you are testing, how do you know that, if you want H2, you’re actually getting it? How do you test for it? If you want H2 support in curl, you need a newer version, 7.34 or newer, and once again it has to be built against the newer OpenSSL; otherwise it can’t do ALPN negotiation.

If you’re using a web browser like Chrome, if you use the developer tools, that also displays which protocol it is that it’s negotiated. So it’ll tell you if it’s got H2 and, of course, NGINX itself will log this.

So if you look at the common log format, sure enough (and I have it highlighted in blue), it actually tells you yes, I did get an H2 connection and I wrote it down right here. Fine.
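To pull that protocol field out of an access log programmatically, a small sketch like the following may help. The sample log line is made up for illustration (it is not from the slide), and `request_protocol` is a hypothetical helper; NGINX records HTTP/2 requests with `HTTP/2.0` in the request line.

```python
import re

# Matches the quoted request line, e.g. "GET /100.txt HTTP/2.0".
REQUEST_RE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) (?P<protocol>HTTP/[\d.]+)"'
)


def request_protocol(log_line):
    """Return the protocol from an access log line, or None if absent."""
    match = REQUEST_RE.search(log_line)
    return match.group("protocol") if match else None


# Hypothetical log entry in common log format.
sample = '127.0.0.1 - - [07/Sep/2016:10:15:32 -0700] "GET /100.txt HTTP/2.0" 200 100'
print(request_protocol(sample))  # HTTP/2.0
```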

17:14 More HTTP/2 in Practice

Another side note: what happens if you cannot negotiate an H2 connection? Well, you downgrade gracefully to H1. Once again, you’re not allowed to break the Web.

This has to have some method of interoperability. So the failure mode of H2 is that you just downgrade. Sure, it may take a little bit longer, but you know whatever, it still works. You still get your content. It does not break.
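The graceful downgrade can be seen from the client side with Python's standard `ssl` module. This is a sketch under assumptions: the helper names are hypothetical, the host in the commented-out call is a placeholder, and treating "no ALPN result" as HTTP/1.1 mirrors the fallback described above rather than any particular browser's logic.

```python
import socket
import ssl


def effective_protocol(negotiated):
    """Map the ALPN result to what the client will speak; anything else is H1."""
    return "h2" if negotiated == "h2" else "http/1.1"


def probe(host, port=443, timeout=5.0):
    """Open a TLS connection offering h2 and http/1.1; report the outcome."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(["h2", "http/1.1"])
    with socket.create_connection((host, port), timeout=timeout) as raw:
        with context.wrap_socket(raw, server_hostname=host) as tls:
            # selected_alpn_protocol() is None when the server ignored ALPN.
            return effective_protocol(tls.selected_alpn_protocol())


# Needs network access; the host name is a placeholder.
# print(probe("example.com"))
```

A server without ALPN support still completes the handshake, so the client simply carries on with HTTP/1.1.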

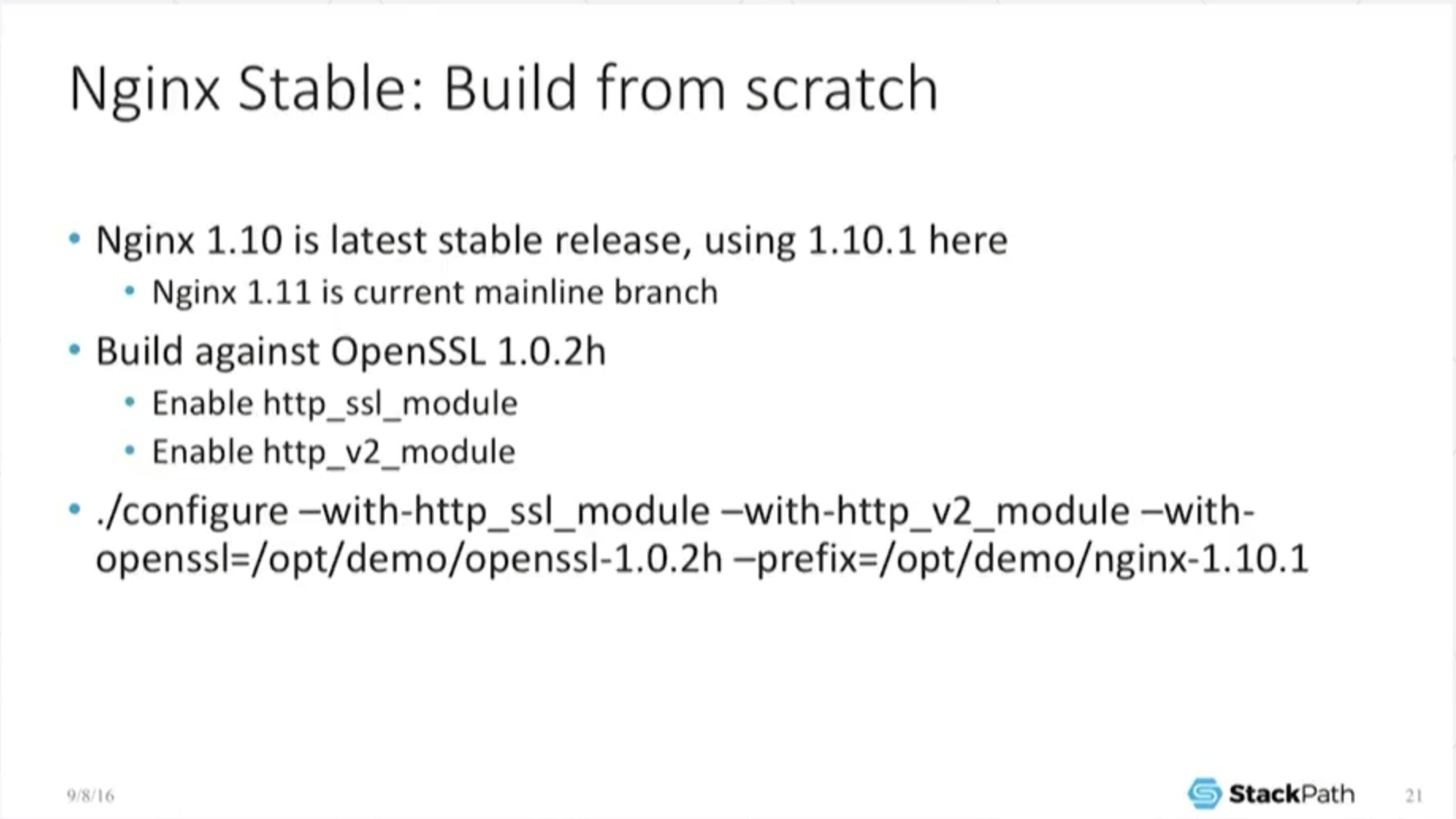

17:35 NGINX Stable: Build from Scratch

So I’m going to show some results of doing some very, very basic, simple benchmarking. It’s sort of comedically simple and it’s here to make a big point about this. And so I’m just showing you what it is that I’ve done: I just, you know, enable SSL and enable the v2 module. Fine.

17:56 Benchmark Warnings!

So this little benchmark is run on this little MacBook Air. If your app has so few users and so little traffic that you can actually host it on a MacBook Air, then, you know, this benchmark probably does not apply to you. I did this deliberately.

So I want to emphasize this does not simulate your app. It does not simulate a real-world production environment. It’s very deliberately a limited environment, and I promise the point that I’m trying to make out of this is going to pop out and it will be really, really clear once we actually start looking at some of the results.

And it’s only going to take another second. I just want to emphasize again: when you go and do this sort of benchmarking yourself, chances are you’re going to get a different result because you’re going to do it on a real server, not on your little MacBook Air.

18:35 Sample Configuration

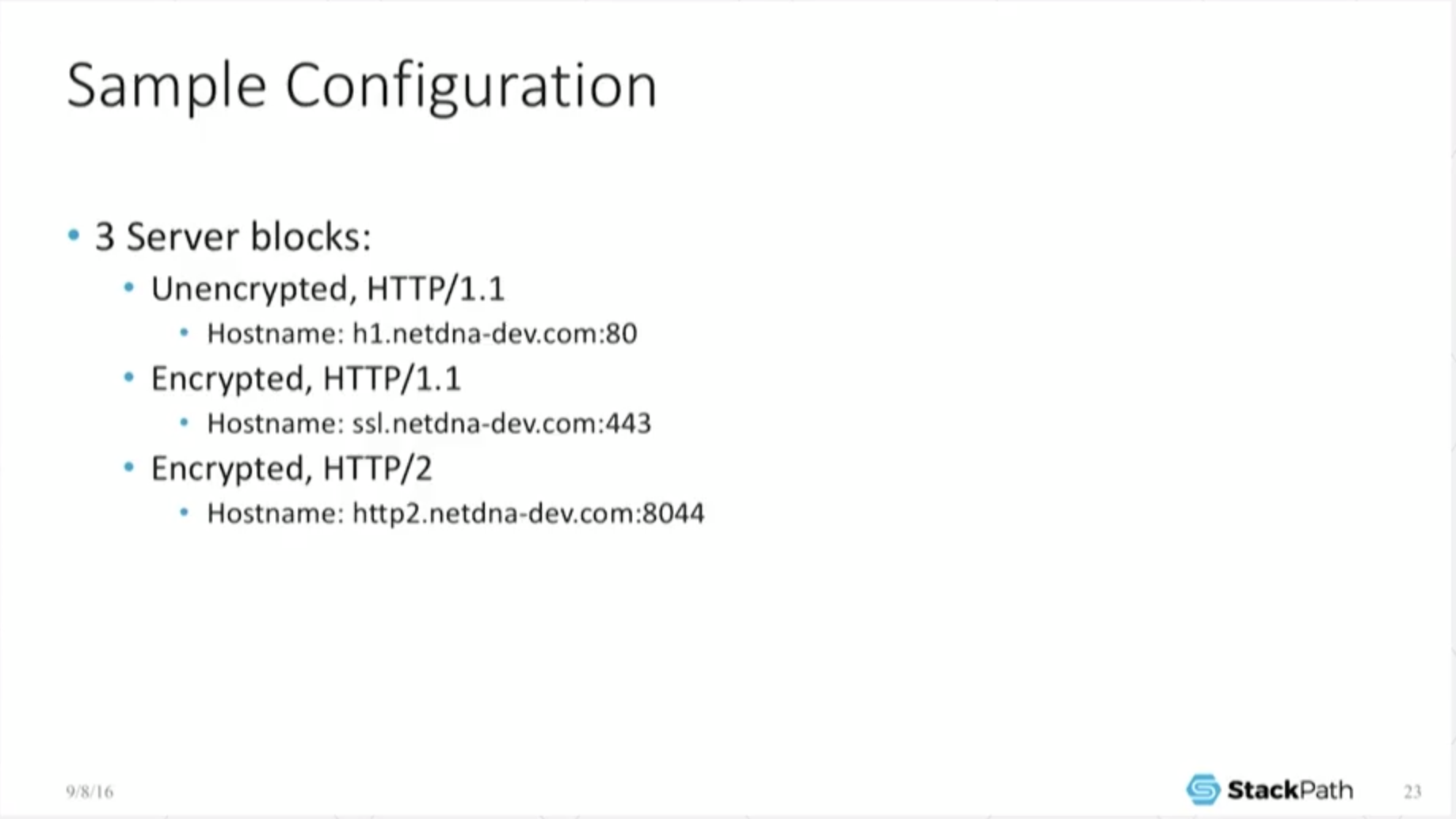

So all I’ve done here is: I’ve configured three little server blocks: one is unencrypted H1, one is encrypted H1 using TLS 1.2, and one is H2, right?

18:47 HTTP/2 Benchmarking Tools

So the benchmark I’m using, real simple: h2load. It comes out of the nghttp2 project. It’s really simple.

All it’s doing is opening up a connection, making a request, in this case for a 100-byte object (again, stupid-simple), and it asks for it as fast as it possibly can, gets the response back, and measures, well, how many did I get back within a given period of time? In my case, I’m just asking for 50,000 of the exact same object one after the other. So, fine.
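The measurement loop described above boils down to "issue N requests back to back and time them". The sketch below shows only that idea; it is not how h2load is implemented (h2load can drive many clients and concurrent streams), and `fake_fetch` is a stand-in that returns 100 bytes without touching the network.

```python
import time


def run_benchmark(fetch, total_requests):
    """Call fetch() back to back and report simple throughput numbers."""
    start = time.perf_counter()
    for _ in range(total_requests):
        fetch()
    elapsed = time.perf_counter() - start
    return {
        "requests": total_requests,
        "seconds": elapsed,
        "requests_per_second": total_requests / elapsed if elapsed > 0 else float("inf"),
        "mean_seconds_per_request": elapsed / total_requests,
    }


def fake_fetch():
    # Placeholder for "request the 100-byte object"; no real I/O happens here.
    return b"x" * 100


result = run_benchmark(fake_fetch, 50_000)
print(result["requests"], "requests in", round(result["seconds"], 3), "s")
```

Swapping `fake_fetch` for a real HTTP call would turn this into a crude sequential load test, with all the caveats about benchmarks that follow.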

19:11 HTTP/2 Benchmark

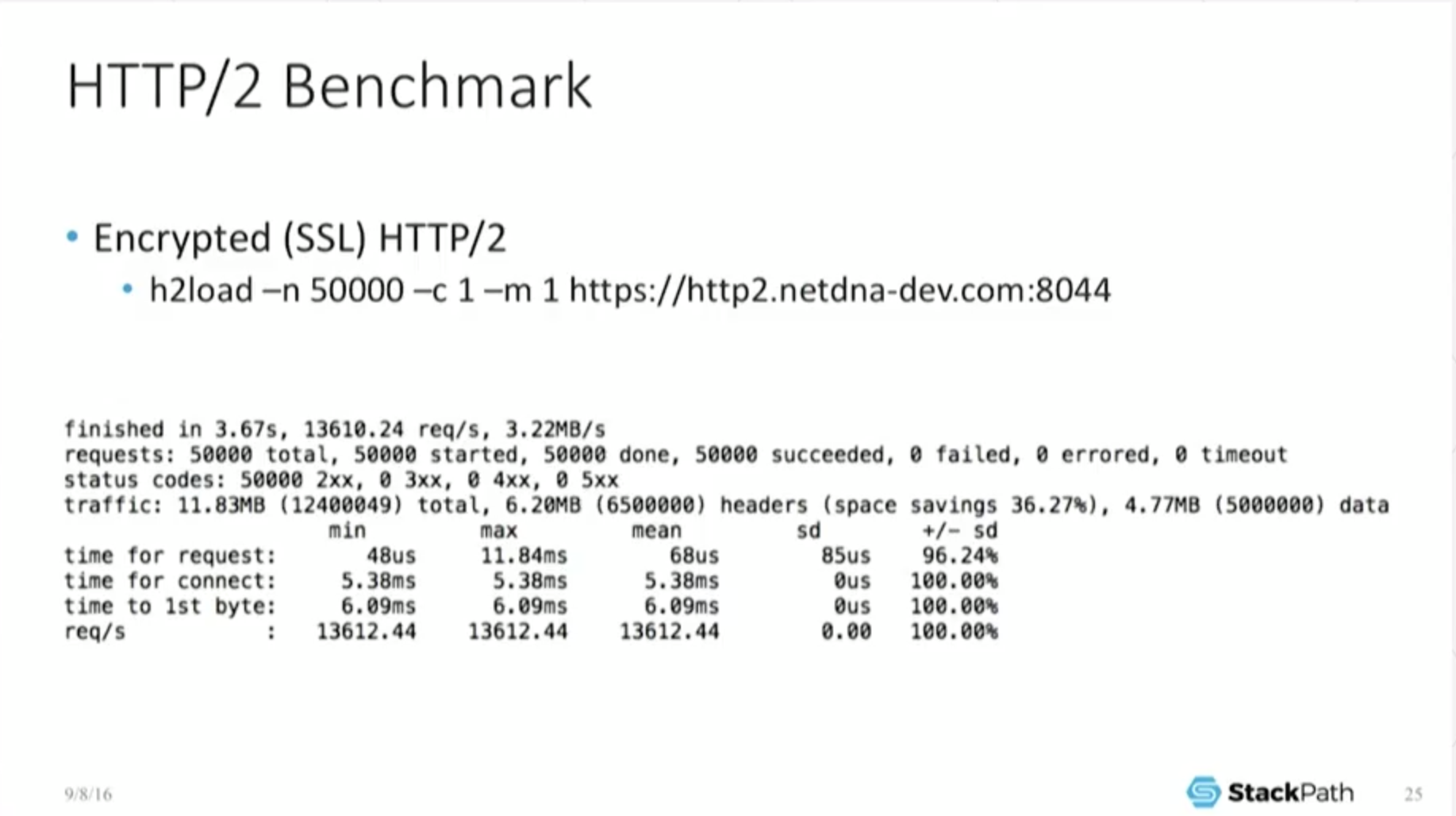

What does H2 look like? And again, it’s a deliberately limited benchmarking environment. This is not a real-world test. But I want to point out that the 50,000 requests finished in 3.67 seconds, so I’m pushing 13,600-odd requests per second.

If you look at the mean time for requests, 68 microseconds, it’s okay. It’s not too shabby. And what I want to point out here is that in this benchmark, it says its space savings is 36 percent. Again, you won’t see this in the real world.

This is because I have a 100-byte object. So if I’m able to compress my headers down, well, my headers are actually a very reasonable percentage of the total object size, and that’s why that number is so high. But it’s here to show that yes, the header compression really works. It really is doing something.
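A quick calculation shows why headers loom so large for a tiny object. The 200-byte header size below is purely an assumption for illustration (the talk does not give the header size), and this is not the formula h2load uses for its space-savings figure; it only shows how much of the transfer is headers at different object sizes.

```python
def header_share(header_bytes, body_bytes):
    """Fraction of the bytes on the wire that are headers."""
    return header_bytes / (header_bytes + body_bytes)


ASSUMED_HEADER_BYTES = 200  # hypothetical value, not taken from the talk

small = header_share(ASSUMED_HEADER_BYTES, 100)      # the 100-byte test object
large = header_share(ASSUMED_HEADER_BYTES, 100_000)  # a hypothetical 100 KB object

print(f"100 B object:  {small:.1%} of the transfer is headers")
print(f"100 KB object: {large:.1%} of the transfer is headers")
```

With a realistic payload the header share shrinks to a rounding error, which is why the effect of header compression is far less visible outside a benchmark like this one.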

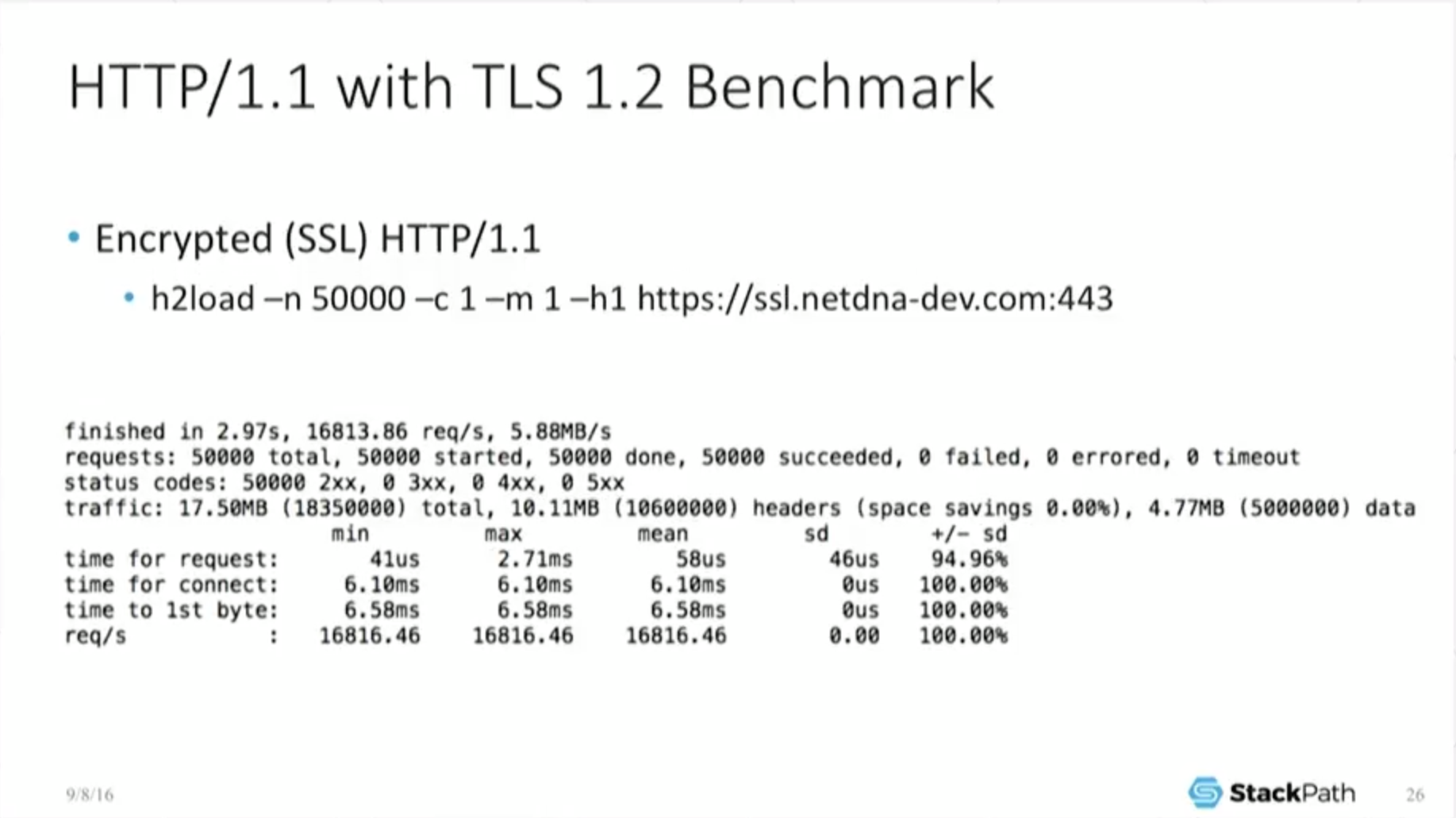

19:58 HTTP/1.1 with TLS 1.2 Benchmark

So, drumroll please. What if I only want to hit it with HTTP/1.1 over TLS 1.2? Exact same setup, exact same object, exact same little testing environment, and this all finished in 2.97 seconds. Well, that’s weird.

So if you look at the mean time for request, it’s down to about 58 microseconds as opposed to 68, and I can push, what, 16.8 thousand requests per second versus the 13.6 thousand I was doing in the last benchmark.

So Valentin’s point was spot on. H2 actually does have additional overhead, especially on the server side, and that can slow you down. It really is there and we can see it in the actual benchmark.

This is why I wanted this limited benchmark set, because now it’s comedically obvious that there really is a difference there. So you have to be careful. This is not a magic make-it-go-faster button.

20:51 HTTP/1.1 with No Encryption Benchmark

But it gets a little bit more interesting because, well, let’s take a look at what unencrypted does. If I make a plain old H1 unencrypted connection, now I complete in 2.3 seconds. I’m pushing 21.7 thousand requests per second instead of 16.8 thousand, instead of 13.6.

So not only does H2 have an overhead, SSL also has a major, major overhead that can be very, very substantive. And look: now my mean time for request is down to 45 microseconds, right? So this expense, you can see it. It actually is here.

And you have to be very, very careful for your use case. One, you have to make sure that you meet the de facto requirement of H2, which is SSL. You also need to be able to utilize the actual use cases that H2 provides: header compression has to make sense for you, and, you know, you have to be able to handle the interleaved requests.
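The three throughput figures follow directly from the completion times quoted in the talk: 50,000 requests divided by the elapsed seconds. The sketch below redoes that arithmetic and expresses each run relative to the unencrypted one; the dictionary labels are just descriptive names chosen here.

```python
TOTAL_REQUESTS = 50_000

# Wall-clock completion times quoted in the talk, in seconds.
runs = {
    "HTTP/2 over TLS": 3.67,
    "HTTP/1.1 over TLS 1.2": 2.97,
    "HTTP/1.1 unencrypted": 2.3,
}


def requests_per_second(total, seconds):
    """Throughput for a run that completed `total` requests in `seconds`."""
    return total / seconds


baseline = requests_per_second(TOTAL_REQUESTS, runs["HTTP/1.1 unencrypted"])
for name, seconds in runs.items():
    rps = requests_per_second(TOTAL_REQUESTS, seconds)
    print(f"{name}: {rps:,.0f} req/s ({rps / baseline:.0%} of unencrypted)")
```

The results come out at roughly 13.6, 16.8, and 21.7 thousand requests per second, matching the numbers read off the slides.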

21:42 Thoughts on Benchmark Results

And you can’t just assume that everything is magically going to go faster just because you enabled it. So you really, really, really have to keep in mind that there is a cost associated with this. You better make sure the benefit that you need is there in order to justify doing it.

Which is why at little MaxCDN, we’re very careful to make sure that this is a user-choosable option, and we trust the end user to be smart enough and clever enough to have done their own benchmarking to know whether or not it actually works for them. So it affects both throughputs and latencies.


The post HTTP/2 Theory and Practice in NGINX Stable, Part 2 appeared first on NGINX.
