Maximizing Python Performance with NGINX, Part II: Load Balancing and Monitoring

Introduction: Using Multiple Servers

Part I of this two-post blog series tells you how to maximize Python application server performance with a single-server implementation and how to implement static file caching and microcaching using NGINX. Both kinds of caching can be implemented either in a single-server or – for better performance – a multiserver environment.

Python is known for being a high-performance scripting language; NGINX can help in ways that are complementary to the actual execution speed of your code. For a single-server implementation, moving to NGINX as the web server for your application server can open the door to big increases in performance. In theory, static file caching can roughly double performance for web pages that are about half static files, as many are. Caching dynamic application content can lead to even bigger increases in application performance.

However, you may not find all these steps practical, and at some point the performance increases you achieve may not be enough. At this point, you need to consider “scaling out”: moving to a multiserver implementation that’s powerful, flexible, and highly scalable, at relatively low cost.

Moving to a multiserver setup is also a good time to consider upgrading from open source NGINX to NGINX Plus. Open source NGINX has its own good points: it has community support, is widely used, and provides prebuilt distributions as well as the source code. It’s often a good choice for use as a web server for a single-server site.

NGINX Plus adds preconfigured distributions; professional support; access to NGINX engineers; advanced load balancing of HTTP, TCP, and UDP traffic; intelligent session persistence; application health checks; live activity monitoring; media delivery features; and subscription-based pricing, per instance, bundle, or application.

These NGINX Plus features are useful on a standalone web server, but really shine in a multiserver architecture. You can use NGINX Plus to create fast, flexible, resilient, and easy-to-manage applications.

For Steps 1 to 5, see Part I of this series.

Step 6. Deploy NGINX as a Reverse Proxy Server

Deploying a reverse proxy server might seem like a big step up when moving from a single-server environment, but it can be surprisingly easy, and very powerful. Adding a reverse proxy server gives you an immediate performance boost and opens the door to additional steps:

- Boosting performance: Put an NGINX server in front of your existing Python application. You don’t need to change either the web server software or its configuration. The existing combination of web server and application server runs in a bubble, isolated from direct access to web traffic, and is spoon-fed requests by the reverse proxy server.

- Even more performance: Implement static file caching and microcaching for application-generated files, as described in Part I, but implement them on the new reverse proxy server instead of the application server. The workload on the server running the application drops, often sharply, increasing the application’s capacity and delivering better performance to all users.

- Scaling out: Add more application servers, implement load balancing among them (NGINX Plus has advanced features for this), and use session persistence for continuity in each user’s experience.

- Adding high availability: Make your site highly available by mirroring your reverse proxy server to a live backup and keeping spare application servers.

- Monitoring and management: NGINX Plus includes advanced monitoring and management features, plus active health checks: when configured to do so, the reverse proxy server proactively sends out-of-band requests to servers to verify their availability.
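To make the “bubble” idea concrete, here is a minimal sketch of a Python application server that listens only on the loopback interface, so clients can reach it only through the reverse proxy, and that exposes a cheap status URL an active health check could probe (in NGINX Plus, the health_check directive’s uri parameter selects the URL to request). The port 8080 is an assumption chosen to match the localhost:8080 proxy_pass target in the caching example; the /healthz path and the function names are hypothetical.

```python
from wsgiref.simple_server import make_server


def app(environ, start_response):
    """Tiny WSGI application that NGINX proxies to (illustrative only)."""
    if environ.get("PATH_INFO") == "/healthz":
        # Hypothetical health-check URL: cheap to serve, touches no database.
        body = b"ok"
    else:
        # Stand-in for real application-generated content.
        body = b"Hello from the Python application server\n"
    start_response("200 OK", [("Content-Type", "text/plain"),
                              ("Content-Length", str(len(body)))])
    return [body]


def serve(host="127.0.0.1", port=8080):
    """Listen on loopback only, so clients must come through the reverse proxy."""
    with make_server(host, port, app) as server:
        server.serve_forever()
```

Call serve() from a small launcher script for local testing; in production you would more likely run app under a dedicated WSGI server (for example, Gunicorn bound to 127.0.0.1:8080) and keep the same loopback-only binding.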

NGINX also has a number of security advantages in various configurations. When you deploy NGINX as a reverse proxy server, you can optimize and update it for security, including protection from denial-of-service attacks, while configuring the application servers for maximum performance.

Both static file caching and microcaching are powerful tools for enhancing performance. To enable content caching, include the proxy_cache_path and proxy_cache directives in the configuration:

```nginx
# Define a content cache location on disk
proxy_cache_path /tmp/cache keys_zone=mycache:10m inactive=60m;

server {
    listen 80;
    server_name localhost;

    location / {
        proxy_pass http://localhost:8080;
        # Reference the cache in a location that uses proxy_pass
        proxy_cache mycache;
    }
}
```

There are several other directives for fine-tuning your content caching configuration. For details, see NGINX Content Caching in the NGINX Plus Admin Guide.
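Microcaching works best when the application states how long a response stays fresh. With the configuration above, which sets no proxy_cache_valid directive, NGINX takes the caching time from response headers such as Cache-Control, so one option is to have the Python application add a short max-age. The sketch below is a hypothetical WSGI middleware; the name microcache and the one-second default are assumptions, and setting proxy_cache_valid in NGINX is an alternative that needs no application change.

```python
def microcache(app, max_age=1):
    """Wrap a WSGI app so successful GET responses carry a short Cache-Control max-age."""
    def wrapped(environ, start_response):
        def start_with_cache_header(status, headers, exc_info=None):
            cacheable = (environ.get("REQUEST_METHOD", "GET") == "GET"
                         and status.startswith("200"))
            # Respect any Cache-Control header the application already set.
            has_cache_control = any(name.lower() == "cache-control"
                                    for name, _ in headers)
            if cacheable and not has_cache_control:
                headers = list(headers) + [
                    ("Cache-Control", f"public, max-age={max_age}")]
            return start_response(status, headers, exc_info)
        return app(environ, start_with_cache_header)
    return wrapped
```

Usage is a one-line wrap, such as application = microcache(app). A one-second lifetime means that under heavy load most requests for a popular page are answered from the NGINX cache, while content is never more than about a second stale.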

NGINX also gives you flexibility across cloud deployments and your own servers. For instance, some NGINX users run traffic on their on-premises servers up to a certain point, then add cloud instances to handle traffic peaks and cover for internal server downtime. This kind of flexibility is relatively easy to achieve once you take the initial step of deploying NGINX as a reverse proxy server.

Using a reverse proxy server involves additional costs (for the server, whether hardware or a cloud instance) and some setup work. However, the benefits to your site are achieved without changes to your application code or web server configuration.

Step 7. Rewrite URLs

Web server configurations often include URL rewrite rules. You can make “pretty” URLs that are understandable to users, keep a fixed URL when a resource has moved, and manage cached vs. dynamically generated traffic, among other purposes.

NGINX configuration, including URL rewriting, uses a small number of directives and is generally considered simple and clear. However, there is a learning curve if you’re not familiar with it. Our blog post Creating NGINX Rewrite Rules is a good introduction.

Here’s a sample NGINX rewrite rule that uses the rewrite directive. It looks for URLs that begin with /download and also include the /media/ or /audio/ directory later in the path. It replaces those elements with /mp3/ and adds the appropriate file extension, .mp3 or .ra. The $1 and $2 variables capture the path elements that aren’t changing. As an example, /download/cdn-west/media/file1 becomes /download/cdn-west/mp3/file1.mp3.

```nginx
server {
    ...
    rewrite ^(/download/.*)/media/(\w+)\.?.*$ $1/mp3/$2.mp3 last;
    rewrite ^(/download/.*)/audio/(\w+)\.?.*$ $1/mp3/$2.ra  last;
    return  403;
    ...
}
```
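A quick way to check what a rewrite pattern does before reloading NGINX is to try it in Python. The sketch below tests the patterns ^(/download/.*)/media/(\w+)\.?.*$ and its /audio/ counterpart, in which the dot before the extension is escaped so that it matches a literal period. NGINX uses PCRE, and for simple patterns like these Python’s re module behaves the same way; the rewrite function is hypothetical and does not model the last flag or any other location processing.

```python
import re

# Each rule: (compiled pattern, replacement template). \1 and \2 correspond to $1 and $2.
RULES = [
    (re.compile(r"^(/download/.*)/media/(\w+)\.?.*$"), r"\1/mp3/\2.mp3"),
    (re.compile(r"^(/download/.*)/audio/(\w+)\.?.*$"), r"\1/mp3/\2.ra"),
]


def rewrite(uri):
    """Return the rewritten URI, or None where NGINX would fall through to return 403."""
    for pattern, replacement in RULES:
        match = pattern.match(uri)
        if match:
            return match.expand(replacement)
    return None


print(rewrite("/download/cdn-west/media/file1"))      # /download/cdn-west/mp3/file1.mp3
print(rewrite("/download/cdn-west/media/file1.flv"))  # /download/cdn-west/mp3/file1.mp3
```

The second call shows that an existing extension such as .flv is stripped before the new one is added.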

You may have existing rewrite rules in Apache which you want to reimplement in NGINX, either directly or as a starting point for your NGINX configuration. Apache uses .htaccess files, which NGINX does not support. (Because .htaccess files are also hierarchical, and the effects are cumulative wherever multiple levels of .htaccess files apply, you might need to do some investigation to identify the configuration rules which are active in a given context.)

Here’s a brief example. The following Apache rule adds the http protocol identifier and the www prefix to a URL:

```apache
RewriteCond %{HTTP_HOST} example.org
RewriteRule (.*) http://www.example.org$1
```

To accomplish the same thing in NGINX, in the first server block you match requests for the shorter URL and redirect them to the second server block, which matches the longer URL.

```nginx
# USE THIS CONVERSION
server {
    listen 80;
    server_name example.org;
    return 301 http://www.example.org$request_uri;
}

server {
    listen 80;
    server_name www.example.org;
    ...
}
```
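The behavior of these two server blocks can be modeled as a small Python function, which is handy for writing unit tests of your redirect expectations before changing production configuration. This is a simplified model for illustration, not how NGINX matches server names internally; the function name canonical_redirect is hypothetical, and stripping a port from the Host header is a simplification that ignores IPv6 literals.

```python
def canonical_redirect(host, request_uri):
    """Model the first server block: redirect example.org to www.example.org."""
    # server_name matches host names without the port, case-insensitively.
    hostname = host.split(":", 1)[0].lower()
    # An exact server_name match means www.example.org is never redirected again.
    if hostname == "example.org":
        # $request_uri is the original URI, including any query string.
        return 301, "http://www.example.org" + request_uri
    return None  # Handled by the www.example.org server block instead.
```

Because $request_uri carries the query string, a request for /search?q=nginx on example.org is redirected to http://www.example.org/search?q=nginx with its parameters intact.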

For additional detail and more examples, see our blog post, Converting Apache Rewrite Rules to NGINX Rewrite Rules.

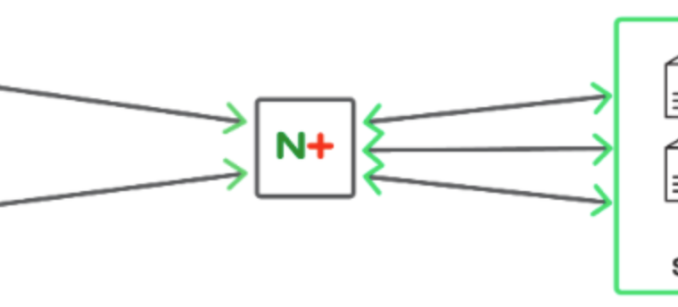

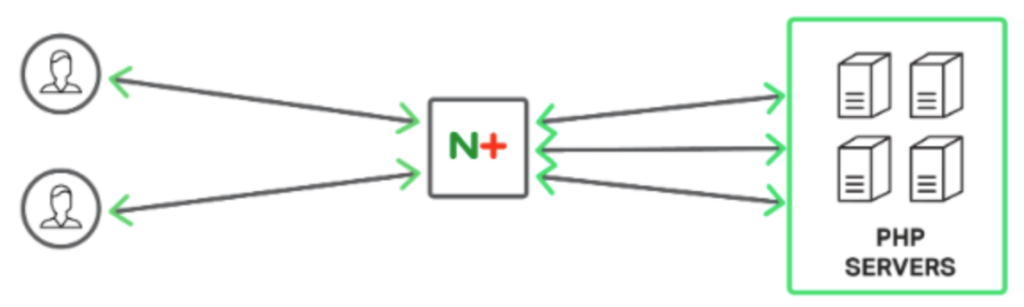

Step 8. Implement Load Balancing

The most effective way to expand your website’s capacity and availability is to run multiple application servers and load balance traffic among them. Load balancing is one of the most widely used capabilities of NGINX, and NGINX Plus adds more sophisticated load-balancing algorithms and functionality.

NGINX load balancing begins with creating a server group made up of the servers you’ll be balancing among. The following configuration code includes a server weight – if you have a more capable server, assign it a larger weight to increase the proportion of traffic sent to it.

```nginx
upstream stream_backend {
    server backend1.example.com:12345 weight=5;
    server backend2.example.com:12345;
    server backend3.example.com:12346;
}
```
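To see how weight=5 on backend1 plays out, here is a small Python simulation of smooth weighted round-robin, the weighted variant of Round Robin implemented in NGINX’s round-robin code. It is a sketch for intuition rather than NGINX’s source: it ignores failed or unavailable servers, and it treats servers without a weight parameter as weight 1, which is the NGINX default.

```python
def smooth_weighted_round_robin(weights, n):
    """Return n picks from {server: weight} using smooth weighted round-robin."""
    current = {server: 0 for server in weights}
    total = sum(weights.values())
    picks = []
    for _ in range(n):
        best = None
        for server, weight in weights.items():
            # Every server's running score grows by its weight on each pick.
            current[server] += weight
            # Strict '>' keeps the earlier server on ties.
            if best is None or current[server] > current[best]:
                best = server
        # The chosen server pays back the total weight, so others catch up.
        current[best] -= total
        picks.append(best)
    return picks


weights = {"backend1": 5, "backend2": 1, "backend3": 1}
print(smooth_weighted_round_robin(weights, 7))
# ['backend1', 'backend1', 'backend2', 'backend1', 'backend3', 'backend1', 'backend1']
```

Over each cycle of seven requests backend1 gets five and the others one each, and the lighter servers are interleaved rather than receiving their share in a burst.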

Load balancing on NGINX Plus offers a range of algorithms for routing new requests, including Round Robin (the default), Least Connections, Least Time (to respond), generic Hash (for instance, of the URL), and IP Hash (of the client IP address). For Python and other application languages, using the optimal load-balancing algorithm can be very effective, since any one request can take a long time; getting each new request to the least-busy server is a powerful optimization.
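The benefit of Least Connections for slow Python requests is easy to see in a simplified model: each new request goes to the server with the fewest active connections, adjusted for weight. In NGINX configuration you enable this with the least_conn directive inside the upstream block. The function below is an illustrative sketch with hypothetical server names; NGINX’s least_conn also breaks ties with weighted round-robin, which this sketch omits, and Least Time (an NGINX Plus feature) is not modeled.

```python
def least_connections(active, weights=None):
    """Pick the server with the fewest active connections relative to its weight."""
    weights = weights or {}
    # Compare active/weight; servers without an explicit weight count as weight 1.
    # On a tie, min() returns the first server encountered.
    return min(active, key=lambda server: active[server] / weights.get(server, 1))


# A server stuck on long-running requests stops attracting new ones.
print(least_connections({"app1": 3, "app2": 1, "app3": 2}))  # app2
```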

Step 9. Implement Session Persistence and Session Draining

The web is designed for stateless interaction by default; when state-specific information is needed, there are several ways to implement it. If you have state on the application server, you need to have the same server handle all requests from a given user for the duration of the session. This is referred to as session persistence.

NGINX Plus offers advanced session persistence capabilities via the sticky directive: a sticky cookie, which NGINX Plus adds to the first response to identify the server that sent it; sticky learn, which picks up session identifiers that the application creates and uses them to send repeat requests to the same server; and sticky routes, which let you choose the server based on route information in the request.

The following code shows the sticky directive in use.

```nginx
upstream backend {
    server webserver1;
    server webserver2;
    sticky cookie srv_id expires=1h domain=.example.com path=/;
}
```
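Conceptually, the sticky cookie method works like the toy Python model below: the first response carries a cookie identifying the chosen server, and later requests that present the cookie go back to that server. The class name, the SHA-256-based identifier, and the round-robin first choice are assumptions made for illustration; NGINX Plus generates and interprets the srv_id cookie itself, and the expires, domain, and path parameters shown above are not modeled.

```python
import hashlib
from itertools import cycle


class StickyCookieBalancer:
    """Toy model of cookie-based session persistence (not NGINX Plus internals)."""

    def __init__(self, servers, cookie_name="srv_id"):
        self.cookie_name = cookie_name
        # Opaque per-server identifiers, so the cookie does not expose addresses.
        self.by_id = {hashlib.sha256(s.encode()).hexdigest()[:16]: s for s in servers}
        self.id_for = {server: sid for sid, server in self.by_id.items()}
        self._next_server = cycle(servers)

    def route(self, cookies):
        """Return (server, cookies_to_set) for a request carrying `cookies`."""
        server = self.by_id.get(cookies.get(self.cookie_name))
        if server is not None:
            # Returning client: keep it on the same server, no new cookie needed.
            return server, {}
        # New client or unknown cookie: pick round-robin, then pin with a cookie.
        server = next(self._next_server)
        return server, {self.cookie_name: self.id_for[server]}
```

A returning client that sends back the cookie it received always lands on the same server, which is what keeps in-memory session state on that server valid.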

Session draining is a useful part of your toolkit for managing multiple load-balanced servers. When you set the drain parameter on a server directive in an upstream group, NGINX Plus lets that server “wind down”: it stops sending the server new sessions but continues to send it requests that are bound to it through session persistence, until those sessions end. To put the revised configuration (with the drain parameter in force) into use, you can edit the configuration file and have NGINX Plus reload it, or use the on-the-fly configuration API.
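If you use the API rather than editing the configuration file, draining a server is a single HTTP request. The sketch below builds that request with Python’s standard library. It assumes that NGINX Plus exposes its REST API (the api directive with write=on) at the hypothetical address http://127.0.0.1:8081/api, that the upstream group has a shared-memory zone so it can be changed at runtime, and that API version 6 is available; check which version your release supports. Server IDs come from a GET on the same servers endpoint.

```python
import json
import urllib.request


def drain_request(api_base, upstream, server_id, api_version=6):
    """Build (but do not send) a PATCH request that puts one upstream server into drain mode."""
    url = f"{api_base}/api/{api_version}/http/upstreams/{upstream}/servers/{server_id}"
    body = json.dumps({"drain": True}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )
```

To apply it, pass the request to urllib.request.urlopen, for example urllib.request.urlopen(drain_request("http://127.0.0.1:8081", "backend", 1)). Keeping request construction separate from sending makes it easy to log or review the change before it reaches a live load balancer.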

For more on session persistence and session draining, see Session Persistence with NGINX Plus.

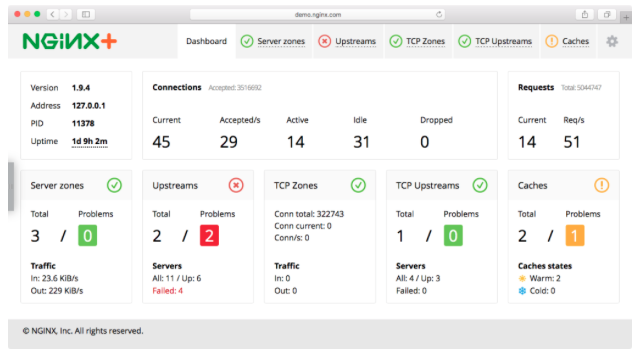

Step 10. Use Monitoring and Management

When your server configuration is more complex, monitoring and management become critical for maintaining high performance and avoiding downtime. NGINX Plus runs active health checks that let you know when a server may be in trouble, but you need to be monitoring your system to act on that information before problems occur.

With NGINX Plus, you get a built-in dashboard for monitoring the health of your NGINX server. You can track data flow into and out of your NGINX servers as a group and drill down to details about each particular server.

NGINX Plus also provides real-time statistics in JSON format. You can send this data to monitoring tools such as Datadog, Dynatrace, or New Relic, or into your own tool.
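As an example of feeding that JSON into your own tooling, the Python sketch below summarizes upstream server states. It assumes the response shape of the NGINX Plus API’s http/upstreams endpoint: an object keyed by upstream name, where each upstream has a peers array whose entries carry server and state fields. Verify the field names against the API documentation for your release. The URL in the docstring and the sample data used to exercise the functions are hypothetical.

```python
import json
import urllib.request


def fetch_upstreams(url):
    """Fetch upstream stats, e.g. from a hypothetical http://127.0.0.1:8081/api/6/http/upstreams."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return json.load(resp)


def summarize_upstreams(stats):
    """Map each upstream name to a count of its peers by state."""
    summary = {}
    for name, upstream in stats.items():
        counts = {}
        for peer in upstream.get("peers", []):
            state = peer.get("state", "unknown")
            counts[state] = counts.get(state, 0) + 1
        summary[name] = counts
    return summary


def problem_peers(stats):
    """List (upstream, server, state) for every peer that is not 'up'."""
    return [(name, peer.get("server"), peer.get("state"))
            for name, upstream in stats.items()
            for peer in upstream.get("peers", [])
            if peer.get("state") != "up"]
```

Run on a schedule, problem_peers gives you a short list to alert on, while summarize_upstreams is a compact metric to chart alongside the dashboard.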

Conclusion

Python has a strong following among a wide range of developers, who’ve come to love the language’s expressiveness, high performance, and extensive community support. Precisely because it is so capable, however, Python can help you create websites that attract many simultaneous users and therefore need performance support.

NGINX is useful for single-server implementations (Part I) and multiserver implementations (this post), across your own servers and cloud hosts, giving you tremendous flexibility and capability.

NGINX Plus really shines in multiserver deployments. Start your free 30-day trial today or contact us for a live demo.

The post Maximizing Python Performance with NGINX, Part II: Load Balancing and Monitoring appeared first on NGINX.

Source: Maximizing Python Performance with NGINX, Part II: Load Balancing and Monitoring
