Optimizing Web Servers for High Throughput and Low Latency

This post is adapted from a presentation at nginx.conf 2017 by Alexey Ivanov, Site Reliability Engineer at Dropbox. You can view the complete presentation, Optimizing Web Servers for High Throughput and Low Latency, on YouTube.

Table of Contents

| Time | Section |
| --- | --- |
| 3:13 | Hardware |
| 14:45 | Sysctl tuning Do’s and Don’ts |
| 16:10 | Libraries |
| 18:13 | TLS |
| 20:42 | NGINX |
| 22:30 | Buffering |
| 24:41 | Again, TLS |
| 30:15 | Wrapping Up |
| 33:05 | Q&A |

First, let me start with a couple of notes. This presentation will move fast, really fast, and it’s a bit dense. Sorry about that. I just needed to pack a lot of stuff into a 40-minute talk.

A transcript of this talk is available on the Dropbox Tech Blog. It’s an extended version with examples of how to profile your stuff, how to monitor for any issues, and how to verify that your tuning has worked. If you like this talk, go check out the blog post. It should be somewhere at the top of Hacker News by now. Seriously, go read it. It has a ton of stuff. You might like it.

About me, very quickly: I was an SWE (Software Engineer) at Yandex Storage. We were mostly doing high-throughput stuff. I was an SRE (Site Reliability Engineer) at Yandex Search. We were mostly doing low-latency stuff. Now, I’m an SRE for Traffic at Dropbox, and we’re doing both of these things at the same time.

About Dropbox Edge: it’s an NGINX-based proxy tier designed to handle low-latency stuff – website views, for example – along with high-throughput data transfers. Block uploads, file uploads, and file downloads are high-throughput data types. And we’re handling both of them with the same hardware at the same time.

A few disclaimers before we start:

This is not a Linux performance analysis talk. If you need to know more about eBPF, perf, or bcc, you should read Brendan Gregg’s blog.

This is also not a TLS best practices compilation. I’ll be mentioning TLS a lot, but all the tunings you apply will have huge security implications, so read Bulletproof SSL and TLS or consult with your security team. Don’t compromise security for speed.

This is not a browser performance talk, either. The tunings that’ll be described here will be mostly aimed at server-side performance, not client-side performance. Read High Performance Browser Networking by Ilya Grigorik.

Last, but not least, the main part of the talk is: do not cargo-cult optimizations. Use the scientific method: apply stuff and verify each and every tunable by yourself.

We’ll start with the lowest layers, and work our way up to the software.

3:13 Hardware

On the lowest layers, we have hardware.

When you’re picking your CPU, look for AVX2-capable CPUs. You’ll need at least Haswell. Ideally, you’ll want Broadwell, Skylake, or the more recent EPYC, which also performs well.

For lower latencies, avoid NUMA. You’ll probably want to disable HT. If you still have multi-node servers, try utilizing only a single node. That will help with latencies on the lowest levels.

For higher throughput, look for more cores. More cores are generally better. Maybe NUMA isn’t that important here.

For NICs, 25G or 40G NICs are probably the best choice here. They don’t cost that much; you should go for them. Also, look for active mailing lists and communities around drivers. When you’re troubleshooting driver issues, that’ll be very important.

For hard drives, try using Flash-based drives. If you’re buffering data or caching data, that’ll also be very important.

Let’s move to the kernel stuff.

Right in-between the kernel and your hardware, there will be firmware and drivers. The rule of thumb here: upgrade frequently, but slowly. Don’t go bleeding edge, stay a couple of releases behind. Don’t jump to major versions when they’re just released. Don’t do dumb stuff, but update frequently. Also, try to decouple your driver updates from your kernel upgrades. That will be one thing less to troubleshoot, one thing less to think about when you upgrade your kernel. For example, you can pack your drivers with DKMS (Dynamic Kernel Module Support) or pre-built drivers for all your kernel versions. Decoupling really helps troubleshooting.

On the CPU side, your main tool will probably be turbostat, which lets you look into the MSRs (model-specific registers) of the processor and see what frequencies it’s running at. If you’re not satisfied with the time the CPU spends in idle states, you can set your governor to “performance.” If you’re still not satisfied, you can set x86_energy_perf_policy to “performance.” If the CPU is still spending a lot of time in idle states, or you’re dropping packets for some reason, try setting cpu_dma_latency to zero. But that’s for very low-latency use cases.

On the memory side, it’s very hard to give generic memory management advice, but in general, you should set THP (Transparent Huge Pages) to madvise. Otherwise, you may accidentally get a 10x slowdown when you’re going for a 10% to 20% increase in speed. Unless you went with utilizing a single NUMA node, you’ll probably want to set zone_reclaim_mode to zero.
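As a quick way to verify the THP setting, here is a minimal sketch that parses the standard sysfs file, where the active mode is the one in square brackets. The helper names (`active_thp_mode`, `check_thp`) are made up for this illustration.

```python
from pathlib import Path

# Standard sysfs location of the THP mode on Linux.
THP_ENABLED = Path("/sys/kernel/mm/transparent_hugepage/enabled")


def active_thp_mode(sysfs_text: str) -> str:
    """Return the bracketed (active) mode from e.g. 'always [madvise] never'."""
    for token in sysfs_text.split():
        if token.startswith("[") and token.endswith("]"):
            return token[1:-1]
    raise ValueError(f"no active mode found in {sysfs_text!r}")


def check_thp(path: Path = THP_ENABLED) -> str:
    """Read the live setting; return 'unknown' if the file is missing or odd."""
    try:
        return active_thp_mode(path.read_text())
    except (OSError, ValueError):
        return "unknown"


if __name__ == "__main__":
    print("THP mode:", check_thp())
```

If this prints `always` on a latency-sensitive box, that is a hint to revisit the setting and measure before and after.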

Speaking about NUMA, that’s a huge subject, really huge. It all comes down to modern servers being multiple servers locked together in a single piece of silicon. You can ignore all that, disable it in the BIOS, and get just mediocre performance across the board. You can deny it, for example, by buying single-node servers. That’s probably the best case, if you can afford it. Or you can embrace NUMA and try to treat each and every NUMA node as a separate server with separate PCI Express devices, separate CPUs, and separate memory.

Let’s move to network cards. Here, you’ll mostly be using ethtool. You’ll be using it both for looking at the stats of your hardware and for tuning it.

Let’s start with ring buffer sizes. The rule of thumb here is that more is generally better. There are lots of caveats to that – lots of them, but bigger ring buffers will protect you against some minor stalls or packet bursts. Don’t increase them too much, though, because it will also increase cache pressure, and on older kernels, it will incur bufferbloat.

The next thing to tune is interrupt affinity for low latency. You should really limit the number of queues you have to a single NUMA node, then select a NUMA node where your network card is terminated physically, and then pin all these queues to the CPUs one-to-one.
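The one-to-one pinning described above can be sketched as follows. The kernel expects a hexadecimal CPU bitmask in `/proc/irq/<irq>/smp_affinity`, split into comma-separated 32-bit groups on machines with many CPUs. The IRQ numbers and CPU list below are hypothetical; on a real box you would take them from `/proc/interrupts` and from the NUMA node the NIC is attached to. The sketch only computes the plan and does not write anything.

```python
def cpu_to_affinity_mask(cpu: int) -> str:
    """Hex bitmask with only `cpu` set, in comma-separated 32-bit groups."""
    hex_mask = f"{1 << cpu:x}"
    groups = []
    while hex_mask:
        groups.append(hex_mask[-8:])  # lowest 32 bits first
        hex_mask = hex_mask[:-8]
    return ",".join(reversed(groups))  # most significant group goes first


def one_to_one_plan(irqs: list[int], cpus: list[int]) -> dict[int, str]:
    """Map each queue IRQ to exactly one CPU of the chosen NUMA node."""
    if len(irqs) > len(cpus):
        raise ValueError("more queues than CPUs; reduce the queue count first")
    return {irq: cpu_to_affinity_mask(cpu) for irq, cpu in zip(irqs, cpus)}


if __name__ == "__main__":
    # Hypothetical: four RX/TX queue IRQs pinned to CPUs 0-3 of the NIC's node.
    for irq, mask in one_to_one_plan([120, 121, 122, 123], [0, 1, 2, 3]).items():
        print(f"/proc/irq/{irq}/smp_affinity <- {mask}")
```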

For high-throughput stuff, generally it’s OK to just spread interrupts across all of your CPUs. There are, once again, some caveats around that: you may need to tune stuff a bit, for example by running interrupt processing on one NUMA node and your application on the other NUMA nodes. You have that flexibility, but verify the results yourself.

Interrupt coalescing: for low latency, generally, you should disable adaptive coalescing, and you should lower microseconds and frames. Just don’t set them too low. If you set them too low, you’ll get an interrupt storm, and it will actually increase your latencies.

For high throughput, you can either depend on the hardware to do the right thing by enabling adaptive coalescing, or just increase microseconds and frames to some fairly large value. That helps especially if you have huge ring buffers.

Then, on hardware offloads inside the NIC: recent NICs are very smart. I could probably give a separate talk just about hardware offloads, but there are still a couple of rules of thumb you can apply. Don’t use LRO, use GRO: LRO will break stuff miserably, especially on the router side. Be careful with TSO: it can corrupt packets, it may stall your network card, and stuff may break. TSO is very complicated. It’s in the hardware, so it’s highly dependent on firmware versions and drivers. GSO, on the other hand, is OK. Again, on older kernels, either TSO or GSO will lead to bufferbloat, so be careful there also.

On the packet steering side: generally, on modern hardware, it’s OK to rely on the network card to do the right thing. If you have old hardware, some 10G cards can only RSS to the first 16 queues, and if you have 64 CPUs, they won’t be able to steer packets across all of them. On the software side, you have RPS. If your hardware can’t steer efficiently, you can still do it in software: use RPS and spread packets across more CPUs. Or, if your hardware can’t RSS the traffic type you’re using (IPinIP or GRE tunneling, for example), then again, RPS can help. XPS is generally OK to enable. It’s free performance; just enable it.

Now we’re up to the network stack.

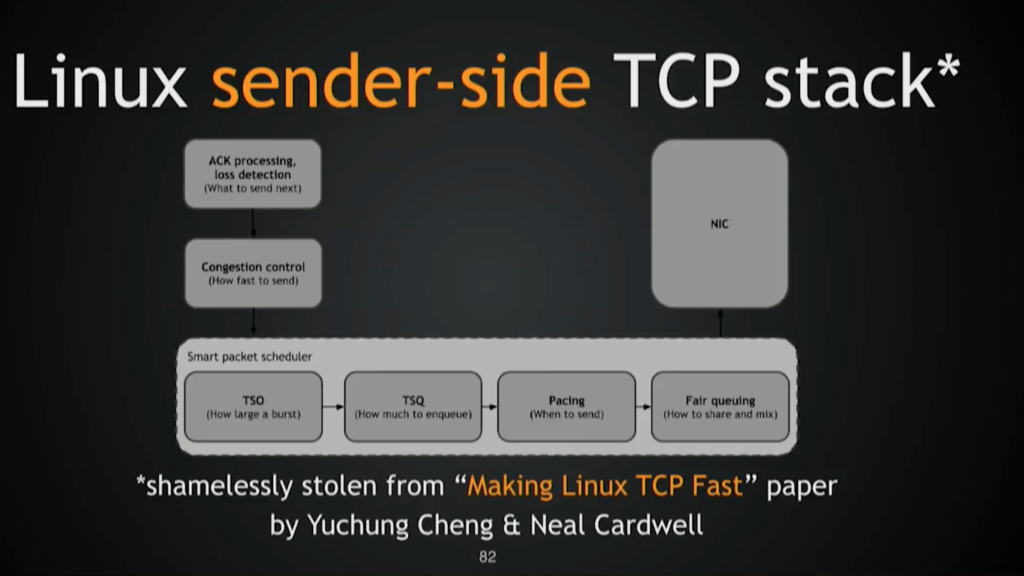

This is a great picture stolen from the Making Linux TCP Fast paper, basically from the people who brought you BBR (Bottleneck Bandwidth and RTT). It covers Linux network stack performance improvements over the last four years. It’s a great paper. Go read it. It’s fun to read, and it’s a very good summary of a huge amount of work.

We’ll start again with the lowest levels and go up. Fair queuing and pacing: fair queuing is responsible for sharing bandwidth fairly across multiple flows, as the name implies. Pacing spaces out packets within a flow according to the sending rate set by the congestion control protocol. When you combine both of them, you get fair queuing and pacing. This is a requirement for BBR, but you can still use it with Cubic, for example, or with any other congestion control protocol; it greatly decreases retransmit rates. We’ve observed decreases of 15%, 20%, 25%, which improves performance. Just enable it: again, free performance.
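To make the idea of pacing concrete, here is a toy model (not the kernel’s fq implementation): instead of sending a burst back to back, each packet leaves only after the previous one has been “paid for” at the pacing rate. The function name and the numbers are illustrative.

```python
def paced_departures(packet_sizes: list[int], rate_bytes_per_s: float) -> list[float]:
    """Departure time (seconds) of each packet when paced at a fixed rate."""
    now = 0.0
    departures = []
    for size in packet_sizes:
        departures.append(now)
        # The next packet may leave once this one has drained at the pacing rate.
        now += size / rate_bytes_per_s
    return departures


if __name__ == "__main__":
    # Three 1500-byte packets paced at 1.5 MB/s leave roughly 1 ms apart.
    for i, t in enumerate(paced_departures([1500, 1500, 1500], 1_500_000)):
        print(f"packet {i}: t = {t * 1000:.3f} ms")
```

Spreading the burst this way keeps queues along the path shorter, which is one plausible reason for the lower retransmit rates mentioned above.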

For congestion control: there’s been a lot of work done in this area; some by Google, some by Facebook, and some by universities. People are usually using tcp_cubic by default.

We’ve been looking at BBR for quite a long time, and this is data from our Tokyo PoP. We had a six-hour experiment some months ago, and you can see that download speed went up for pretty much all percentiles. The clients we considered were bandwidth-limited, basically TCP-limited in some sense, or congestion control-limited. But enough of that.

Let’s talk about ACK processing and loss detection. Again, this is a very frequent theme in my talk: use newer kernels. With newer kernels, you’ll get new heuristics turned on by default, and old heuristics will be slowly retired. You don’t need to do anything; you upgrade your kernel and you get these for free. So, upgrade your kernel. Again, it doesn’t mean that you need to stay on the bleeding edge. Use the same guidelines as for firmware and drivers: upgrade slowly, but frequently.

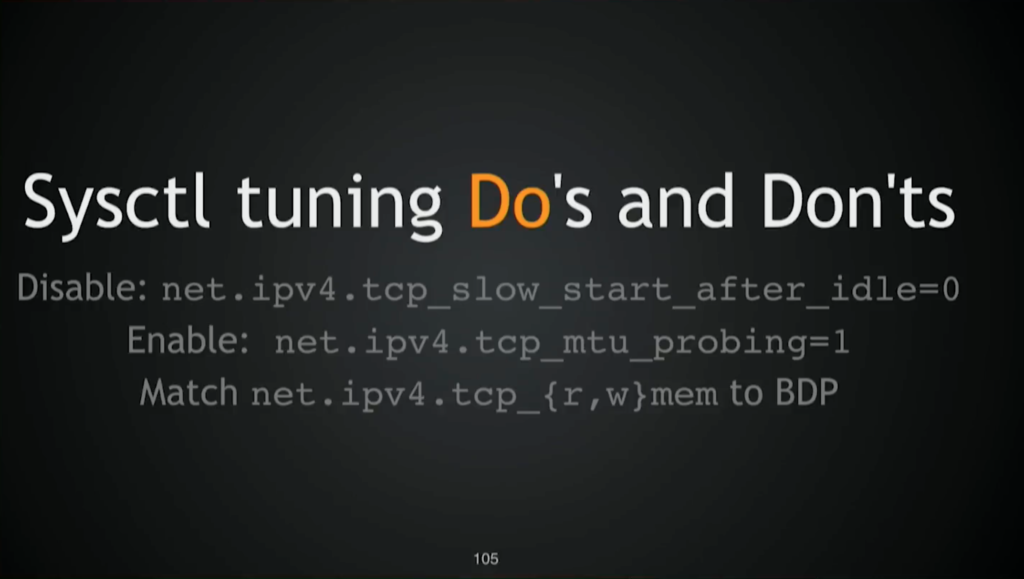

14:45 Sysctl tuning Do’s and Don’ts

You can’t actually do any network optimization talk without mentioning sysctl. Here are some do’s and don’ts.

Let’s start with stuff you shouldn’t do. Don’t enable TIME-WAIT recycle (tcp_tw_recycle). It was already broken for NAT (Network Address Translation) users; it will be even more broken in newer kernels. And it’ll be removed, so don’t use it. Don’t disable timestamps unless you know what you’re doing. It has very non-obvious implications, for syncookies, for example. And there are many more, so unless you know what you’re doing, unless you’ve read the code, don’t disable them.

Stuff you should do, on the other hand: you should probably disable tcp_slow_start_after_idle. There’s no reason to keep it on. You should also enable tcp_mtu_probing, just because the Internet is full of ICMP black holes. Read and write memory (tcp_rmem and tcp_wmem): you should bump it up. Don’t overdo it, though; just match it to the BDP (bandwidth-delay product). Otherwise, you may get bufferbloat inside the kernel.
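Matching socket memory to the BDP is simple arithmetic: bandwidth times round-trip time. A minimal sketch follows; the link speed and RTT are example inputs, and the `min` and `default` values in the generated line are placeholder assumptions, not recommendations.

```python
def bdp_bytes(bandwidth_bits_per_s: int, rtt_ms: int) -> int:
    """Bandwidth-delay product in bytes: (bits/s / 8) * (ms / 1000)."""
    return bandwidth_bits_per_s * rtt_ms // 8000


def tcp_mem_sysctl(name: str, max_bytes: int,
                   min_bytes: int = 4096, default_bytes: int = 131072) -> str:
    """Format a 'min default max' triple for tcp_rmem or tcp_wmem.

    min_bytes and default_bytes are illustrative placeholders.
    """
    return f"net.ipv4.{name} = {min_bytes} {default_bytes} {max_bytes}"


if __name__ == "__main__":
    # Example: a client on a 1 Gbit/s path with a 20 ms RTT.
    bdp = bdp_bytes(1_000_000_000, 20)
    print(f"BDP: {bdp} bytes")
    print(tcp_mem_sysctl("tcp_rmem", bdp))
    print(tcp_mem_sysctl("tcp_wmem", bdp))
```

A buffer much larger than the BDP of your typical client buys no extra throughput, which is why the advice is to match it rather than maximize it.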

Oh, that went fast!

16:10 Libraries

Now we’re at library levels. We’ll discuss the commonly used libraries in the web servers.

If all the previous do’s and don’ts were basically applicable to pretty much any high-load network server, these are more web-specific.

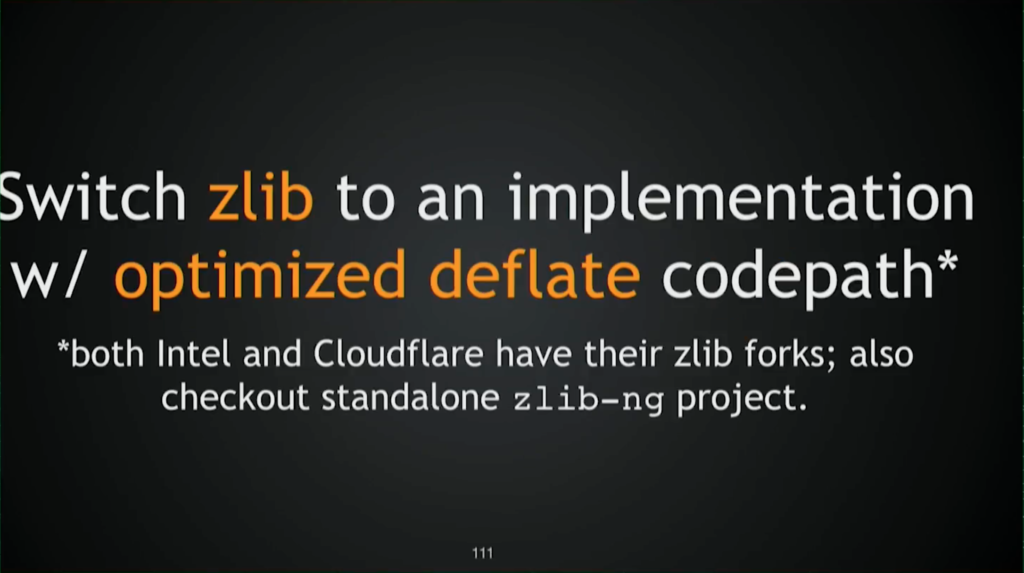

If you run perf top and see deflate there, then maybe it’s time to switch to a more optimized zlib version. Intel and Cloudflare both have zlib forks, for example.

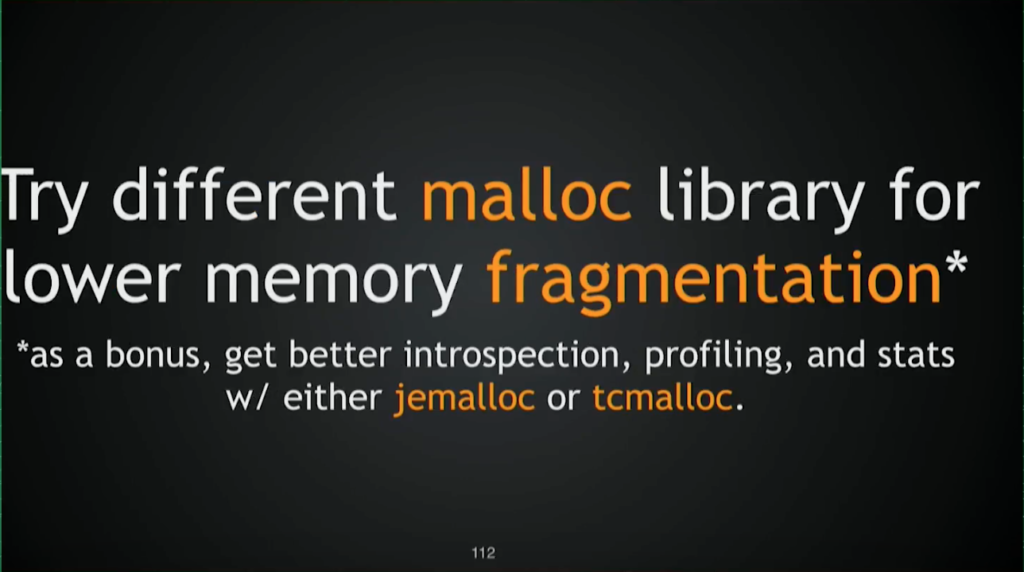

If you see stuff around malloc (either memory fragmentation, or malloc taking more time than it should), then you can switch your malloc implementation to jemalloc or tcmalloc. That also has the great benefit of decoupling your application from your operating system. It has its own drawbacks, of course, but generally, you don’t want to troubleshoot new malloc behavior when you switch or upgrade operating systems. Decoupling is a good thing. You’ll also get introspection, profiling, stats, and so on.

If you see PCRE symbols in perf top, then maybe it’s time to enable JIT. I would say it’s a bit questionable. If you have lots of regular expressions in your configs, maybe you need to just rewrite it. But again, you can enable JIT and see how it behaves in your particular workload.

18:13 TLS

TLS is a huge subject, but we’ll try to quickly cover it. First, you start by choosing your TLS library. Right now, you can use OpenSSL, LibreSSL, and BoringSSL. There are other forks, of course, but the general idea is that the library you choose will greatly affect both your security and your performance, so be careful about that.

Let’s talk about symmetric encryption. If you’re transferring huge files, then you’ll probably see OpenSSL symbols in perf top. Here, I can advise you to enable ChaCha. First, it will diversify your cipher suites. You will not be AES-only, which is good. Second, if your library supports it, you can enable equal-preference cipher groups and let the client decide which cipher to use. Your server will still dictate the order of preference, but within the same group, the client can decide. For example, low-end mobile devices will pick ChaCha instead of AES because they don’t have hardware support for AES.
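The selection logic behind equal-preference groups can be modeled in a few lines. This is a simplified sketch of the idea, not any TLS library’s actual negotiation code: the server walks its groups in order, and inside the first group that matches, the client’s own ordering wins. The function name is made up for illustration.

```python
from typing import Optional


def pick_cipher(server_groups: list[list[str]],
                client_prefs: list[str]) -> Optional[str]:
    """Server orders the groups; the client orders ciphers within a group."""
    for group in server_groups:
        # Keep the client's ordering, restricted to this server group.
        candidates = [cipher for cipher in client_prefs if cipher in group]
        if candidates:
            return candidates[0]
    return None  # no cipher in common


if __name__ == "__main__":
    groups = [
        # Equal-preference group: AES-GCM and ChaCha20 are "tied".
        ["ECDHE-ECDSA-AES128-GCM-SHA256", "ECDHE-ECDSA-CHACHA20-POLY1305"],
        ["ECDHE-ECDSA-AES256-GCM-SHA384"],
    ]
    mobile = ["ECDHE-ECDSA-CHACHA20-POLY1305", "ECDHE-ECDSA-AES128-GCM-SHA256"]
    print(pick_cipher(groups, mobile))
```

A client without AES hardware support that lists ChaCha first gets ChaCha, while the server still controls which group is considered first.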

For asymmetric encryption, you should use ECDSA certs along with your RSA certs, just because they’re 10 times faster on the server side. Another piece of advice: don’t use uncommon crypto parameters. Let me elaborate on this. First of all, uncommon crypto is not really the best choice from a security standpoint, so you should use it only if you know what you’re doing. Second, a great example is 4k RSA keys, which are 10 times slower than 2k RSA keys. Don’t use them unless you really need them, unless you want to be 10 times slower on the server side. Again, the rule of thumb here is that the most optimized crypto is the most common one. The crypto that is used most will be the most optimized.

20:42 NGINX

Finally, we’re up to the NGINX level. Here, we’ll talk about NGINX-specific stuff.

Let’s start with compression. Here, you need to decide what to compress first. Compression starts with mime.types and gzip_types. You can probably just auto-generate them from mime-db and just have all the types defined so NGINX knows about all registered mime types.

Then, you need to decide when to compress stuff. I had a post on the Dropbox Tech Blog about deploying Brotli for static content at Dropbox. The main idea is that if your data is static, you can pre-compress it to get the maximum possible compression ratio. It will be free at request time because it’s an offline operation; it’s part of your static build step. So, when to compress is a question. Try to pre-compress all static content.
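A pre-compression build step can be as small as the sketch below. It uses gzip from the Python standard library rather than Brotli (which needs a third-party package) and writes `name.gz` next to each file, which is the layout NGINX’s `gzip_static` directive looks for, assuming that module is compiled in. The function name is illustrative.

```python
import gzip
import shutil
from pathlib import Path


def precompress(path: Path) -> Path:
    """Write a maximum-level gzip copy next to `path` and return its location."""
    target = path.with_name(path.name + ".gz")
    with path.open("rb") as src, gzip.open(target, "wb", compresslevel=9) as dst:
        shutil.copyfileobj(src, dst)
    return target


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as tmp:
        asset = Path(tmp) / "app.js"
        asset.write_bytes(b"console.log('hello');\n" * 500)
        compressed = precompress(asset)
        print(asset.stat().st_size, "->", compressed.stat().st_size, "bytes")
```

Because this runs offline, the slowest compression level costs nothing at request time.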

How to compress is also a question. You can use gzip. NGINX also has a third-party ngx_brotli module. You can use them both. You also need to decide on compression levels. For example, for dynamic stuff, you need to optimize for the full round-trip time. Round-trip time here means compression, transfer, and decompression, which means maximum compression levels are probably not the right thing for dynamic content.
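One way to pick a level for dynamic content is to measure size and CPU time on a payload that looks like your own responses. The sketch below uses zlib from the standard library; the payload is synthetic, so treat the numbers it prints as illustrative only.

```python
import time
import zlib


def measure(payload: bytes, level: int) -> tuple[int, float]:
    """Return (compressed size in bytes, seconds spent compressing)."""
    start = time.perf_counter()
    compressed = zlib.compress(payload, level)
    elapsed = time.perf_counter() - start
    return len(compressed), elapsed


if __name__ == "__main__":
    # Synthetic stand-in for a dynamic HTML response.
    payload = b"".join(
        b"<tr><td>user-%d</td><td>%d bytes</td></tr>\n" % (i, i * 37)
        for i in range(20_000)
    )
    for level in (1, 6, 9):
        size, seconds = measure(payload, level)
        print(f"level {level}: {size} bytes in {seconds * 1000:.1f} ms")
```

The extra milliseconds spent at the highest level are paid on every request, while the bytes saved over a middle level are often small; that is the trade-off to check against your own data.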

22:30 Buffering

Buffering: there’s lots and lots of buffering along the way from your application through NGINX to the user, starting from socket buffers to library buffers, such as gzip or TLS. But here, we’ll only discuss NGINX internal buffers, not library buffers or socket buffers.

Buffering inside NGINX has its pros. For example, it gives you backend protection: if a client sends you a request byte by byte, NGINX won’t hold a backend thread; it will get the full request first and then send it to the backend. On the response side, it’s the same. If your client is malicious and reads one byte at a time from you, NGINX will buffer the response from your backend and free up that backend thread, which can then do useful work.

Another pro: buffering on the request side also lets NGINX retry requests. When it has the full request, it can retry it against multiple backends in case one attempt fails.

Buffering also has its cons. For example, it will increase latency, and it will also increase memory and I/O usage.

Here, you need to decide statically, at config generation time, what you want to buffer and what not: where you want buffering, how much buffering, and where you want to buffer on disk. That’s not always possible. If you can’t decide up front, you can always leave it up to the application, using the X-Accel-Buffering header. In its response, your application can say whether it wants the response to be buffered or not.
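On the application side, this comes down to setting one response header. Here is a minimal WSGI sketch; the `/events` path and the rule “streaming endpoints opt out of buffering” are assumptions made up for the example, while `X-Accel-Buffering` with the values `yes` and `no` is the header NGINX honors.

```python
def app(environ, start_response):
    """Tiny WSGI app that tells NGINX whether to buffer this response."""
    # Hypothetical rule: anything under /events is a long-lived stream.
    streaming = environ.get("PATH_INFO", "").startswith("/events")
    headers = [
        ("Content-Type", "text/plain"),
        ("X-Accel-Buffering", "no" if streaming else "yes"),
    ]
    start_response("200 OK", headers)
    return [b"ok\n"]


if __name__ == "__main__":
    from wsgiref.simple_server import make_server

    # Serve locally on an arbitrary port; NGINX would proxy to this address.
    with make_server("127.0.0.1", 8000, app) as server:
        server.serve_forever()
```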

24:41 Again, TLS

Again, TLS. We talk a lot about that stuff.

On the NGINX side, you can save CPU cycles on both the client side and the server side by using session resumption. NGINX supports session resumption through session IDs. It’s widely supported on the client side also, but it requires server-side state. Each session will consume some amount of memory, and it is local to a box. Therefore, you’ll need either “sticky” load balancing or a distributed cache for these sessions on your server side. And it only slightly affects perfect forward secrecy (PFS). So, it’s good.

Alternatively, you can use session resumption with tickets. Tickets are mostly supported in browser land; for other clients, it depends on their TLS library. Tickets need no server-side state, so they’re basically free. But this approach highly depends on your key-rotation infrastructure and the security of that infrastructure, because it greatly limits PFS. It limits it to the lifetime of a single ticket key, which means that if attackers get hold of that key, they can retrospectively decrypt all the traffic they collected. Again, there are lots of security implications to any tuning you do at the TLS layer, so be careful about that. Don’t compromise your users’ privacy for performance.
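Ticket key rotation is conceptually a short sliding window of keys: the newest key encrypts new tickets, and a few older ones are kept only so that recently issued tickets still decrypt. A minimal sketch of that window follows; the function name and the window size of three are assumptions, and a real deployment also has to distribute the keys securely to every box. The 80-byte size matches one of the key file sizes NGINX accepts for `ssl_session_ticket_key`.

```python
import os

TICKET_KEY_BYTES = 80  # NGINX accepts 80-byte (or 48-byte) ticket key files


def rotate_ticket_keys(keys: list[bytes], keep: int = 3) -> list[bytes]:
    """Prepend a fresh random key and drop the oldest beyond `keep` keys.

    Index 0 is the key used to encrypt new tickets; the rest only decrypt.
    """
    fresh = os.urandom(TICKET_KEY_BYTES)
    return [fresh] + keys[: keep - 1]


if __name__ == "__main__":
    keys: list[bytes] = []
    for _ in range(5):  # e.g. one rotation per scheduled interval
        keys = rotate_ticket_keys(keys)
    print(len(keys), "keys in the window, newest first")
```

The shorter the rotation interval and the window, the smaller the slice of recorded traffic a leaked key can expose.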

Speaking about privacy, OCSP stapling: you should really just do that. It may shave off some RTTs. It can also improve your availability. But mostly, it will protect your users’ privacy. Just do that. NGINX also supports it.

TLS record sizes: the main idea is that your TLS library will break down data into chunks. Until the client receives the full chunk, it cannot decrypt it. Therefore, at config generation time, you need to decide which servers are low-latency and which servers are high-throughput. If you can’t decide that at config generation time, then Cloudflare, for example, has a dynamic TLS record patch that you can apply, and it will gradually increase your record sizes as the request streams. Of course, you may say, “Why don’t you just set it to 1k?” Because if you set it too low, it will incur a lot of overhead on the network layer and on the CPUs. So, don’t set it too low.
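The overhead side of that trade-off is easy to estimate. The sketch below counts records and framing bytes for a given payload. The default of 29 bytes per record is an assumption that corresponds to TLS 1.2 with AES-GCM (5-byte header, 8-byte explicit nonce, 16-byte tag); other ciphers and protocol versions differ, and the extra per-record CPU cost is not modeled at all.

```python
def record_overhead(payload_bytes: int, record_size: int,
                    per_record_overhead: int = 29) -> tuple[int, int]:
    """Return (number of records, total framing bytes) for a payload."""
    records = -(-payload_bytes // record_size)  # ceiling division
    return records, records * per_record_overhead


if __name__ == "__main__":
    payload = 1_000_000  # a 1 MB response
    for size in (1024, 4096, 16384):
        records, extra = record_overhead(payload, size)
        print(f"{size:>5}-byte records: {records} records, "
              f"{extra} bytes of framing ({extra / payload:.2%})")
```

Small records let the client start decrypting sooner, while large records amortize the framing and per-record crypto work; that is why the choice depends on whether a server is latency-bound or throughput-bound.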

A really huge topic: eventloop stalls. NGINX has a great architecture. It’s asynchronous and event-based. It’s parallelized by having multiple shared-nothing processes (almost shared-nothing). But blocking operations inside it can actually increase your tail latency. So, the rule of thumb is: do not block inside the NGINX eventloop.

A great source of blocking is disk I/O, for example, especially if you’re not using flash drives. If you’re using spinning drives, enable AIO with thread pools and aio_write, especially if you’re using buffering or caching. I really see no reason not to enable it. That way, all your read and write operations will be basically asynchronous.
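The principle behind thread-pool AIO is general: hand the blocking call to a worker thread so the event loop keeps serving other connections. The sketch below shows the same pattern with Python’s asyncio as an analogy; it is not how NGINX implements it, and the function names are made up for the example.

```python
import asyncio
from pathlib import Path


def blocking_read(path: str) -> bytes:
    """A plain blocking file read; on a slow disk this can stall for a while."""
    return Path(path).read_bytes()


async def read_without_blocking(path: str) -> bytes:
    """Run the blocking read in the default thread pool, off the event loop."""
    loop = asyncio.get_running_loop()
    return await loop.run_in_executor(None, blocking_read, path)


if __name__ == "__main__":
    import tempfile

    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(b"cached response body")
    print(asyncio.run(read_without_blocking(tmp.name)))
    Path(tmp.name).unlink()
```

While the worker thread waits on the disk, the loop is free to handle other events, which is what keeps tail latency down.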

You can also reduce blocking on log emission. If you notice that your NGINX blocks on log writes a lot, you can enable the buffer and gzip parameters of the access_log directive. That won’t fully eliminate blocking, though.

To fully eliminate it, you need to log to syslog in the first place. That way, your log emission will be fully integrated inside NGINX eventloop and it won’t block.

Opening files can also be a blocking operation, so enable open_file_cache, especially if you’re serving a ton of static files or you’re caching data.

30:15 Wrapping Up

It went faster than I thought it would. Again, that was a lot. To make it fit into the presentation format and into 40 minutes, I made a lot of compromises, and I skipped the most crucial parts: how you verify whether you need to apply a tuning, and how you verify that it worked. All these tricks with perf, eBPF, and stuff. If you want to know more about that, go check out the Dropbox Tech Blog. It has way more data and a ton of references, such as references to source code, references to research papers, and references to other blog posts about similar stuff.

Wrapping up: in the talk, we mostly went through single-box optimizations. Some of them improved efficiency and scalability and removed artificial choke points that your web server may have. Other ones balanced latency and throughput, and sometimes improved both of them. But it’s still a single-box optimization that affects the server.

In our experience, most performance comes from global optimizations. Good examples are load balancing, internal and external, within your network and around the edge, and inbound and outbound traffic engineering. These are problems that are on the edge of knowledge right now. Even huge companies such as Google and Facebook are just starting to tinker with this, especially outbound traffic engineering. Both companies just released papers on that. This is a very hot subject. It’s full of PID controllers, feedback loops, and oscillations.

If you’re up to these problems, or maybe anything else (after all, we also do lots of storage), if you’re up for the challenge: yes, we’re hiring; we’re hiring SWEs, SREs, and managers. Feel free to apply.

33:05 Q&A

And finally, Q&A. I’m not sure how much time I have, but let’s see how it goes. Also, I’ll be here today and tomorrow. This is my Twitter address (@SaveTheRbtz), so feel free to ask me any questions on Twitter. Email address: [email protected]

Q: What about QuickAssist?

A: QuickAssist sounds nice, but there are a couple of caveats. It implies that your software needs to know how to talk asynchronously to your offload engine.

Presentation graphs from QuickAssist look awesome, especially around RSA 2K. But again, in the case of SSL handshake offload, it depends on the OpenSSL library having support for asynchronous operations.

The only use case I see for QuickAssist right now is basically RSA 2K, because ECDSA is very fast on modern CPUs. The CPUs I was talking about, the AVX2-, BMI-, and ADX-capable ones such as Broadwell and EPYC, are really good at elliptic curve stuff.

That’s all. Thank you guys.

The post Optimizing Web Servers for High Throughput and Low Latency appeared first on NGINX.
