Updating the NGINX Application Platform

Today, our vision to simplify the journey to microservices takes a big step forward. We are announcing the upcoming availability of NGINX Plus R15, the next version of our flagship software, with exciting new features, along with the upcoming launches of NGINX Unit 1.0 and NGINX Controller R1.

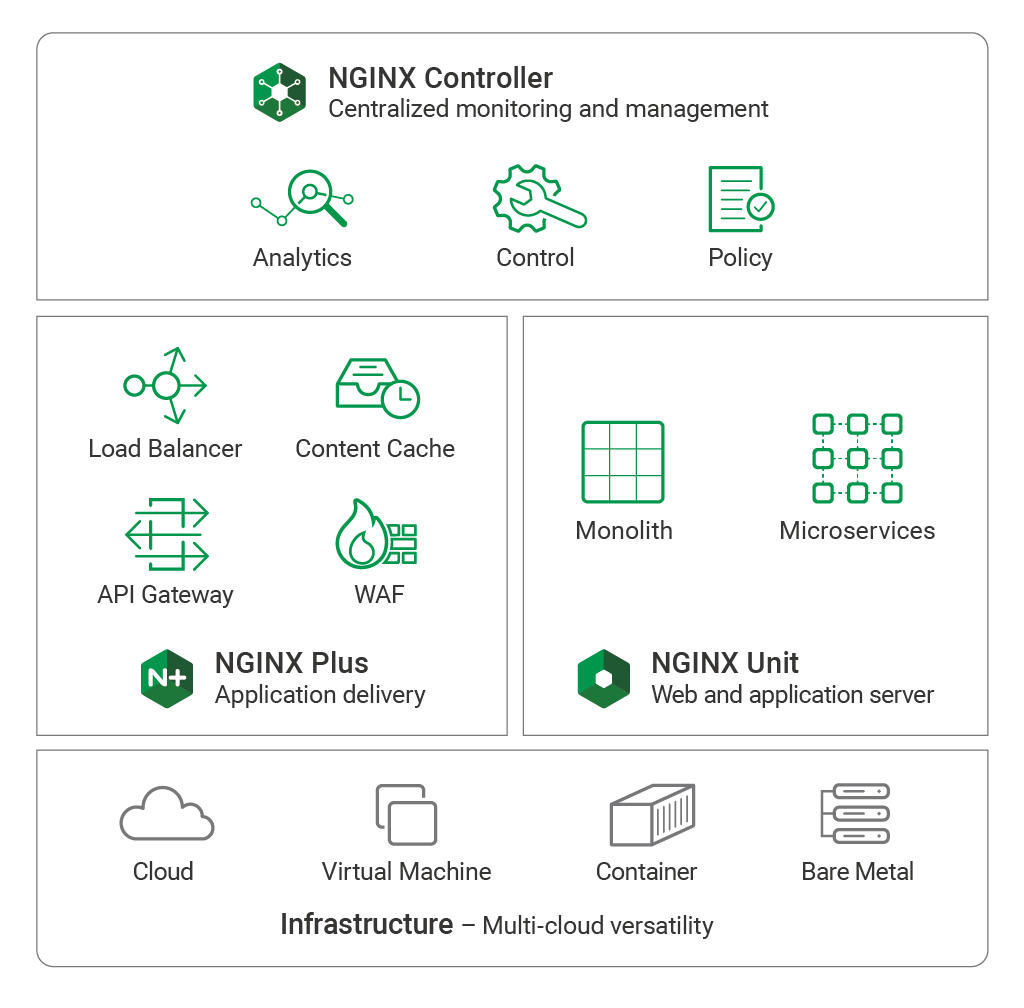

Combined, these launches advance the NGINX Application Platform, a product suite that includes a load balancer, content cache, API gateway, WAF, and application server – all with centralized monitoring and management for monolithic apps, for microservices, and for transitional apps.

The NGINX Application Platform has already gained tremendous traction. More than 11 million new domains install NGINX Open Source each month – four instances every second. Three million instances of NGINX Open Source are running in production microservices environments. One million instances are running as a Kubernetes Ingress controller.

There are about 250 NGINX customers running NGINX Plus in production microservices environments as a reverse proxy, load balancer, API gateway, and/or Kubernetes Ingress controller. We believe that NGINX has the largest footprint of software running in such environments.

In this blog post, I want to summarize the details of our various product releases. First, though, I’d like to describe our motivations for creating this platform, and how we believe it helps organizations greatly reduce cost and complexity.

To learn more about what’s new in the NGINX Application Platform, register for our live webinar on April 25, 2018 at 10:00 AM PDT.

Why Create the NGINX Application Platform?

Since our initial announcement of the NGINX Application Platform, we've talked to a number of companies with complex infrastructure and application stacks. These organizations have a mix of open source software, proprietary solutions, and custom code making up their load balancing and API gateway tier. This mix accumulated over years of patching old applications, developing new applications, and acquiring infrastructure.

This mix of technologies and software has become a nightmare for the companies to manage. The infrastructure has become so fragile that they fear making any changes. This is frustrating for operators and developers who are looking to deploy updates without added overhead, and who need to move with agility to stay competitive.

The situation only gets worse as companies start migrating to microservices. Microservices require additional tooling and complexity to work properly. For many companies, the microservices initiative and legacy infrastructure end up running as completely separate entities – what Gartner calls bimodal IT. This further complicates operations, creating two separate application stacks to manage and monitor.

When we talk to these companies, they’re surprised by how much of their infrastructure they can consolidate onto NGINX Plus.

Operational Complexity

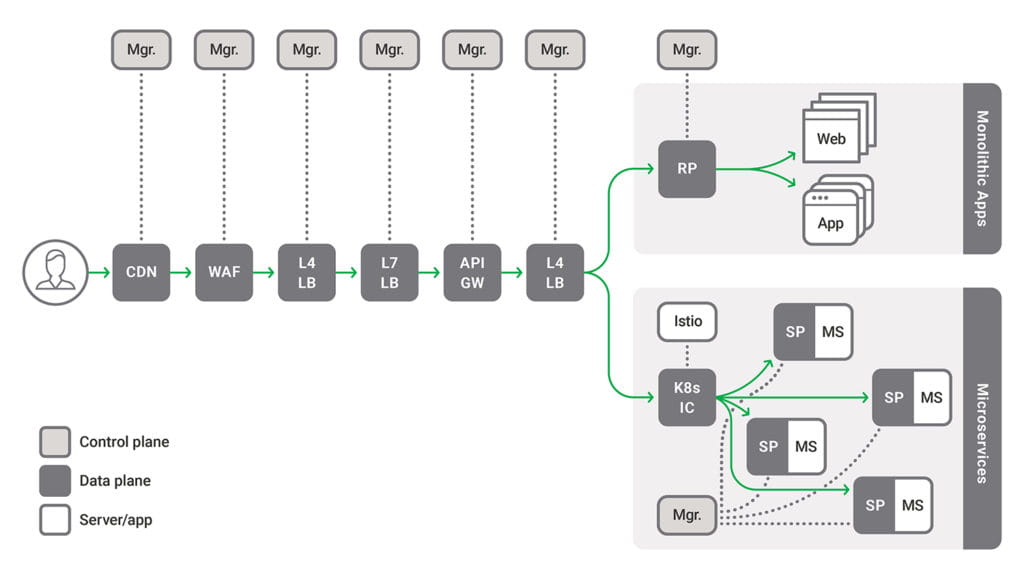

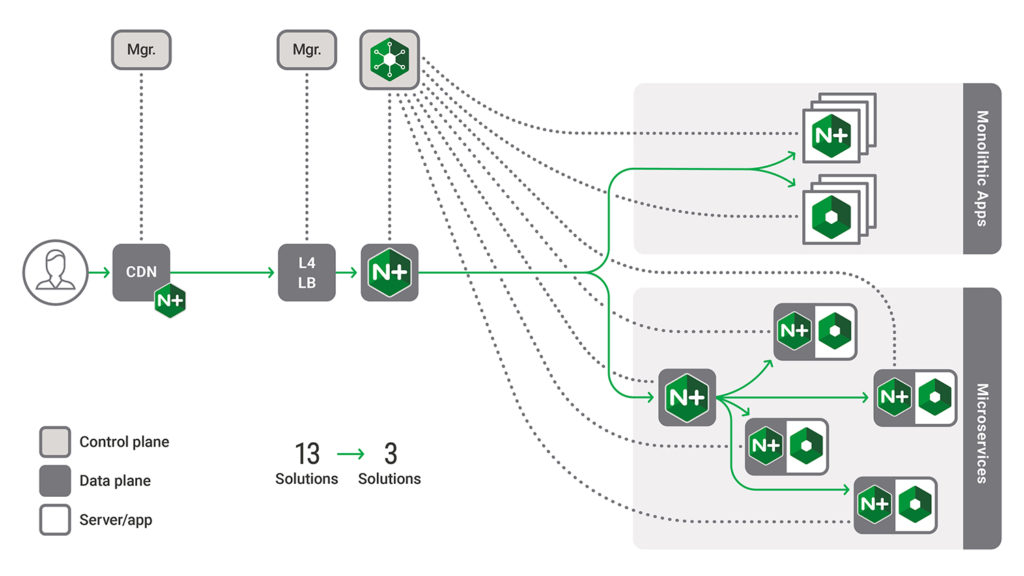

The diagram in this section represents what we see in a lot of organizations today. In fact, it's based on the architecture one NGINX customer had in place as it was beginning to add microservices to its traditional virtual machine‑hosted monolithic applications.

What are you seeing here? First, there is a CDN for static content and a WAF-as-a-Service for security, then a fast, simple Layer 4 load balancer and a more sophisticated Layer 7 load balancer. Next comes an API gateway, followed by another Layer 4 load balancer. As the figure shows, each of these pieces of software requires its own monitoring and management tools, or a complex, consolidated management solution.

The second Layer 4 load balancer – the one downstream from the API gateway – routes traffic to either legacy monolithic apps, with a reverse proxy server at the front end, or to one or more Kubernetes Ingress controller instances, each in front of a microservices app. The microservices apps include a separate sidecar proxy instance for each of the app’s many service instances.

Even if we focus only on the multiple load balancers, there's a lot of complexity. Often, these are a mix of hardware load balancers, cloud provider load balancers, and open source load balancers. IT typically manages the hardware load balancers, while application teams manage the cloud and open source load balancers, in many cases without IT knowing they exist. This leads to unnecessary complexity, separate operational silos, and mistakes, all cutting into performance and uptime.

When moving to microservices, we see organizations using multiple application servers, reverse proxies, and sidecar proxies to stitch together a service mesh. And when it comes to monitoring, no single solution provides the analytics, dashboards, and alerts needed to gauge the health of the application.

How the NGINX Application Platform Helps

The NGINX Application Platform helps reduce this complexity by consolidating common functions down to far fewer components – in many cases, down to a single piece of software.

In most cases there is no need to run multiple load balancers. NGINX Plus is a full‑featured HTTP, TCP, and UDP load balancer that can take the place of most hardware load balancers. In a public cloud environment, you can use NGINX Plus for the bulk of your load balancing and keep a basic Layer 4 load balancer, such as the AWS Network Load Balancer (NLB), only as the ingress point to a cloud deployment or data center.

Modern applications tend to be more complex than ever, with multiple application servers, different types of web servers, sidecar proxies (in a service mesh architecture), and the overhead needed to create and manage them all. We also noticed the lack of a simple, flexible application server that developers can use as a base for building applications, so we created NGINX Unit to be that base.

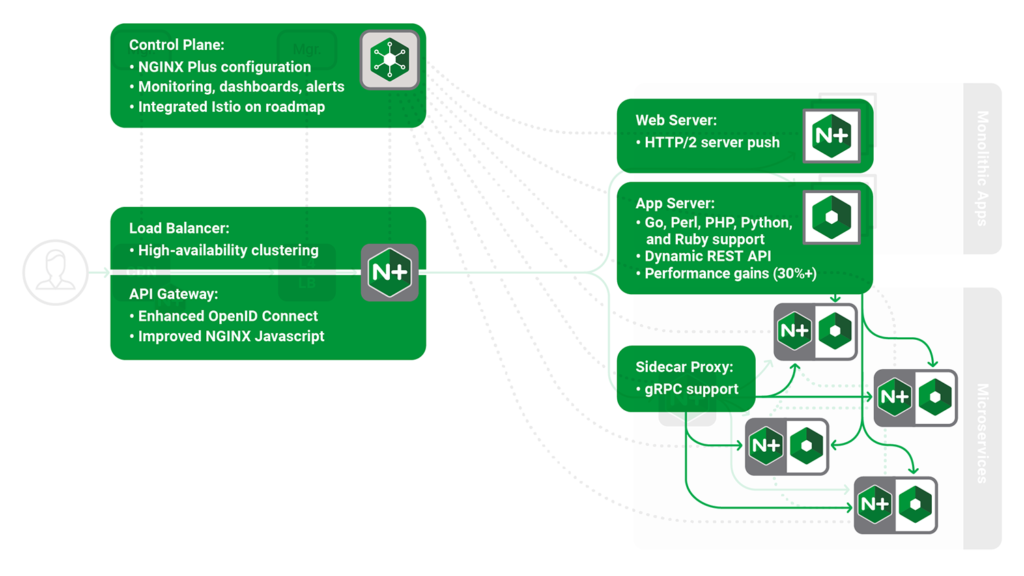

NGINX Unit further reduces complexity by supporting multiple languages, which enables organizations to consolidate their application servers. At launch, Go, Perl, PHP, Python, and Ruby are supported, with support for more languages coming soon.

With NGINX Controller, you can monitor and manage your entire set of NGINX Plus instances from a single point of control. Our long‑term roadmap is for NGINX Controller to manage and monitor all of our products, including NGINX Unit, helping businesses consolidate monitoring and management solutions.

What’s New in the NGINX Application Platform

We have several exciting product announcements coming up:

- NGINX Plus R15. A new release of NGINX Plus, including HTTP/2 server push, gRPC support, and new clustering capabilities, previewed in recent releases of NGINX Open Source.

- NGINX Unit 1.0. The first generally available (GA) version of the new NGINX dynamic web and application server – which, uniquely, supports multiple programming languages and language versions.

- NGINX Controller R1. The first GA release of our new monitoring and management software for NGINX Plus. NGINX Controller also serves as monitoring‑only software for NGINX Open Source and NGINX Unit.

These announcements will serve to empower current users and extend the capabilities of the overarching NGINX Application Platform.

Using the same diagram as above, here is a summary of all the features we’re delivering to simplify application infrastructures. Below we break down each product and the associated new features in our upcoming releases.

NGINX Plus R15

On April 10 we will release the fifteenth version of NGINX Plus, our flagship product. Since its initial release in 2013, NGINX Plus has grown tremendously, in both its feature set and its commercial appeal. There are now more than 1,500 NGINX Plus customers.

NGINX Plus R15 will include enhancements that address load balancing, API gateway, and service mesh use cases:

- Support for gRPC. NGINX Plus R15 can proxy gRPC traffic; gRPC support is one of the most frequently requested features from our community. gRPC is used for both client‑service and service‑service communications. The Istio service mesh architecture uses gRPC exclusively.

- HTTP/2 server push. Satisfying another frequent request from our community, NGINX Plus R15 can push content to the browser in anticipation of an actual request for it, reducing round trips and improving performance.

- OpenID Connect Single Sign‑On (SSO). You can use NGINX Plus R15 to integrate with Okta, OneLogin, and other identity providers to enable SSO for both legacy and modern applications. This extends the capabilities of NGINX Plus as an API gateway.

- Support for making subrequests with the NGINX JavaScript module. NGINX Plus R15 can make multiple subrequests in response to a single client request. This is helpful in API gateway use cases, giving you the flexibility to modify and consolidate API calls using JavaScript.

- Clustering support and state sharing. With NGINX Plus R15, you can use Sticky Learn session persistence in a cluster. The next few releases of NGINX Plus will include additional clustering features. These changes will help make NGINX Plus more reliable and more manageable in large deployments.
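Sticky Learn persistence works, in outline, like this: the load balancer watches upstream responses for a session identifier (typically a cookie the application sets), records which server issued it, and routes later requests carrying that identifier back to the same server. Clustering support means the learned table can be shared across NGINX Plus instances. The Python below is a minimal conceptual model under those assumptions, not NGINX Plus code; the class name, the cookie name `sessionid`, and the use of a shared dict to stand in for cluster state sharing are all hypothetical.

```python
import itertools
from typing import Dict, Iterable, Optional


class StickyLearnBalancer:
    """Hypothetical toy model of 'sticky learn' session persistence (not NGINX code)."""

    def __init__(self, servers: Iterable[str], shared_sessions: Optional[Dict[str, str]] = None):
        self._servers = list(servers)
        self._rr = itertools.cycle(self._servers)  # round-robin for clients without a session
        # Session table: session ID -> server. Passing the same dict to several
        # instances stands in for the state sharing a cluster would provide.
        self.sessions = shared_sessions if shared_sessions is not None else {}

    def choose(self, session_id: Optional[str]) -> str:
        """Pick an upstream: a learned mapping wins, otherwise fall back to round-robin."""
        if session_id is not None and session_id in self.sessions:
            return self.sessions[session_id]
        return next(self._rr)

    def learn(self, server: str, set_cookie: Dict[str, str], cookie_name: str = "sessionid") -> None:
        """Record the session ID that `server` issued in its response, if it set one."""
        session_id = set_cookie.get(cookie_name)
        if session_id is not None:
            self.sessions[session_id] = server


# Two hypothetical "cluster members" sharing one session table.
shared: Dict[str, str] = {}
lb_a = StickyLearnBalancer(["app1", "app2", "app3"], shared)
lb_b = StickyLearnBalancer(["app1", "app2", "app3"], shared)

first = lb_a.choose(None)                   # new client: round-robin picks app1
lb_a.learn(first, {"sessionid": "abc123"})  # upstream response set a session cookie
print(lb_b.choose("abc123"))                # the other member routes to the same server
```

The mapping is learned from the server's response rather than configured in advance, so the balancer needs no knowledge of how the application generates its session IDs.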

NGINX Unit 1.0

On April 12 we will release NGINX Unit 1.0. NGINX Unit is our new, dynamic web and application server. It’s an open source initiative led by Igor Sysoev, the original author of NGINX Open Source. You can expect the same level of quality and performance from Unit that you get with NGINX.

With Unit, we aim to reduce complexity by consolidating multiple functions into a single, compact binary:

- Multiple language support. A single application server supports multiple languages, even within one application. Multiple versions of the same language, as well as different languages, can run simultaneously on the same server. At launch Unit will support Go, Perl, PHP, Python, and Ruby. Support for more languages, including JavaScript and Java, will follow in future releases.

- Dynamic configuration. NGINX Unit is managed through a REST API, and all configuration changes are applied directly in memory. NGINX Unit is fully dynamic and can be reconfigured without service interruption, meeting the needs of modern web stacks.

- Static and dynamic content. By the end of Q2 2018 we will release support for serving static content in Unit, so you will not need a separate web server, as you do with other application servers.
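Because Unit is configured through a REST API, a reconfiguration is just an HTTP request. The Python sketch below builds a configuration with one listener and one Python application and PUTs it to Unit's `/config` endpoint over the control socket. Several assumptions are worth flagging: the control socket path `/var/run/control.unit.sock` varies by installation; the JSON layout (a `listeners` object whose entries name an `application`) follows early Unit releases, and later versions changed the listener schema; the application name, port, directory, and module are hypothetical placeholders.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str):
        super().__init__("localhost")  # host only fills the Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.connect(self.socket_path)
        self.sock = sock


def build_config(app_name: str, listen: str, app_dir: str, module: str, processes: int = 2) -> dict:
    """Return a Unit-style config (early-release schema, assumed): one listener -> one WSGI app."""
    return {
        "listeners": {listen: {"application": app_name}},
        "applications": {
            app_name: {
                "type": "python",
                "processes": processes,
                "path": app_dir,
                "module": module,
            }
        },
    }


def put_config(config: dict, socket_path: str = "/var/run/control.unit.sock") -> str:
    """PUT the whole configuration; socket path is an assumption, adjust for your install."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("PUT", "/config", body=json.dumps(config),
                     headers={"Content-Type": "application/json"})
        return conn.getresponse().read().decode()
    finally:
        conn.close()


if __name__ == "__main__":
    # Hypothetical app name, port, directory, and module.
    cfg = build_config("blog", "*:8300", "/www/blog", "wsgi")
    print(json.dumps(cfg, indent=2))
    # put_config(cfg)  # run only on a host where Unit's control socket exists
```

Sending the full document to `/config` replaces the whole configuration in one step; Unit's API also accepts requests against sub-paths of `/config`, which is handy for changing a single listener or application.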

NGINX Controller R1

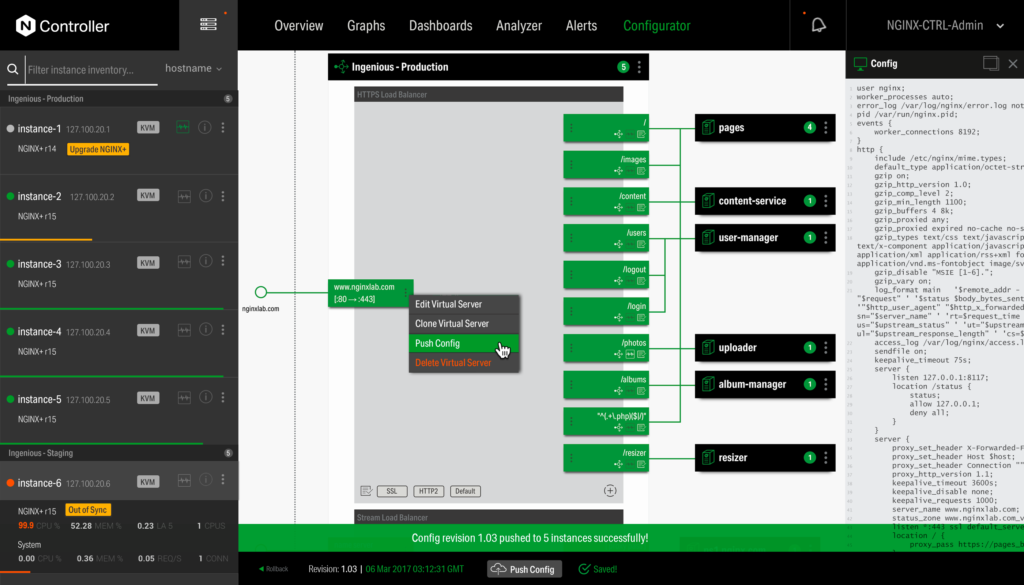

In Q2 we will release NGINX Controller. Our vision is for NGINX Controller to become a smart node within an organization’s infrastructure, absorbing information and carrying out policy decisions in real time, with little human intervention.

Our long‑term roadmap is for NGINX Controller to manage and monitor all of our products, including NGINX Unit and NGINX Open Source. At launch, NGINX Controller will provide centralized monitoring and management for NGINX Plus, with the following features:

- Centralized management. NGINX Controller can create virtual load balancers using a simple, graphical wizard. This simplifies the creation of new load balancers and reduces the need for specialized configuration management skills.

- Centralized monitoring. NGINX Controller brings together monitoring for NGINX Open Source, NGINX Plus, and NGINX Unit, complementing its centralized management of NGINX Plus. A single point of control simplifies and scales the management of large deployments, including clusters.

- Automation with full API support. NGINX Controller exposes a REST API that developers can use to automate NGINX Controller workflows, providing a DevOps‑friendly way to manage NGINX Plus.

Conclusion

Many of the customers we talk to have seen their infrastructure get more and more complex over the years, due to the need to support new initiatives alongside existing applications. They’ve deployed dozens of point solutions, with each new tool adding cost and overhead to their application architecture. These organizations need a new approach to their infrastructures, allowing them to steadily transform their legacy apps as they evolve to digital services based on cloud and microservices architectures.

We created the NGINX Application Platform to help our customers cut the cost of delivering legacy applications and reduce time-to-market for new microservices applications and monolith-to-microservices transitions. The NGINX Application Platform includes NGINX Plus for application delivery and load balancing, the NGINX WAF for security, and NGINX Unit to run application code, all monitored and managed by NGINX Controller. As a suite of complementary technologies, the NGINX Application Platform helps enterprises that are journeying to microservices develop and deliver ever‑improving applications.

With this latest round of new products and features, the NGINX Application Platform helps enterprises to further simplify application architectures. Stay tuned as we provide product‑specific deep dives in future blogs as each software product is released.

The post Updating the NGINX Application Platform appeared first on NGINX.
